Related Research Articles

In software engineering, multitier architecture is a client–server architecture in which presentation, application processing and data management functions are physically separated. The most widespread use of multitier architecture is the three-tier architecture.

A Beowulf cluster is a computer cluster of what are normally identical, commodity-grade computers networked into a small local area network with libraries and programs installed which allow processing to be shared among them. The result is a high-performance parallel computing cluster from inexpensive personal computer hardware.

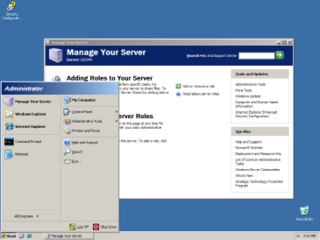

Windows Server 2003, codenamed "Whistler Server", is the second version of the Windows Server operating system produced by Microsoft. It is part of the Windows NT family of operating systems and was released to manufacturing on March 28, 2003 and generally available on April 24, 2003. Windows Server 2003 is the successor to the Server editions of Windows 2000 and the predecessor to Windows Server 2008. An updated version, Windows Server 2003 R2, was released to manufacturing on December 6, 2005. Windows Server 2003 is based on Windows XP.

Microsoft Exchange Server is a mail server and calendaring server developed by Microsoft. It runs exclusively on Windows Server operating systems.

Failover is switching to a redundant or standby computer server, system, hardware component or network upon the failure or abnormal termination of the previously active application, server, system, hardware component, or network in a computer network. Failover and switchover are essentially the same operation, except that failover is automatic and usually operates without warning, while switchover requires human intervention.

Multi-master replication is a method of database replication which allows data to be stored by a group of computers, and updated by any member of the group. All members are responsive to client data queries. The multi-master replication system is responsible for propagating the data modifications made by each member to the rest of the group and resolving any conflicts that might arise between concurrent changes made by different members.

Microsoft Cluster Server (MSCS) is a computer program that allows server computers to work together as a computer cluster, to provide failover and increased availability of applications, or parallel calculating power in case of high-performance computing (HPC) clusters.

High-availability clusters are groups of computers that support server applications that can be reliably utilized with a minimum amount of down-time. They operate by using high availability software to harness redundant computers in groups or clusters that provide continued service when system components fail. Without clustering, if a server running a particular application crashes, the application will be unavailable until the crashed server is fixed. HA clustering remedies this situation by detecting hardware/software faults, and immediately restarting the application on another system without requiring administrative intervention, a process known as failover. As part of this process, clustering software may configure the node before starting the application on it. For example, appropriate file systems may need to be imported and mounted, network hardware may have to be configured, and some supporting applications may need to be running as well.

The HPE Storage (formerly HP StorageWorks) is a portfolio of HPE storage products, includes online storage, nearline storage, storage networking, archiving, de-duplication, and storage software. HP and their predecessor, the Compaq Corporation, has developed some of industry-first storage technologies to simplify network storage. HP is a proponent of converged storage, a storage architecture that combines storage and compute into a single entity.

Apache Hadoop is a collection of open-source software utilities that facilitates using a network of many computers to solve problems involving massive amounts of data and computation. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework.

The Red Hat Cluster includes software to create a high availability and load balancing cluster. Both can be used on the same system although this use case is unlikely. Both products, the High Availability Add-On and Load Balancer Add-On, are based on open-source community projects. Red Hat Cluster developers contribute code upstream for the community. Computational clustering is not part of cluster suite, but instead provided by Red Hat MRG.

OpenSAF is an open-source service-orchestration system for automating computer application deployment, scaling, and management. OpenSAF is consistent with, and expands upon, Service Availability Forum (SAF) and SCOPE Alliance standards.

The Base One Foundation Component Library (BFC) is a rapid application development toolkit for building secure, fault-tolerant, database applications on Windows and ASP.NET. In conjunction with Microsoft's Visual Studio integrated development environment, BFC provides a general-purpose web application framework for working with databases from Microsoft, Oracle, IBM, Sybase, and MySQL, running under Windows, Linux/Unix, or IBM iSeries or z/OS. BFC includes facilities for distributed computing, batch processing, queuing, and database command scripting, and these run under Windows or Linux with Wine.

The following tables compare general and technical information for notable computer cluster software. This software can be grossly separated in four categories: Job scheduler, nodes management, nodes installation and integrated stack.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

InMage was a computer software company based in the US and India. It marketed a product line called Scout that used continuous data protection (CDP) for backup and replication. Scout consisted of two product lines: the host-offload line, which uses a software agent on the protected servers, and the fabric line, which uses an agent on the Fibre Channel switch fabric. The software protects at the volume or block level, tracking all write changes. It allows for local or remote protection policies. The first version of the product was released in 2002.

Eucalyptus is a paid and open-source computer software for building Amazon Web Services (AWS)-compatible private and hybrid cloud computing environments, originally developed by the company Eucalyptus Systems. Eucalyptus is an acronym for Elastic Utility Computing Architecture for Linking Your Programs To Useful Systems. Eucalyptus enables pooling compute, storage, and network resources that can be dynamically scaled up or down as application workloads change. Mårten Mickos was the CEO of Eucalyptus. In September 2014, Eucalyptus was acquired by Hewlett-Packard and then maintained by DXC Technology. After DXC stopped developing the product in late 2017, AppScale Systems forked the code and started supporting Eucalyptus customers.

The following is a comparison of cloud-computing software and providers.

In computer science, a heartbeat is a periodic signal generated by hardware or software to indicate normal operation or to synchronize other parts of a computer system. Heartbeat mechanism is one of the common techniques in mission critical systems for providing high availability and fault tolerance of network services by detecting the network or systems failures of nodes or daemons which belongs to a network cluster—administered by a master server—for the purpose of automatic adaptation and rebalancing of the system by using the remaining redundant nodes on the cluster to take over the load of failed nodes for providing constant services. Usually a heartbeat is sent between machines at a regular interval in the order of seconds; a heartbeat message. If the endpoint does not receive a heartbeat for a time—usually a few heartbeat intervals—the machine that should have sent the heartbeat is assumed to have failed. Heartbeat messages are typically sent non-stop on a periodic or recurring basis from the originator's start-up until the originator's shutdown. When the destination identifies a lack of heartbeat messages during an anticipated arrival period, the destination may determine that the originator has failed, shutdown, or is generally no longer available.

Docker is a set of platform as a service (PaaS) products that use OS-level virtualization to deliver software in packages called containers. The service has both free and premium tiers. The software that hosts the containers is called Docker Engine. It was first released in 2013 and is developed by Docker, Inc.