In mathematics, a continuous function is a function that does not have any abrupt changes in value, known as discontinuities. More precisely, a function is continuous if arbitrarily small changes in its output can be assured by restricting to sufficiently small changes in its input. A function that is not continuous is said to be discontinuous. Until the 19th century, mathematicians largely relied on intuitive notions of continuity. In the 19th century, attempts such as the epsilon–delta definition were made to formalize it.
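The "small changes in output from small changes in input" condition is usually written with quantifiers. A sketch for the common special case of a real-valued function on a subset of the real line follows; the symbols $f$, $D$, $c$, $\varepsilon$ and $\delta$ are notation assumed here, not taken from the text above. The function $f\colon D \to \mathbb{R}$ is continuous at a point $c \in D$ if

$$
\forall \varepsilon > 0 \;\; \exists \delta > 0 \;\; \forall x \in D : \quad |x - c| < \delta \implies |f(x) - f(c)| < \varepsilon .
$$

Here $\varepsilon$ bounds the allowed change in output and $\delta$ is the corresponding restriction on the input. The function is continuous if this holds at every $c \in D$.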

Discrete mathematics is the study of mathematical structures that are fundamentally discrete rather than continuous. In contrast to real numbers that have the property of varying "smoothly", the objects studied in discrete mathematics – such as integers, graphs, and statements in logic – do not vary smoothly in this way, but have distinct, separated values. Discrete mathematics therefore excludes topics in "continuous mathematics" such as calculus or Euclidean geometry. Discrete objects can often be enumerated by integers. More formally, discrete mathematics has been characterized as the branch of mathematics dealing with countable sets. However, there is no exact definition of the term "discrete mathematics." Indeed, discrete mathematics is described less by what is included than by what is excluded: continuously varying quantities and related notions.

A mathematical model is a description of a system using mathematical concepts and language. The process of developing a mathematical model is termed mathematical modeling. Mathematical models are used in the natural sciences and engineering disciplines, as well as in non-physical systems such as the social sciences. Mathematical models are also used in music, linguistics and philosophy.

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of these outcomes is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes, which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion. Although it is not possible to perfectly predict random events, much can be said about their behaviour. Two major results in probability theory describing such behaviour are the law of large numbers and the central limit theorem.
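The law of large numbers can be illustrated with a small simulation. The sketch below assumes a fair six-sided die, whose expected value is 3.5, as the random experiment. The function name `sample_mean_of_die_rolls` and the fixed seed are illustrative choices, not part of any standard API.

```python
import random


def sample_mean_of_die_rolls(n_rolls, seed=0):
    """Return the average of n_rolls simulated fair six-sided die rolls.

    The seed is fixed only so that repeated runs give the same numbers.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_rolls):
        total += rng.randint(1, 6)  # each face 1..6 is equally likely
    return total / n_rolls


if __name__ == "__main__":
    # The sample mean should drift towards the expected value 3.5
    # as the number of rolls grows.
    for n in (10, 1_000, 100_000):
        print(n, sample_mean_of_die_rolls(n))
```

Individual rolls remain unpredictable, but the average over many rolls settles near 3.5, which is the kind of statement about random behaviour that the law of large numbers makes precise.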

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events.

In topology and related branches of mathematics, a topological space may be defined as a set of points, along with a set of neighbourhoods for each point, satisfying a set of axioms relating points and neighbourhoods. The definition of a topological space relies only upon set theory and is the most general notion of a mathematical space that allows for the definition of concepts such as continuity, connectedness, and convergence. Other spaces, such as manifolds and metric spaces, are specializations of topological spaces with extra structures or constraints. Being so general, topological spaces are a central unifying notion and appear in virtually every branch of modern mathematics. The branch of mathematics that studies topological spaces in their own right is called point-set topology or general topology.

In topology and related branches of mathematics, Tychonoff spaces and completely regular spaces are kinds of topological spaces. These conditions are examples of separation axioms.

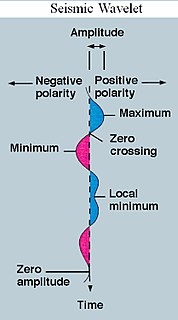

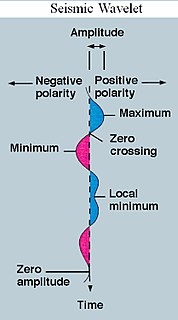

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases, and then decreases back to zero. It can typically be visualized as a "brief oscillation" like one recorded by a seismograph or heart monitor. Generally, wavelets are intentionally crafted to have specific properties that make them useful for signal processing.

Mathematical optimization or mathematical programming is the selection of a best element from some set of available alternatives. Optimization problems arise in all quantitative disciplines, from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

In topology and related areas of mathematics, the set of all possible topologies on a given set forms a partially ordered set. This order relation can be used for comparison of the topologies.
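For a finite set, the comparison of topologies can be checked directly. The sketch below assumes the open-set formulation of a topology (a family of subsets containing the empty set and the whole set, closed under unions and intersections), which is equivalent to the neighbourhood formulation. The helper names `is_topology` and `is_coarser` are hypothetical.

```python
def is_topology(points, open_sets):
    """Check the open-set axioms for a family of subsets of a finite set."""
    whole = frozenset(points)
    opens = {frozenset(s) for s in open_sets}
    if frozenset() not in opens or whole not in opens:
        return False
    if any(not s <= whole for s in opens):
        return False
    # On a finite family, closure under pairwise unions and
    # intersections implies closure under all unions and intersections.
    return all((a | b) in opens and (a & b) in opens
               for a in opens for b in opens)


def is_coarser(t1, t2):
    """True if every open set of t1 is also an open set of t2."""
    return {frozenset(s) for s in t1} <= {frozenset(s) for s in t2}


X = {1, 2, 3}
trivial = [set(), X]
only_one = [set(), {1}, X]
only_two = [set(), {2}, X]
```

With these definitions, `is_coarser(trivial, only_one)` holds, while neither `is_coarser(only_one, only_two)` nor `is_coarser(only_two, only_one)` does. Some pairs of topologies are therefore incomparable, which is why the order is partial rather than total.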

A time series is a series of data points indexed in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average.

In mathematics and numerical analysis, the Ricker wavelet is the negative normalized second derivative of a Gaussian function.
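The Ricker wavelet (also called the Mexican hat wavelet) is commonly written as ψ(t) = 2 / (√(3σ) π^(1/4)) · (1 − (t/σ)²) · exp(−t² / (2σ²)). The sketch below evaluates that expression. The function name `ricker` and the default width `sigma=1.0` are illustrative assumptions.

```python
import math


def ricker(t, sigma=1.0):
    """Evaluate the Ricker (Mexican hat) wavelet at time t.

    sigma sets the width of the wavelet; the prefactor normalizes
    the wavelet to unit energy (integral of psi**2 equals 1).
    """
    prefactor = 2.0 / (math.sqrt(3.0 * sigma) * math.pi ** 0.25)
    u = (t / sigma) ** 2
    return prefactor * (1.0 - u) * math.exp(-u / 2.0)


if __name__ == "__main__":
    # Sample the wavelet on a small grid: a positive central peak,
    # zero crossings at t = -sigma and t = +sigma, negative side lobes.
    for t in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(t, ricker(t))
```

The shape matches the "brief oscillation" picture of a wavelet: the value peaks at t = 0, crosses zero at t = ±σ, dips below zero, and decays back towards zero.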

Mathematical statistics is the application of probability theory, a branch of mathematics, to statistics, as opposed to techniques for collecting statistical data. Specific mathematical techniques which are used for this include mathematical analysis, linear algebra, stochastic analysis, differential equations, and measure theory.

In statistics, isotonic regression or monotonic regression is the technique of fitting a free-form line to a sequence of observations such that the fitted line is non-decreasing everywhere, and lies as close to the observations as possible.
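One standard way to compute such a fit is the pool adjacent violators algorithm. A minimal sketch follows, assuming unweighted observations and a least-squares notion of "close". The function name `isotonic_regression` is an illustrative choice.

```python
def isotonic_regression(y):
    """Least-squares non-decreasing fit to y (pool adjacent violators).

    Neighbouring values that violate the ordering are pooled into a
    block and replaced by the block mean.
    """
    blocks = []  # each block is [sum of values, number of values]
    for value in y:
        blocks.append([float(value), 1])
        # Merge backwards while the previous block's mean exceeds
        # the mean of the newest block.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)  # every point in a block gets the block mean
    return fitted
```

For the observations `[1, 3, 2, 4]`, the out-of-order pair 3, 2 is pooled to its mean, giving the fit `[1.0, 2.5, 2.5, 4.0]`.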

In the mathematical fields of geometry and topology, a coarse structure on a set X is a collection of subsets of the Cartesian product X × X with certain properties which allow the large-scale structure of metric spaces and topological spaces to be defined.

The cerebellar model arithmetic computer (CMAC) is a type of neural network based on a model of the mammalian cerebellum. It is also known as the cerebellar model articulation controller. It is a type of associative memory.

In mathematics, Neumann–Neumann methods are domain decomposition preconditioners, so named because they solve a Neumann problem on each subdomain on both sides of the interface between the subdomains. As with all domain decomposition methods, Neumann–Neumann methods require the solution of a coarse problem to provide global communication, so that the number of iterations does not grow with the number of subdomains. The balancing domain decomposition is a Neumann–Neumann method with a special kind of coarse problem.

In numerical analysis, a coarse problem is an auxiliary system of equations used in an iterative method for the solution of a given larger system of equations. A coarse problem is a version of the same problem at a lower resolution, retaining its essential characteristics, but with fewer variables. The purpose of the coarse problem is to propagate information throughout the whole problem globally.

In mathematical dynamics, discrete time and continuous time are two alternative frameworks within which to model variables that evolve over time.
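The two frameworks can be compared on a single toy model. The sketch below assumes exponential decay, which is an illustrative choice and not taken from the text. In continuous time the variable follows dx/dt = −k·x, with solution x(t) = x0·exp(−k·t). In discrete time it is updated in steps of length `dt` by x ← x + dt·(−k·x), which is a forward Euler step. All names are hypothetical.

```python
import math


def continuous_decay(x0, k, t):
    """Closed-form solution of dx/dt = -k * x at time t."""
    return x0 * math.exp(-k * t)


def discrete_decay(x0, k, dt, steps):
    """Discrete-time path x[n+1] = x[n] + dt * (-k * x[n])."""
    x = x0
    path = [x]
    for _ in range(steps):
        x = x + dt * (-k * x)
        path.append(x)
    return path


if __name__ == "__main__":
    # With a small step, the discrete-time value at t = 1 should be
    # close to the continuous-time value exp(-1).
    path = discrete_decay(x0=1.0, k=1.0, dt=0.001, steps=1000)
    print(path[-1], continuous_decay(1.0, 1.0, 1.0))
```

The discrete-time variable is defined only at the step times 0, dt, 2·dt, and so on, whereas the continuous-time variable has a value at every real t. As `dt` shrinks, the discrete path approaches the continuous one.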

Equation-free modeling is a method for multiscale computation and computer-aided analysis. It is designed for a class of complicated systems in which one observes evolution at a macroscopic, coarse scale of interest, while accurate models are only given at a finely detailed, microscopic level of description. The framework makes it possible to perform macroscopic computational tasks using only appropriately initialized microscopic simulation on short time and small length scales. The methodology eliminates the need to derive explicit macroscopic evolution equations when these equations conceptually exist but are not available in closed form; hence the term equation-free.