Long-term memory (LTM) is the stage of the Atkinson–Shiffrin memory model in which information is held indefinitely. It is defined in contrast to short-term and working memory, which persist for only about 18 to 30 seconds. Long-term memory is commonly divided into explicit (declarative) memory, which includes episodic, semantic, and autobiographical memory, and implicit memory.

Short-term memory is the capacity for holding, but not manipulating, a small amount of information in mind in an active, readily available state for a short period of time. For example, short-term memory can be used to remember a phone number that has just been recited. The duration of short-term memory is believed to be on the order of seconds. The most commonly cited capacity is seven plus or minus two items, from George Miller's 1956 paper "The Magical Number Seven, Plus or Minus Two", although Miller himself stated that the figure was intended as "little more than a joke", and Cowan (2001) provided evidence that a more realistic figure is 4±1 units. In contrast, long-term memory can hold information indefinitely.

Cognition is "the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses". It encompasses many aspects of intellectual functions and processes, such as attention, the formation of knowledge, memory and working memory, judgment and evaluation, reasoning and "computation", problem solving and decision making, and the comprehension and production of language. Cognitive processes use existing knowledge and generate new knowledge.

The Atkinson–Shiffrin model is a model of memory proposed in 1968 by Richard Atkinson and Richard Shiffrin. The model asserts that human memory has three separate components:

- a sensory register, where sensory information enters memory,

- a short-term store, also called working memory or short-term memory, which receives and holds input from both the sensory register and the long-term store, and

- a long-term store, where information which has been rehearsed in the short-term store is held indefinitely.
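The flow of information between the three stores can be illustrated with a toy simulation. This is a minimal sketch, not Atkinson and Shiffrin's own formulation: the class `ModalMemory`, the short-term capacity of four items (borrowed from Cowan's 4±1 estimate), the displacement of the oldest item when the store is full, and the three-rehearsal transfer rule are all assumptions made for illustration.

```python
from collections import deque


class ModalMemory:
    """Toy three-store memory; every parameter here is an illustrative assumption."""

    def __init__(self, stm_capacity=4, rehearsals_to_transfer=3):
        self.sensory_register = []                     # unattended sensory input
        self.short_term = deque(maxlen=stm_capacity)   # oldest item is displaced when full
        self.long_term = set()                         # rehearsed items, held indefinitely
        self.rehearsal_counts = {}
        self.rehearsals_to_transfer = rehearsals_to_transfer

    def perceive(self, item):
        """Sensory information enters the sensory register."""
        self.sensory_register.append(item)

    def attend(self, item):
        """Attended items move from the sensory register to the short-term store."""
        if item in self.sensory_register:
            self.sensory_register.remove(item)
            if item not in self.short_term:
                self.short_term.append(item)

    def rehearse(self, item):
        """Rehearse a short-term item; enough rehearsals copy it to the long-term store."""
        if item not in self.short_term:
            return  # displaced or never attended: nothing to rehearse
        self.rehearsal_counts[item] = self.rehearsal_counts.get(item, 0) + 1
        if self.rehearsal_counts[item] >= self.rehearsals_to_transfer:
            self.long_term.add(item)

    def retrieve(self, item):
        """The short-term store also receives input retrieved from the long-term store."""
        if item not in self.long_term:
            return False
        if item not in self.short_term:
            self.short_term.append(item)
        return True


# Usage: five attended items overflow a four-item short-term store.
memory = ModalMemory()
for letter in "ABCDE":
    memory.perceive(letter)
    memory.attend(letter)
for _ in range(3):
    memory.rehearse("B")
memory.rehearse("A")  # no effect: "A" was displaced by "E"
print(list(memory.short_term), memory.long_term)
```

The bounded `deque` stands in for the limited-capacity short-term store; whether forgetting there is best described as displacement or decay is a modeling choice, not something this sketch settles.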

In cognitive psychology and neuroscience, spatial memory is the part of memory responsible for recording information about one's environment and spatial orientation. For example, a person's spatial memory is required to navigate around a familiar city, just as a rat's spatial memory is needed to learn the location of food at the end of a maze. It is often argued that in both humans and animals, spatial memories are summarized as a cognitive map. Spatial memory has representations within working memory, short-term memory, and long-term memory. Research indicates that specific areas of the brain, notably the hippocampus, are associated with spatial memory. Many methods are used to measure spatial memory in children, adults, and animals.

The spacing effect is the phenomenon whereby learning is greater when studying is spread out over time, as opposed to studying the same amount of content in a single session. That is, spaced presentation is more effective than massed presentation. In practice, this effect suggests that "cramming" the night before an exam is not likely to be as effective as studying at intervals over a longer time frame. However, the benefit of spaced presentation does not appear at short retention intervals, at which massed presentation tends to lead to better memory performance. The spacing effect is a desirable difficulty: it challenges the learner but leads to better learning in the long run.
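The contrast between massed and spaced presentation can be made concrete by building two study schedules with the same total study time. In this sketch the function names, the two-day gap between spaced sessions, and the choice to end both schedules on the day before the exam are assumptions; the spacing effect itself does not prescribe a particular gap.

```python
from datetime import date, timedelta


def massed_schedule(exam_day, sessions, minutes_per_session):
    """Cramming: every session falls on the day before the exam."""
    cram_day = exam_day - timedelta(days=1)
    return [(cram_day, minutes_per_session) for _ in range(sessions)]


def spaced_schedule(exam_day, sessions, minutes_per_session, gap_days=2):
    """Same sessions, separated by `gap_days` (hypothetical), the last on the day before the exam."""
    last_day = exam_day - timedelta(days=1)
    return [
        (last_day - timedelta(days=gap_days * (sessions - 1 - i)), minutes_per_session)
        for i in range(sessions)
    ]


exam = date(2024, 6, 14)
massed = massed_schedule(exam, sessions=4, minutes_per_session=30)
spaced = spaced_schedule(exam, sessions=4, minutes_per_session=30)
for day, minutes in spaced:
    print(day.isoformat(), minutes)
# 2024-06-07, 2024-06-09, 2024-06-11, 2024-06-13 (30 minutes each)
```

Both schedules contain 120 minutes of study; only the distribution over time differs, which is the variable the spacing effect concerns.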

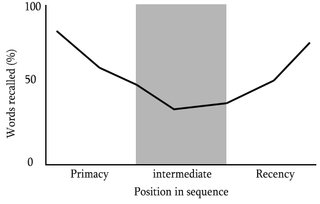

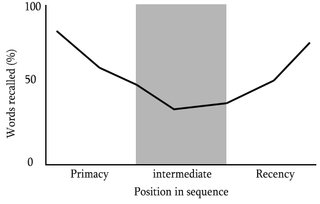

The serial-position effect is the tendency of a person to recall the first and last items in a series best, and the middle items worst. The term was coined by Hermann Ebbinghaus through studies he performed on himself, and refers to the finding that recall accuracy varies as a function of an item's position within a study list. When asked to recall a list of items in any order (free recall), people tend to begin recall with the end of the list, recalling those items best (the recency effect). Among earlier list items, the first few items are recalled more frequently than the middle items (the primacy effect).
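Serial-position data are usually summarized as a curve of recall probability against list position. The helper below computes that curve from free-recall trials; the function name and data format are assumptions, and the example trials are hypothetical values chosen only to show the U shape, not experimental results.

```python
def serial_position_curve(list_length, trials):
    """Proportion of trials on which each (0-based) list position was recalled.

    `trials` is a list of collections, each holding the positions recalled
    on one trial, in any order (free recall).
    """
    if not trials:
        raise ValueError("need at least one trial")
    counts = [0] * list_length
    for recalled in trials:
        for position in set(recalled):  # count each position once per trial
            counts[position] += 1
    return [count / len(trials) for count in counts]


# Hypothetical trials for a 10-item list (not real data).
trials = [{0, 1, 7, 8, 9}, {0, 8, 9}, {0, 4, 9}]
curve = serial_position_curve(10, trials)
print([round(p, 2) for p in curve])
# [1.0, 0.33, 0.0, 0.0, 0.33, 0.0, 0.0, 0.33, 0.67, 1.0]
```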

Rapid serial visual presentation (RSVP) is an experimental paradigm frequently used to examine the temporal characteristics of attention. Participants view a continuous stream of visual items, all shown one after another in the same location at a rate of around 10 items per second. Targets are embedded in this stream and differ from the remaining items, known as distractors; for example, a target may be marked by a change in color, or it may be a letter presented among digits.
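An RSVP stream of this kind can be generated in a few lines. This sketch assumes letter targets embedded among digit distractors and derives each item's onset from the rate of 10 items per second (100 ms per item); the function name, stream length, and target positions are hypothetical choices, and actual on-screen timing would depend on the presentation software and display.

```python
import random
import string


def make_rsvp_stream(n_items=20, target_positions=(5, 12), rate_hz=10.0, seed=None):
    """Return a list of items, each with its onset time and a target flag."""
    rng = random.Random(seed)
    soa_ms = 1000.0 / rate_hz  # 10 items per second -> 100 ms per item
    stream = []
    for i in range(n_items):
        is_target = i in target_positions
        # Targets are letters; distractors are digits (an assumption of this sketch).
        pool = string.ascii_uppercase if is_target else string.digits
        stream.append({"onset_ms": i * soa_ms, "item": rng.choice(pool), "is_target": is_target})
    return stream


stream = make_rsvp_stream(seed=1)
for entry in stream[:7]:
    marker = "  <- target" if entry["is_target"] else ""
    print(f"{entry['onset_ms']:6.0f} ms  {entry['item']}{marker}")
```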

Inhibition of return (IOR) refers to an orienting mechanism that briefly enhances the speed and accuracy with which an object is detected after the object is attended, but subsequently impairs detection speed and accuracy. IOR is usually measured with a cue-response paradigm, in which a person presses a button on detecting a target stimulus that follows a cue indicating the location at which the target will appear. The cue can be exogenous or endogenous. Inhibition of return results from oculomotor activation, regardless of whether that activation was produced exogenously or endogenously. Although IOR occurs for both visual and auditory stimuli, it is greater for visual stimuli and is studied more often in vision than in audition.
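In a cue-response paradigm, the effect is often quantified as a cueing effect: mean reaction time when the target appears at an uncued location minus mean reaction time when it appears at the cued location, computed separately for each cue-target interval (stimulus onset asynchrony, SOA). A positive value indicates facilitation and a negative value indicates inhibition of return. The sketch assumes trials recorded as `(soa_ms, cued, rt_ms)` tuples; the function names and the example numbers are hypothetical and are not experimental data.

```python
from statistics import mean


def cueing_effects(trials):
    """Map each SOA to mean uncued RT minus mean cued RT (in ms).

    Raises statistics.StatisticsError if an SOA lacks cued or uncued trials.
    """
    by_soa = {}
    for soa_ms, cued, rt_ms in trials:
        by_soa.setdefault(soa_ms, {True: [], False: []})[cued].append(rt_ms)
    return {
        soa: mean(rts[False]) - mean(rts[True])
        for soa, rts in sorted(by_soa.items())
    }


def classify(effect_ms):
    """Positive: faster at the cued location; negative: slower there (IOR)."""
    if effect_ms > 0:
        return "facilitation"
    if effect_ms < 0:
        return "inhibition of return"
    return "no cueing effect"


# Hypothetical reaction times, for illustration only.
trials = [
    (100, True, 300), (100, False, 330),
    (800, True, 350), (800, False, 330),
]
for soa, effect in cueing_effects(trials).items():
    print(soa, effect, classify(effect))
```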

Memory is the process of storing and recalling information that was previously acquired. Memory occurs through three fundamental stages: encoding, storage, and retrieval. Encoding is the process of transforming newly acquired information so that it can be placed into memory; this modification in the brain eases both storage and later retrieval. Storage is the retention of the encoded information, and retrieval is the process by which it is recalled and brought into conscious thinking. Modern memory psychology distinguishes two types of memory storage: short-term memory and long-term memory. Several models of memory have been proposed over the past century, some of them suggesting different relationships between short- and long-term memory to account for different ways of storing memory.

In psychology, context-dependent memory is the improved recall of specific episodes or information when the context present at encoding and at retrieval is the same. A particularly common example of context-dependence at work occurs when an individual has lost an item in an unknown location. Typically, people try to systematically "retrace their steps" to determine all of the possible places where the item might be. Given the role that context plays in recall, it is not surprising that individuals often discover the lost item quite easily upon returning to the correct context. This concept is closely related to the encoding specificity principle.

Emotion can have a powerful effect on memory in humans and animals. Numerous studies have shown that the most vivid autobiographical memories tend to be of emotional events, which are likely to be recalled more often and with more clarity and detail than neutral events.

Perceptual learning is the improvement of perceptual skills through experience, such as discriminating between two musical tones or categorizing spatial and temporal patterns relevant to real-world expertise, as in reading, seeing relations among chess pieces, or judging whether an X-ray image shows a tumor.

N2pc refers to an event-related potential (ERP) component linked to selective attention. The N2pc appears over visual cortex contralateral to the location in space to which subjects are attending; if subjects pay attention to the left side of the visual field, the N2pc appears in the right hemisphere of the brain, and vice versa. This characteristic makes it a useful tool for directly measuring the general direction of a person's attention with fine-grained temporal resolution.
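Because the N2pc is defined by its lateralization, it is usually isolated as a difference wave: activity at an electrode contralateral to the attended side minus activity at the mirror-image ipsilateral electrode. The functions below are a minimal sketch of that subtraction for averaged waveforms. The electrode pair (left- and right-hemisphere posterior sites, for example PO7 and PO8), the list-of-samples data format, the measurement window, and the example voltages are assumptions; baseline correction and artifact rejection are omitted.

```python
def n2pc_difference_wave(left_site, right_site, attended_side):
    """Contralateral-minus-ipsilateral waveform, sample by sample (microvolts).

    left_site / right_site: averaged voltages from a left- and a
    right-hemisphere posterior electrode (e.g. PO7 / PO8, an assumption).
    attended_side: "left" or "right" visual field.
    """
    if len(left_site) != len(right_site):
        raise ValueError("channels must have the same number of samples")
    if attended_side == "left":
        contra, ipsi = right_site, left_site   # left field -> right hemisphere
    elif attended_side == "right":
        contra, ipsi = left_site, right_site   # right field -> left hemisphere
    else:
        raise ValueError("attended_side must be 'left' or 'right'")
    return [c - i for c, i in zip(contra, ipsi)]


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean of the difference wave inside a user-chosen measurement window."""
    window = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples fall inside the window")
    return sum(window) / len(window)


# Hypothetical averaged voltages, for illustration only.
times = [0, 100, 200, 300]
left = [0.0, -0.5, -1.0, -0.5]
right = [0.0, -0.5, -3.0, -1.5]
wave = n2pc_difference_wave(left, right, attended_side="left")
print(wave, mean_amplitude(wave, times, 200, 300))
```

A negative mean amplitude in the window corresponds to greater negativity over the hemisphere contralateral to the attended side.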

Object-based attention refers to the relationship between an ‘object’ representation and a person’s visually stimulated, selective attention, as opposed to a relationship involving either a spatial or a feature representation, although these types of selective attention are not necessarily mutually exclusive. Research into object-based attention suggests that attention improves the quality of the sensory representation of a selected object, and results in the enhanced processing of that object’s features.

In cognitive psychology, intertrial priming is an accumulation of the priming effect over multiple trials, where "priming" is the effect of exposure to one stimulus on subsequently presented stimuli. Intertrial priming occurs when a target feature is repeated from one trial to the next, and typically results in faster response times to the target. A target is the stimulus participants are required to search for. For example, intertrial priming occurs when the task is to respond to either a red or a green target, and the response time to a red target is faster if the preceding trial also had a red target.
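Following the red/green example, the size of intertrial priming can be estimated by labeling each trial according to whether its target color repeats the previous trial's and comparing mean response times. The function name, the `(color, rt_ms)` trial format, and the example values are hypothetical; the first trial is excluded because it has no predecessor.

```python
from statistics import mean


def intertrial_priming(trials):
    """Return (repeat_mean, switch_mean, priming_ms) from an ordered trial list.

    Each trial is (target_color, rt_ms). Priming is switch minus repeat RT,
    so a positive value means faster responses when the color repeats.
    """
    repeat_rts, switch_rts = [], []
    for previous, current in zip(trials, trials[1:]):
        if current[0] == previous[0]:
            repeat_rts.append(current[1])
        else:
            switch_rts.append(current[1])
    repeat_mean, switch_mean = mean(repeat_rts), mean(switch_rts)
    return repeat_mean, switch_mean, switch_mean - repeat_mean


# Hypothetical session, for illustration only.
session = [("red", 500), ("red", 450), ("green", 520), ("green", 460), ("red", 530)]
print(intertrial_priming(session))
```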

Visual spatial attention is a form of visual attention that involves directing attention to a location in space. Together with its temporal counterpart, visual temporal attention, it has inspired attention modules that are widely implemented in computer vision, particularly in video analytics, to improve performance and to provide human-interpretable explanations of deep learning models.
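In computer vision, a spatial attention module is commonly a soft weighting over the locations of a feature map. The NumPy sketch below shows one generic form: scaled dot-product scores between each location's feature vector and a query vector, normalized with a softmax over all locations. It is not the design of any particular published model; the function name and inputs are hypothetical, and in a trained network the query would be learned. The returned attention map is the kind of output that is visualized as an interpretable explanation.

```python
import numpy as np


def spatial_attention_pool(features, query):
    """Soft spatial attention over a feature map.

    features: array of shape (H, W, C); query: array of shape (C,).
    Returns the attended feature vector (C,) and the attention map (H, W).
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)          # one row per spatial location
    scores = flat @ query / np.sqrt(c)         # scaled dot-product score per location
    scores -= scores.max()                     # subtract max for a numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum()                   # softmax over all H*W locations
    attended = weights @ flat                  # attention-weighted sum of location features
    return attended, weights.reshape(h, w)


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 5, 8))  # hypothetical 4x5 feature map with 8 channels
query = rng.normal(size=8)             # hypothetical query vector
attended, attention_map = spatial_attention_pool(features, query)
print(attention_map.round(3))
```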

Visual selective attention is a brain function that controls the processing of retinal input based on whether it is relevant or important. It selects particular representations to enter perceptual awareness and therefore guide behaviour. Through this process, less relevant information is suppressed.