Psychological statistics is the application of formulas, theorems, numbers and laws to psychology. Statistical methods for psychology include the development and application of statistical theory and methods for modeling psychological data. These methods include psychometrics, factor analysis, experimental designs, and Bayesian statistics.

Emotional intelligence (EI) is most often defined as the ability to perceive, use, understand, manage, and handle emotions. People with high emotional intelligence can recognize their own emotions and those of others, use emotional information to guide thinking and behavior, discern between different feelings and label them appropriately, and adjust emotions to adapt to environments.

Validity is the extent to which a concept, conclusion or measurement is well-founded and likely corresponds accurately to the real world. The word "valid" is derived from the Latin validus, meaning strong. The validity of a measurement tool is the degree to which the tool measures what it claims to measure. Validity is based on the strength of a collection of different types of evidence.

In the social sciences, scaling is the process of measuring or ordering entities with respect to quantitative attributes or traits. For example, a scaling technique might involve estimating individuals' levels of extraversion, or the perceived quality of products. Certain methods of scaling permit estimation of magnitudes on a continuum, while other methods provide only for relative ordering of the entities.
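The paragraph above does not name a particular method. One classic technique that estimates magnitudes on a continuum, rather than only an order, is Thurstone's paired-comparison scaling (Case V of the law of comparative judgment). It is chosen here purely as an illustration. The sketch below assumes a hypothetical matrix `prefs`, where `prefs[i][j]` is the proportion of judges who preferred item i over item j. Each proportion is converted to a unit-normal deviate, and the deviates are averaged for each item.

```python
from statistics import NormalDist


def thurstone_case_v(prefs):
    """Scale values from a square matrix of paired-comparison proportions.

    prefs[i][j] is the proportion of judges preferring item i over item j,
    so prefs[j][i] should equal 1 - prefs[i][j]. The diagonal is ignored.
    Proportions of exactly 0 or 1 have no finite z-score and will raise.
    """
    n = len(prefs)
    z = NormalDist().inv_cdf  # maps a proportion to a unit-normal deviate
    scale = []
    for i in range(n):
        # Self-comparisons count as 0.5, i.e. a z-score of 0.
        total = sum(z(prefs[i][j]) if i != j else 0.0 for j in range(n))
        scale.append(total / n)
    # Anchor the lowest item at 0 so the values read as distances.
    low = min(scale)
    return [s - low for s in scale]


# Hypothetical data: A beats B 70% of the time, A beats C 90%, B beats C 70%.
prefs = [
    [0.5, 0.7, 0.9],
    [0.3, 0.5, 0.7],
    [0.1, 0.3, 0.5],
]
print(thurstone_case_v(prefs))
```

On these made-up proportions the values come out near 1.20, 0.60 and 0. This places B roughly midway between A and C, whereas a simple ranking of the same judgments would only report A > B > C.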

Quantitative marketing research is the application of quantitative research techniques to the field of marketing research. It has roots both in the positivist view of the world and in the modern marketing viewpoint that marketing is an interactive process in which the buyer and seller reach a satisfying agreement on the "four Ps" of marketing: Product, Price, Place (location) and Promotion.

The g factor is a construct developed in psychometric investigations of cognitive abilities and human intelligence. It is a variable that summarizes positive correlations among different cognitive tasks, reflecting the fact that an individual's performance on one type of cognitive task tends to be comparable to that person's performance on other kinds of cognitive tasks. The g factor typically accounts for 40 to 50 percent of the between-individual performance differences on a given cognitive test, and composite scores based on many tests are frequently regarded as estimates of individuals' standing on the g factor. The terms IQ, general intelligence, general cognitive ability, general mental ability, and simply intelligence are often used interchangeably to refer to this common core shared by cognitive tests. However, the g factor itself is a mathematical construct indicating the level of observed correlation between cognitive tasks. The measured value of this construct depends on the cognitive tasks that are used, and little is known about the underlying causes of the observed correlations.
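A quick way to see how much shared variance a positive manifold carries is to compute the largest eigenvalue of the test correlation matrix and divide it by the number of tests. This gives the variance share of the first principal component. This is an approximation of my choosing and is not how g is usually extracted: factor-analytic methods also model each test's unique variance, so they give somewhat different numbers. The sketch uses plain power iteration, and the correlation matrix is hypothetical.

```python
def first_component_share(corr, iters=1000, tol=1e-12):
    """Largest eigenvalue of a correlation matrix divided by its size.

    For standardized tests this is the share of total variance carried by
    the first principal component (an approximation to a general factor).
    """
    n = len(corr)
    v = [1.0] * n  # all-ones start suits a matrix of positive correlations
    eigenvalue = 0.0
    for _ in range(iters):
        # Multiply by the matrix, then normalise to unit length.
        w = [sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        w = [x / norm for x in w]
        # Rayleigh quotient w'Rw estimates the dominant eigenvalue.
        new_eig = sum(
            w[i] * sum(corr[i][j] * w[j] for j in range(n)) for i in range(n)
        )
        v = w
        converged = abs(new_eig - eigenvalue) < tol
        eigenvalue = new_eig
        if converged:
            break
    return eigenvalue / n


# Hypothetical battery: five tests that all correlate 0.3 with one another.
battery = [[1.0 if i == j else 0.3 for j in range(5)] for i in range(5)]
print(first_component_share(battery))
```

For this made-up battery the first component carries 1 + 4(0.3) = 2.2 of the 5 units of standardized variance, or 44 percent. The value depends entirely on which tests are in the matrix, echoing the point above that the measured value of the construct depends on the tasks used.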

Construct validity concerns how well a set of indicators represents or reflects a concept that is not directly measurable. Construct validation is the accumulation of evidence to support the interpretation of what a measure reflects. Modern validity theory defines construct validity as the overarching concern of validity research, subsuming all other types of validity evidence such as content validity and criterion validity.

The Personality Assessment Inventory (PAI), developed by Leslie Morey, is a 344-item self-report personality test that assesses a respondent's personality and psychopathology. Each item is a statement about the respondent, which the respondent rates on a 4-point scale. It is used in various contexts, including psychotherapy, crisis/evaluation, forensic, personnel selection, pain/medical, and child custody assessment. The test construction strategy for the PAI was primarily deductive and rational. It shows good convergent validity with other personality tests, such as the Minnesota Multiphasic Personality Inventory and the Revised NEO Personality Inventory.

In psychometrics, criterion validity, or criterion-related validity, is the extent to which an operationalization of a construct, such as a test, relates to, or predicts, a theoretical representation of the construct: the criterion. Criterion validity is often divided into concurrent and predictive validity, based on the timing of measurement for the "predictor" and the outcome. Concurrent validity refers to a comparison between the measure in question and an outcome assessed at the same time. The Standards for Educational & Psychological Tests states, "concurrent validity reflects only the status quo at a particular time." Predictive validity, on the other hand, compares the measure in question with an outcome assessed at a later time. Although concurrent and predictive validity are similar, the terms and their findings should be kept separate: "Concurrent validity should not be used as a substitute for predictive validity without an appropriate supporting rationale." Criterion validity is typically assessed by comparison with a gold standard test.
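When the gold standard is categorical, such as a diagnosis, agreement is often summarized by sensitivity (the share of true cases the test flags) and specificity (the share of non-cases the test clears). The paragraph above does not prescribe these indices, so this is one common option rather than the definitive method. The sketch assumes test scores have already been dichotomised at a cutoff. The scores, the cutoff of 18 and the diagnoses are all hypothetical.

```python
from collections import Counter


def agreement_with_gold_standard(test_positive, gold_positive):
    """Sensitivity and specificity of a dichotomised test against a gold standard.

    Both arguments are equal-length lists of booleans, one per respondent.
    Assumes the gold standard contains at least one case and one non-case.
    """
    if len(test_positive) != len(gold_positive):
        raise ValueError("both lists must describe the same respondents")
    counts = Counter(zip(test_positive, gold_positive))
    tp = counts[(True, True)]    # flagged by the test, case by the gold standard
    fn = counts[(False, True)]   # gold-standard case the test missed
    tn = counts[(False, False)]  # non-case correctly cleared
    fp = counts[(True, False)]   # non-case the test flagged
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical scores, a hypothetical cutoff of 18, and gold-standard diagnoses.
scores = [22, 19, 30, 8, 17, 12]
gold = [True, False, True, False, True, False]
flagged = [s >= 18 for s in scores]
print(agreement_with_gold_standard(flagged, gold))
```

If the gold standard were collected at the same sitting, this would be concurrent evidence. If it were collected later, the same computation would bear on predictive validity.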

Concurrent validity is a type of evidence that can be gathered to defend the use of a test for predicting other outcomes. It is used in sociology, psychology, and other psychometric or behavioral sciences. Concurrent validity is demonstrated when a test correlates well with a measure that has previously been validated. The two measures may assess the same construct, but more often they assess different, though presumably related, constructs.
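"Correlates well" is usually quantified with a Pearson correlation between the new test and the previously validated measure, both collected at the same time. The sketch below computes that coefficient directly. The two score lists are invented for illustration and do not come from any real instrument.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx = sum(x) / n
    my = sum(y) / n
    # Cross-products and sums of squares of deviations from the means.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores from one session: a new test and an established measure.
new_test = [10, 12, 15, 18, 20]
established = [30, 35, 41, 50, 54]
print(round(pearson_r(new_test, established), 3))
```

How large a correlation counts as "well" is a judgment that depends on the constructs involved. When the two measures target different but related constructs, a more modest correlation may be expected.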

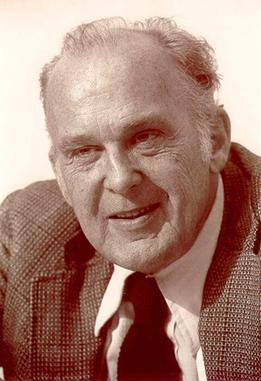

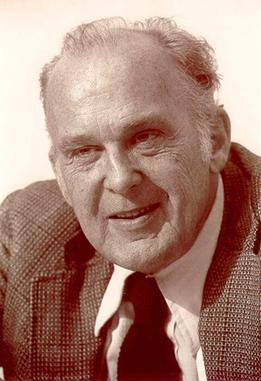

Donald Thomas Campbell was an American social scientist. He is noted for his work in methodology. He coined the term evolutionary epistemology and developed a selectionist theory of human creativity. A Review of General Psychology survey, published in 2002, ranked Campbell as the 33rd most cited psychologist of the 20th century.

In psychology, discriminant validity tests whether concepts or measurements that are not supposed to be related are actually unrelated.
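A low observed correlation between two measures that should be unrelated is weak evidence on its own if the sample is small. One way to express the uncertainty is a confidence interval for the correlation, computed with Fisher's z transformation. This technique is my choice for illustration, and the correlation of 0.08 and the sample size of 200 are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def correlation_ci(r, n, level=0.95):
    """Approximate confidence interval for a Pearson correlation (Fisher's z)."""
    if n <= 3:
        raise ValueError("need more than 3 observations")
    z = atanh(r)                # Fisher transform: roughly normal
    se = 1 / sqrt(n - 3)        # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    # Back-transform the interval limits to the correlation scale.
    return tanh(z - crit * se), tanh(z + crit * se)


# Hypothetical: two measures that should be unrelated, r = 0.08, n = 200.
print(correlation_ci(0.08, 200))
```

An interval that spans zero and stays narrow is consistent with the measures being unrelated, though it does not prove it. In practice, discriminant evidence is usually judged against the convergent correlations of the same measures rather than in isolation.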

In statistics, confirmatory factor analysis (CFA) is a special form of factor analysis, most commonly used in social science research. It is used to test whether measures of a construct are consistent with a researcher's understanding of the nature of that construct. As such, the objective of confirmatory factor analysis is to test whether the data fit a hypothesized measurement model. This hypothesized model is based on theory and/or previous analytic research. CFA was first developed by Jöreskog (1969) and has built upon and replaced older methods of analyzing construct validity such as the MTMM Matrix as described in Campbell & Fiske (1959).
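In a standardized one-factor model, the correlation implied between indicators i and j is the product of their loadings. Testing fit means comparing those implied values with the observed correlations. The sketch below takes the hypothesized loadings as given and reports the residuals and the standardized root mean square residual (SRMR). It does not estimate anything. Real CFA software estimates the loadings (for example by maximum likelihood) and reports further fit statistics. The loadings and correlations here are hypothetical.

```python
from math import sqrt


def one_factor_fit(observed, loadings):
    """Residuals and SRMR for a standardized one-factor model.

    Implied correlation for i != j is loadings[i] * loadings[j]; the diagonal
    is 1 because each indicator's unique variance is 1 - loadings[i] ** 2.
    """
    p = len(loadings)
    residuals = [[0.0] * p for _ in range(p)]
    squared = []
    for i in range(p):
        for j in range(i + 1):  # lower triangle, diagonal included
            implied = 1.0 if i == j else loadings[i] * loadings[j]
            res = observed[i][j] - implied
            residuals[i][j] = residuals[j][i] = res
            squared.append(res ** 2)
    # SRMR averages squared residuals over the p(p + 1) / 2 unique elements.
    srmr = sqrt(sum(squared) / len(squared))
    return residuals, srmr


# Hypothetical hypothesized loadings and observed correlations.
loadings = [0.8, 0.7, 0.6]
observed = [
    [1.00, 0.60, 0.48],
    [0.60, 1.00, 0.42],
    [0.48, 0.42, 1.00],
]
residuals, srmr = one_factor_fit(observed, loadings)
print(round(srmr, 4))
```

Here only the first pair departs from the model (0.60 observed against 0.56 implied), so the SRMR is small. Larger residuals concentrated in particular pairs would point to where the hypothesized model misfits.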

Donald Winslow Fiske was an American psychologist.

The multitrait-multimethod (MTMM) matrix is an approach to examining construct validity developed by Campbell and Fiske (1959). It organizes convergent and discriminant validity evidence so that a measure's relations to other measures can be compared. The conceptual approach has influenced experimental design and measurement theory in psychology, including applications in structural equation models.
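An MTMM matrix contains correlations among measures that each combine one trait with one method. Its blocks can be sorted by whether the pair shares the trait, the method, both, or neither. The sketch below labels each measure as a (trait, method) pair and averages three block types. Convergent correlations (same trait, different method) are expected to exceed heterotrait correlations, especially those with different methods. Averaging is my simplification: Campbell and Fiske's criteria compare individual entries. The traits, methods and correlations are hypothetical.

```python
from itertools import combinations
from statistics import mean


def mtmm_summary(labels, corr):
    """Mean correlation in each off-diagonal block type of an MTMM matrix.

    labels[k] is the (trait, method) pair describing measure k.
    """
    groups = {
        "convergent": [],                # same trait, different method
        "heterotrait_monomethod": [],    # different trait, same method
        "heterotrait_heteromethod": [],  # different trait, different method
    }
    for a, b in combinations(range(len(labels)), 2):
        (ta, ma), (tb, mb) = labels[a], labels[b]
        r = corr[a][b]
        if ta == tb and ma != mb:
            groups["convergent"].append(r)
        elif ta != tb and ma == mb:
            groups["heterotrait_monomethod"].append(r)
        elif ta != tb and ma != mb:
            groups["heterotrait_heteromethod"].append(r)
    return {name: mean(vals) for name, vals in groups.items() if vals}


labels = [("anxiety", "self"), ("extraversion", "self"),
          ("anxiety", "peer"), ("extraversion", "peer")]
corr = [
    [1.00, 0.30, 0.60, 0.10],
    [0.30, 1.00, 0.15, 0.65],
    [0.60, 0.15, 1.00, 0.25],
    [0.10, 0.65, 0.25, 1.00],
]
print(mtmm_summary(labels, corr))
```

In this made-up matrix the convergent mean (0.625) is well above the same-method heterotrait mean (0.275), which in turn exceeds the different-method heterotrait mean (0.125). The gap between the last two hints at shared method variance.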


The Narcissistic Personality Inventory (NPI) was developed in 1979 by Raskin and Hall and has since become one of the most widely used personality measures of non-clinical levels of the trait narcissism. Since its initial development, the NPI has evolved from 220 items to the more commonly employed NPI-40 (1984) and NPI-16 (2006), as well as the novel NPI-1 inventory (2014). Derived from the DSM-III criteria for narcissistic personality disorder (NPD), the NPI has been used heavily by personality and social psychology researchers.

The Levenson Self-Report Psychopathy scale (LSRP) is a 26-item, 4-point Likert-scale self-report inventory that measures primary and secondary psychopathy in non-institutionalised populations. It was developed in 1995 by Michael R. Levenson, Kent A. Kiehl and Cory M. Fitzpatrick. The scale was created for a psychological study of antisocial disposition in a sample of 487 undergraduate students attending psychology classes at the University of California, Davis.

The Children's Depression Inventory (CDI) is a psychological assessment that rates the severity of symptoms related to depression or dysthymic disorder in children and adolescents. The CDI is a 27-item, self-rated, symptom-oriented scale. The assessment is now in its second edition. The 27 items are grouped into five major factor areas. Clients rate themselves based on how they feel and think, with each item rated from 0 to 2. The CDI was developed by American clinical psychologist Maria Kovacs, PhD, and was published in 1979. It was modeled on the Beck Depression Inventory (BDI) of 1967 for adults. The CDI is a widely used and accepted assessment of the severity of depressive symptoms in children and youth, and it has high reliability. Its validity is well established through a variety of techniques, and it has good psychometric properties. The CDI is a Level B test.
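With 27 items each rated 0 to 2, raw totals on the format described above can run from 0 to 54. Reliability of such a scale is often reported as Cronbach's alpha, which compares the sum of item variances with the variance of the total score. The paragraph above does not say which reliability index underlies the CDI's reported reliability, so alpha is an assumption here. The tiny response table below is hypothetical and is not CDI data.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items table of ratings."""
    if not responses or len(responses[0]) < 2:
        raise ValueError("need at least one respondent and two items")
    k = len(responses[0])
    items = list(zip(*responses))  # one tuple of ratings per item
    item_var = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    # alpha = k / (k - 1) * (1 - sum of item variances / total-score variance)
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical ratings (0-2) from four respondents on three items.
responses = [
    [0, 1, 0],
    [1, 1, 2],
    [2, 2, 1],
    [1, 0, 1],
]
print(round(cronbach_alpha(responses), 3))
```

Using population variances throughout is a deliberate choice. The ratio inside the formula is the same whichever variance denominator is used, as long as it is used consistently.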

The Dark Triad Dirty Dozen (DTDD) is a brief 12-question personality inventory that assesses the possible presence of three co-morbid, socially maladaptive dark triad traits: Machiavellianism, narcissism, and psychopathy. The DTDD was developed to identify the dark triad traits in subclinical adult populations. It is a screening test, and high scores on the DTDD do not necessarily correlate with clinical diagnoses.