The Defense Advanced Research Projects Agency (DARPA) is a research and development agency of the United States Department of Defense responsible for the development of emerging technologies for use by the military.

Data mining is the process of extracting and discovering patterns in large data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal of extracting information from a data set and transforming the information into a comprehensible structure for further use. Data mining is the analysis step of the "knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating.

Surveillance is the monitoring of behavior, activities, or information for the purpose of information gathering, influencing, managing, or directing. This can include observation from a distance by means of electronic equipment, such as closed-circuit television (CCTV), or interception of electronically transmitted information like Internet traffic. It can also include simple technical methods, such as human intelligence gathering and postal interception.

Computer and network surveillance is the monitoring of computer activity and data stored locally on a computer or data being transferred over computer networks such as the Internet. This monitoring is often carried out covertly and may be conducted by governments, corporations, criminal organizations, or individuals. It may or may not be legal and may or may not require authorization from a court or other independent government agencies. Computer and network surveillance programs are widespread today and almost all Internet traffic can be monitored.

The Information Awareness Office (IAO) was established by the United States Defense Advanced Research Projects Agency (DARPA) in January 2002 to bring together several DARPA projects focused on applying surveillance and information technology to track and monitor terrorists and other asymmetric threats to U.S. national security by achieving "Total Information Awareness" (TIA).

Total Information Awareness (TIA) was a mass detection program by the United States Information Awareness Office. It operated under this title from February to May 2003 before being renamed Terrorism Information Awareness.

Carnivore, later renamed DCS1000, was a system implemented by the Federal Bureau of Investigation (FBI) that was designed to monitor email and electronic communications. It used a customizable packet sniffer that could monitor all of a target user's Internet traffic. Carnivore was implemented in October 1997. By 2005 it had been replaced with improved commercial software.

Enterprise content management (ECM) extends the concept of content management by adding a timeline for each content item and, possibly, enforcing processes for its creation, approval, and distribution. Systems using ECM generally provide a secure repository for managed items, analog or digital. They also include one or more methods for importing content to bring new items under management, and several presentation methods to make items available for use. ECM content may be protected by digital rights management (DRM), but this is not required. ECM is distinguished from general content management by its cognizance of the processes and procedures of the enterprise for which it is created.

Investigative Data Warehouse (IDW) is a searchable database operated by the FBI. It was created in 2004. Much of the nature and scope of the database is classified. The database is a centralization of multiple federal and state databases, including criminal records from various law enforcement agencies, the U.S. Department of the Treasury's Financial Crimes Enforcement Network (FinCEN), and public records databases. According to Michael Morehart's testimony before the House Committee on Financial Services in 2006, the "IDW is a centralized, web-enabled, closed system repository for intelligence and investigative data. This system, maintained by the FBI, allows appropriately trained and authorized personnel throughout the country to query for information of relevance to investigative and intelligence matters."

The Digital Collection System Network (DCSNet) is the Federal Bureau of Investigation's (FBI) point-and-click surveillance system that can perform instant wiretaps on almost any telecommunications device in the United States.

Jaime Guillermo Carbonell was a computer scientist who made seminal contributions to the development of natural language processing tools and technologies. His extensive research in machine translation resulted in the development of several state-of-the-art language translation and artificial intelligence systems. He earned B.S. degrees in Physics and in Mathematics from MIT in 1975 and completed his Ph.D. under Dr. Roger Schank at Yale University in 1979. He joined Carnegie Mellon University as an assistant professor of computer science in 1979 and lived in Pittsburgh from then on. He was affiliated with the Language Technologies Institute, Computer Science Department, Machine Learning Department, and Computational Biology Department at Carnegie Mellon.

MiTAP, or Mitre Text and Audio Processing, is a computer system that tries to automatically gather, translate, organize, and present information "for monitoring infectious disease outbreaks and other global events." It is also used in the FBI Investigative Data Warehouse.

Apache cTAKES (clinical Text Analysis and Knowledge Extraction System) is an open-source natural language processing (NLP) system that extracts clinical information from the unstructured text of electronic health records. It processes clinical notes, identifying types of clinical named entities: drugs, diseases/disorders, signs/symptoms, anatomical sites, and procedures. Each named entity has attributes for the text span, the ontology mapping code, the context, and whether it is negated.
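The named-entity attributes listed above can be pictured as a simple record. The following Python sketch is illustrative only: cTAKES is not a Python library, and the class name `ClinicalEntity`, its field names, the sample note, and the `CODE-…` values are all hypothetical placeholders rather than real cTAKES types or ontology codes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ClinicalEntity:
    """Hypothetical record for one named entity; not an actual cTAKES class."""
    entity_type: str    # e.g. "drug", "disease/disorder", "sign/symptom"
    begin: int          # start offset of the text span within the note
    end: int            # end offset of the span (exclusive)
    ontology_code: str  # placeholder value, not a real ontology identifier
    context: str        # assumed here to be the section of the note
    negated: bool       # True when the note negates the mention

    def covered_text(self, note: str) -> str:
        """Return the substring of the note that this entity spans."""
        return note[self.begin:self.end]


def asserted(entities: List[ClinicalEntity]) -> List[ClinicalEntity]:
    """Keep only the entities that are not negated."""
    return [e for e in entities if not e.negated]


note = "Patient denies chest pain. Started aspirin."
entities = [
    ClinicalEntity("sign/symptom", 15, 25, "CODE-0001", "history", True),
    ClinicalEntity("drug", 35, 42, "CODE-0002", "history", False),
]

for entity in entities:
    print(entity.entity_type, repr(entity.covered_text(note)), entity.negated)
```

Keeping the negation flag on the entity lets downstream code separate "denies chest pain" from an affirmed finding without re-reading the note.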

The StingRay is an IMSI-catcher, a cellular phone surveillance device, manufactured by Harris Corporation. Initially developed for the military and intelligence community, the StingRay and similar Harris devices are in widespread use by local and state law enforcement agencies across Canada, the United States, and the United Kingdom. Stingray has also become a generic name to describe these kinds of devices.

Cyber Insider Threat, or CINDER, is a digital threat method. In 2010, DARPA initiated a program under the same name to develop novel approaches to the detection of activities within military-interest networks that are consistent with the activities of cyber espionage.

The following outline is provided as an overview of and topical guide to natural-language processing:

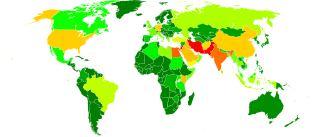

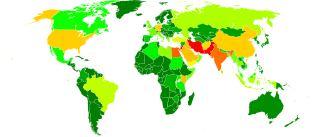

The practice of mass surveillance in the United States dates back to wartime monitoring and censorship of international communications from, to, or which passed through the United States. After the First and Second World Wars, mass surveillance continued throughout the Cold War period, via programs such as the Black Chamber and Project SHAMROCK. The formation and growth of federal law-enforcement and intelligence agencies such as the FBI, CIA, and NSA institutionalized surveillance that was also used to silence political dissent, as evidenced by COINTELPRO projects which targeted various organizations and individuals. During the Civil Rights Movement era, many individuals put under surveillance orders were first labelled as integrationists, then deemed subversive, and sometimes suspected of supporting the communist model of the United States' rival at the time, the Soviet Union. Other targeted individuals and groups included Native American activists, African American and Chicano liberation movement activists, and anti-war protesters.

Government hacking permits the exploitation of vulnerabilities in electronic products, especially software, to gain remote access to information of interest. This information allows government investigators to monitor user activity and interfere with device operation. Government attacks on security may include malware and encryption backdoors. The National Security Agency's PRISM program and Ethiopia's use of FinSpy are notable examples.

The Internet of Military Things (IoMT) is a class of Internet of things for combat operations and warfare. It is a complex network of interconnected entities, or "things", in the military domain that continually communicate with each other to coordinate, learn, and interact with the physical environment to accomplish a broad range of activities in a more efficient and informed manner. The concept of IoMT is largely driven by the idea that future military battles will be dominated by machine intelligence and cyber warfare and will likely take place in urban environments. By creating a miniature ecosystem of smart technology capable of distilling sensory information and autonomously governing multiple tasks at once, the IoMT is conceptually designed to offload much of the physical and mental burden that warfighters encounter in a combat setting.

Hancock is a C-based programming language, first developed by researchers at AT&T Labs in 1998, to analyze data streams. The language was intended by its creators to improve the efficiency and scale of data mining. Hancock works by creating profiles of individuals, utilizing data to provide behavioral and social network information.
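The idea of building per-individual profiles from a data stream can be sketched in a few lines. This example is written in Python rather than Hancock, whose syntax is not shown here. The record layout (caller, callee, duration), the `Profile` fields, and the sample data are assumptions made purely for illustration; they do not reproduce Hancock's own constructs.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set, Tuple

# Hypothetical record layout: (caller, callee, duration in seconds).
CallRecord = Tuple[str, str, int]


@dataclass
class Profile:
    """Running summary for one individual, updated one record at a time."""
    calls: int = 0
    total_seconds: int = 0
    contacts: Set[str] = field(default_factory=set)


def build_profiles(stream: Iterable[CallRecord]) -> Dict[str, Profile]:
    """Consume the stream in a single pass, keeping one profile per caller."""
    profiles: Dict[str, Profile] = defaultdict(Profile)
    for caller, callee, seconds in stream:
        profile = profiles[caller]
        profile.calls += 1                # behavioral information
        profile.total_seconds += seconds
        profile.contacts.add(callee)      # social network information
    return dict(profiles)


records = [
    ("alice", "bob", 60),
    ("alice", "carol", 30),
    ("bob", "alice", 45),
    ("alice", "bob", 15),
]
profiles = build_profiles(records)
print(profiles["alice"])
```

Because each record is folded into a small running summary and then discarded, memory grows with the number of individuals rather than with the length of the stream.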