In mathematics, the determinant is a scalar-valued function of the entries of a square matrix. The determinant of a matrix A is commonly denoted det(A), det A, or |A|. Its value characterizes some properties of the matrix and the linear map represented, on a given basis, by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism.

Multiplication is one of the four elementary mathematical operations of arithmetic, with the other ones being addition, subtraction, and division. The result of a multiplication operation is called a product.

A multiplication algorithm is an algorithm to multiply two numbers. Depending on the size of the numbers, different algorithms are more efficient than others. Numerous algorithms are known and there has been much research into the topic.

In mathematics, specifically in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers, and returns a single number. In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used. It is often called the inner product of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space.

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order . Any two square matrices of the same order can be added and multiplied.

In linear algebra, the Cayley–Hamilton theorem states that every square matrix over a commutative ring satisfies its own characteristic equation.

In linear algebra, an invertible matrix is a square matrix which has an inverse. In other words, if some other matrix is multiplied by the invertible matrix, the result can be multiplied by an inverse to undo the operation. An invertible matrix multiplied by its inverse yields the identity matrix. Invertible matrices are the same size as their inverse.

In mathematics, and more specifically in computer algebra, computational algebraic geometry, and computational commutative algebra, a Gröbner basis is a particular kind of generating set of an ideal in a polynomial ring K[x1, ..., xn] over a field K. A Gröbner basis allows many important properties of the ideal and the associated algebraic variety to be deduced easily, such as the dimension and the number of zeros when it is finite. Gröbner basis computation is one of the main practical tools for solving systems of polynomial equations and computing the images of algebraic varieties under projections or rational maps.

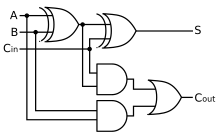

An adder, or summer, is a digital circuit that performs addition of numbers. In many computers and other kinds of processors, adders are used in the arithmetic logic units (ALUs). They are also used in other parts of the processor, where they are used to calculate addresses, table indices, increment and decrement operators and similar operations.

Modular exponentiation is exponentiation performed over a modulus. It is useful in computer science, especially in the field of public-key cryptography, where it is used in both Diffie–Hellman key exchange and RSA public/private keys.

In modular arithmetic computation, Montgomery modular multiplication, more commonly referred to as Montgomery multiplication, is a method for performing fast modular multiplication. It was introduced in 1985 by the American mathematician Peter L. Montgomery.

A fraction represents a part of a whole or, more generally, any number of equal parts. When spoken in everyday English, a fraction describes how many parts of a certain size there are, for example, one-half, eight-fifths, three-quarters. A common, vulgar, or simple fraction consists of an integer numerator, displayed above a line, and a non-zero integer denominator, displayed below that line. If these integers are positive, then the numerator represents a number of equal parts, and the denominator indicates how many of those parts make up a unit or a whole. For example, in the fraction 3/4, the numerator 3 indicates that the fraction represents 3 equal parts, and the denominator 4 indicates that 4 parts make up a whole. The picture to the right illustrates 3/4 of a cake.

A division algorithm is an algorithm which, given two integers N and D, computes their quotient and/or remainder, the result of Euclidean division. Some are applied by hand, while others are employed by digital circuit designs and software.

A Wallace multiplier is a hardware implementation of a binary multiplier, a digital circuit that multiplies two integers. It uses a selection of full and half adders to sum partial products in stages until two numbers are left. Wallace multipliers reduce as much as possible on each layer, whereas Dadda multipliers try to minimize the required number of gates by postponing the reduction to the upper layers.

A carry-save adder is a type of digital adder, used to efficiently compute the sum of three or more binary numbers. It differs from other digital adders in that it outputs two numbers, and the answer of the original summation can be achieved by adding these outputs together. A carry save adder is typically used in a binary multiplier, since a binary multiplier involves addition of more than two binary numbers after multiplication. A big adder implemented using this technique will usually be much faster than conventional addition of those numbers.

A binary multiplier is an electronic circuit used in digital electronics, such as a computer, to multiply two binary numbers.

The Lehmer random number generator, sometimes also referred to as the Park–Miller random number generator, is a type of linear congruential generator (LCG) that operates in multiplicative group of integers modulo n. The general formula is

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, with elements or entries arranged in rows and columns, which is used to represent a mathematical object or property of such an object.

The carry-less product of two binary numbers is the result of carry-less multiplication of these numbers. This operation conceptually works like long multiplication except for the fact that the carry is discarded instead of applied to the more significant position. It can be used to model operations over finite fields, in particular multiplication of polynomials from GF(2)[X], the polynomial ring over GF(2).