In computer science, mutual exclusion is a property of concurrency control, which is instituted for the purpose of preventing race conditions. It is the requirement that one thread of execution never enters a critical section while a concurrent thread of execution is already accessing said critical section, which refers to an interval of time during which a thread of execution accesses a shared resource or shared memory.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. In many cases, a thread is a component of a process.

In computer science, a lock or mutex is a synchronization primitive that prevents state from being modified or accessed by multiple threads of execution at once. Locks enforce mutual exclusion concurrency control policies, and with a variety of possible methods there exist multiple unique implementations for different applications.

A race condition or race hazard is the condition of an electronics, software, or other system where the system's substantive behavior is dependent on the sequence or timing of other uncontrollable events, leading to unexpected or inconsistent results. It becomes a bug when one or more of the possible behaviors is undesirable.

In concurrent programming, concurrent accesses to shared resources can lead to unexpected or erroneous behavior. Thus, the parts of the program where the shared resource is accessed need to be protected in ways that avoid the concurrent access. One way to do so is known as a critical section or critical region. This protected section cannot be entered by more than one process or thread at a time; others are suspended until the first leaves the critical section. Typically, the critical section accesses a shared resource, such as a data structure, peripheral device, or network connection, that would not operate correctly in the context of multiple concurrent accesses.

In concurrent programming, a monitor is a synchronization construct that prevents threads from concurrently accessing a shared object's state and allows them to wait for the state to change. They provide a mechanism for threads to temporarily give up exclusive access in order to wait for some condition to be met, before regaining exclusive access and resuming their task. A monitor consists of a mutex (lock) and at least one condition variable. A condition variable is explicitly 'signalled' when the object's state is modified, temporarily passing the mutex to another thread 'waiting' on the condition variable.

In computer science, the reentrant mutex is a particular type of mutual exclusion (mutex) device that may be locked multiple times by the same process/thread, without causing a deadlock.

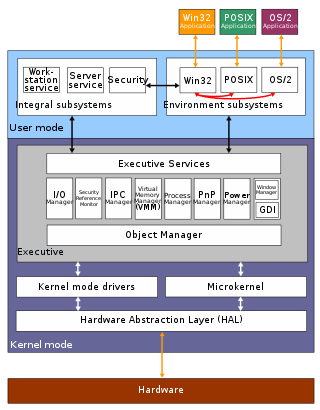

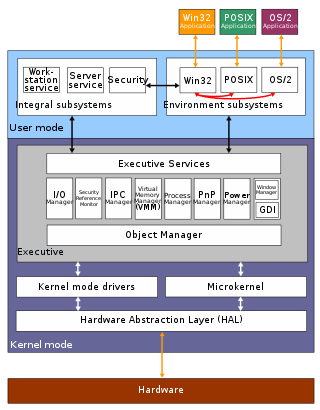

The architecture of Windows NT, a line of operating systems produced and sold by Microsoft, is a layered design that consists of two main components, user mode and kernel mode. It is a preemptive, reentrant multitasking operating system, which has been designed to work with uniprocessor and symmetrical multiprocessor (SMP)-based computers. To process input/output (I/O) requests, it uses packet-driven I/O, which utilizes I/O request packets (IRPs) and asynchronous I/O. Starting with Windows XP, Microsoft began making 64-bit versions of Windows available; before this, there were only 32-bit versions of these operating systems.

In computer science, future, promise, delay, and deferred refer to constructs used for synchronizing program execution in some concurrent programming languages. They describe an object that acts as a proxy for a result that is initially unknown, usually because the computation of its value is not yet complete.

Concurrent computing is a form of computing in which several computations are executed concurrently—during overlapping time periods—instead of sequentially—with one completing before the next starts.

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action.

The Microsoft Windows operating system supports a form of shared libraries known as "dynamic-link libraries", which are code libraries that can be used by multiple processes while only one copy is loaded into memory. This article provides an overview of the core libraries that are included with every modern Windows installation, on top of which most Windows applications are built.

The Java programming language and the Java virtual machine (JVM) is designed to support concurrent programming. All execution takes place in the context of threads. Objects and resources can be accessed by many separate threads. Each thread has its own path of execution, but can potentially access any object in the program. The programmer must ensure read and write access to objects is properly coordinated between threads. Thread synchronization ensures that objects are modified by only one thread at a time and prevents threads from accessing partially updated objects during modification by another thread. The Java language has built-in constructs to support this coordination.

Object Manager is a subsystem implemented as part of the Windows Executive which manages Windows resources. Resources, which are surfaced as logical objects, each reside in a namespace for categorization. Resources can be physical devices, files or folders on volumes, Registry entries or even running processes. All objects representing resources have an Object Type property and other metadata about the resource. Object Manager is a shared resource, and all subsystems that deal with the resources have to pass through the Object Manager.

Microsoft SQL Server is a proprietary relational database management system developed by Microsoft. As a database server, it is a software product with the primary function of storing and retrieving data as requested by other software applications—which may run either on the same computer or on another computer across a network. Microsoft markets at least a dozen different editions of Microsoft SQL Server, aimed at different audiences and for workloads ranging from small single-machine applications to large Internet-facing applications with many concurrent users.

Component Object Model (COM) is a binary-interface technology for software components from Microsoft that enables using objects in a language-neutral way between different programming languages, programming contexts, processes and machines.

In multithreaded computer programming, asynchronous method invocation (AMI), also known as asynchronous method calls or the asynchronous pattern is a design pattern in which the call site is not blocked while waiting for the called code to finish. Instead, the calling thread is notified when the reply arrives. Polling for a reply is an undesired option.

DioneOS is a multitasking preemptive, real-time operating system (RTOS). The system is designed for microcontrollers, originally released on 2 February 2011 for the Texas Instruments TI MSP430x, and then on 29 March 2013 for the ARM Cortex-M3. Target microcontroller platforms have limited resources, i.e., system clock frequency of tens of MHz, and memory amounts of tens to a few hundred kilobytes (KB). The RTOS is adapted to such conditions by providing a compact and efficient image. The efficiency term here means minimizing further central processing unit (CPU) load caused by system use. According to this definition, the system is more effective when it consumes less CPU time to execute its internal parts, e.g., managing threads.

In computer programming, the async/await pattern is a syntactic feature of many programming languages that allows an asynchronous, non-blocking function to be structured in a way similar to an ordinary synchronous function. It is semantically related to the concept of a coroutine and is often implemented using similar techniques, and is primarily intended to provide opportunities for the program to execute other code while waiting for a long-running, asynchronous task to complete, usually represented by promises or similar data structures. The feature is found in C#, C++, Python, F#, Hack, Julia, Dart, Kotlin, Rust, Nim, JavaScript, and Swift.

The Java programming language's Java Collections Framework version 1.5 and later defines and implements the original regular single-threaded Maps, and also new thread-safe Maps implementing the java.util.concurrent.ConcurrentMap interface among other concurrent interfaces. In Java 1.6, the java.util.NavigableMap interface was added, extending java.util.SortedMap, and the java.util.concurrent.ConcurrentNavigableMap interface was added as a subinterface combination.