In computational complexity theory, PSPACE is the set of all decision problems that can be solved by a Turing machine using a polynomial amount of space.

In computational complexity theory, a decision problem is P-complete if it is in P and every problem in P can be reduced to it by an appropriate reduction.

In computational complexity theory, a polynomial-time reduction is a method for solving one problem using another. One shows that if a hypothetical subroutine solving the second problem exists, then the first problem can be solved by transforming or reducing it to inputs for the second problem and calling the subroutine one or more times. If both the time required to transform the first problem to the second, and the number of times the subroutine is called is polynomial, then the first problem is polynomial-time reducible to the second.

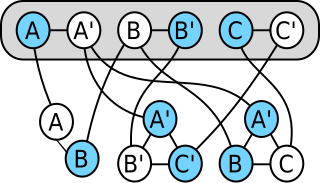

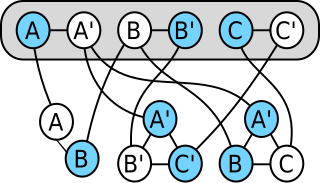

In computability theory and computational complexity theory, a many-one reduction is a reduction which converts instances of one decision problem into instances of a second decision problem where the instance reduced to is in the language if the initial instance was in its language and is not in the language if the initial instance was not in its language . Thus if we can decide whether instances are in the language , we can decide whether instances are in its language by applying the reduction and solving . Thus, reductions can be used to measure the relative computational difficulty of two problems. It is said that reduces to if, in layman's terms is harder to solve than . That is to say, any algorithm that solves can also be used as part of a program that solves .

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor.

In computational complexity theory, P, also known as PTIME or DTIME(nO ), is a fundamental complexity class. It contains all decision problems that can be solved by a deterministic Turing machine using a polynomial amount of computation time, or polynomial time.

In computability theory and computational complexity theory, a reduction is an algorithm for transforming one problem into another problem. A sufficiently efficient reduction from one problem to another may be used to show that the second problem is at least as difficult as the first.

In computational complexity theory, NL is the complexity class containing decision problems that can be solved by a nondeterministic Turing machine using a logarithmic amount of memory space.

In computational complexity theory, L is the complexity class containing decision problems that can be solved by a deterministic Turing machine using a logarithmic amount of writable memory space. Formally, the Turing machine has two tapes, one of which encodes the input and can only be read, whereas the other tape has logarithmic size but can be read as well as written. Logarithmic space is sufficient to hold a constant number of pointers into the input and a logarithmic number of boolean flags, and many basic logspace algorithms use the memory in this way.

In computational complexity theory, a log-space reduction is a reduction computable by a deterministic Turing machine using logarithmic space. Conceptually, this means it can keep a constant number of pointers into the input, along with a logarithmic number of fixed-size integers. It is possible that such a machine may not have space to write down its own output, so the only requirement is that any given bit of the output be computable in log-space. Formally, this reduction is executed via a log-space transducer.

In computational complexity theory, SL is the complexity class of problems log-space reducible to USTCON, which is the problem of determining whether there exists a path between two vertices in an undirected graph, otherwise described as the problem of determining whether two vertices are in the same connected component. This problem is also called the undirected reachability problem. It does not matter whether many-one reducibility or Turing reducibility is used. Although originally described in terms of symmetric Turing machines, that equivalent formulation is very complex, and the reducibility definition is what is used in practice.

Descriptive complexity is a branch of computational complexity theory and of finite model theory that characterizes complexity classes by the type of logic needed to express the languages in them. For example, PH, the union of all complexity classes in the polynomial hierarchy, is precisely the class of languages expressible by statements of second-order logic. This connection between complexity and the logic of finite structures allows results to be transferred easily from one area to the other, facilitating new proof methods and providing additional evidence that the main complexity classes are somehow "natural" and not tied to the specific abstract machines used to define them.

Finite model theory (FMT) is a subarea of model theory (MT). MT is the branch of logic which deals with the relation between a formal language (syntax) and its interpretations (semantics). FMT is a restriction of MT to interpretations on finite structures, which have a finite universe.

In computational complexity theory, the complement of a decision problem is the decision problem resulting from reversing the yes and no answers. Equivalently, if we define decision problems as sets of finite strings, then the complement of this set over some fixed domain is its complement problem.

In computational complexity theory, the Immerman–Szelepcsényi theorem states that nondeterministic space complexity classes are closed under complementation. It was proven independently by Neil Immerman and Róbert Szelepcsényi in 1987, for which they shared the 1995 Gödel Prize. In its general form the theorem states that NSPACE(s ) = co-NSPACE(s ) for any function s(n) ≥ log n. The result is equivalently stated as NL = co-NL; although this is the special case when s(n) = log n, it implies the general theorem by a standard padding argument. The result solved the second LBA problem.

In computer science, st-connectivity or STCON is a decision problem asking, for vertices s and t in a directed graph, if t is reachable from s.

In computational complexity theory, NL-complete is a complexity class containing the languages that are complete for NL, the class of decision problems that can be solved by a nondeterministic Turing machine using a logarithmic amount of memory space. The NL-complete languages are the most "difficult" or "expressive" problems in NL. If a method exists for solving any one of the NL-complete problems in logarithmic memory space, then NL = L.

In descriptive complexity, a branch of computational complexity, FO is a complexity class of structures that can be recognized by formulas of first-order logic, and also equals the complexity class AC0. Descriptive complexity uses the formalism of logic, but does not use several key notions associated with logic such as proof theory or axiomatization.

AC0 is a complexity class used in circuit complexity. It is the smallest class in the AC hierarchy, and consists of all families of circuits of depth O(1) and polynomial size, with unlimited-fanin AND gates and OR gates (we allow NOT gates only at the inputs). It thus contains NC0, which has only bounded-fanin AND and OR gates.

In descriptive complexity, a query is a mapping from structures of one signature to structures of another vocabulary. Neil Immerman, in his book Descriptive Complexity, "use[s] the concept of query as the fundamental paradigm of computation" (p. 17).