Related Research Articles

In software engineering and computer science, abstraction is the process of generalizing concrete details, such as attributes, away from the study of objects and systems to focus attention on details of greater importance. Abstraction is a fundamental concept in computer science and software engineering, especially within the object-oriented programming paradigm. Examples of this include:

Exokernel is an operating system kernel developed by the MIT Parallel and Distributed Operating Systems group, and also a class of similar operating systems.

A programming paradigm is a relatively high-level way to structure and conceptualize the implementation of a computer program. A programming language can be classified as supporting one or more paradigms.

In computing, dataflow is a broad concept, which has various meanings depending on the application and context. In the context of software architecture, data flow relates to stream processing or reactive programming.

An overlay network is a computer network that is layered on top of another network. The concept of overlay networking is distinct from the traditional model of OSI layered networks, and almost always assumes that the underlay network is an IP network of some kind.

SIGPLAN is the Association for Computing Machinery's Special Interest Group on programming languages.

In computer programming, flow-based programming (FBP) is a programming paradigm that defines applications as networks of black box processes, which exchange data across predefined connections by message passing, where the connections are specified externally to the processes. These black box processes can be reconnected endlessly to form different applications without having to be changed internally. FBP is thus naturally component-oriented.

End-user development (EUD) or end-user programming (EUP) refers to activities and tools that allow end-users – people who are not professional software developers – to program computers. People who are not professional developers can use EUD tools to create or modify software artifacts and complex data objects without significant knowledge of a programming language. In 2005 it was estimated that by 2012 there would be more than 55 million end-user developers in the United States, compared with fewer than 3 million professional programmers. Various EUD approaches exist, and it is an active research topic within the field of computer science and human-computer interaction. Examples include natural language programming, spreadsheets, scripting languages, visual programming, trigger-action programming and programming by example.

In computing, reactive programming is a declarative programming paradigm concerned with data streams and the propagation of change. With this paradigm, it is possible to express static or dynamic data streams with ease, and also communicate that an inferred dependency within the associated execution model exists, which facilitates the automatic propagation of the changed data flow.

In computing, network virtualization is the process of combining hardware and software network resources and network functionality into a single, software-based administrative entity, a virtual network. Network virtualization involves platform virtualization, often combined with resource virtualization.

The kernel is a computer program at the core of a computer's operating system and generally has complete control over everything in the system. The kernel is also responsible for preventing and mitigating conflicts between different processes. It is the portion of the operating system code that is always resident in memory and facilitates interactions between hardware and software components. A full kernel controls all hardware resources via device drivers, arbitrates conflicts between processes concerning such resources, and optimizes the utilization of common resources e.g. CPU & cache usage, file systems, and network sockets. On most systems, the kernel is one of the first programs loaded on startup. It handles the rest of startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

Distributed data flow refers to a set of events in a distributed application or protocol.

In computing, algorithmic skeletons, or parallelism patterns, are a high-level parallel programming model for parallel and distributed computing.

A communication protocol is a system of rules that allows two or more entities of a communications system to transmit information via any variation of a physical quantity. The protocol defines the rules, syntax, semantics, and synchronization of communication and possible error recovery methods. Protocols may be implemented by hardware, software, or a combination of both.

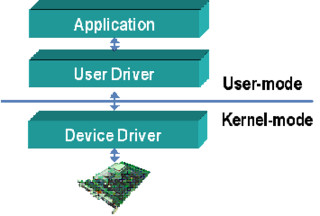

Device drivers are programs which allow software or higher-level computer programs to interact with a hardware device. These software components act as a link between the devices and the operating systems, communicating with each of these systems and executing commands. They provide an abstraction layer for the software above and also mediate the communication between the operating system kernel and the devices below.

Software-defined networking (SDN) is an approach to network management that enables dynamic and programmatically efficient network configuration to improve network performance and monitoring in a manner more akin to cloud computing than to traditional network management. SDN is meant to improve the static architecture of traditional networks and may be employed to centralize network intelligence in one network component by disassociating the forwarding process of network packets from the routing process. The control plane consists of one or more controllers, which are considered the brains of the SDN network, where the whole intelligence is incorporated. However, centralization has certain drawbacks related to security, scalability and elasticity.

Jennifer Rexford is an American computer scientist who is currently the Provost, Gordon Y. S. Wu Professor in Engineering, Professor of Computer Science, and formerly the Chair of the Department of Computer Science at Princeton University. Her research focuses on analysis of computer networks, and in particular network routing, performance measurement, and network management.

Apache Kafka is a distributed event store and stream-processing platform. It is an open-source system developed by the Apache Software Foundation written in Java and Scala. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Kafka can connect to external systems via Kafka Connect, and provides the Kafka Streams libraries for stream processing applications. Kafka uses a binary TCP-based protocol that is optimized for efficiency and relies on a "message set" abstraction that naturally groups messages together to reduce the overhead of the network roundtrip. This "leads to larger network packets, larger sequential disk operations, contiguous memory blocks [...] which allows Kafka to turn a bursty stream of random message writes into linear writes."

Michael J. Freedman is an American computer scientist who is the Robert E. Kahn Professor of Computer Science at Princeton University, where he works on distributed systems, networking, and security. He is also the cofounder of database company Timescale.

Multitier programming is a programming paradigm for distributed software, which typically follows a multitier architecture, physically separating different functional aspects of the software into different tiers. Multitier programming allows functionalities that span multiple of such tiers to be developed in a single compilation unit using a single programming language. Without multitier programming, tiers are developed using different languages, e.g., JavaScript for the Web client, PHP for the Web server and SQL for the database. Multitier programming is often integrated into general-purpose languages by extending them with support for distribution.

References

- ↑ Voellmy, Andreas; et al. (July 10, 2010). "Don't Configure the Network, Program It" (PDF). cs.yale.edu. Retrieved February 22, 2011.

- ↑ Voellmy, Andreas; Hudak, Paul (2011). "Nettle: Taking the Sting Out of Programming Network Routers". Practical Aspects of Declarative Languages. Lecture Notes in Computer Science. 6359/2011: 235–249. doi:10.1007/978-3-642-18378-2_19. ISBN 978-3-642-18377-5.