In particle physics, the electroweak interaction is the unified description of two of the four known fundamental interactions of nature: electromagnetism and the weak interaction. Although these two forces appear very different at everyday low energies, the theory models them as two different aspects of the same force. Above the unification energy, on the order of 246 GeV, they would merge into a single electroweak force. Thus, if the universe is hot enough (approximately 10^15 K, a temperature not exceeded since shortly after the Big Bang), then the electromagnetic force and weak force merge into a combined electroweak force. During the quark epoch, the electroweak force split into the electromagnetic and weak force.

In algebra and algebraic geometry, the spectrum of a commutative ring R, denoted by Spec R, is the set of all prime ideals of R. It is commonly augmented with the Zariski topology and with a structure sheaf, turning it into a locally ringed space. A locally ringed space of this form is called an affine scheme.

Loop quantum gravity (LQG) is a theory of quantum gravity, merging quantum mechanics and general relativity, making it a possible candidate for a theory of everything. Its goal is to unify gravity in a common theoretical framework with the other three fundamental forces of nature, beginning with relativity and adding quantum features. It competes with string theory, which begins with quantum field theory and adds gravity.

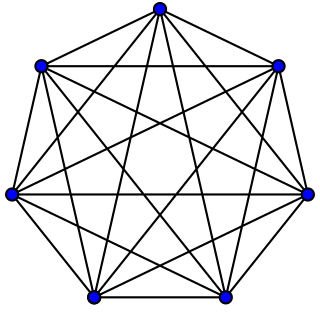

In mathematics, a hypergraph is a generalization of a graph in which an edge can join any number of vertices. Formally, a hypergraph is a pair (X, E) where X is a set of elements called nodes or vertices, and E is a set of non-empty subsets of X called hyperedges or edges. Therefore, E is a subset of P(X) \ {∅}, where P(X) is the power set of X.
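The formal definition can be checked directly on a small example. The following is a minimal sketch, assuming a hypergraph is stored as a vertex set plus a set of frozensets; the names `power_set` and `is_hypergraph` and the sample data are illustrative, not standard.

```python
from itertools import chain, combinations


def power_set(vertices):
    """Return every subset of `vertices` as a frozenset."""
    items = list(vertices)
    subsets = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return {frozenset(s) for s in subsets}


def is_hypergraph(vertices, edges):
    """Check that every edge is a non-empty subset of the vertex set."""
    return all(len(edge) > 0 and edge <= vertices for edge in edges)


# Hypothetical example: four vertices, three hyperedges of sizes 3, 2 and 1.
X = frozenset({1, 2, 3, 4})
E = {frozenset({1, 2, 3}), frozenset({3, 4}), frozenset({2})}

print(is_hypergraph(X, E))
# E is a subset of the power set of X with the empty set removed.
print(E <= power_set(X) - {frozenset()})
```

An ordinary graph is the special case in which every hyperedge has exactly two elements.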

In mathematics, K-theory is, roughly speaking, the study of a ring generated by vector bundles over a topological space or scheme. In algebraic topology, it is a cohomology theory known as topological K-theory. In algebra and algebraic geometry, it is referred to as algebraic K-theory. It is also a fundamental tool in the field of operator algebras. It can be seen as the study of certain kinds of invariants of large matrices.

In the calculus of variations, the Euler–Lagrange equation, Euler's equation, or Lagrange's equation, is a second-order differential equation (in general an ordinary differential equation for functions of one variable and a partial differential equation for functions of several variables) whose solutions are the functions for which a given functional is stationary. It was developed by Swiss mathematician Leonhard Euler and Italian mathematician Joseph-Louis Lagrange in the 1750s.
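For concreteness, the equation can be written out in the common one-variable setting. This is a sketch under an assumption not fixed by the text above: the functional has the form S[q] = ∫ L(t, q(t), q'(t)) dt over a fixed interval [a, b], where the symbols S, L, q, a and b are chosen here for illustration.

```latex
S[q] = \int_a^b L\bigl(t, q(t), q'(t)\bigr)\,dt,
\qquad
\frac{\partial L}{\partial q} - \frac{d}{dt}\,\frac{\partial L}{\partial q'} = 0 .
```

As a worked example, taking L = (1/2) m q'^2 − V(q) gives ∂L/∂q = −V'(q) and d/dt (∂L/∂q') = m q'', so the equation reduces to m q'' = −V'(q), which is second order in q.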

In mathematics, the Grassmannian Gr(k, V) is a space which parametrizes all k-dimensional linear subspaces of the n-dimensional vector space V. For example, the Grassmannian Gr(1, V) is the space of lines through the origin in V, so it is the same as the projective space of one dimension lower than V.

In mathematical statistics, the Kullback–Leibler divergence is a measure of how one probability distribution is different from a second, reference probability distribution. Applications include characterizing the relative (Shannon) entropy in information systems, randomness in continuous time-series, and information gain when comparing statistical models of inference. In contrast to variation of information, it is a distribution-wise asymmetric measure and thus does not qualify as a statistical metric of spread. In the simple case, a Kullback–Leibler divergence of 0 indicates that the two distributions in question are identical. In simplified terms, it is a measure of surprise, with diverse applications such as applied statistics, fluid mechanics, neuroscience and machine learning.
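The asymmetry and the zero-divergence case can be seen on a small discrete example. The following is a minimal sketch, assuming finite distributions given as lists of probabilities and the natural logarithm (so results are in nats); the function name `kl_divergence` and the sample distributions are illustrative.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) in nats for discrete distributions given as lists.

    Terms with p_i == 0 contribute nothing; if q_i == 0 while p_i > 0,
    the divergence is infinite.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue
        if qi == 0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl_divergence(p, p))  # identical distributions
print(kl_divergence(p, q))  # P measured against reference Q
print(kl_divergence(q, p))  # Q measured against reference P
```

The last two values differ, which is why the divergence is not a metric.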

In mathematical statistics, the Fisher information is a way of measuring the amount of information that an observable random variable X carries about an unknown parameter θ of a distribution that models X. Formally, it is the variance of the score, or the expected value of the observed information. In Bayesian statistics, the asymptotic distribution of the posterior mode depends on the Fisher information and not on the prior. The role of the Fisher information in the asymptotic theory of maximum-likelihood estimation was emphasized by the statistician Ronald Fisher. The Fisher information is also used in the calculation of the Jeffreys prior, which is used in Bayesian statistics.
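The "variance of the score" definition can be evaluated exactly for a simple model. The following is a minimal sketch, assuming a single Bernoulli(θ) observation (a model chosen here for illustration, not taken from the text above); the function names are hypothetical.

```python
def bernoulli_score(x, theta):
    """Derivative in theta of log p(x | theta) for x in {0, 1}."""
    return x / theta - (1 - x) / (1 - theta)


def fisher_information_bernoulli(theta):
    """Variance of the score, computed by summing over both outcomes."""
    probs = {1: theta, 0: 1 - theta}
    mean = sum(p * bernoulli_score(x, theta) for x, p in probs.items())
    return sum(
        p * (bernoulli_score(x, theta) - mean) ** 2 for x, p in probs.items()
    )


theta = 0.3
print(fisher_information_bernoulli(theta))
print(1 / (theta * (1 - theta)))  # closed form for comparison
```

Both lines should agree up to rounding: for this model the variance of the score simplifies to 1 / (θ(1 − θ)), so observations are most informative when θ is near 0 or 1.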

In mathematics, especially in algebraic geometry and the theory of complex manifolds, coherent sheaves are a class of sheaves closely linked to the geometric properties of the underlying space. The definition of coherent sheaves is made with reference to a sheaf of rings that codifies this geometric information.

In mathematics and computer algebra, automatic differentiation (AD), also called algorithmic differentiation or computational differentiation, is a set of techniques to numerically evaluate the derivative of a function specified by a computer program. AD exploits the fact that every computer program, no matter how complicated, executes a sequence of elementary arithmetic operations and elementary functions. By applying the chain rule repeatedly to these operations, derivatives of arbitrary order can be computed automatically, accurately to working precision, and using at most a small constant factor more arithmetic operations than the original program.
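Repeated application of the chain rule to elementary operations can be sketched with dual numbers, which is one way to realize forward-mode AD. The example below is a minimal illustration under stated assumptions: it handles only addition, multiplication and sine, computes first derivatives only, and the names `Dual`, `sin` and `derivative` are chosen here rather than taken from any particular library.

```python
import math


class Dual:
    """A value paired with its derivative with respect to one input."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(
            self.value * other.value,
            self.deriv * other.value + self.value * other.deriv,
        )

    __rmul__ = __mul__


def sin(x):
    """Elementary function with its chain rule: sin(u)' = cos(u) * u'."""
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)


def derivative(f, x):
    """Evaluate f'(x) by seeding the input with derivative 1."""
    return f(Dual(x, 1.0)).deriv


def f(x):
    return x * x * x + 2 * x + sin(x)


print(derivative(f, 1.5))
print(3 * 1.5 ** 2 + 2 + math.cos(1.5))  # hand-derived f'(1.5)
```

The derivative is carried alongside the value through every operation, so no symbolic expression is built and no finite-difference step size is needed.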

In linear algebra, a circulant matrix is a special kind of Toeplitz matrix where each row vector is rotated one element to the right relative to the preceding row vector. In numerical analysis, circulant matrices are important because they are diagonalized by a discrete Fourier transform, and hence linear equations that contain them may be quickly solved using a fast Fourier transform. They can be interpreted analytically as the integral kernel of a convolution operator on the cyclic group and hence frequently appear in formal descriptions of spatially invariant linear operations.
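Diagonalization by the discrete Fourier transform gives a direct way to solve a circulant system. The following is a minimal sketch, assuming real inputs and an invertible matrix; for brevity it uses a naive O(n^2) transform where a fast Fourier transform would be used in practice, and the function names are illustrative.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (an FFT would replace this)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[j] * cmath.exp(sign * 2j * cmath.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]
    return [v / n for v in out] if inverse else out


def circulant(first_row):
    """Build the matrix whose rows are successive right rotations."""
    n = len(first_row)
    return [[first_row[(j - i) % n] for j in range(n)] for i in range(n)]


def solve_circulant(first_row, b):
    """Solve C x = b, where C = circulant(first_row), in Fourier space."""
    n = len(first_row)
    # C x is the circular convolution of the first column of C with x.
    first_col = [first_row[(-i) % n] for i in range(n)]
    eigenvalues = dft(first_col)
    b_hat = dft(b)
    x_hat = [bh / lam for bh, lam in zip(b_hat, eigenvalues)]
    # Real inputs are assumed, so the imaginary parts are rounding noise.
    return [v.real for v in dft(x_hat, inverse=True)]


row = [4, 1, 0, 1]
b = [10, 12, 18, 20]
x = solve_circulant(row, b)
print([round(v, 9) for v in x])

# Cross-check by multiplying back with the explicit matrix.
C = circulant(row)
print([round(sum(C[i][j] * x[j] for j in range(4)), 9) for i in range(4)])
```

The division step is where the diagonal structure is used: each Fourier coefficient of the solution is obtained independently of the others.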

In machine learning, kernel methods are a class of algorithms for pattern analysis, whose best-known member is the support vector machine (SVM). The general task of pattern analysis is to find and study general types of relations in datasets. In its simplest form, the kernel trick means implicitly mapping data into a higher-dimensional space in which the classes are separated by a clear margin. For many algorithms that solve these tasks, the data in raw representation have to be explicitly transformed into feature vector representations via a user-specified feature map; in contrast, kernel methods require only a user-specified kernel, i.e., a similarity function over pairs of data points in raw representation.
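The difference between an explicit feature map and a kernel can be illustrated with a small case. The following is a minimal sketch, assuming two-dimensional inputs and the homogeneous degree-2 polynomial kernel k(x, y) = (x · y)^2, chosen here only as an example; the function names are hypothetical.

```python
import math


def dot(a, b):
    """Ordinary inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))


def feature_map(x):
    """Explicit map from 2-D input to the 3-D feature space of the kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly2_kernel(x, y):
    """Same inner product, computed without leaving the input space."""
    return dot(x, y) ** 2


x = (1.0, 2.0)
y = (3.0, 4.0)

print(dot(feature_map(x), feature_map(y)))  # explicit feature vectors
print(poly2_kernel(x, y))                   # kernel only
```

Both computations give the same similarity value up to rounding, but the kernel version never constructs the feature vectors, which matters when the feature space is very high-dimensional.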

In graph theory, the hypercube graph Q_n is the graph formed from the vertices and edges of an n-dimensional hypercube. For instance, the cubical graph Q_3 is the graph formed by the 8 vertices and 12 edges of a three-dimensional cube. Q_n has 2^n vertices, 2^(n−1)·n edges, and is a regular graph with n edges touching each vertex.
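The vertex count, edge count and regularity can be verified by direct construction. The following is a minimal sketch, assuming vertices are labeled by the integers 0 to 2^n − 1 and joined when their binary representations differ in exactly one bit; the function name `hypercube_graph` is illustrative.

```python
def hypercube_graph(n):
    """Return (vertices, edges) of the n-dimensional hypercube graph."""
    vertices = list(range(2 ** n))
    edges = set()
    for v in vertices:
        for bit in range(n):
            w = v ^ (1 << bit)  # flip one bit to reach a neighbor
            if v < w:           # store each undirected edge once
                edges.add((v, w))
    return vertices, edges


vertices, edges = hypercube_graph(3)
print(len(vertices), len(edges))  # 8 12

# Degree of each vertex in the cubical graph.
degree = {v: 0 for v in vertices}
for a, b in edges:
    degree[a] += 1
    degree[b] += 1
print(set(degree.values()))  # {3}
```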

The Hadamard code is an error-correcting code, named after the French mathematician Jacques Hadamard, that is used for error detection and correction when transmitting messages over very noisy or unreliable channels. In 1971, the code was used to transmit photos of Mars back to Earth from the NASA space probe Mariner 9. Because of its unique mathematical properties, the Hadamard code is not only used by engineers, but also intensely studied in coding theory, mathematics, and theoretical computer science. It is also known under the names Walsh code, Walsh family, and Walsh–Hadamard code in recognition of the American mathematician Joseph Leonard Walsh.
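The error-correcting behavior can be illustrated with the standard construction in which a k-bit message x is encoded as the list of inner products of x with every k-bit string y, taken modulo 2. The following is a minimal sketch under that assumption, with brute-force nearest-codeword decoding chosen for clarity rather than efficiency; the function names are illustrative.

```python
def hadamard_encode(message, k):
    """Encode a k-bit integer as 2**k bits: <message, y> mod 2 for every y."""
    return [bin(message & y).count("1") % 2 for y in range(2 ** k)]


def hamming_distance(a, b):
    """Number of positions in which two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def hadamard_decode(received, k):
    """Return the message whose codeword is closest to `received`."""
    return min(
        range(2 ** k),
        key=lambda m: hamming_distance(hadamard_encode(m, k), received),
    )


k = 3
codeword = hadamard_encode(5, k)
print(codeword)

# Any two distinct codewords differ in exactly half of the positions.
distances = {
    hamming_distance(hadamard_encode(a, k), hadamard_encode(b, k))
    for a in range(2 ** k)
    for b in range(a + 1, 2 ** k)
}
print(distances)  # {4}

# A single flipped bit is corrected by nearest-codeword decoding.
corrupted = codeword.copy()
corrupted[2] ^= 1
print(hadamard_decode(corrupted, k))  # 5
```

The price of this robustness is a very low rate: k message bits are expanded to 2^k transmitted bits.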

In mathematics and physics, a quantum graph is a linear, network-shaped structure of vertices connected by edges in which each edge is given a length and where a differential equation is posed on each edge. An example would be a power network consisting of power lines (edges) connected at transformer stations (vertices); the differential equations would then describe the voltage along each of the lines, with boundary conditions for each edge provided at the adjacent vertices ensuring that the current added over all edges adds to zero at each vertex.

In mathematics, trace diagrams are a graphical means of performing computations in linear and multilinear algebra. They can be represented as graphs in which some edges are labeled by matrices. The simplest trace diagrams represent the trace and determinant of a matrix. Several results in linear algebra, such as Cramer's Rule and the Cayley–Hamilton theorem, have simple diagrammatic proofs. They are closely related to Penrose's graphical notation.

A coherent algebra is an algebra of complex square matrices that is closed under ordinary matrix multiplication, Schur product, transposition, and contains both the identity matrix I and the all-ones matrix J.