Digital video is an electronic representation of moving visual images (video) in the form of encoded digital data. This is in contrast to analog video, which represents moving visual images in the form of analog signals. Digital video comprises a series of digital images displayed in rapid succession.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

Quality of service (QoS) is the description or measurement of the overall performance of a service, such as a telephony or computer network or a cloud computing service, particularly the performance seen by the users of the network. To quantitatively measure quality of service, several related aspects of the network service are often considered, such as packet loss, bit rate, throughput, transmission delay, availability, jitter, etc.

The Real-time Transport Protocol (RTP) is a network protocol for delivering audio and video over IP networks. RTP is used in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications including WebRTC, television services and web-based push-to-talk features.

Time-division multiplexing (TDM) is a method of transmitting and receiving independent signals over a common signal path by means of synchronized switches at each end of the transmission line so that each signal appears on the line only a fraction of time in an alternating pattern. This method transmits two or more digital signals or analog signals over a common channel. It can be used when the bit rate of the transmission medium exceeds that of the signal to be transmitted. This form of signal multiplexing was developed in telecommunications for telegraphy systems in the late 19th century, but found its most common application in digital telephony in the second half of the 20th century.

In telecommunications and computer networking, a network packet is a formatted unit of data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the payload. Control information provides data for delivering the payload. Typically, control information is found in packet headers and trailers.

IEEE 802.11e-2005 or 802.11e is an approved amendment to the IEEE 802.11 standard that defines a set of quality of service (QoS) enhancements for wireless LAN applications through modifications to the media access control (MAC) layer. The standard is considered of critical importance for delay-sensitive applications, such as Voice over Wireless LAN and streaming multimedia. The amendment has been incorporated into the published IEEE 802.11-2007 standard.

Advanced Television Systems Committee (ATSC) standards are an American set of standards for digital television transmission over terrestrial, cable and satellite networks. It is largely a replacement for the analog NTSC standard and, like that standard, is used mostly in the United States, Mexico, Canada, and South Korea. Several former NTSC users, in particular Japan, have not used ATSC during their digital television transition, because they adopted their own system called ISDB.

Digital Video Broadcasting - Satellite - Second Generation (DVB-S2) is a digital television broadcast standard that has been designed as a successor for the popular DVB-S system. It was developed in 2003 by the Digital Video Broadcasting Project, an international industry consortium, and ratified by ETSI in March 2005. The standard is based on, and improves upon DVB-S and the electronic news-gathering system, used by mobile units for sending sounds and images from remote locations worldwide back to their home television stations.

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Study Group 16 Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

MPEG transport stream or simply transport stream (TS) is a standard digital container format for transmission and storage of audio, video, and Program and System Information Protocol (PSIP) data. It is used in broadcast systems such as DVB, ATSC and IPTV.

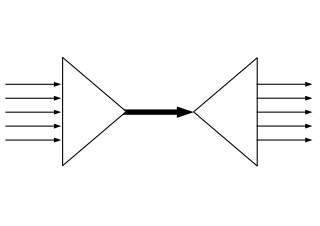

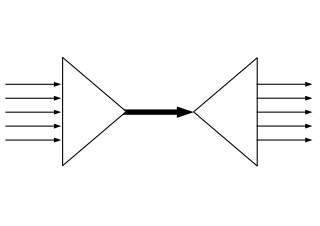

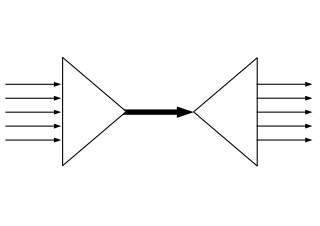

Statistical multiplexing is a type of communication link sharing, very similar to dynamic bandwidth allocation (DBA). In statistical multiplexing, a communication channel is divided into an arbitrary number of variable bitrate digital channels or data streams. The link sharing is adapted to the instantaneous traffic demands of the data streams that are transferred over each channel. This is an alternative to creating a fixed sharing of a link, such as in general time division multiplexing (TDM) and frequency division multiplexing (FDM). When performed correctly, statistical multiplexing can provide a link utilization improvement, called the statistical multiplexing gain.

Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is either caused by errors in data transmission, typically across wireless networks, or network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.

An elementary stream (ES) as defined by the MPEG communication protocol is usually the output of an audio encoder or video encoder. An ES contains only one kind of data. An elementary stream is often referred to as "elementary", "data", "audio", or "video" bitstreams or streams. The format of the elementary stream depends upon the codec or data carried in the stream, but will often carry a common header when packetized into a packetized elementary stream.

In video coding, a group of pictures, or GOP structure, specifies the order in which intra- and inter-frames are arranged. The GOP is a collection of successive pictures within a coded video stream. Each coded video stream consists of successive GOPs, from which the visible frames are generated. Encountering a new GOP in a compressed video stream means that the decoder doesn't need any previous frames in order to decode the next ones, and allows fast seeking through the video.

ATSC-M/H is a U.S. standard for mobile digital TV that allows TV broadcasts to be received by mobile devices.

The zap time is the total duration of time from which the viewer changes the channel using a remote control to the point that the picture of the new channel is displayed. This includes the corresponding audio. These delays exist in all television systems, but they are more pronounced in digital television and systems that use the internet such as IPTV. Human interaction with the system is completely ignored in these measurements, so zap time is not the same as channel surfing.

Time-Sensitive Networking (TSN) is a set of standards under development by the Time-Sensitive Networking task group of the IEEE 802.1 working group. The TSN task group was formed in November 2012 by renaming the existing Audio Video Bridging Task Group and continuing its work. The name changed as a result of the extension of the working area of the standardization group. The standards define mechanisms for the time-sensitive transmission of data over deterministic Ethernet networks.

Deterministic Networking (DetNet) is an effort by the IETF DetNet Working Group to study implementation of deterministic data paths for real-time applications with extremely low data loss rates, packet delay variation (jitter), and bounded latency, such as audio and video streaming, industrial automation, and vehicle control.