Digital compositing is the process of digitally assembling multiple images to make a final image, typically for print, motion pictures or screen display. It is the digital analogue of optical film compositing.

2D computer graphics is the computer-based generation of digital images—mostly from two-dimensional models and by techniques specific to them. It may refer to the branch of computer science that comprises such techniques or to the models themselves.

In physics, the Navier–Stokes equations are a set of partial differential equations which describe the motion of viscous fluid substances, named after French engineer and physicist Claude-Louis Navier and Anglo-Irish physicist and mathematician George Gabriel Stokes.
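As an illustration, one commonly quoted special case is the incompressible form for a Newtonian fluid of constant density. The symbols are assumptions introduced here rather than taken from the text above: velocity field $\mathbf{u}$, pressure $p$, density $\rho$, dynamic viscosity $\mu$, and body force per unit volume $\mathbf{f}$.

$$
\rho\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u}\right) = -\nabla p + \mu\,\nabla^{2}\mathbf{u} + \mathbf{f},
\qquad
\nabla\cdot\mathbf{u} = 0
$$

The first equation is a momentum balance and the second expresses conservation of mass for an incompressible flow.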

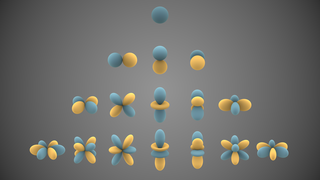

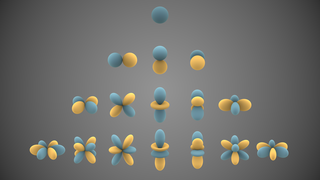

In mathematics and physical science, spherical harmonics are special functions defined on the surface of a sphere. They are often employed in solving partial differential equations in many scientific fields.

Fourier optics is the study of classical optics using Fourier transforms (FTs), in which the waveform being considered is regarded as made up of a combination, or superposition, of plane waves. It has some parallels to the Huygens–Fresnel principle, in which the wavefront is regarded as being made up of a combination of spherical wavefronts whose sum is the wavefront being studied. A key difference is that Fourier optics considers the plane waves to be natural modes of the propagation medium, as opposed to Huygens–Fresnel, where the spherical waves originate in the physical medium.

A 3D projection is a design technique used to display a three-dimensional (3D) object on a two-dimensional (2D) surface. These projections rely on visual perspective and aspect analysis to project a complex object onto a simpler plane for viewing. The extension of 2D geometry to 3D is attributed to Heron of Alexandria in the first century, who has been called the father of 3D. 3D projection also underlies computer graphics techniques that simulate fluid flows to imitate realistic effects; Lucasfilm's ILM group is credited with introducing the concept.
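A minimal sketch of one kind of 3D projection, the perspective (pinhole) projection, is given below. It assumes a camera at the origin looking along the positive z axis with the image plane at `z = focal_length`. The function name `project_point` and its parameters are hypothetical, chosen only for illustration.

```python
def project_point(point, focal_length=1.0):
    """Perspective-project a 3D point (x, y, z) onto the plane z = focal_length.

    Assumes a pinhole camera at the origin looking down the +z axis.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    scale = focal_length / z  # similar triangles: farther points shrink
    return (x * scale, y * scale)


# The eight corners of a cube centred 5 units in front of the camera.
cube = [(x, y, z) for x in (-1.0, 1.0) for y in (-1.0, 1.0) for z in (4.0, 6.0)]
projected = [project_point(p) for p in cube]

for corner, image in zip(cube, projected):
    print(corner, "->", image)
```

Corners on the near face (z = 4) land farther from the image centre than those on the far face (z = 6), which is the foreshortening that gives a perspective drawing its sense of depth.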

In physics, mathematics and statistics, scale invariance is a feature of objects or laws that do not change if scales of length, energy, or other variables, are multiplied by a common factor, and thus represent a universality.

In mathematics, a compact (topological) group is a topological group whose topology is compact. Compact groups are a natural generalization of finite groups with the discrete topology and have properties that carry over in significant fashion. Compact groups have a well-understood theory, in relation to group actions and representation theory.

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information.
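For concreteness, a finite mixture distribution with $K$ components can be written as follows. The notation is an assumption introduced here: $w_k$ for the mixing weights and $p_k$ for the component densities.

$$
p(x) = \sum_{k=1}^{K} w_k\, p_k(x), \qquad w_k \ge 0, \qquad \sum_{k=1}^{K} w_k = 1
$$

Inference in a mixture model then amounts to estimating the $w_k$ and the parameters of each $p_k$ from samples of $p(x)$ alone, without knowing which component produced each observation.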

In geometry, the notion of line or straight line was introduced by ancient mathematicians to represent straight objects with negligible width and depth. Lines are an idealization of such objects, which are often described in terms of two points or referred to using a single letter.

In mathematics, a Killing vector field, named after Wilhelm Killing, is a vector field on a Riemannian manifold that preserves the metric. Killing fields are the infinitesimal generators of isometries; that is, flows generated by Killing fields are continuous isometries of the manifold. More simply, the flow generates a symmetry, in the sense that moving each point on an object the same distance in the direction of the Killing vector will not distort distances on the object.
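In local coordinates, the condition that a vector field $X$ preserves the metric $g$ is usually stated as the Killing equation. The index notation and the Levi-Civita connection $\nabla$ are the standard conventions, assumed here rather than taken from the text above.

$$
\mathcal{L}_X g = 0
\quad\Longleftrightarrow\quad
\nabla_\mu X_\nu + \nabla_\nu X_\mu = 0
$$

For example, on the Euclidean plane the rotation field $X = -y\,\partial_x + x\,\partial_y$ satisfies this equation, and its flow consists of rotations about the origin, which are isometries.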

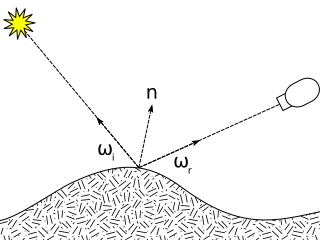

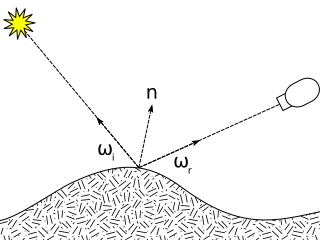

The bidirectional reflectance distribution function (BRDF) is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, $\omega_i$, and an outgoing direction, $\omega_r$, and returns the ratio of reflected radiance exiting along $\omega_r$ to the irradiance incident on the surface from direction $\omega_i$. Each direction is itself parameterized by an azimuth angle $\phi$ and a zenith angle $\theta$, so the BRDF as a whole is a function of four variables. The BRDF has units of $\mathrm{sr}^{-1}$, with steradians (sr) being a unit of solid angle.
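Written as a formula, the ratio described above takes the following standard form. The symbols are assumptions introduced here: $\omega_i$ and $\omega_r$ for the incoming and outgoing directions, $L_r$ for reflected radiance, $E_i$ for irradiance, $L_i$ for incident radiance, and $\theta_i$ for the angle between $\omega_i$ and the surface normal.

$$
f_r(\omega_i, \omega_r)
= \frac{\mathrm{d}L_r(\omega_r)}{\mathrm{d}E_i(\omega_i)}
= \frac{\mathrm{d}L_r(\omega_r)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}
$$

As a simple instance, an ideal diffuse (Lambertian) surface with albedo $\rho$ has the constant BRDF $f_r = \rho/\pi$, independent of both directions.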

In probability theory and statistics, the characteristic function of any real-valued random variable completely defines its probability distribution. If a random variable admits a probability density function, then the characteristic function is the Fourier transform of the probability density function. Thus it provides an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the characteristic functions of distributions defined by the weighted sums of random variables.
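The characteristic function of a random variable $X$ is $\varphi_X(t) = \mathbb{E}[e^{itX}]$. The sketch below estimates it from samples and compares the estimate with the known closed form $e^{-t^2/2}$ for a standard normal variable. The helper name `empirical_cf`, the sample size, and the seed are illustrative assumptions.

```python
import cmath
import math
import random


def empirical_cf(samples, t):
    """Estimate E[exp(i * t * X)] by averaging over the samples."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [rng.gauss(0.0, 1.0) for _ in range(20000)]

for t in (0.0, 0.5, 1.0, 2.0):
    estimate = empirical_cf(samples, t)
    exact = math.exp(-t * t / 2.0)  # characteristic function of N(0, 1)
    print(f"t={t:.1f}  estimate={estimate.real:+.4f}{estimate.imag:+.4f}j  exact={exact:.4f}")
```

The imaginary part of the estimate stays close to zero because the standard normal distribution is symmetric about the origin.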

This branch of computer science research aims to create new views of a specific subject, starting from a number of pictures taken from given points of view.

Modeling photon propagation with Monte Carlo methods is a flexible yet rigorous approach to simulating photon transport. In the method, local rules of photon transport are expressed as probability distributions which describe the step size of photon movement between sites of photon-tissue interaction and the angles of deflection in a photon's trajectory when a scattering event occurs. This is equivalent to modeling photon transport analytically by the radiative transfer equation (RTE), which describes the motion of photons using a differential equation. However, closed-form solutions of the RTE are often not possible; for some geometries, the diffusion approximation can be used to simplify the RTE, although this, in turn, introduces many inaccuracies, especially near sources and boundaries. In contrast, Monte Carlo simulations can be made arbitrarily accurate by increasing the number of photons traced. For example, a Monte Carlo simulation of a pencil beam incident on a semi-infinite medium models both the initial ballistic photon flow and the later diffuse propagation.
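The two sampling rules mentioned above can be sketched as follows. This is a simplified illustration, not a full photon-transport simulation. It assumes exponentially distributed free paths governed by a total interaction coefficient `mu_t`, and isotropic scattering, whereas tissue models usually use an anisotropic phase function. All names and numeric values are hypothetical.

```python
import math
import random


def sample_step(mu_t, rng):
    """Draw a free path length with mean 1 / mu_t (exponential law)."""
    xi = 1.0 - rng.random()  # lies in (0, 1], so log(xi) is always finite
    return -math.log(xi) / mu_t


def sample_isotropic_cos_theta(rng):
    """Draw the cosine of the deflection angle for isotropic scattering."""
    return 2.0 * rng.random() - 1.0  # uniform on [-1, 1)


rng = random.Random(1)  # fixed seed so the run is repeatable
mu_t = 10.0             # assumed interaction coefficient, in 1/cm

steps = [sample_step(mu_t, rng) for _ in range(50000)]
cosines = [sample_isotropic_cos_theta(rng) for _ in range(50000)]

mean_step = sum(steps) / len(steps)
mean_cos = sum(cosines) / len(cosines)
print(f"mean step   = {mean_step:.4f} cm (expected about {1.0 / mu_t:.4f})")
print(f"mean cosine = {mean_cos:+.4f} (expected about 0 for isotropic scattering)")
```

Increasing the number of samples tightens both averages around their expected values, which mirrors how tracing more photons improves the accuracy of a full simulation.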

The image segmentation problem is concerned with partitioning an image into multiple regions according to some homogeneity criterion. This article is primarily concerned with graph-theoretic approaches to image segmentation that apply graph partitioning via minimum cut or maximum cut. Segmentation-based object categorization can be viewed as a specific case of spectral clustering applied to image segmentation.

Geographical distance is the distance measured along the surface of the Earth. The formulae in this article calculate distances between points defined by geographical coordinates in terms of latitude and longitude. This distance is an element in solving the second (inverse) geodetic problem.
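One widely used formula of this kind is the haversine formula, sketched below. It assumes a spherical Earth with a mean radius of about 6371 km, so it is only an approximation to distances on the real, slightly flattened Earth. The function name `haversine_km` and the constant name are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius; spherical-Earth assumption


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2.0) ** 2)
    a = min(1.0, a)  # guard against rounding slightly above 1
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# A quarter of the equator: from (0 N, 0 E) to (0 N, 90 E).
quarter = haversine_km(0.0, 0.0, 0.0, 90.0)
print(f"{quarter:.1f} km")
```

The example covers a quarter of a great circle, so the result equals $\pi/2$ times the assumed radius.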

2D to 3D video conversion is the process of transforming 2D ("flat") film to 3D form, which in almost all cases is stereo, so it is the process of creating imagery for each eye from one 2D image.

In probability theory and directional statistics, a wrapped exponential distribution is a wrapped probability distribution that results from the "wrapping" of the exponential distribution around the unit circle.
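The wrapping construction can be sketched directly: draw an exponential variate and reduce it modulo $2\pi$. For rate $\lambda$, the resulting density on $[0, 2\pi)$ is $\lambda e^{-\lambda\theta} / (1 - e^{-2\pi\lambda})$. The function names, the rate value, and the seed below are illustrative assumptions.

```python
import math
import random

TWO_PI = 2.0 * math.pi


def wrapped_exp_pdf(theta, lam):
    """Density of the wrapped exponential on [0, 2*pi)."""
    return lam * math.exp(-lam * theta) / (1.0 - math.exp(-TWO_PI * lam))


def wrapped_exp_cdf(theta, lam):
    """Cumulative distribution function on [0, 2*pi)."""
    return (1.0 - math.exp(-lam * theta)) / (1.0 - math.exp(-TWO_PI * lam))


def sample_wrapped_exp(lam, rng):
    """Draw an exponential variate and wrap it around the unit circle."""
    return rng.expovariate(lam) % TWO_PI


rng = random.Random(2)  # fixed seed so the run is repeatable
lam = 0.5
angles = [sample_wrapped_exp(lam, rng) for _ in range(20000)]

# Compare the fraction of samples in [0, pi) with the closed-form CDF.
fraction = sum(1 for a in angles if a < math.pi) / len(angles)
print(f"empirical P(theta < pi) = {fraction:.4f}")
print(f"closed-form CDF at pi   = {wrapped_exp_cdf(math.pi, lam):.4f}")
```

The closed-form density arises from summing the exponential density over all windings, which is a geometric series in $e^{-2\pi\lambda}$.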

Automatic basis function construction is the mathematical method of looking for a set of task-independent basis functions that map the state space to a lower-dimensional embedding, while still representing the value function accurately. Automatic basis construction is independent of prior knowledge of the domain, which allows it to perform well where expert-constructed basis functions are difficult or impossible to create.