Related Research Articles

Supervised learning (SL) is a paradigm in machine learning in which a model is trained on input objects paired with desired output values. The training data is processed to build a function that maps new data to expected output values. In the optimal scenario, the algorithm correctly determines output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way. This statistical quality of an algorithm is measured through the so-called generalization error.
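As a rough illustration of generalization error, the sketch below fits a line to a small training set and then measures the mean squared error on separate, unseen samples. The data-generating rule (`y = 2x + 1` plus Gaussian noise), the sample sizes, and all function names are assumptions chosen for the example, not part of the summary above.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_squared_error(a, b, xs, ys):
    """Average squared prediction error of the line on (xs, ys)."""
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)


def sample(n):
    # Hypothetical ground truth: y = 2x + 1 with noise of standard deviation 0.1.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


train_x, train_y = sample(50)     # data the model is fitted on
test_x, test_y = sample(1000)     # unseen data from the same distribution

a, b = fit_line(train_x, train_y)
train_error = mean_squared_error(a, b, train_x, train_y)
test_error = mean_squared_error(a, b, test_x, test_y)  # estimate of generalization error
```

The held-out error is only an estimate of the generalization error; a larger test sample gives a tighter estimate.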

In academic publishing, a scientific journal is a periodical publication designed to further the progress of science by disseminating new research findings to the scientific community. These journals serve as a platform for researchers, scholars, and scientists to share their latest discoveries, insights, and methodologies across a multitude of scientific disciplines. Unlike professional or trade magazines, scientific journals are characterized by their rigorous peer-review process, which aims to ensure the validity, reliability, and quality of the published content. With origins dating back to the 17th century, the publication of scientific journals has evolved significantly, playing a pivotal role in the advancement of scientific knowledge, fostering academic discourse, and facilitating collaboration within the scientific community.

In academic publishing, a preprint is a version of a scholarly or scientific paper that precedes formal peer review and publication in a peer-reviewed scholarly or scientific journal. The preprint may be available, often as a non-typeset version available free, before or after a paper is published in a journal.

Data envelopment analysis (DEA) is a nonparametric method in operations research and economics for the estimation of production frontiers. DEA has been applied in a wide range of fields, including international banking, economic sustainability, police department operations, and logistical applications. Additionally, DEA has been used to assess the performance of natural language processing models, and it has found other applications within machine learning.

Stochastic gradient descent is an iterative method for optimizing an objective function with suitable smoothness properties. It can be regarded as a stochastic approximation of gradient descent optimization, since it replaces the actual gradient by an estimate thereof. Especially in high-dimensional optimization problems this reduces the very high computational burden, achieving faster iterations in exchange for a lower convergence rate.
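A minimal sketch of the idea, assuming a one-dimensional linear model and a squared-error loss: each update uses the gradient of a single sample's loss as an estimate of the full gradient. The function name, learning rate, epoch count, and toy data are illustrative assumptions.

```python
import random


def sgd_linear_regression(data, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by stochastic gradient descent on 0.5 * (prediction - y)**2."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a fresh random order each epoch
        for x, y in data:
            error = (w * x + b) - y
            # Gradient of the single-sample loss, used in place of the
            # gradient averaged over the whole data set.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Noise-free toy data generated from y = 3x - 1 (an assumption for the example).
data = [(k / 10.0, 3.0 * (k / 10.0) - 1.0) for k in range(-10, 11)]
w, b = sgd_linear_regression(data)
```

Each step touches one sample rather than all 21, which is the per-iteration saving the summary describes; on noisy data a constant learning rate would leave the iterates fluctuating around the minimizer.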

Business mathematics are mathematics used by commercial enterprises to record and manage business operations. Commercial organizations use mathematics in accounting, inventory management, marketing, sales forecasting, and financial analysis.

Quantum annealing (QA) is an optimization process for finding the global minimum of a given objective function over a given set of candidate solutions, by a process using quantum fluctuations. Quantum annealing is used mainly for problems where the search space is discrete with many local minima, such as finding the ground state of a spin glass or solving the traveling salesman problem. The term "quantum annealing" was first proposed in 1988 by B. Apolloni, N. Cesa Bianchi and D. De Falco as a quantum-inspired classical algorithm. It was formulated in its present form by T. Kadowaki and H. Nishimori in 1998, though an imaginary-time variant without quantum coherence had been discussed by A. B. Finnila, M. A. Gomez, C. Sebenik and J. D. Doll in 1994.

Statistical finance is the application of econophysics to financial markets. Instead of the normative roots of finance, it uses a positivist framework. It includes exemplars from statistical physics with an emphasis on emergent or collective properties of financial markets. Empirically observed stylized facts are the starting point for this approach to understanding financial markets.

In computational physics, variational Monte Carlo (VMC) is a quantum Monte Carlo method that applies the variational method to approximate the ground state of a quantum system.

Uplift modelling, also known as incremental modelling, true lift modelling, or net modelling, is a predictive modelling technique that directly models the incremental impact of a treatment on an individual's behaviour.
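The quantity being modelled can be illustrated with a deliberately simple estimate: within each customer segment, the response rate of treated individuals minus the response rate of the control group. This is a coarse, segment-level stand-in for the per-individual models used in practice; the record layout, segment names, and function name are hypothetical.

```python
from collections import defaultdict


def segment_uplift(records):
    """Estimate uplift per segment as P(response | treated) - P(response | control).

    Each record is a (segment, treated, responded) tuple.
    """
    # counts[segment][group] = [number of responders, number of individuals]
    counts = defaultdict(lambda: {"treated": [0, 0], "control": [0, 0]})
    for segment, treated, responded in records:
        group = "treated" if treated else "control"
        counts[segment][group][0] += int(responded)
        counts[segment][group][1] += 1

    uplift = {}
    for segment, groups in counts.items():
        treated_resp, treated_n = groups["treated"]
        control_resp, control_n = groups["control"]
        if treated_n == 0 or control_n == 0:
            continue  # uplift is undefined without both groups
        uplift[segment] = treated_resp / treated_n - control_resp / control_n
    return uplift


# Hypothetical campaign data: (segment, treated?, responded?)
records = (
    [("new", True, True)] * 3 + [("new", True, False)] * 1
    + [("new", False, True)] * 1 + [("new", False, False)] * 3
    + [("loyal", True, True)] * 2 + [("loyal", True, False)] * 2
    + [("loyal", False, True)] * 2 + [("loyal", False, False)] * 2
)
uplift = segment_uplift(records)
```

In this made-up data the treatment raises the response rate of the "new" segment but leaves the "loyal" segment unchanged, even though both segments contain responders.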

Learning to rank or machine-learned ranking (MLR) is the application of machine learning, typically supervised, semi-supervised or reinforcement learning, in the construction of ranking models for information retrieval systems. Training data may, for example, consist of lists of items with some partial order specified between items in each list. This order is typically induced by giving a numerical or ordinal score or a binary judgment for each item. The goal of constructing the ranking model is to rank new, unseen lists in a similar way to rankings in the training data.

Active learning is a special case of machine learning in which a learning algorithm can interactively query a human user to label new data points with the desired outputs. The human user must possess knowledge or expertise in the problem domain, including the ability to consult authoritative sources when necessary. In the statistics literature, it is sometimes also called optimal experimental design. The information source is also called the teacher or oracle.

In machine learning, a hyperparameter is a parameter, such as the learning rate or choice of optimizer, which specifies details of the learning process, hence the name hyperparameter. This is in contrast to parameters which determine the model itself.

Scholarly peer review or academic peer review is the process of having a draft version of a researcher's methods and findings reviewed by experts in the same field. Peer review is widely used to help the academic publisher decide whether the work should be accepted, considered acceptable with revisions, or rejected for official publication in an academic journal, a monograph, or the proceedings of an academic conference. If the identities of authors and reviewers are not revealed to each other, the procedure is called dual-anonymous peer review.

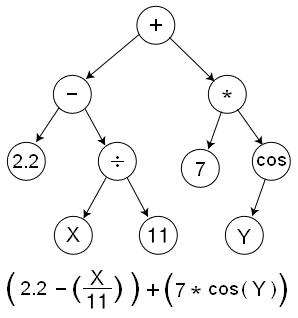

Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, both in terms of accuracy and simplicity.

In the field of artificial intelligence (AI), AI alignment research aims to steer AI systems toward a person's or group's intended goals, preferences, and ethical principles. An AI system is considered aligned if it advances its intended objectives. A misaligned AI system may pursue some objectives, but not the intended ones.

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process.
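One of the simplest tuning strategies is an exhaustive grid search: train once per candidate value and keep the value with the lowest validation loss. The sketch below tunes only the learning rate of a tiny gradient-descent fit; the model, the candidate grid, the step budget, and the data are assumptions made for the example.

```python
def train(train_data, learning_rate, steps=20):
    """Fit y = w*x by full-batch gradient descent on the mean squared error."""
    w = 0.0
    n = len(train_data)
    for _ in range(steps):
        grad = 2.0 * sum(x * (w * x - y) for x, y in train_data) / n
        w -= learning_rate * grad
    return w


def validation_loss(w, val_data):
    """Mean squared error of the fitted model on held-out data."""
    return sum((w * x - y) ** 2 for x, y in val_data) / len(val_data)


def grid_search(train_data, val_data, candidates):
    """Return the candidate learning rate with the lowest validation loss."""
    scores = {}
    for learning_rate in candidates:
        w = train(train_data, learning_rate)        # parameter learned from data
        scores[learning_rate] = validation_loss(w, val_data)
    best = min(scores, key=scores.get)              # hyperparameter chosen by search
    return best, scores


# Toy data from y = 2x (an assumption for the example).
train_data = [(x, 2.0 * x) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]
val_data = [(x, 2.0 * x) for x in (0.25, 0.75, 1.25)]

best_lr, scores = grid_search(train_data, val_data, [0.001, 0.01, 0.1, 0.3, 1.0])
```

The weight `w` is a model parameter learned during training, while the learning rate is fixed before training and selected by the outer search. With this step budget the small rates underfit and the largest one diverges, so an intermediate value wins.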

Neural architecture search (NAS) is a technique for automating the design of artificial neural networks (ANN), a widely used model in the field of machine learning. NAS has been used to design networks that are on par with or outperform hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy, and performance estimation strategy used.

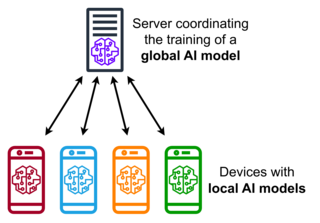

Federated learning is a sub-field of machine learning focusing on settings in which multiple entities collaboratively train a model while ensuring that their data remains decentralized. This stands in contrast to machine learning settings in which data is centrally stored. One of the primary defining characteristics of federated learning is data heterogeneity. Due to the decentralized nature of the clients' data, there is no guarantee that data samples held by each client are independently and identically distributed.
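A common way to realize this setting is federated averaging, which the summary above does not name and which is used here only as an illustrative choice: each client runs a few gradient steps on its own data, and a server averages the resulting models weighted by client sample counts. The client data, learning rate, and round counts below are assumptions.

```python
def local_update(w, client_data, learning_rate=0.1, local_steps=5):
    """Run a few gradient-descent steps on one client's private data (model: y = w*x)."""
    n = len(client_data)
    for _ in range(local_steps):
        grad = sum(x * (w * x - y) for x, y in client_data) / n
        w -= learning_rate * grad
    return w


def federated_averaging(clients, rounds=50):
    """Train a shared weight without moving raw data off the clients."""
    w_global = 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Each client starts from the current global model and trains locally.
        local_weights = [local_update(w_global, data) for data in clients]
        # The server only sees model weights, averaged by sample count.
        w_global = sum(len(data) * w for data, w in zip(clients, local_weights)) / total
    return w_global


# Three hypothetical clients whose inputs cover different ranges (heterogeneous data),
# all generated from y = 2x.
clients = [
    [(x, 2.0 * x) for x in (0.1, 0.2, 0.3)],
    [(x, 2.0 * x) for x in (1.0, 2.0)],
    [(x, 2.0 * x) for x in (0.5, 1.0, 1.5, 2.0)],
]
w_global = federated_averaging(clients)
```

Because every client here shares the same underlying rule, averaging converges to it despite the differing input ranges; when client optima differ, plain averaging can drift, which is one reason heterogeneity is treated as a defining difficulty.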

(Stochastic) variance reduction is an algorithmic approach to minimizing functions that can be decomposed into finite sums. By exploiting the finite-sum structure, variance reduction techniques are able to achieve convergence rates that are impossible to achieve with methods that treat the objective as an infinite sum, as in the classical stochastic approximation setting.
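One well-known member of this family is SVRG (stochastic variance reduced gradient), chosen here purely as an example. It periodically computes the full gradient at a snapshot point and uses it to correct each single-sample gradient, so the noise in the update shrinks as the iterate approaches the minimizer. The sketch assumes a one-dimensional least-squares objective; the data, step size, and loop counts are illustrative.

```python
import random


def svrg_least_squares(xs, ys, learning_rate=0.2, outer_epochs=60, inner_steps=20, seed=0):
    """Minimize (1/n) * sum_i 0.5 * (x_i * w - y_i)**2 with SVRG."""
    rng = random.Random(seed)
    n = len(xs)

    def grad_i(w, i):
        # Gradient of the i-th term of the finite sum.
        return xs[i] * (xs[i] * w - ys[i])

    w = 0.0
    for _ in range(outer_epochs):
        snapshot = w
        # Full gradient at the snapshot: one pass over all n terms.
        full_grad = sum(grad_i(snapshot, i) for i in range(n)) / n
        for _ in range(inner_steps):
            i = rng.randrange(n)
            # Unbiased gradient estimate whose variance vanishes as both
            # w and the snapshot approach the minimizer.
            corrected = grad_i(w, i) - grad_i(snapshot, i) + full_grad
            w -= learning_rate * corrected
    return w


# Small noisy data set (values are made up for the example).
xs = [1.0, 1.1, 1.2, 1.3, 1.4, 1.5]
ys = [2.1, 2.0, 2.45, 2.75, 2.7, 3.0]

w = svrg_least_squares(xs, ys)
w_star = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)  # closed-form minimizer
```

Unlike plain stochastic gradient descent with a constant step size, which would keep fluctuating around the minimizer on this noisy data, the corrected updates let the iterate settle on the closed-form solution.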
