Related Research Articles

Psychological statistics is the application of formulas, theorems, numbers and laws to psychology. Statistical methods for psychology include the development and application of statistical theory and methods for modeling psychological data. These methods include psychometrics, factor analysis, experimental designs, and Bayesian statistics. The article also discusses journals in the same field.

Psychometrics is a field of study within psychology concerned with the theory and technique of measurement. Psychometrics generally covers specialized fields within psychology and education devoted to testing, measurement, assessment, and related activities. Psychometrics is concerned with the objective measurement of latent constructs that cannot be directly observed. Examples of latent constructs include intelligence, introversion, mental disorders, and educational achievement. The levels of individuals on nonobservable latent variables are inferred through mathematical modeling based on what is observed from individuals' responses to items on tests and scales.

Validity is the extent to which a concept, conclusion, or measurement is well-founded and likely corresponds accurately to the real world. The word "valid" is derived from the Latin validus, meaning strong. The validity of a measurement tool is the degree to which the tool measures what it claims to measure. Validity is based on the strength of a collection of different types of evidence described in greater detail below.

In the social sciences, scaling is the process of measuring or ordering entities with respect to quantitative attributes or traits. For example, a scaling technique might involve estimating individuals' levels of extraversion, or the perceived quality of products. Certain methods of scaling permit estimation of magnitudes on a continuum, while other methods provide only for relative ordering of the entities.

In psychometrics, item response theory is a paradigm for the design, analysis, and scoring of tests, questionnaires, and similar instruments measuring abilities, attitudes, or other variables. It is a theory of testing based on the relationship between individuals' performances on a test item and the test takers' levels of performance on an overall measure of the ability that item was designed to measure. Several different statistical models are used to represent both item and test taker characteristics. Unlike simpler alternatives for creating scales and evaluating questionnaire responses, it does not assume that each item is equally difficult. This distinguishes IRT from, for instance, Likert scaling, in which "All items are assumed to be replications of each other or in other words items are considered to be parallel instruments". By contrast, item response theory treats the difficulty of each item as information to be incorporated in scaling items.
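The idea that each item carries its own difficulty can be illustrated with a small sketch. The summary above does not name a specific model, so the two-parameter logistic form used here is an assumption chosen for illustration, and the function name, parameter names, and item values are hypothetical.

```python
import math


def item_response_probability(theta, difficulty, discrimination=1.0):
    """Probability of a correct response under a two-parameter logistic model.

    theta          -- the test taker's level on the latent trait
    difficulty     -- the trait level at which the probability is 0.5
    discrimination -- how sharply the probability rises around the difficulty
    (All names are illustrative assumptions, not taken from the source text.)
    """
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


# Two hypothetical items answered by the same test taker (theta = 0.0).
easy_item = item_response_probability(theta=0.0, difficulty=-1.0)
hard_item = item_response_probability(theta=0.0, difficulty=1.0)
print(round(easy_item, 3), round(hard_item, 3))  # 0.731 0.269
```

The same test taker has a different success probability on each item, which is the information a sum score over presumed-parallel items discards.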

A Likert scale is a psychometric scale named after its inventor, American social psychologist Rensis Likert, which is commonly used in research questionnaires. It is the most widely used approach to scaling responses in survey research, such that the term is often used interchangeably with rating scale, although there are other types of rating scales.
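As a rough illustration of how responses on such a scale are commonly combined, the sketch below sums item responses after flipping reverse-keyed items. The five-point format, the function name, and the example responses are assumptions for illustration only.

```python
def score_likert(responses, reverse_keyed=(), points=5):
    """Sum Likert responses, flipping reverse-keyed items.

    responses     -- integers from 1 to `points`, one per item
    reverse_keyed -- zero-based indices of negatively worded items
    (Hypothetical helper; the five-point default is an assumption.)
    """
    total = 0
    for index, value in enumerate(responses):
        if not 1 <= value <= points:
            raise ValueError(f"response {value} is outside 1..{points}")
        # A reverse-keyed response of 1 counts as `points`, 2 as `points - 1`, etc.
        total += (points + 1 - value) if index in reverse_keyed else value
    return total


# Four hypothetical items; the second and fourth are negatively worded.
print(score_likert([4, 2, 5, 1], reverse_keyed={1, 3}))  # 18
```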

A personality test is a method of assessing human personality constructs. Most personality assessment instruments are in fact introspective self-report questionnaire measures (Q-data) or reports from life records (L-data) such as rating scales. Attempts to construct actual performance tests of personality have been very limited, even though Raymond Cattell and his colleague Frank Warburton compiled a list of over 2000 separate objective tests that could be used in constructing objective personality tests. One exception, however, was the Objective-Analytic Test Battery, a performance test designed to quantitatively measure 10 factor-analytically discerned personality trait dimensions. A major problem with both L-data and Q-data methods is that, because of item transparency, rating scales and self-report questionnaires are highly susceptible to motivational and response distortion, ranging from lack of adequate self-insight to downright dissimulation, depending on the reason for the assessment being undertaken.

Construct validity concerns how well a set of indicators represent or reflect a concept that is not directly measurable. Construct validation is the accumulation of evidence to support the interpretation of what a measure reflects. Modern validity theory defines construct validity as the overarching concern of validity research, subsuming all other types of validity evidence such as content validity and criterion validity.

Structural equation modeling (SEM) is a diverse set of methods used by scientists for both observational and experimental research. SEM is used mostly in the social and behavioral science fields, but it is also used in epidemiology, business, and other fields. A common definition of SEM is "...a class of methodologies that seeks to represent hypotheses about the means, variances, and covariances of observed data in terms of a smaller number of 'structural' parameters defined by a hypothesized underlying conceptual or theoretical model."

Mathematical psychology is an approach to psychological research that is based on mathematical modeling of perceptual, thought, cognitive and motor processes, and on the establishment of law-like rules that relate quantifiable stimulus characteristics with quantifiable behavior. The mathematical approach is used with the goal of deriving hypotheses that are more exact and thus yield stricter empirical validations. There are five major research areas in mathematical psychology: learning and memory, perception and psychophysics, choice and decision-making, language and thinking, and measurement and scaling.

Quantitative psychology is a field of scientific study that focuses on the mathematical modeling, research design and methodology, and statistical analysis of psychological processes. It includes tests and other devices for measuring cognitive abilities. Quantitative psychologists develop and analyze a wide variety of research methods, including those of psychometrics, a field concerned with the theory and technique of psychological measurement.

In statistics, inter-rater reliability is the degree of agreement among independent observers who rate, code, or assess the same phenomenon.
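One widely used index of agreement between two raters on categorical codes is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The summary above does not single out any statistic, so the choice of kappa, the function name, and the example ratings are assumptions for illustration.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who coded the same cases."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must code the same non-empty set of cases")
    n = len(ratings_a)
    # Proportion of cases on which the two raters gave the same code.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes from two raters for eight cases.
rater_a = ["yes", "yes", "no", "no", "yes", "no", "yes", "no"]
rater_b = ["yes", "no", "no", "no", "yes", "no", "yes", "yes"]
print(cohen_kappa(rater_a, rater_b))  # 0.5
```

Here the raters agree on six of eight cases (0.75), but half of that agreement is expected by chance, so kappa is 0.5.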

A computerized classification test (CCT) is a performance appraisal system that is administered by computer for the purpose of classifying examinees. The most common CCT is a mastery test where the test classifies examinees as "Pass" or "Fail," but the term also includes tests that classify examinees into more than two categories. While the term may generally be considered to refer to all computer-administered tests for classification, it is usually used to refer to tests that are interactively administered or of variable length, similar to computerized adaptive testing (CAT). Like CAT, variable-length CCTs can accomplish the goal of the test with a fraction of the number of items used in a conventional fixed-form test.
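How a variable-length test can stop early is easiest to see with a stopping rule. The sketch below uses Wald's sequential probability ratio test, one rule described for tests of this kind. The summary above does not specify it, so the rule, the simplifying assumption that every item has the same success probability, and all parameter names and values are illustrative assumptions.

```python
import math


def sprt_classify(responses, p_fail=0.5, p_pass=0.8, alpha=0.05, beta=0.05):
    """Classify an examinee with a sequential probability ratio test.

    responses -- iterable of item outcomes in order (truthy = correct)
    p_fail    -- assumed success probability of a typical failing examinee
    p_pass    -- assumed success probability of a typical passing examinee
    alpha     -- tolerated rate of passing a failing examinee
    beta      -- tolerated rate of failing a passing examinee
    Returns (decision, number_of_items_used).
    """
    upper = math.log((1 - beta) / alpha)   # crossing this bound means "Pass"
    lower = math.log(beta / (1 - alpha))   # crossing this bound means "Fail"
    log_ratio = 0.0
    n_items = 0
    for n_items, correct in enumerate(responses, start=1):
        # Update the log-likelihood ratio of "pass" versus "fail".
        if correct:
            log_ratio += math.log(p_pass / p_fail)
        else:
            log_ratio += math.log((1 - p_pass) / (1 - p_fail))
        if log_ratio >= upper:
            return "Pass", n_items
        if log_ratio <= lower:
            return "Fail", n_items
    return "Undecided", n_items


# A hypothetical examinee who answers every item correctly stops after 7 of 20 items.
print(sprt_classify([1] * 20))  # ('Pass', 7)
```

An operational CCT would typically derive the two probabilities from an item-level model rather than fixing them for all items.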

In statistics, confirmatory factor analysis (CFA) is a special form of factor analysis, most commonly used in social science research. It is used to test whether measures of a construct are consistent with a researcher's understanding of the nature of that construct. As such, the objective of confirmatory factor analysis is to test whether the data fit a hypothesized measurement model. This hypothesized model is based on theory and/or previous analytic research. CFA was first developed by Jöreskog (1969) and has built upon and replaced older methods of analyzing construct validity such as the MTMM Matrix as described in Campbell & Fiske (1959).

Measuring the Mind: Conceptual Issues in Contemporary Psychometrics is a book by Dutch academic Denny Borsboom, who was Assistant Professor of Psychological Methods at the University of Amsterdam at the time of publication. The book discusses the extent to which psychology can measure mental attributes such as intelligence and examines the philosophical issues that arise from such attempts.

Psychometric software refers to specialized programs used for the psychometric analysis of data obtained from tests, questionnaires, polls or inventories that measure latent psychoeducational variables. Although some psychometric analyses can be performed using general statistical software such as SPSS, most require specialized tools designed specifically for psychometric purposes.

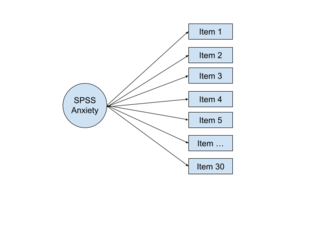

In multivariate statistics, exploratory factor analysis (EFA) is a statistical method used to uncover the underlying structure of a relatively large set of variables. EFA is a technique within factor analysis whose overarching goal is to identify the underlying relationships between measured variables. It is commonly used by researchers when developing a scale and serves to identify a set of latent constructs underlying a battery of measured variables. It should be used when the researcher has no a priori hypothesis about factors or patterns of measured variables. Measured variables are any one of several attributes of people that may be observed and measured. Examples of measured variables could be the physical height, weight, and pulse rate of a human being. Usually, researchers would have a large number of measured variables, which are assumed to be related to a smaller number of "unobserved" factors. Researchers must carefully consider the number of measured variables to include in the analysis. EFA procedures are more accurate when each factor is represented by multiple measured variables in the analysis.

Measurement invariance or measurement equivalence is a statistical property of measurement that indicates that the same construct is being measured across some specified groups. For example, measurement invariance can be used to study whether a given measure is interpreted in a conceptually similar manner by respondents representing different genders or cultural backgrounds. Violations of measurement invariance may preclude meaningful interpretation of measurement data. Tests of measurement invariance are increasingly used in fields such as psychology to supplement evaluation of measurement quality rooted in classical test theory.

Empathy quotient (EQ) is a psychological self-report measure of empathy developed by Simon Baron-Cohen and Sally Wheelwright at the Autism Research Centre at the University of Cambridge. EQ is based on a definition of empathy that includes cognition and affect.

The Mokken scale is a psychometric method of data reduction. A Mokken scale is a unidimensional scale that consists of hierarchically ordered items that measure the same underlying latent concept. The method is named after the political scientist Rob Mokken, who proposed it in 1971.
