Related Research Articles

In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Microsoft Developer Network (MSDN) was the division of Microsoft responsible for managing the firm's relationship with developers and testers, such as hardware developers interested in the operating system (OS), and software developers developing on the various OS platforms or using the API or scripting languages of Microsoft's applications. The relationship management was situated in assorted media: web sites, newsletters, developer conferences, trade media, blogs and DVD distribution.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.

Microsoft Translator is a multilingual machine translation cloud service provided by Microsoft. Microsoft Translator is a part of Microsoft Cognitive Services and integrated across multiple consumer, developer, and enterprise products, including Bing, Microsoft Office, SharePoint, Microsoft Edge, Microsoft Lync, Yammer, Skype Translator, Visual Studio, and Microsoft Translator apps for Windows, Windows Phone, iPhone and Apple Watch, and Android phone and Android Wear.

Learning to rank or machine-learned ranking (MLR) is the application of machine learning, typically supervised, semi-supervised or reinforcement learning, in the construction of ranking models for information retrieval systems. Training data may, for example, consist of lists of items with some partial order specified between items in each list. This order is typically induced by giving a numerical or ordinal score or a binary judgment for each item. The goal of constructing the ranking model is to rank new, unseen lists in a similar way to rankings in the training data.
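
As a minimal sketch of how a per-item score induces a partial order, the Python snippet below turns one judged list into pairwise preferences, the form of training example that pairwise ranking methods typically consume. The function name `pairwise_preferences`, the document identifiers, and the relevance grades are hypothetical and chosen only for illustration.

```python
from itertools import combinations


def pairwise_preferences(scored_items):
    """Return (preferred, other) pairs implied by the relevance scores.

    Items with equal scores are left unordered, so the result
    describes a partial order rather than a total one.
    """
    pairs = []
    for (item_a, score_a), (item_b, score_b) in combinations(scored_items, 2):
        if score_a > score_b:
            pairs.append((item_a, item_b))
        elif score_b > score_a:
            pairs.append((item_b, item_a))
    return pairs


# Hypothetical judged list for a single query: (document id, relevance grade).
judged = [("doc_a", 2), ("doc_b", 0), ("doc_c", 2), ("doc_d", 1)]
preferences = pairwise_preferences(judged)
```

Here `doc_a` and `doc_c` share the same grade, so no preference is recorded between them; a ranking model trained on such pairs would be free to order them either way.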

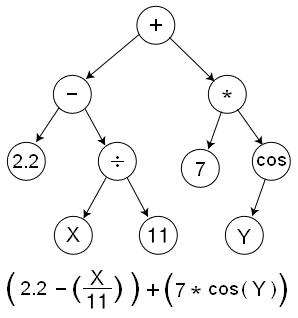

Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, both in terms of accuracy and simplicity.

Eclipse Deeplearning4j is a programming library written in Java for the Java virtual machine (JVM). It is a framework with wide support for deep learning algorithms. Deeplearning4j includes implementations of the restricted Boltzmann machine, deep belief net, deep autoencoder, stacked denoising autoencoder and recursive neural tensor network, word2vec, doc2vec, and GloVe. These algorithms all include distributed parallel versions that integrate with Apache Hadoop and Spark.

The following table compares notable software frameworks, libraries and computer programs for deep learning.

Microsoft Cognitive Toolkit, previously known as CNTK and sometimes styled as The Microsoft Cognitive Toolkit, is a deprecated deep learning framework developed by Microsoft Research. Microsoft Cognitive Toolkit describes neural networks as a series of computational steps via a directed graph.

Keras is an open-source library that provides a Python interface for artificial neural networks. Keras began as independent software, was then integrated into the TensorFlow library, and later added support for other frameworks. "Keras 3 is a full rewrite of Keras [and can be used] as a low-level cross-framework language to develop custom components such as layers, models, or metrics that can be used in native workflows in JAX, TensorFlow, or PyTorch — with one codebase." Keras 3 is the default Keras version from TensorFlow 2.16 onwards, but Keras 2 can still be used.

Chainer is an open source deep learning framework written purely in Python on top of NumPy and CuPy Python libraries. The development is led by Japanese venture company Preferred Networks in partnership with IBM, Intel, Microsoft, and Nvidia.

The following outline is provided as an overview of and topical guide to machine learning:

Caffe is a deep learning framework, originally developed at University of California, Berkeley. It is open source, under a BSD license. It is written in C++, with a Python interface.

The Open Neural Network Exchange (ONNX) is an open-source artificial intelligence ecosystem of technology companies and research organizations that establish open standards for representing machine learning algorithms and software tools to promote innovation and collaboration in the AI sector. ONNX is available on GitHub.

ML.NET is a free software machine learning library for the C# and F# programming languages. It also supports Python models when used together with NimbusML. The preview release of ML.NET included transforms for feature engineering like n-gram creation, and learners to handle binary classification, multi-class classification, and regression tasks. Additional ML tasks like anomaly detection and recommendation systems have since been added, and other approaches like deep learning will be included in future versions.

Simple Encrypted Arithmetic Library or SEAL is a free and open-source cross platform software library developed by Microsoft Research that implements various forms of homomorphic encryption.

LightGBM, short for Light Gradient-Boosting Machine, is a free and open-source distributed gradient-boosting framework for machine learning, originally developed by Microsoft. It is based on decision tree algorithms and used for ranking, classification and other machine learning tasks. The development focus is on performance and scalability.

Owl Scientific Computing is a software system for scientific and engineering computing developed in the Department of Computer Science and Technology, University of Cambridge. The System Research Group (SRG) in the department recognises Owl as one of the representative systems developed in SRG in the 2010s. The source code is licensed under the MIT License and can be accessed from the GitHub repository.

A graph neural network (GNN) belongs to a class of artificial neural networks for processing data that can be represented as graphs.

References

- "James McCaffrey: Senior Research Software Engineer". Microsoft Research. Microsoft. Retrieved January 8, 2022.

- "Syncfusion Free Ebooks | Keras Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | Introduction to CNTK Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | Bing Maps V8 Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | R-Programming Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | SciPy Programming Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | Machine Learning Using C# Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- "Syncfusion Free Ebooks | Neural Networks Using C# Succinctly". www.syncfusion.com. Retrieved February 17, 2021.

- Introduced a description and C# language implementation of the factoradic, in fact a type of factorial number system, in "Using Permutations in .NET for Improved Systems Security", McCaffrey, J. D., August 2003, MSDN Library. See http://msdn2.microsoft.com/en-us/library/aa302371.aspx and "String Permutations", MSDN Magazine, June 2006 (Vol. 21, No. 7).

- Laisant, Charles-Ange (1888), "Sur la numération factorielle, application aux permutations", Bulletin de la Société Mathématique de France (in French), 16: 176–183; a previous description of a factorial number system.

- Introduced a description and C# language implementation of the combinadic, in fact a type of combinatorial number system, in "Generating the mth Lexicographical Element of a Mathematical Combination", McCaffrey, J. D., July 2004, MSDN Library. See http://msdn2.microsoft.com/en-us/library/aa289166(VS.71).aspx.

- Applied Combinatorial Mathematics, Ed. E. F. Beckenbach (1964), pp. 27−30; a previous description of a combinatorial representation of integers.

- McCaffrey, James D., ".NET Test Automation Recipes", Apress Publishing, 2006. ISBN 1-59059-663-3.