Multivariate statistics is a subdivision of statistics encompassing the simultaneous observation and analysis of more than one outcome variable, i.e., multivariate random variables. Multivariate statistics concerns understanding the different aims and background of each of the different forms of multivariate analysis, and how they relate to each other. The practical application of multivariate statistics to a particular problem may involve several types of univariate and multivariate analyses in order to understand the relationships between variables and their relevance to the problem being studied.

The following outline is provided as an overview of and topical guide to statistics:

Chemometrics is the science of extracting information from chemical systems by data-driven means. Chemometrics is inherently interdisciplinary, using methods frequently employed in core data-analytic disciplines such as multivariate statistics, applied mathematics, and computer science, in order to address problems in chemistry, biochemistry, medicine, biology and chemical engineering. In this way, it mirrors other interdisciplinary fields, such as psychometrics and econometrics.

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information. Mixture models are used for clustering, under the name model-based clustering, and also for density estimation.
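As a rough illustration of inferring sub-population properties from pooled observations alone, the following minimal sketch fits a two-component, one-dimensional Gaussian mixture by expectation-maximization (EM). The choice of Gaussian components, the EM algorithm, the simulated data, and the function names `normal_pdf` and `fit_two_component_mixture` are all assumptions made for this example; they are not part of the definition above.

```python
import math
import random


def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def fit_two_component_mixture(data, n_iter=200):
    """Fit a two-component 1-D Gaussian mixture by EM (hypothetical helper).

    Returns (weights, means, sds), each a list of length two.
    """
    n = len(data)
    # Crude initialisation: means at the data extremes, shared overall spread.
    overall_mean = sum(data) / n
    overall_sd = math.sqrt(sum((x - overall_mean) ** 2 for x in data) / n)
    weights = [0.5, 0.5]
    means = [min(data), max(data)]
    sds = [overall_sd, overall_sd]

    for _ in range(n_iter):
        # E-step: posterior probability that each point came from component 0.
        resp0 = []
        for x in data:
            p0 = weights[0] * normal_pdf(x, means[0], sds[0])
            p1 = weights[1] * normal_pdf(x, means[1], sds[1])
            resp0.append(p0 / (p0 + p1))

        # M-step: responsibility-weighted updates of each component.
        for k in (0, 1):
            resp = resp0 if k == 0 else [1.0 - r for r in resp0]
            total = sum(resp)
            weights[k] = total / n
            means[k] = sum(r * x for r, x in zip(resp, data)) / total
            var = sum(r * (x - means[k]) ** 2 for r, x in zip(resp, data)) / total
            sds[k] = max(math.sqrt(var), 1e-6)  # guard against a collapsed component

    return weights, means, sds


# Simulated pooled population: the sub-population labels are discarded.
random.seed(0)
pooled = [random.gauss(0.0, 1.0) for _ in range(300)]
pooled += [random.gauss(6.0, 1.0) for _ in range(200)]
random.shuffle(pooled)

weights, means, sds = fit_two_component_mixture(pooled)
print("weights:", weights)
print("means:  ", means)
print("sds:    ", sds)
```

The fitting routine never sees which sub-population generated each value, yet the estimated weights, means, and standard deviations describe the two groups separately.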

ROOT is an object-oriented computer program and library developed by CERN. It was originally designed for particle physics data analysis and contains several features specific to the field, but it is also used in other applications such as astronomy and data mining. The latest minor release is 6.28, as of 2023-02-03.

Ordination or gradient analysis, in multivariate analysis, is a method complementary to data clustering, and used mainly in exploratory data analysis. In contrast to cluster analysis, ordination orders objects in a latent space. In the ordination space, objects that are near each other share attributes, and dissimilar objects are farther from each other. Such relationships between the objects, on each of several axes or latent variables, are then characterized numerically and/or graphically in a biplot.
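One simple way to obtain such an ordering is principal component analysis, used here only as an illustrative ordination method. The sketch below computes the first ordination axis by power iteration on the covariance matrix and projects each object onto it. The site-by-species abundance table, the function name `first_ordination_axis`, and the uniform starting vector are assumptions for this example; the starting vector would fail in the unlikely case that it is exactly orthogonal to the leading axis.

```python
import math


def first_ordination_axis(rows, n_iter=500):
    """Return (axis, scores) for the first principal axis (hypothetical helper).

    rows: list of objects, each a list of numeric attributes.
    """
    n, p = len(rows), len(rows[0])
    # Centre each attribute (column) on its mean.
    col_means = [sum(r[j] for r in rows) / n for j in range(p)]
    centered = [[r[j] - col_means[j] for j in range(p)] for r in rows]
    # Sample covariance matrix of the attributes.
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(p)]
           for i in range(p)]
    # Power iteration converges to the leading eigenvector of the covariance.
    axis = [1.0 / math.sqrt(p)] * p
    for _ in range(n_iter):
        w = [sum(cov[i][j] * axis[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        axis = [x / norm for x in w]
    # Score of each object = its position along the latent axis.
    scores = [sum(c[j] * axis[j] for j in range(p)) for c in centered]
    return axis, scores


# Made-up abundances of four species at four sites (illustrative data only).
sites = {
    "A": [9, 7, 1, 0],
    "B": [8, 6, 2, 1],
    "C": [1, 2, 8, 9],
    "D": [0, 1, 9, 8],
}
axis, scores = first_ordination_axis(list(sites.values()))
for name, score in zip(sites, scores):
    print(name, round(score, 2))
```

Sites A and B, which have similar species composition, receive nearby scores on the axis, while C and D fall at the opposite end. The overall sign of the axis is arbitrary.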

In statistics, a latent class model (LCM) is a model for clustering multivariate discrete data. It assumes that the data arise from a mixture of discrete distributions, within each of which the variables are independent. It is called a latent class model because the class to which each data point belongs is unobserved, or latent.
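The within-class independence assumption can be written compactly. In the notation below, which is chosen here for illustration, $x_1, \dots, x_J$ are the observed discrete variables, $C$ is the number of latent classes, and $\pi_c$ is the probability of belonging to class $c$:

$$
P(x_1, \dots, x_J) \;=\; \sum_{c=1}^{C} \pi_c \prod_{j=1}^{J} P(x_j \mid c),
\qquad \sum_{c=1}^{C} \pi_c = 1 .
$$

The product over $j$ expresses independence of the variables within each class, and the sum over $c$ reflects that the class of each data point is unobserved.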

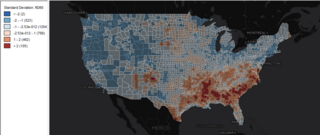

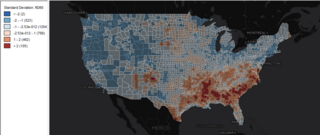

GeoDa is a free software package that conducts spatial data analysis, geovisualization, spatial autocorrelation and spatial modeling.

In statistics, confirmatory factor analysis (CFA) is a special form of factor analysis, most commonly used in social science research. It is used to test whether measures of a construct are consistent with a researcher's understanding of the nature of that construct. As such, the objective of confirmatory factor analysis is to test whether the data fit a hypothesized measurement model. This hypothesized model is based on theory and/or previous analytic research. CFA was first developed by Jöreskog (1969) and has built upon and replaced older methods of analyzing construct validity, such as the multitrait-multimethod (MTMM) matrix described in Campbell & Fiske (1959).

In statistics, the restricted maximum likelihood (REML) approach is a particular form of maximum likelihood estimation that does not base estimates on a maximum likelihood fit of all the information, but instead uses a likelihood function calculated from a transformed set of data, so that nuisance parameters have no effect.
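A standard textbook special case, given here as an illustration rather than a general derivation, is an independent normal sample $x_1, \dots, x_n$ with unknown mean $\mu$ (the nuisance parameter) and variance $\sigma^2$. Ordinary maximum likelihood and REML then give

$$
\hat\sigma^2_{\mathrm{ML}} = \frac{1}{n}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2,
\qquad
\hat\sigma^2_{\mathrm{REML}} = \frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2 .
$$

The REML estimate is based on contrasts of the data whose distribution does not depend on $\mu$, which accounts for the degree of freedom spent on estimating the mean and removes the downward bias of the maximum likelihood estimate.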

In statistics, a generalized estimating equation (GEE) is used to estimate the parameters of a generalized linear model with a possible unmeasured correlation between observations from different timepoints. Although generalized estimating equations are sometimes believed to be robust in every respect, even with the wrong choice of working-correlation matrix, they are robust only against loss of consistency under a wrong choice.

Detrended correspondence analysis (DCA) is a multivariate statistical technique widely used by ecologists to find the main factors or gradients in large, species-rich but usually sparse data matrices that typify ecological community data. DCA is frequently used to suppress artifacts inherent in most other multivariate analyses when applied to gradient data.

Psychometric software is software that is used for psychometric analysis of data from tests, questionnaires, or inventories reflecting latent psychoeducational variables. While some psychometric analyses can be performed with standard statistical software like SPSS, most analyses require specialized tools.

The Unscrambler X is a commercial software product for multivariate data analysis. It is used to calibrate multivariate data, often analytical data from techniques such as near-infrared spectroscopy and Raman spectroscopy, and to develop predictive models for real-time spectroscopic analysis of materials. The software was originally developed in 1986 by Harald Martens and later by CAMO Software.

Jacqueline Meulman is a Dutch statistician and professor emerita of Applied Statistics at the Mathematical Institute of Leiden University.

The following outline is provided as an overview of and topical guide to machine learning:

Paul D. McNicholas is an Irish-Canadian statistician. He is a professor and University Scholar in the Department of Mathematics and Statistics at McMaster University. In 2015, McNicholas was awarded the Tier 1 Canada Research Chair in Computational Statistics. McNicholas uses computational statistics techniques, and mixture models in particular, to gain insight into large and complex datasets. He is editor-in-chief of the Journal of Classification.

In statistics, cluster analysis is the algorithmic grouping of objects into homogeneous groups based on numerical measurements. Model-based clustering bases this on a statistical model for the data, usually a mixture model. This has several advantages, including a principled statistical basis for clustering, and ways to choose the number of clusters, to choose the best clustering model, to assess the uncertainty of the clustering, and to identify outliers that do not belong to any group.
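Assessing the uncertainty of a clustering can be sketched as follows for a Gaussian mixture whose parameters are already fitted. The parameter values, the function names, and the summary "one minus the largest membership probability" are assumptions chosen for this example; other uncertainty summaries are possible.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def membership_probabilities(x, weights, means, sds):
    """Posterior probability of each cluster for observation x (Bayes' rule)."""
    joint = [w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sds)]
    total = sum(joint)
    return [j / total for j in joint]


# Hypothetical fitted parameters of a two-cluster model (illustrative values).
weights = [0.6, 0.4]
means = [0.0, 6.0]
sds = [1.0, 1.0]

for x in (0.5, 3.0, 5.5):
    probs = membership_probabilities(x, weights, means, sds)
    label = probs.index(max(probs))   # most probable cluster
    uncertainty = 1.0 - max(probs)    # near 0 means a confident assignment
    print(f"x={x}: cluster={label}, uncertainty={uncertainty:.3f}")
```

Observations close to a cluster centre are assigned with almost no uncertainty, whereas a point midway between the two centres is assigned with much less confidence.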