In mathematics, Lady Windermere's Fan is a telescopic identity employed to relate the global and local error of a numerical algorithm. The name is derived from Oscar Wilde's 1892 play Lady Windermere's Fan, A Play About a Good Woman.

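Let $E(\tau, t_0, y(t_0))$ be the exact solution operator so that:

$$y(t_0 + \tau) = E(\tau, t_0, y(t_0))\, y(t_0)$$

with $t_0$ denoting the initial time and $y(t)$ the function to be approximated with a given $y(t_0)$.

Further let $y_n$, $n \in \mathbb{N}$, $n \le N$, be the numerical approximation at time $t_n$, $t_0 < t_n \le T = t_N$. $y_n$ can be obtained by means of the approximation operator $\Phi(h_n, t_n, y(t_n))$ so that:

$$y_n = \Phi(h_{n-1}, t_{n-1}, y(t_{n-1}))\, y_{n-1} \quad \text{with} \quad h_n = t_{n+1} - t_n$$

The approximation operator represents the numerical scheme used. For a simple explicit forward Euler method with step width $h$ this would be:

$$\Phi_{\text{Euler}}(h, t_{n-1}, y(t_{n-1}))\, y(t_{n-1}) = \left(1 + h \frac{d}{dt}\right) y(t_{n-1})$$

The local error $d_n$ is then given by:

$$d_n := D(h_{n-1}, t_{n-1}, y(t_{n-1}))\, y(t_{n-1}) := \left[\Phi(h_{n-1}, t_{n-1}, y(t_{n-1})) - E(h_{n-1}, t_{n-1}, y(t_{n-1}))\right] y(t_{n-1})$$

In abbreviation we write:

$$\Phi(h_n) := \Phi(h_n, t_n, y(t_n)), \quad E(h_n) := E(h_n, t_n, y(t_n)), \quad D(h_n) := D(h_n, t_n, y(t_n))$$

Then Lady Windermere's Fan for a function of a single variable $t$ reads:

$$y_N - y(t_N) = \prod_{j=0}^{N-1} \Phi(h_j)\, (y_0 - y(t_0)) + \sum_{n=1}^{N} \prod_{j=n}^{N-1} \Phi(h_j)\, d_n$$

with a global error of $y_N - y(t_N)$.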

In number theory, an arithmetic, arithmetical, or number-theoretic function is for most authors any function f(n) whose domain is the positive integers and whose range is a subset of the complex numbers. Hardy & Wright include in their definition the requirement that an arithmetical function "expresses some arithmetical property of n".

In mathematics, convolution is a mathematical operation on two functions that produces a third function that expresses how the shape of one is modified by the other. The term convolution refers to both the result function and to the process of computing it. It is defined as the integral of the product of the two functions after one is reversed and shifted. The integral is evaluated for all values of shift, producing the convolution function.

In theoretical physics, a Feynman diagram is a pictorial representation of the mathematical expressions describing the behavior and interaction of subatomic particles. The scheme is named after American physicist Richard Feynman, who introduced the diagrams in 1948. The interaction of subatomic particles can be complex and difficult to understand; Feynman diagrams give a simple visualization of what would otherwise be an arcane and abstract formula. According to David Kaiser, "Since the middle of the 20th century, theoretical physicists have increasingly turned to this tool to help them undertake critical calculations. Feynman diagrams have revolutionized nearly every aspect of theoretical physics." While the diagrams are applied primarily to quantum field theory, they can also be used in other fields, such as solid-state theory. Frank Wilczek wrote that the calculations which won him the 2004 Nobel Prize in Physics "would have been literally unthinkable without Feynman diagrams, as would [Wilczek's] calculations that established a route to production and observation of the Higgs particle."

In probability theory, a normaldistribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

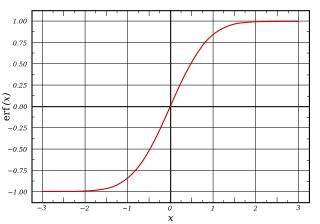

In mathematics, the error function, often denoted by erf, is a complex function of a complex variable defined as:

In mathematics, the Dedekind eta function, named after Richard Dedekind, is a modular form of weight 1/2 and is a function defined on the upper half-plane of complex numbers, where the imaginary part is positive. It also occurs in bosonic string theory.

In mathematics and in signal processing, the Hilbert transform is a specific linear operator that takes a function, u(t) of a real variable and produces another function of a real variable H(u)(t). This linear operator is given by convolution with the function . The Hilbert transform has a particularly simple representation in the frequency domain: It imparts a phase shift of ±90° to every frequency component of a function, the sign of the shift depending on the sign of the frequency. The Hilbert transform is important in signal processing, where it is a component of the analytic representation of a real-valued signal u(t). The Hilbert transform was first introduced by David Hilbert in this setting, to solve a special case of the Riemann–Hilbert problem for analytic functions.

In the theory of stochastic processes, the Kosambi–Karhunen–Loève theorem is a representation of a stochastic process as an infinite linear combination of orthogonal functions, analogous to a Fourier series representation of a function on a bounded interval. The transformation is also known as Hotelling transform and eigenvector transform, and is closely related to principal component analysis (PCA) technique widely used in image processing and in data analysis in many fields.

In mathematics, the Iverson bracket, named after Kenneth E. Iverson, is a notation that generalises the Kronecker delta, which is the Iverson bracket of the statement x = y. It maps any statement to a function of the free variables in it that takes the value one for the values of the variables for which the statement is true, and takes the value zero otherwise. It is generally denoted by putting the statement inside square brackets:

In mathematics, a symplectic integrator (SI) is a numerical integration scheme for Hamiltonian systems. Symplectic integrators form the subclass of geometric integrators which, by definition, are canonical transformations. They are widely used in nonlinear dynamics, molecular dynamics, discrete element methods, accelerator physics, plasma physics, quantum physics, and celestial mechanics.

In mathematics, delay differential equations (DDEs) are a type of differential equation in which the derivative of the unknown function at a certain time is given in terms of the values of the function at previous times. DDEs are also called time-delay systems, systems with aftereffect or dead-time, hereditary systems, equations with deviating argument, or differential-difference equations. They belong to the class of systems with the functional state, i.e. partial differential equations (PDEs) which are infinite dimensional, as opposed to ordinary differential equations (ODEs) having a finite dimensional state vector. Four points may give a possible explanation of the popularity of DDEs:

Oblate spheroidal coordinates are a three-dimensional orthogonal coordinate system that results from rotating the two-dimensional elliptic coordinate system about the non-focal axis of the ellipse, i.e., the symmetry axis that separates the foci. Thus, the two foci are transformed into a ring of radius in the x-y plane. Oblate spheroidal coordinates can also be considered as a limiting case of ellipsoidal coordinates in which the two largest semi-axes are equal in length.

The Volterra series is a model for non-linear behavior similar to the Taylor series. It differs from the Taylor series in its ability to capture "memory" effects. The Taylor series can be used for approximating the response of a nonlinear system to a given input if the output of this system depends strictly on the input at that particular time. In the Volterra series the output of the nonlinear system depends on the input to the system at all other times. This provides the ability to capture the "memory" effect of devices like capacitors and inductors.

In mathematics, a mock modular form is the holomorphic part of a harmonic weak Maass form, and a mock theta function is essentially a mock modular form of weight 1/2. The first examples of mock theta functions were described by Srinivasa Ramanujan in his last 1920 letter to G. H. Hardy and in his lost notebook. Sander Zwegers discovered that adding certain non-holomorphic functions to them turns them into harmonic weak Maass forms.

The time-evolving block decimation (TEBD) algorithm is a numerical scheme used to simulate one-dimensional quantum many-body systems, characterized by at most nearest-neighbour interactions. It is dubbed Time-evolving Block Decimation because it dynamically identifies the relevant low-dimensional Hilbert subspaces of an exponentially larger original Hilbert space. The algorithm, based on the Matrix Product States formalism, is highly efficient when the amount of entanglement in the system is limited, a requirement fulfilled by a large class of quantum many-body systems in one dimension.

In mathematics, the theory of optimal stopping or early stopping is concerned with the problem of choosing a time to take a particular action, in order to maximise an expected reward or minimise an expected cost. Optimal stopping problems can be found in areas of statistics, economics, and mathematical finance. A key example of an optimal stopping problem is the secretary problem. Optimal stopping problems can often be written in the form of a Bellman equation, and are therefore often solved using dynamic programming.

Diffusion Monte Carlo (DMC) or diffusion quantum Monte Carlo is a quantum Monte Carlo method that uses a Green's function to solve the Schrödinger equation. DMC is potentially numerically exact, meaning that it can find the exact ground state energy within a given error for any quantum system. When actually attempting the calculation, one finds that for bosons, the algorithm scales as a polynomial with the system size, but for fermions, DMC scales exponentially with the system size. This makes exact large-scale DMC simulations for fermions impossible; however, DMC employing a clever approximation known as the fixed-node approximation can still yield very accurate results.

Truncation errors in numerical integration are of two kinds:

In the mathematical field of group theory, an Artin transfer is a certain homomorphism from an arbitrary finite or infinite group to the commutator quotient group of a subgroup of finite index. Originally, such mappings arose as group theoretic counterparts of class extension homomorphisms of abelian extensions of algebraic number fields by applying Artin's reciprocity maps to ideal class groups and analyzing the resulting homomorphisms between quotients of Galois groups. However, independently of number theoretic applications, a partial order on the kernels and targets of Artin transfers has recently turned out to be compatible with parent-descendant relations between finite p-groups, which can be visualized in descendant trees. Therefore, Artin transfers provide a valuable tool for the classification of finite p-groups and for searching and identifying particular groups in descendant trees by looking for patterns defined by the kernels and targets of Artin transfers. These strategies of pattern recognition are useful in purely group theoretic context, as well as for applications in algebraic number theory concerning Galois groups of higher p-class fields and Hilbert p-class field towers.

In numerical analysis, the local linearization (LL) method is a general strategy for designing numerical integrators for differential equations based on a local (piecewise) linearization of the given equation on consecutive time intervals. The numerical integrators are then iteratively defined as the solution of the resulting piecewise linear equation at the end of each consecutive interval. The LL method has been developed for a variety of equations such as the ordinary, delayed, random and stochastic differential equations. The LL integrators are key component in the implementation of inference methods for the estimation of unknown parameters and unobserved variables of differential equations given time series of observations. The LL schemes are ideals to deal with complex models in a variety of fields as neuroscience, finance, forestry management, control engineering, mathematical statistics, etc.