Alfred Habdank Skarbek Korzybski was a Polish-American independent scholar who developed a field called general semantics, which he viewed as both distinct from, and more encompassing than, the field of semantics. He argued that human knowledge of the world is limited both by the human nervous system and by the languages humans have developed, and thus that no one can have direct access to reality: the most we can know is what is filtered through the brain's responses to reality. His best-known dictum is "The map is not the territory".

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Cognitive science is the interdisciplinary, scientific study of the mind and its processes with input from linguistics, psychology, neuroscience, philosophy, computer science/artificial intelligence, and anthropology. It examines the nature, the tasks, and the functions of cognition. Cognitive scientists study intelligence and behavior, with a focus on how nervous systems represent, process, and transform information. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion; to understand these faculties, cognitive scientists borrow from fields such as linguistics, psychology, artificial intelligence, philosophy, neuroscience, and anthropology. The typical analysis of cognitive science spans many levels of organization, from learning and decision to logic and planning; from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

Natural language processing (NLP) is an interdisciplinary subfield of computer science and linguistics. It is primarily concerned with giving computers the ability to support and manipulate human language. It involves processing natural language datasets, such as text corpora or speech corpora, using either rule-based or probabilistic machine learning approaches. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

Semiotics is the systematic study of sign processes (semiosis) and meaning-making. Semiosis is any activity, conduct, or process that involves signs, where a sign is defined as anything that communicates something, usually called a meaning, to the sign's interpreter. The meaning can be intentional, such as a word uttered with a specific meaning; or unintentional, such as a symptom being a sign of a particular medical condition. Signs can also communicate feelings and may communicate internally or through any of the senses: visual, auditory, tactile, olfactory, or gustatory (taste). Contemporary semiotics is a branch of science that studies meaning-making and various types of knowledge.

General semantics is concerned with how events translate to perceptions, how they are further modified by the names and labels we apply to them, and how we might gain a measure of control over our own cognitive, emotional, and behavioral responses. Proponents characterize general semantics as an antidote to certain kinds of delusional thought patterns in which incomplete and possibly warped mental constructs are projected onto the world and treated as reality itself. After partial launches under the names human engineering and humanology, Polish-American originator Alfred Korzybski (1879–1950) fully launched the program as general semantics in 1933 with the publication of Science and Sanity: An Introduction to Non-Aristotelian Systems and General Semantics.

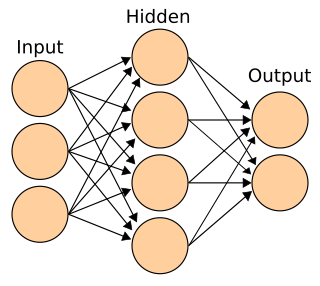

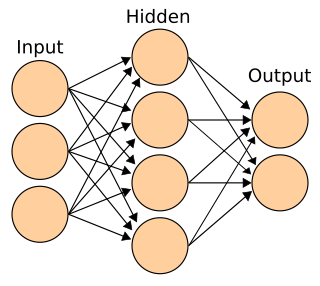

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

Symbolic interactionism is a sociological theory that develops from practical considerations and alludes to humans' particular use of shared language to create common symbols and meanings, for use in both intra- and interpersonal communication. According to Macionis, symbolic interactionism is "a framework for building theory that sees society as the product of everyday interactions of individuals". In other words, it is a frame of reference for understanding how individuals interact with one another to create symbolic worlds and, in turn, how these worlds shape individual behaviors. It is a framework that helps explain how society is preserved and created through repeated interactions between individuals. The interpretation process that occurs between interactions helps create and recreate meaning. It is the shared understanding and interpretation of meaning that affects the interaction between individuals. Individuals act on the premise of a shared understanding of meaning within their social context. Thus, interaction and behavior are framed through the shared meanings attached to objects and concepts. From this view, people live in both natural and symbolic environments.

Samuel Ichiye Hayakawa was a Canadian-born American academic and politician of Japanese ancestry. A professor of English, he served as president of San Francisco State University and then as U.S. Senator from California from 1977 to 1983.

Game semantics is an approach to formal semantics that grounds the concepts of truth or validity on game-theoretic concepts, such as the existence of a winning strategy for a player, somewhat resembling Socratic dialogues or the medieval theory of Obligationes.
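The idea of truth as the existence of a winning strategy can be illustrated with a small sketch for propositional logic. Everything here is an assumption made for illustration: the tuple encoding of formulas, the player name "Eloise", and the function name `eloise_wins` are hypothetical and do not come from any particular formalization. Under the usual evaluation-game rules, the Verifier chooses at a disjunction, the Falsifier chooses at a conjunction, negation swaps roles, and at an atom the current Verifier wins if the atom is true.

```python
from typing import Dict


def eloise_wins(formula, valuation: Dict[str, bool], role: str = "V") -> bool:
    """Return True if Eloise (who starts as Verifier) has a winning strategy.

    Formulas are nested tuples (a hypothetical encoding):
    ("atom", name), ("not", f), ("and", f, g), ("or", f, g).
    `role` is the role Eloise currently holds: "V" (Verifier) or "F" (Falsifier).
    """
    kind = formula[0]
    if kind == "atom":
        # The game ends here: the current Verifier wins exactly when the atom is true.
        return valuation[formula[1]] == (role == "V")
    if kind == "not":
        # Negation swaps the two players' roles.
        return eloise_wins(formula[1], valuation, "F" if role == "V" else "V")
    if kind not in ("and", "or"):
        raise ValueError(f"unknown connective: {kind}")
    outcomes = (
        eloise_wins(formula[1], valuation, role),
        eloise_wins(formula[2], valuation, role),
    )
    # The current Verifier picks at "or"; the current Falsifier picks at "and".
    eloise_chooses = (role == "V") if kind == "or" else (role == "F")
    # Eloise needs one good option when she chooses, and every option to be
    # good when her opponent chooses.
    return any(outcomes) if eloise_chooses else all(outcomes)


p, q = ("atom", "p"), ("atom", "q")
valuation = {"p": True, "q": False}
print(eloise_wins(("or", p, q), valuation))   # True
print(eloise_wins(("and", p, q), valuation))  # False
```

For this fragment the result coincides with ordinary truth-table evaluation; the sketch only shows how "true" can be restated as "the Verifier can always win".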

The study of how language influences thought has a long history in a variety of fields. Two bodies of thought have formed around this debate. One stems from linguistics and is known as the Sapir–Whorf hypothesis. It has a strong and a weak version, which argue for more or less influence of language on thought. The strong version, linguistic determinism, argues that without language there is and can be no thought, while the weak version, linguistic relativity, holds that language has some influence on thought. On the opposing side are 'language of thought' hypotheses (LOTH), which hold that public language is inessential to private thought. LOTH addresses the debate over whether thought is possible without language, which is related to the question of whether language evolved for thought. These ideas are difficult to study because, in every academic field, it is challenging to separate the effects of culture, thought, and language.

Ray Jackendoff is an American linguist. He is professor of philosophy, Seth Merrin Chair in the Humanities and, with Daniel Dennett, co-director of the Center for Cognitive Studies at Tufts University. He has always straddled the boundary between generative linguistics and cognitive linguistics, committed to both the existence of an innate universal grammar and to giving an account of language that is consistent with the current understanding of the human mind and cognition.

Linguistic determinism is the concept that language and its structures limit and determine human knowledge or thought, as well as thought processes such as categorization, memory, and perception. The term implies that people's native languages will affect their thought process and therefore people will have different thought processes based on their mother tongues.

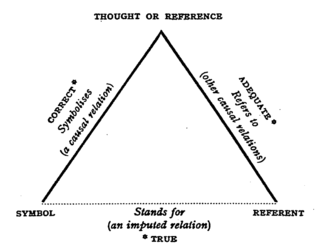

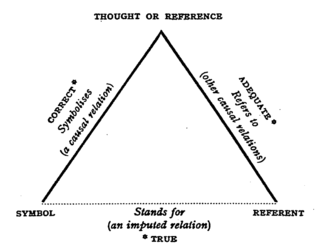

The Meaning of Meaning: A Study of the Influence of Language upon Thought and of the Science of Symbolism (1923) is a book by C. K. Ogden and I. A. Richards. It is accompanied by two supplementary essays by Bronisław Malinowski and F. G. Crookshank. The conception of the book arose during a two-hour conversation between Ogden and Richards held on a staircase in a house next to the Cavendish Laboratories at 11 pm on Armistice Day, 1918.

The structural differential is a physical chart or three-dimensional model illustrating the abstracting processes of the human nervous system. In one form, it appears as a pegboard with tags. Created by Alfred Korzybski, and awarded a U.S. patent on May 26, 1925, it is used as a training device in general semantics. The device is intended to show that human "knowledge" of, or acquaintance with, anything is partial—not total.

Dwight Le Merton Bolinger was an American linguist and Professor of Romance Languages and Literatures at Harvard University. He began his career as the first editor of the "Among the New Words" feature for American Speech. As an expert in Spanish, he was elected president of the American Association of Teachers of Spanish and Portuguese in 1960. He was known for the support and encouragement he gave younger scholars and for his hands-on approach to the analysis of human language. His work touched on a wide range of subjects, including semantics, intonation, phonesthesia, and the politics of language.

Cognitive anthropology is an approach within cultural anthropology and biological anthropology in which scholars seek to explain patterns of shared knowledge, cultural innovation, and transmission over time and space using the methods and theories of the cognitive sciences, often through close collaboration with historians, ethnographers, archaeologists, linguists, musicologists, and other specialists engaged in the description and interpretation of cultural forms. Cognitive anthropology is concerned with what people from different groups know and how that implicit knowledge, in the sense of what they think subconsciously, changes the way people perceive and relate to the world around them.

Levels of Knowing and Existence: Studies in General Semantics is a textbook written by Professor Harry L. Weinberg that provides a broad overview of general semantics in language accessible to the layman.

Formal semantics is the study of grammatical meaning in natural languages using formal tools from logic, mathematics and theoretical computer science. It is an interdisciplinary field, sometimes regarded as a subfield of both linguistics and philosophy of language. It provides accounts of what linguistic expressions mean and how their meanings are composed from the meanings of their parts. The enterprise of formal semantics can be thought of as that of reverse-engineering the semantic components of natural languages' grammars.
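The claim that meanings are composed from the meanings of their parts can be made concrete with a toy sketch. The model below is entirely hypothetical: the three-entity domain, the word extensions in `LEXICON`, and the function name `interpret` are assumptions chosen for illustration, not a standard fragment. Word meanings are modelled as functions, and the meaning of a binary branch is obtained by applying the meaning of its left part to the meaning of its right part.

```python
# Hypothetical toy model: a small domain of entities.
DOMAIN = {"rex", "fido", "tom"}

# Hypothetical lexicon: nouns and verbs denote properties of entities;
# determiners take a noun meaning and then a verb-phrase meaning.
LEXICON = {
    "dog": lambda x: x in {"rex", "fido"},
    "cat": lambda x: x in {"tom"},
    "barks": lambda x: x in {"rex", "fido"},
    "sleeps": lambda x: x in {"rex", "tom"},
    "every": lambda noun: lambda vp: all(vp(x) for x in DOMAIN if noun(x)),
    "some": lambda noun: lambda vp: any(vp(x) for x in DOMAIN if noun(x)),
}


def interpret(tree):
    """Compute the meaning of a syntax tree compositionally.

    A leaf (a string) is looked up in the lexicon; a branch (a pair) is
    interpreted by applying the left part's meaning to the right part's.
    """
    if isinstance(tree, str):
        return LEXICON[tree]
    function_part, argument_part = tree
    return interpret(function_part)(interpret(argument_part))


# "Every dog barks" as the tree ((every dog) barks).
print(interpret((("every", "dog"), "barks")))   # True
print(interpret((("every", "dog"), "sleeps")))  # False
```

The truth value of each sentence is never stated directly; it falls out of the word meanings and the single rule of function application, which is the sense of compositionality described above.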

In the philosophy of artificial intelligence, GOFAI ("good old-fashioned artificial intelligence") is classical symbolic AI, as opposed to other approaches, such as neural networks, situated robotics, narrow symbolic AI or neuro-symbolic AI. The term was coined by philosopher John Haugeland in his 1985 book Artificial Intelligence: The Very Idea.