Systems engineering is an interdisciplinary field of engineering and engineering management that focuses on how to design and manage complex systems over their life cycles. At its core, systems engineering utilizes systems thinking principles to organize this body of knowledge. The individual outcome of such efforts, an engineered system, can be defined as a combination of components that work in synergy to collectively perform a useful function.

Static program analysis is the analysis of computer software that is performed without actually executing programs, in contrast with dynamic analysis, which is analysis performed on programs while they are executing. In most cases the analysis is performed on some version of the source code, and in the other cases, some form of the object code.

In computer science, specifically software engineering and hardware engineering, formal methods are a particular kind of mathematically based technique for the specification, development and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.

Software development is the process of conceiving, specifying, designing, programming, documenting, testing, and bug fixing involved in creating and maintaining applications, frameworks, or other software components. Software development is a process of writing and maintaining the source code, but in a broader sense, it includes all that is involved between the conception of the desired software through to the final manifestation of the software, sometimes in a planned and structured process. Therefore, software development may include research, new development, prototyping, modification, reuse, re-engineering, maintenance, or any other activities that result in software products.

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of intended algorithms underlying a system with respect to a certain formal specification or property, using formal methods of mathematics.

Software verification is a discipline of software engineering whose goal is to assure that software fully satisfies all the expected requirements.

In software project management, software testing, and software engineering, verification and validation (V&V) is the process of checking that a software system meets specifications and that it fulfills its intended purpose. It may also be referred to as software quality control. It is normally the responsibility of software testers as part of the software development lifecycle. In simple terms, software verification is: "Assuming we should build X, does our software achieve its goals without any bugs or gaps?" On the other hand, software validation is: "Was X what we should have built? Does X meet the high level requirements?"

Reliability engineering is a sub-discipline of systems engineering that emphasizes dependability in the lifecycle management of a product. Dependability, or reliability, describes the ability of a system or component to function under stated conditions for a specified period of time. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.

A measurement systems analysis (MSA) is a thorough assessment of a measurement process, and typically includes a specially designed experiment that seeks to identify the components of variation in that measurement process.

Dynamic testing is a term used in software engineering to describe the testing of the dynamic behavior of code. That is, dynamic analysis refers to the examination of the physical response from the system to variables that are not constant and change with time. In dynamic testing the software must actually be compiled and run. It involves working with the software, giving input values and checking if the output is as expected by executing specific test cases which can be done manually or with the use of an automated process. This is in contrast to static testing. Unit tests, integration tests, system tests and acceptance tests utilize dynamic testing. Usability tests involving a mock version made in paper or cardboard can be classified as static tests when taking into account that no program has been executed; or, as dynamic ones when considering the interaction between users and such mock version is effectively the most basic form of a prototype.

The (Stream) X-Machine Testing Methodology is a complete functional testing approach to software- and hardware testing that exploits the scalability of the Stream X-Machine model of computation. Using this methodology, it is likely to identify a finite test-set that exhaustively determines whether the tested system's implementation matches its specification. This goal is achieved by a divide-and-conquer approach, in which the design is decomposed by refinement into a collection of Stream X-Machines, which are implemented as separate modules, then tested bottom-up. At each integration stage, the testing method guarantees that the tested components are correctly integrated.

Imagix 4D is a source code analysis tool from Imagix Corporation, used primarily for understanding, documenting, and evolving existing C, C++ and Java software.

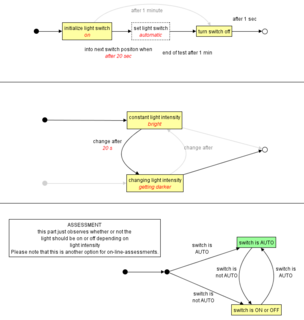

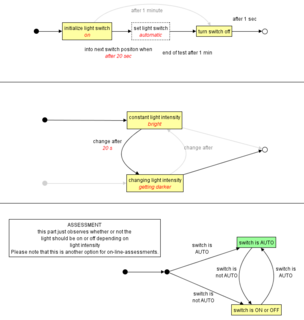

TPT is a systematic test methodology for the automated software test and verification of embedded control systems, cyber-physical systems, and dataflow programs. TPT is specialised on testing and validation of embedded systems whose inputs and outputs can be represented as signals and is a dedicated method for testing continuous behaviour of systems. Most control systems belong to this system class. The outstanding characteristic of control systems is the fact that they interact closely interlinked with a real world environment. Controllers need to observe their environment and react correspondingly to its behaviour. The system works in an interactional cycle with its environment and is subject to temporal constraints. Testing these systems is to stimulate and to check the timing behaviour. Traditional functional testing methods use scripts – TPT uses model-based testing.

Random testing is a black-box software testing technique where programs are tested by generating random, independent inputs. Results of the output are compared against software specifications to verify that the test output is pass or fail. In case of absence of specifications the exceptions of the language are used which means if an exception arises during test execution then it means there is a fault in the program, it is also used as way to avoid biased testing.

Protocol engineering is the application of systematic methods to the development of communication protocols. It uses many of the principles of software engineering, but it is specific to the development of distributed systems.