In computer science, code coverage is a percentage measure of the degree to which the source code of a program is executed when a particular test suite is run. A program with high test coverage has more of its source code executed during testing, which suggests it has a lower chance of containing undetected software bugs compared to a program with low test coverage. Many different metrics can be used to calculate test coverage. Some of the most basic are the percentage of program subroutines and the percentage of program statements called during execution of the test suite.

Software testing is the act of examining the artifacts and the behavior of the software under test by validation and verification. Software testing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Test techniques include, but are not necessarily limited to:

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some attributes of an executable computer program. Common requirements are to minimize a program's execution time, memory footprint, storage size, and power consumption.

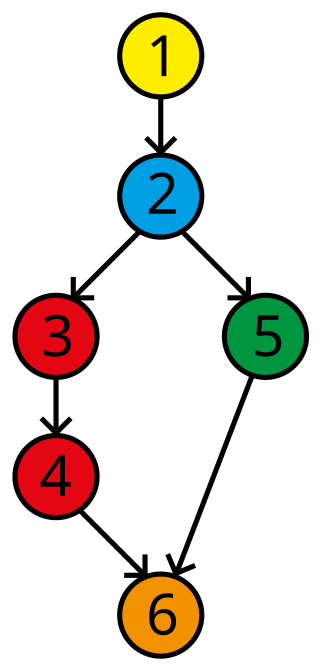

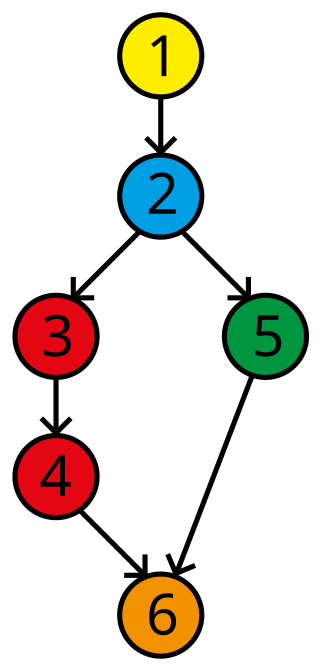

In computer science, control flow is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an imperative programming language from a declarative programming language.

In compiler construction, a basic block is a straight-line code sequence with no branches in except to the entry and no branches out except at the exit. This restricted form makes a basic block highly amenable to analysis. Compilers usually decompose programs into their basic blocks as a first step in the analysis process. Basic blocks form the vertices or nodes in a control-flow graph.

White-box testing is a method of software testing that tests internal structures or workings of an application, as opposed to its functionality. In white-box testing, an internal perspective of the system is used to design test cases. The tester chooses inputs to exercise paths through the code and determine the expected outputs. This is analogous to testing nodes in a circuit, e.g. in-circuit testing (ICT). White-box testing can be applied at the unit, integration and system levels of the software testing process. Although traditional testers tended to think of white-box testing as being done at the unit level, it is used for integration and system testing more frequently today. It can test paths within a unit, paths between units during integration, and between subsystems during a system–level test. Though this method of test design can uncover many errors or problems, it has the potential to miss unimplemented parts of the specification or missing requirements. Where white-box testing is design-driven, that is, driven exclusively by agreed specifications of how each component of software is required to behave, white-box test techniques can accomplish assessment for unimplemented or missing requirements.

Cyclomatic complexity is a software metric used to indicate the complexity of a program. It is a quantitative measure of the number of linearly independent paths through a program's source code. It was developed by Thomas J. McCabe, Sr. in 1976.

Dynamic program analysis is analysis of computer software that involves executing the program in question. Dynamic program analysis includes familiar techniques from software engineering such as unit testing, debugging, and measuring code coverage, but also includes lesser-known techniques like program slicing and invariant inference. Dynamic program analysis is widely applied in security in the form of runtime memory error detection, fuzzing, dynamic symbolic execution, and taint tracking.

A decision-to-decision path, or DD-path, is a path of execution between two decisions. More recent versions of the concept also include the decisions themselves in their own DD-paths.

LDRA Testbed is core static and dynamic analysis engines for both host and embedded software. LDRA Testbed is made by Liverpool Data Research Associates (LDRA). LDRA Testbed provides the means to enforce compliance with coding standards such as MISRA, JSF++ AV, CERT C, CWE and provides visibility of software flaws that might typically pass through the standard build and test process to become latent problems. In addition, test effectiveness feedback is provided through structural coverage analysis reporting facilities, which support the requirements of the DO-178B standard up to and including Level-A.

Control tables are tables that control the control flow or play a major part in program control. There are no rigid rules about the structure or content of a control table—its qualifying attribute is its ability to direct control flow in some way through "execution" by a processor or interpreter. The design of such tables is sometimes referred to as table-driven design. In some cases, control tables can be specific implementations of finite-state-machine-based automata-based programming. If there are several hierarchical levels of control table they may behave in a manner equivalent to UML state machines

Professor Michael A. Hennell is a British computer scientist who has made leading contributions in the field of software testing.

Liverpool Data Research Associates (LDRA) is a provider of software analysis, and test and requirements traceability tools for the Public and Private sectors and a pioneer in static and dynamic software analysis.

High performance computing applications run on massively parallel supercomputers consist of concurrent programs designed using multi-threaded, multi-process models. The applications may consist of various constructs with varying degree of parallelism. Although high performance concurrent programs use similar design patterns, models and principles as that of sequential programs, unlike sequential programs, they typically demonstrate non-deterministic behavior. The probability of bugs increases with the number of interactions between the various parallel constructs. Race conditions, data races, deadlocks, missed signals and live lock are common error types.

Tcov is a source code coverage analysis and statement-by-statement profiling tool for software written in Fortran, C and C++. Tcov generates exact counts of the number of times each statement in a program is executed and annotates source code to add instrumentation. It is a standard utility, provided free of cost with Sun Studio software.

Gcov is a source code coverage analysis and statement-by-statement profiling tool. Gcov generates exact counts of the number of times each statement in a program is executed and annotates source code to add instrumentation. Gcov comes as a standard utility with the GNU Compiler Collection (GCC) suite.

Parasoft C/C++test is an integrated set of tools for testing C and C++ source code that software developers use to analyze, test, find defects, and measure the quality and security of their applications. It supports software development practices that are part of development testing, including static code analysis, dynamic code analysis, unit test case generation and execution, code coverage analysis, regression testing, runtime error detection, requirements traceability, and code review. It's a commercial tool that supports operation on Linux, Windows, and Solaris platforms as well as support for on-target embedded testing and cross compilers.

Java code coverage tools are of two types: first, tools that add statements to the Java source code and require its recompilation. Second, tools that instrument the bytecode, either before or during execution. The goal is to find out which parts of the code are tested by registering the lines of code executed when running a test.

This article discusses a set of tactics useful in software testing. It is intended as a comprehensive list of tactical approaches to Software Quality Assurance (more widely colloquially known as Quality Assurance and general application of the test method.

This glossary of computer science is a list of definitions of terms and concepts used in computer science, its sub-disciplines, and related fields, including terms relevant to software, data science, and computer programming.