Related Research Articles

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

A translation memory (TM) is a database that stores "segments", which can be sentences, paragraphs or sentence-like units that have previously been translated, in order to aid human translators. The translation memory stores the source text and its corresponding translation in language pairs called "translation units". Individual words are handled by terminology bases and are not within the domain of TM.
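As a rough illustration of the translation-unit idea, the sketch below stores source/target segment pairs and looks them up. The class name `TranslationMemory`, the sample sentences, and the fuzzy fallback with a 0.8 similarity cutoff are all assumptions made for this example; real TM tools use their own matching schemes.

```python
import difflib


class TranslationMemory:
    """Toy translation memory: maps source segments to target segments."""

    def __init__(self):
        self._units = {}  # source segment -> target segment (one translation unit)

    def add(self, source, target):
        self._units[source] = target

    def lookup(self, segment, cutoff=0.8):
        """Return the stored translation for an exact or near match, else None."""
        if segment in self._units:
            return self._units[segment]
        # Fuzzy fallback (an assumption of this sketch): closest stored segment.
        close = difflib.get_close_matches(
            segment, list(self._units), n=1, cutoff=cutoff
        )
        return self._units[close[0]] if close else None


tm = TranslationMemory()
tm.add("The device comprises a sensor.", "Das Gerät umfasst einen Sensor.")

exact = tm.lookup("The device comprises a sensor.")
fuzzy = tm.lookup("The device comprises a sensors.")
missing = tm.lookup("Completely unrelated text")
```

The fuzzy fallback reflects how a previously translated segment can still help when the new segment differs only slightly; segments with no close match return `None` and would be translated from scratch.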

In linguistics and natural language processing, a corpus or text corpus is a dataset of language resources, either natively digital or older and digitized, which may be annotated or unannotated.

The National Center for Biotechnology Information (NCBI) is part of the United States National Library of Medicine (NLM), a branch of the National Institutes of Health (NIH). It is approved and funded by the government of the United States. The NCBI is located in Bethesda, Maryland, and was founded in 1988 through legislation sponsored by US Congressman Claude Pepper.

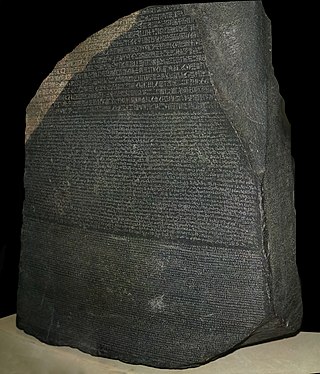

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered.

EUR-Lex is the official website for European Union law and other public documents of the European Union (EU), published in the 24 official languages of the EU. The Official Journal (OJ) of the European Union is also published on EUR-Lex. Users can access EUR-Lex free of charge and can also register for a free account, which offers extra features.

Machine translation can use a method based on dictionary entries, which means that the words are translated as a dictionary does, word by word, usually without much correlation of meaning between them. Dictionary lookups may be done with or without morphological analysis or lemmatisation. While this approach to machine translation is probably the least sophisticated, dictionary-based machine translation is ideally suited to the translation of long lists of phrases on the subsentential level, e.g. inventories or simple catalogs of products and services.
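A minimal sketch of the word-by-word approach, assuming a tiny hypothetical English-to-German dictionary and a deliberately crude lemmatiser (both invented for this example):

```python
# Hypothetical toy dictionary keyed by lemma (an assumption of this sketch).
DICTIONARY = {"red": "rot", "chair": "Stuhl", "table": "Tisch", "small": "klein"}


def lemmatise(word):
    """Crude lemmatiser: lowercase, and strip a plural 's' if that yields an entry."""
    word = word.lower()
    if word not in DICTIONARY and word.endswith("s") and word[:-1] in DICTIONARY:
        return word[:-1]
    return word


def translate_phrase(phrase):
    """Translate each word independently; unknown words pass through unchanged."""
    return " ".join(DICTIONARY.get(lemmatise(w), w) for w in phrase.split())


catalog = ["red chairs", "small oak table"]
translated = [translate_phrase(item) for item in catalog]
```

Because each word is looked up in isolation, the output ignores agreement and word order, which is why the method fits short catalog-style phrases better than full sentences.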

Latent semantic analysis (LSA) is a technique in natural language processing, in particular distributional semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the documents and terms. LSA assumes that words that are close in meaning will occur in similar pieces of text. A matrix containing word counts per document is constructed from a large piece of text and a mathematical technique called singular value decomposition (SVD) is used to reduce the number of rows while preserving the similarity structure among columns. Documents are then compared by cosine similarity between any two columns. Values close to 1 represent very similar documents while values close to 0 represent very dissimilar documents.
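The procedure can be sketched on a toy example. The term list, the counts, and the choice of two retained dimensions are assumptions made for illustration, and NumPy is assumed to be available; this is a sketch of the general idea rather than a reference implementation.

```python
import numpy as np

# Toy term-document count matrix: rows are terms, columns are documents.
terms = ["patent", "claim", "search", "query", "index"]
counts = np.array([
    [2, 1, 0, 0],  # patent
    [1, 2, 0, 0],  # claim
    [0, 0, 2, 1],  # search
    [0, 0, 1, 2],  # query
    [0, 0, 1, 1],  # index
], dtype=float)


def lsa_document_vectors(matrix, k):
    """Project documents into a k-dimensional concept space via truncated SVD."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    # Keep the k largest singular values; each column is one document.
    return np.diag(s[:k]) @ vt[:k, :]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


doc_vectors = lsa_document_vectors(counts, k=2)
sim_same_topic = cosine(doc_vectors[:, 0], doc_vectors[:, 1])
sim_cross_topic = cosine(doc_vectors[:, 0], doc_vectors[:, 3])
```

In this toy matrix the first two documents share vocabulary and so do the last two, so `sim_same_topic` comes out close to 1 and `sim_cross_topic` close to 0.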

The Text REtrieval Conference (TREC) is an ongoing series of workshops focusing on a list of different information retrieval (IR) research areas, or tracks. It is co-sponsored by the National Institute of Standards and Technology (NIST) and the Intelligence Advanced Research Projects Activity, and began in 1992 as part of the TIPSTER Text program. Its purpose is to support and encourage research within the information retrieval community by providing the infrastructure necessary for large-scale evaluation of text retrieval methodologies and to increase the speed of lab-to-product transfer of technology.

Office Open XML is a zipped, XML-based file format developed by Microsoft for representing spreadsheets, charts, presentations and word processing documents. Ecma International standardized the initial version as ECMA-376. ISO and IEC standardized later versions as ISO/IEC 29500.

Isearch is open-source text retrieval software first developed in 1994 by Nassib Nassar as part of the Isite Z39.50 information framework. The project started at the Clearinghouse for Networked Information Discovery and Retrieval (CNIDR) of the North Carolina supercomputing center MCNC and was funded by the National Science Foundation to follow in the track of WAIS and develop prototype systems for distributed information networks encompassing Internet applications, library catalogs and other information resources.

Search engine indexing is the collecting, parsing, and storing of data to facilitate fast and accurate information retrieval. Index design incorporates interdisciplinary concepts from linguistics, cognitive psychology, mathematics, informatics, and computer science. An alternate name for the process, in the context of search engines designed to find web pages on the Internet, is web indexing.
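One common structure for this purpose is the inverted index, which maps each term to the documents that contain it. The text above does not name a specific structure, so the sketch below, including the function names, the tokenizer, and the sample documents, is an illustrative assumption.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Parse text into lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Collect and store postings: term -> set of document ids."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (boolean AND)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


docs = {
    1: "Patent search and retrieval",
    2: "Full-text search engines",
    3: "Patent claims",
}
index = build_index(docs)
hits = search(index, "patent search")
```

Looking terms up in the index avoids scanning every document at query time, which is the speed benefit the indexing step is meant to provide.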

Enterprise search is the practice of making content from multiple enterprise-type sources, such as databases and intranets, searchable to a defined audience.

The Hamshahri Corpus is a sizable Persian corpus based on the Iranian newspaper Hamshahri, one of the first online Persian-language newspapers in Iran. It was initially collected and compiled by Ehsan Darrudi at the DBRG Group of the University of Tehran. Later, a team headed by Abolfazl AleAhmad built on this corpus and created the first Persian text collection suitable for information retrieval evaluation tasks.

The Information Retrieval Facility (IRF), founded in 2006 and located in Vienna, Austria, was a research platform for networking and collaboration among professionals in the field of information retrieval. It ceased operations in 2012.

A concept search is an automated information retrieval method that is used to search electronically stored unstructured text for information that is conceptually similar to the information provided in a search query. In other words, the ideas expressed in the information retrieved in response to a concept search query are relevant to the ideas contained in the text of the query.

XQuery is a query and functional programming language that queries and transforms collections of structured and unstructured data, usually in the form of XML or text and, with vendor-specific extensions, other data formats. The language is developed by the XML Query working group of the W3C. The work is closely coordinated with the development of XSLT by the XSL Working Group; the two groups share responsibility for XPath, which is a subset of XQuery.

Reed Technology and Information Services Inc. is a company that provides electronic content management services, engaging in data capture and conversion, preservation, analysis, e-submission and publication for corporate, legal and government clients. The company was founded in 1961 and is based in Horsham, Pennsylvania, with an additional office in Alexandria, Virginia.

PATENTSCOPE is a global patent database and search system developed and maintained by the World Intellectual Property Organization. It provides free and open access to a vast collection of international patent documents, including patent applications, granted patents, and related technical information.
