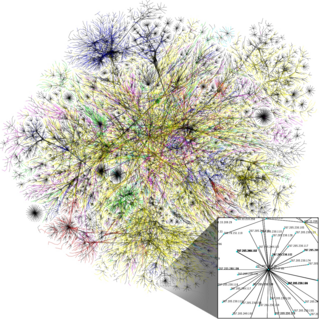

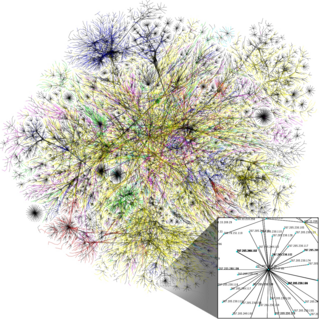

The Internet is the global system of interconnected computer networks that uses the Internet protocol suite (TCP/IP) to communicate between networks and devices. It is a network of networks that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries a vast range of information resources and services, such as the interlinked hypertext documents and applications of the World Wide Web (WWW), electronic mail, telephony, and file sharing.

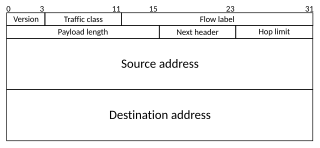

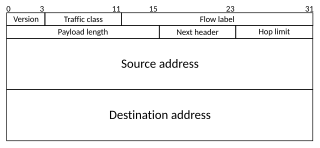

Internet Protocol version 6 (IPv6) is the most recent version of the Internet Protocol (IP), the communications protocol that provides an identification and location system for computers on networks and routes traffic across the Internet. IPv6 was developed by the Internet Engineering Task Force (IETF) to deal with the long-anticipated problem of IPv4 address exhaustion, and was intended to replace IPv4. In December 1998, IPv6 became a Draft Standard for the IETF, which subsequently ratified it as an Internet Standard on 14 July 2017.

In computing, a denial-of-service attack is a cyber-attack in which the perpetrator seeks to make a machine or network resource unavailable to its intended users by temporarily or indefinitely disrupting services of a host connected to a network. Denial of service is typically accomplished by flooding the targeted machine or resource with superfluous requests in an attempt to overload systems and prevent some or all legitimate requests from being fulfilled.

Network address translation (NAT) is a method of mapping an IP address space into another by modifying network address information in the IP header of packets while they are in transit across a traffic routing device. The technique was originally used to bypass the need to assign a new address to every host when a network was moved, or when the upstream Internet service provider was replaced, but could not route the network's address space. It has become a popular and essential tool in conserving global address space in the face of IPv4 address exhaustion. One Internet-routable IP address of a NAT gateway can be used for an entire private network.

In computer networking, a proxy server is a server application that acts as an intermediary between a client requesting a resource and the server providing that resource. It improves privacy, security, and performance in the process.

SOCKS is an Internet protocol that exchanges network packets between a client and server through a proxy server. SOCKS5 optionally provides authentication so only authorized users may access a server. Practically, a SOCKS server proxies TCP connections to an arbitrary IP address, and provides a means for UDP packets to be forwarded.

Network utilities are software utilities designed to analyze and configure various aspects of computer networks. The majority of them originated on Unix systems, but several later ports to other operating systems exist.

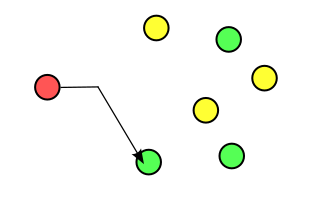

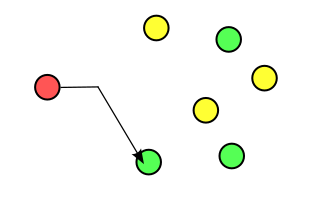

Anycast is a network addressing and routing methodology in which a single IP address is shared by devices in multiple locations. Routers direct packets addressed to this destination to the location nearest the sender, using their normal decision-making algorithms, typically the lowest number of BGP network hops. Anycast routing is widely used by content delivery networks such as web and name servers, to bring their content closer to end users.

Deep packet inspection (DPI) is a type of data processing that inspects in detail the data being sent over a computer network, and may take actions such as alerting, blocking, re-routing, or logging it accordingly. Deep packet inspection is often used for baselining application behavior, analyzing network usage, troubleshooting network performance, ensuring that data is in the correct format, checking for malicious code, eavesdropping, and internet censorship, among other purposes. There are multiple headers for IP packets; network equipment only needs to use the first of these for normal operation, but use of the second header is normally considered to be shallow packet inspection despite this definition.

In computer networks, a tunneling protocol is a communication protocol which allows for the movement of data from one network to another. It can, for example, allow private network communications to be sent across a public network, or for one network protocol to be carried over an incompatible network, through a process called encapsulation.

Network monitoring is the use of a system that constantly monitors a computer network for slow or failing components and that notifies the network administrator in case of outages or other trouble. Network monitoring is part of network management.

IP multicast is a method of sending Internet Protocol (IP) datagrams to a group of interested receivers in a single transmission. It is the IP-specific form of multicast and is used for streaming media and other network applications. It uses specially reserved multicast address blocks in IPv4 and IPv6.

The AMPRNet or Network 44 is used in amateur radio for packet radio and digital communications between computer networks managed by amateur radio operators. Like other amateur radio frequency allocations, an IP range of 44.0.0.0/8 was provided in 1981 for Amateur Radio Digital Communications and self-administered by radio amateurs. In 2001, undocumented and dual-use of 44.0.0.0/8 as a network telescope began, recording the spread of the Code Red II worm in July 2001. In mid-2019, part of IPv4 range was sold off for conventional use, due to IPv4 address exhaustion.

Packet Clearing House (PCH) is the international nonprofit organization responsible for providing operational support and security to critical internet infrastructure, including Internet exchange points and the core of the domain name system. The organization also works in the areas of cybersecurity coordination, regulatory policy and Internet governance.

In computer networks, network traffic measurement is the process of measuring the amount and type of traffic on a particular network. This is especially important with regard to effective bandwidth management.

The following outline is provided as an overview of and topical guide to the Internet.

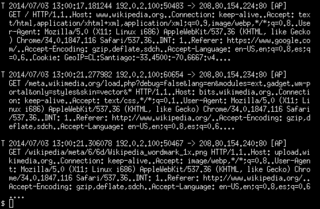

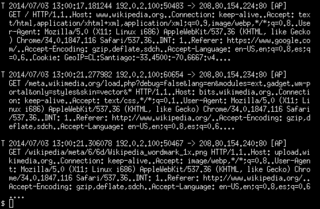

Network forensics is a sub-branch of digital forensics relating to the monitoring and analysis of computer network traffic for the purposes of information gathering, legal evidence, or intrusion detection. Unlike other areas of digital forensics, network investigations deal with volatile and dynamic information. Network traffic is transmitted and then lost, so network forensics is often a pro-active investigation.

ngrep is a network packet analyzer written by Jordan Ritter. It has a command-line interface, and relies upon the pcap library and the GNU regex library.

QUIC is a general-purpose transport layer network protocol initially designed by Jim Roskind at Google, implemented, and deployed in 2012, announced publicly in 2013 as experimentation broadened, and described at an IETF meeting. QUIC is used by more than half of all connections from the Chrome web browser to Google's servers. Microsoft Edge, Firefox and Safari support it.

RIPE Atlas is a global, open, distributed Internet measurement platform, consisting of thousands of measurement devices that measure Internet connectivity in real time.