Quantum entanglement is the phenomenon of a group of particles being generated, interacting, or sharing spatial proximity in such a way that the quantum state of each particle of the group cannot be described independently of the state of the others, including when the particles are separated by a large distance. The topic of quantum entanglement is at the heart of the disparity between classical and quantum physics: entanglement is a primary feature of quantum mechanics not present in classical mechanics.

The Game of Life, also known as Conway's Game of Life or simply Life, is a cellular automaton devised by the British mathematician John Horton Conway in 1970. It is a zero-player game, meaning that its evolution is determined by its initial state, requiring no further input. One interacts with the Game of Life by creating an initial configuration and observing how it evolves. It is Turing complete and can simulate a universal constructor or any other Turing machine.
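The way an initial configuration alone determines the evolution can be illustrated with a minimal sketch. It assumes the standard rules, which are not spelled out above: a dead cell with exactly three live neighbours becomes live, and a live cell survives with two or three live neighbours. The function name `life_step` and the set-of-coordinates representation are illustrative choices.

```python
from collections import Counter

def life_step(live):
    """Advance a set of live (x, y) cells by one generation on an unbounded grid."""
    # For every cell adjacent to a live cell, count its live neighbours.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3 (assumed standard rules).
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

# A "blinker": three cells in a row, which oscillates with period 2.
blinker = {(0, 1), (1, 1), (2, 1)}
after_one = life_step(blinker)
after_two = life_step(after_one)
```

No input is supplied after the initial set `blinker` is chosen; each generation is computed only from the previous one.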

A cellular automaton is a discrete model of computation studied in automata theory. Cellular automata are also called cellular spaces, tessellation automata, homogeneous structures, cellular structures, tessellation structures, and iterative arrays. Cellular automata have found application in various areas, including physics, theoretical biology and microstructure modeling.

Quantum decoherence is the loss of quantum coherence. Quantum decoherence has been studied to understand how quantum systems convert to systems which can be explained by classical mechanics. Originating in attempts to extend the understanding of quantum mechanics, the theory has developed in several directions, and experimental studies have confirmed some of the key issues. Quantum computing relies on quantum coherence and is one of the primary practical applications of the concept.

Quantum turbulence is the name given to the turbulent flow – the chaotic motion of a fluid at high flow rates – of quantum fluids, such as superfluids. The idea that a form of turbulence might be possible in a superfluid via the quantized vortex lines was first suggested by Richard Feynman. The dynamics of quantum fluids are governed by quantum mechanics, rather than by the classical physics that governs classical (ordinary) fluids. Some examples of quantum fluids include superfluid helium, Bose–Einstein condensates (BECs), polariton condensates, and nuclear pasta theorized to exist inside neutron stars. Quantum fluids exist at temperatures below the critical temperature at which Bose–Einstein condensation takes place.

In classical electromagnetism, polarization density is the vector field that expresses the volumetric density of permanent or induced electric dipole moments in a dielectric material. When a dielectric is placed in an external electric field, its molecules gain electric dipole moment and the dielectric is said to be polarized.

Quantum tomography or quantum state tomography is the process by which a quantum state is reconstructed using measurements on an ensemble of identical quantum states. The source of these states may be any device or system which prepares quantum states either consistently into quantum pure states or otherwise into general mixed states. To be able to uniquely identify the state, the measurements must be tomographically complete. That is, the measured operators must form an operator basis on the Hilbert space of the system, providing all the information about the state. Such a set of observations is sometimes called a quorum. The term tomography was first used in the quantum physics literature in a 1993 paper introducing experimental optical homodyne tomography.

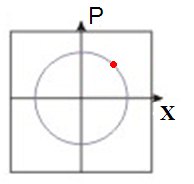

The Peres–Horodecki criterion is a necessary condition for the joint density matrix ρ of two quantum mechanical systems A and B to be separable. It is also called the PPT criterion, for positive partial transpose. In the 2×2 and 2×3 dimensional cases the condition is also sufficient. It is used to decide the separability of mixed states, where the Schmidt decomposition does not apply. The theorem was discovered in 1996 by Asher Peres and the Horodecki family.

In quantum mechanics, separable states are multipartite quantum states that can be written as a convex combination of product states. Product states are multipartite quantum states that can be written as a tensor product of states in each space. The physical intuition behind these definitions is that product states have no correlation between the different degrees of freedom, while separable states might have correlations, but all such correlations can be explained as due to a classical random variable, as opposed to being due to entanglement.
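For the bipartite case, these two definitions can be written out as follows. The subsystem labels A and B and the index k are notation assumed here for illustration.

$$
\rho_{\text{product}} = \rho^{A} \otimes \rho^{B},
\qquad
\rho_{\text{separable}} = \sum_{k} p_{k}\, \rho_{k}^{A} \otimes \rho_{k}^{B},
\quad p_{k} \ge 0,\ \sum_{k} p_{k} = 1 .
$$

The weights p_k play the role of the classical random variable: they form a probability distribution over which product state is prepared.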

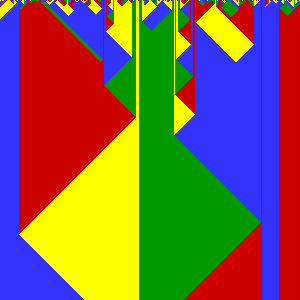

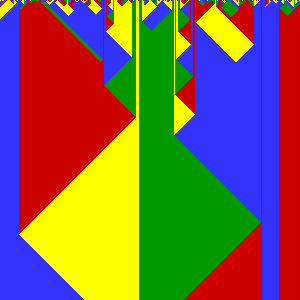

Rule 30 is an elementary cellular automaton introduced by Stephen Wolfram in 1983. Using Wolfram's classification scheme, Rule 30 is a Class III rule, displaying aperiodic, chaotic behaviour.

Wolfram code is a widely used numbering system for one-dimensional cellular automaton rules, introduced by Stephen Wolfram in a 1983 paper and popularized in his book A New Kind of Science.
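The numbering can be illustrated with a short sketch for elementary (two-state, nearest-neighbour) rules. Bit k of the rule number is taken as the output for the neighbourhood whose three cells, read as a binary number, equal k. The function names and the periodic boundary are assumptions made for the example.

```python
def rule_table(rule_number):
    """Map each 3-cell neighbourhood (left, centre, right) to its next state."""
    # Bit k of the rule number is the output for the neighbourhood whose
    # cells, read as a 3-bit binary number, equal k.
    return {
        ((k >> 2) & 1, (k >> 1) & 1, k & 1): (rule_number >> k) & 1
        for k in range(8)
    }

def step(cells, rule_number):
    """Apply one synchronous update with periodic (wrap-around) boundaries."""
    table = rule_table(rule_number)
    n = len(cells)
    return [table[(cells[i - 1], cells[i], cells[(i + 1) % n])] for i in range(n)]

# One step of Rule 30 from a single live cell.
row = [0, 0, 0, 1, 0, 0, 0]
next_row = step(row, 30)  # [0, 0, 1, 1, 1, 0, 0]
```

Because 30 is 00011110 in binary, the neighbourhoods 100, 011, 010 and 001 map to 1 and all others map to 0.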

A cyclic cellular automaton is a kind of cellular automaton rule developed by David Griffeath and studied by several other cellular automaton researchers. In this system, each cell remains unchanged until some neighboring cell has a modular value exactly one unit larger than that of the cell itself, at which point it copies its neighbor's value. One-dimensional cyclic cellular automata can be interpreted as systems of interacting particles, while cyclic cellular automata in higher dimensions exhibit complex spiraling behavior.
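The update rule described above can be sketched in one dimension as follows. The function name `cyclic_step`, the nearest-neighbour neighbourhood, and the periodic boundary are assumptions for the example, not details given in the text.

```python
def cyclic_step(cells, num_states):
    """One synchronous update of a 1D cyclic cellular automaton (periodic ends)."""
    n = len(cells)
    new_cells = []
    for i, value in enumerate(cells):
        successor = (value + 1) % num_states
        neighbours = (cells[i - 1], cells[(i + 1) % n])
        # A cell changes only if some neighbour holds the value exactly one
        # unit larger (modulo num_states); it then copies that value.
        new_cells.append(successor if successor in neighbours else value)
    return new_cells

row = [0, 1, 2, 0]
next_row = cyclic_step(row, 3)  # [1, 2, 0, 0]
```

In this run the last cell stays at 0 because neither of its neighbours holds the value 1.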

Rule 184 is a one-dimensional binary cellular automaton rule, notable for solving the majority problem as well as for its ability to simultaneously describe several seemingly quite different particle systems.

In the mathematical study of cellular automata, Rule 90 is an elementary cellular automaton based on the exclusive or function. It consists of a one-dimensional array of cells, each of which can hold either a 0 or a 1 value. In each time step all values are simultaneously replaced by the XOR of their two neighboring values. Martin, Odlyzko & Wolfram (1984) call it "the simplest non-trivial cellular automaton", and it is described extensively in Stephen Wolfram's 2002 book A New Kind of Science.
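A minimal sketch of this update, assuming a finite row with periodic (wrap-around) ends, which the description above does not specify:

```python
def rule90_step(cells):
    """Replace every cell by the XOR of its two neighbours (periodic ends)."""
    n = len(cells)
    return [cells[i - 1] ^ cells[(i + 1) % n] for i in range(n)]

# Evolve a single live cell for three time steps.
row = [0, 0, 0, 1, 0, 0, 0]
history = [row]
for _ in range(3):
    history.append(rule90_step(history[-1]))
# history[1] == [0, 0, 1, 0, 1, 0, 0]
# history[2] == [0, 1, 0, 0, 0, 1, 0]
# history[3] == [1, 0, 1, 0, 1, 0, 1]
```

Each new row is computed entirely from the previous one, so all cells are updated simultaneously.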

In applied mathematics, the numerical sign problem is the problem of numerically evaluating the integral of a highly oscillatory function of a large number of variables. Numerical methods fail because of the near-cancellation of the positive and negative contributions to the integral. Each has to be integrated to very high precision in order for their difference to be obtained with useful accuracy.

The Curtis–Hedlund–Lyndon theorem is a mathematical characterization of cellular automata in terms of their symbolic dynamics. It is named after Morton L. Curtis, Gustav A. Hedlund, and Roger Lyndon; in his 1969 paper stating the theorem, Hedlund credited Curtis and Lyndon as co-discoverers. It has been called "one of the fundamental results in symbolic dynamics".

In quantum mechanics, specifically time-dependent density functional theory, the Runge–Gross theorem shows that for a many-body system evolving from a given initial wavefunction, there exists a one-to-one mapping between the potential in which the system evolves and the density of the system. The potentials under which the theorem holds are defined up to an additive purely time-dependent function: such functions only change the phase of the wavefunction and leave the density invariant. Most often the RG theorem is applied to molecular systems where the electronic density, ρ(r,t), changes in response to an external scalar potential, v(r,t), such as a time-varying electric field.

A reversible cellular automaton is a cellular automaton in which every configuration has a unique predecessor. That is, it is a regular grid of cells, each containing a state drawn from a finite set of states, with a rule for updating all cells simultaneously based on the states of their neighbors, such that the previous state of any cell before an update can be determined uniquely from the updated states of all the cells. The time-reversed dynamics of a reversible cellular automaton can always be described by another cellular automaton rule, possibly on a much larger neighborhood.

In quantum mechanics, negativity is a measure of quantum entanglement which is easy to compute. It is a measure deriving from the PPT criterion for separability. It has been shown to be an entanglement monotone and hence a proper measure of entanglement.
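The ease of computation can be seen from the commonly used definition, given here as a sketch. The symbols are assumed notation: ρ^{T_A} is the partial transpose of the state ρ with respect to subsystem A, ‖·‖₁ is the trace norm, and λ_i are the eigenvalues of ρ^{T_A}.

$$
\mathcal{N}(\rho) = \frac{\lVert \rho^{T_A} \rVert_{1} - 1}{2} = \sum_{\lambda_i < 0} \lvert \lambda_i \rvert .
$$

A state with positive partial transpose has no negative eigenvalues in this sum, so its negativity is zero.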

The entanglement of formation is a quantity that measures the entanglement of a bipartite quantum state.