Linear programming is a method to achieve the best outcome in a mathematical model whose requirements are represented by linear relationships. Linear programming is a special case of mathematical programming.

In mathematical optimization, Dantzig's simplex algorithm is a popular algorithm for linear programming.

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor.

An integer programming problem is a mathematical optimization or feasibility program in which some or all of the variables are restricted to be integers. In many settings the term refers to integer linear programming (ILP), in which the objective function and the constraints are linear.

Combinatorial optimization is a subfield of mathematical optimization that is related to operations research, algorithm theory, and computational complexity theory. It has important applications in several fields, including artificial intelligence, machine learning, auction theory, software engineering, applied mathematics and theoretical computer science.

In graph theory, a vertex cover of a graph is a set of vertices that includes at least one endpoint of every edge of the graph. In computer science, the problem of finding a minimum vertex cover is a classical optimization problem. It is NP-hard, so it cannot be solved by a polynomial-time algorithm if P ≠ NP. Moreover, it is hard to approximate - it cannot be approximated up to a factor smaller than 2 if the unique games conjecture is true. On the other hand, it has several simple 2-factor approximations. It is a typical example of an NP-hard optimization problem that has an approximation algorithm. Its decision version, the vertex cover problem, was one of Karp's 21 NP-complete problems and is therefore a classical NP-complete problem in computational complexity theory. Furthermore, the vertex cover problem is fixed-parameter tractable and a central problem in parameterized complexity theory.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In computer science, a polynomial-time approximation scheme (PTAS) is a type of approximation algorithm for optimization problems.

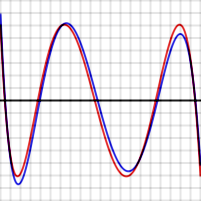

In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. Note that what is meant by best and simpler will depend on the application.

In statistics, ordinary least squares (OLS) is a type of linear least squares method for estimating the unknown parameters in a linear regression model. OLS chooses the parameters of a linear function of a set of explanatory variables by the principle of least squares: minimizing the sum of the squares of the differences between the observed dependent variable in the given dataset and those predicted by the linear function of the independent variable.

In computational complexity theory, the unique games conjecture is a conjecture made by Subhash Khot in 2002. The conjecture postulates that the problem of determining the approximate value of a certain type of game, known as a unique game, has NP-hard computational complexity. It has broad applications in the theory of hardness of approximation. If the unique games conjecture is true and P ≠ NP, then for many important problems it is not only impossible to get an exact solution in polynomial time, but also impossible to get a good polynomial-time approximation. The problems for which such an inapproximability result would hold include constraint satisfaction problems, which crop up in a wide variety of disciplines.

In mathematical optimization, the ellipsoid method is an iterative method for minimizing convex functions. When specialized to solving feasible linear optimization problems with rational data, the ellipsoid method is an algorithm which finds an optimal solution in a number of steps that is polynomial in the input size.

Semidefinite programming (SDP) is a subfield of convex optimization concerned with the optimization of a linear objective function over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron.

In computational complexity theory, a computational hardness assumption is the hypothesis that a particular problem cannot be solved efficiently. It is not known how to prove (unconditional) hardness for essentially any useful problem. Instead, computer scientists rely on reductions to formally relate the hardness of a new or complicated problem to a computational hardness assumption about a problem that is better-understood.

The study of facility location problems (FLP), also known as location analysis, is a branch of operations research and computational geometry concerned with the optimal placement of facilities to minimize transportation costs while considering factors like avoiding placing hazardous materials near housing, and competitors' facilities. The techniques also apply to cluster analysis.

In statistics, polynomial regression is a form of regression analysis in which the relationship between the independent variable x and the dependent variable y is modelled as an nth degree polynomial in x. Polynomial regression fits a nonlinear relationship between the value of x and the corresponding conditional mean of y, denoted E(y |x). Although polynomial regression fits a nonlinear model to the data, as a statistical estimation problem it is linear, in the sense that the regression function E(y | x) is linear in the unknown parameters that are estimated from the data. For this reason, polynomial regression is considered to be a special case of multiple linear regression.

In mathematics and optimization, a pseudo-Boolean function is a function of the form

In computational complexity theory, a branch of computer science, the Max/min CSP/Ones classification theorems state necessary and sufficient conditions that determine the complexity classes of problems about satisfying a subset S of boolean relations. They are similar to Schaefer's dichotomy theorem, which classifies the complexity of satisfying finite sets of relations; however, the Max/min CSP/Ones classification theorems give information about the complexity of approximating an optimal solution to a problem defined by S.

In statistics, linear regression is a linear approach for modelling the relationship between a scalar response and one or more explanatory variables. The case of one explanatory variable is called simple linear regression; for more than one, the process is called multiple linear regression. This term is distinct from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.

Identical-machines scheduling is an optimization problem in computer science and operations research. We are given n jobs J1, J2, ..., Jn of varying processing times, which need to be scheduled on m identical machines, such that a certain objective function is optimized, for example, the makespan is minimized.