In topology and related branches of mathematics, a connected space is a topological space that cannot be represented as the union of two or more disjoint non-empty open subsets. Connectedness is one of the principal topological properties that are used to distinguish topological spaces.
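
For a finite space the definition can be checked directly. The following is a minimal sketch, assuming the topology is supplied as an explicit list of open sets; the function name `is_connected` and the example spaces are illustrative assumptions. It uses the fact that a split into two disjoint non-empty open subsets exists exactly when some non-empty proper open set has an open complement.

```python
def is_connected(points, open_sets):
    """Check connectedness of a finite topological space.

    Assumes `open_sets` really is a topology on `points`.
    """
    points = frozenset(points)
    opens = {frozenset(U) for U in open_sets}
    for U in opens:
        # A non-empty proper open set with an open complement splits the space.
        if U and U != points and (points - U) in opens:
            return False
    return True


# Sierpinski space: no such split exists, so it is connected.
sierpinski = is_connected({0, 1}, [set(), {1}, {0, 1}])

# {a} and {b, c} are disjoint, non-empty, open, and cover the space.
split_space = is_connected(
    {"a", "b", "c"}, [set(), {"a"}, {"b", "c"}, {"a", "b", "c"}]
)
```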

In mathematics, an equivalence relation is a binary relation that is reflexive, symmetric and transitive. The relation "is equal to" is the canonical example of an equivalence relation.
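
On a finite set the three properties can be tested by brute force. This is a small sketch, assuming the relation is given as a two-argument predicate; the helper name `is_equivalence_relation` is an assumption made for illustration.

```python
def is_equivalence_relation(elements, related):
    """Brute-force check of reflexivity, symmetry and transitivity."""
    elements = list(elements)
    reflexive = all(related(a, a) for a in elements)
    symmetric = all(
        related(b, a)
        for a in elements for b in elements
        if related(a, b)
    )
    transitive = all(
        related(a, c)
        for a in elements for b in elements for c in elements
        if related(a, b) and related(b, c)
    )
    return reflexive and symmetric and transitive


same_parity = is_equivalence_relation(range(6), lambda a, b: (a - b) % 2 == 0)
less_or_equal = is_equivalence_relation(range(6), lambda a, b: a <= b)
```

Here "same parity" passes all three checks, while "less than or equal" fails because it is not symmetric.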

In mathematics, when the elements of some set S have a notion of equivalence defined on them, then one may naturally split the set S into equivalence classes. These equivalence classes are constructed so that elements a and b belong to the same equivalence class if, and only if, they are equivalent.
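
The splitting can be sketched in a few lines. The example below assumes the supplied predicate is a genuine equivalence relation, so comparing each element against one representative per class is enough; the function name and the choice of congruence modulo 3 are illustrative.

```python
def equivalence_classes(elements, equivalent):
    """Group elements into classes under the relation `equivalent`.

    Assumes `equivalent` is reflexive, symmetric and transitive.
    """
    classes = []
    for x in elements:
        for cls in classes:
            if equivalent(cls[0], x):  # compare with the class representative
                cls.append(x)
                break
        else:
            classes.append([x])  # x starts a new class
    return classes


classes = equivalence_classes(range(7), lambda a, b: (a - b) % 3 == 0)
print(classes)  # [[0, 3, 6], [1, 4], [2, 5]]
```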

In quantum mechanics, identical particles are particles that cannot be distinguished from one another, even in principle. Species of identical particles include, but are not limited to, elementary particles, composite subatomic particles, as well as atoms and molecules. Quasiparticles also behave in this way. Although all known indistinguishable particles only exist at the quantum scale, there is no exhaustive list of all possible sorts of particles nor a clear-cut limit of applicability, as explored in quantum statistics.

In mathematics, especially group theory, two elements a and b of a group are conjugate if there is an element g in the group such that b = g⁻¹ag. This is an equivalence relation whose equivalence classes are called conjugacy classes.
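
As an illustration, the conjugacy classes of a small group can be enumerated by computing g⁻¹ag for every g. The sketch below assumes the symmetric group on three points, with permutations stored as tuples mapping index to image; the helper names are illustrative.

```python
from itertools import permutations


def compose(p, q):
    """Return the permutation 'apply q, then p'."""
    return tuple(p[q[i]] for i in range(len(q)))


def inverse(p):
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)


def conjugacy_classes(group):
    """Partition a finite group (a list of permutations) into conjugacy classes."""
    remaining = set(group)
    classes = []
    while remaining:
        a = min(remaining)
        # All elements of the form g^-1 a g.
        cls = {compose(inverse(g), compose(a, g)) for g in group}
        classes.append(cls)
        remaining -= cls
    return classes


S3 = list(permutations(range(3)))
class_sizes = sorted(len(c) for c in conjugacy_classes(S3))
print(class_sizes)  # [1, 2, 3]
```

The three classes correspond to the identity, the two 3-cycles, and the three transpositions.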

In topology and related branches of mathematics, a topological space X is a T0 space or Kolmogorov space (named after Andrey Kolmogorov) if for every pair of distinct points of X, at least one of them has a neighborhood not containing the other. In a T0 space, all points are topologically distinguishable.

In mathematics, specifically group theory, a subgroup H of a group G may be used to decompose the underlying set of G into disjoint equal-size pieces called cosets. There are two types of cosets: left cosets and right cosets. Cosets have the same number of elements (cardinality) as does H. Furthermore, H itself is a coset, which is both a left coset and a right coset. The number of left cosets of H in G is equal to the number of right cosets of H in G. The common value is called the index of H in G and is usually denoted by [G : H].
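
A small worked example may help. The sketch below assumes G is the symmetric group on three points and H is the two-element subgroup generated by one transposition; both choices, and the helper `compose`, are illustrative assumptions.

```python
from itertools import permutations


def compose(p, q):
    """Return the permutation 'apply q, then p' (tuples map index -> image)."""
    return tuple(p[q[i]] for i in range(len(q)))


G = list(permutations(range(3)))
H = [(0, 1, 2), (1, 0, 2)]  # identity and the transposition swapping 0 and 1

left_cosets = {frozenset(compose(g, h) for h in H) for g in G}   # gH
right_cosets = {frozenset(compose(h, g) for h in H) for g in G}  # Hg

index = len(left_cosets)
print(index)  # 3
```

Both families contain three cosets of two elements each, so [G : H] = 3, and H appears in both. The two families are nevertheless different partitions of G here, because this particular H is not a normal subgroup.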

In the theory of formal languages, the Myhill–Nerode theorem provides a necessary and sufficient condition for a language to be regular. The theorem is named for John Myhill and Anil Nerode, who proved it at the University of Chicago in 1958.

In mathematics, a partition of a set is a grouping of its elements into non-empty subsets, in such a way that every element is included in exactly one subset.
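
The definition translates into three checks: no block is empty, the blocks cover the set, and no element appears twice. A minimal sketch follows, with the function name `is_partition` chosen for illustration.

```python
def is_partition(blocks, universe):
    """Return True if `blocks` is a partition of `universe`."""
    blocks = [set(b) for b in blocks]
    if any(not b for b in blocks):
        return False  # empty blocks are not allowed
    union = set().union(*blocks)
    total = sum(len(b) for b in blocks)
    # Equal sizes mean no element was counted in two different blocks.
    return union == set(universe) and total == len(union)


print(is_partition([{1, 2}, {3}], {1, 2, 3}))     # True
print(is_partition([{1, 2}, {2, 3}], {1, 2, 3}))  # False: 2 appears twice
```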

In computer science, a selection algorithm is an algorithm for finding the kth smallest number in a list or array; such a number is called the kth order statistic. This includes the cases of finding the minimum, maximum, and median elements. There are O(n)-time selection algorithms, and sublinear performance is possible for structured data; in the extreme, O(1) for an array of sorted data. Selection is a subproblem of more complex problems like the nearest neighbor and shortest path problems. Many selection algorithms are derived by generalizing a sorting algorithm, and conversely some sorting algorithms can be derived as repeated application of selection.
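
One well-known selection algorithm obtained by generalizing quicksort is quickselect. The sketch below is a simple, non-in-place variant with a random pivot, which runs in expected linear time; it is one possible illustration rather than a reference implementation, and the 1-based convention for k is an assumption.

```python
import random


def quickselect(items, k):
    """Return the k-th smallest element of `items` (k = 1 is the minimum)."""
    items = list(items)
    if not 1 <= k <= len(items):
        raise ValueError("k out of range")
    while True:
        pivot = random.choice(items)
        smaller = [x for x in items if x < pivot]
        equal = [x for x in items if x == pivot]
        larger = [x for x in items if x > pivot]
        if k <= len(smaller):
            items = smaller                   # answer lies below the pivot
        elif k <= len(smaller) + len(equal):
            return pivot                      # the pivot is the answer
        else:
            k -= len(smaller) + len(equal)    # skip everything <= pivot
            items = larger


data = [7, 2, 9, 4, 4, 1]
print(quickselect(data, 1))  # 1 (minimum)
print(quickselect(data, 6))  # 9 (maximum)
```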

Common knowledge is a special kind of knowledge for a group of agents. There is common knowledge of p in a group of agents G when all the agents in G know p, they all know that they know p, they all know that they all know that they know p, and so on ad infinitum.

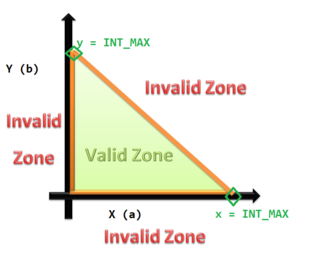

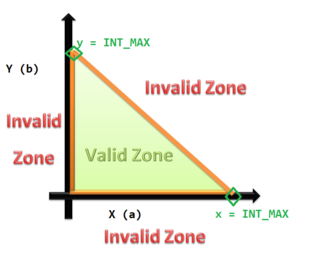

Equivalence partitioning or equivalence class partitioning (ECP) is a software testing technique that divides the input data of a software unit into partitions of equivalent data from which test cases can be derived. In principle, test cases are designed to cover each partition at least once. This technique tries to define test cases that uncover classes of errors, thereby reducing the total number of test cases that must be developed. An advantage of this approach is that fewer test cases are needed, which reduces the time required for testing.
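
As a sketch of the idea, consider a hypothetical unit `ticket_category(age)` whose inputs fall into four partitions. The function, its age limits, and the chosen representatives are all assumptions made purely for illustration.

```python
def ticket_category(age):
    """Hypothetical unit under test: classify a customer by age."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age <= 12:
        return "child"
    if age <= 64:
        return "adult"
    return "senior"


# One representative input per partition, instead of testing every age.
REPRESENTATIVES = {"negative": -5, "child": 7, "adult": 30, "senior": 80}


def run_partition_tests():
    outcomes = {}
    for name, value in REPRESENTATIVES.items():
        try:
            outcomes[name] = ticket_category(value)
        except ValueError:
            outcomes[name] = "rejected"
    return outcomes


outcomes = run_partition_tests()
```

Four test inputs cover all four partitions; any other value from the same partition is expected to behave the same way.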

Boundary value analysis is a software testing technique in which tests are designed to include representatives of boundary values in a range. The idea comes from the topological notion of a boundary. Given a set of test vectors for the system, a topology can be defined on that set. The inputs that belong to the same equivalence class, as defined by equivalence partitioning, constitute the basis. Where two basis sets are neighbors, there is a boundary between them, and the test vectors on either side of that boundary are called boundary values. In practice this requires that the test vectors can be ordered and that the individual parameters follow some kind of order.
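
For an ordered integer parameter, the boundary values of a valid range are the inputs immediately on either side of each edge. The sketch below assumes a hypothetical validator `is_valid_percentage` that accepts 0 to 100 inclusive; both it and the helper `boundary_values` are illustrative names.

```python
def boundary_values(low, high):
    """Integer test inputs on both sides of each edge of [low, high]."""
    return [low - 1, low, high, high + 1]


def is_valid_percentage(n):
    """Hypothetical unit under test."""
    return 0 <= n <= 100


results = {n: is_valid_percentage(n) for n in boundary_values(0, 100)}
print(results)  # {-1: False, 0: True, 100: True, 101: False}
```

An off-by-one mistake in either comparison would change at least one of these four outcomes.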

In combinatorics, the twelvefold way is a systematic classification of 12 related enumerative problems concerning two finite sets, which include the classical problems of counting permutations, combinations, multisets, and partitions either of a set or of a number. The idea of the classification is credited to Gian-Carlo Rota, and the name was suggested by Joel Spencer.

Algorithm characterizations are attempts to formalize the word algorithm. Algorithm does not have a generally accepted formal definition. Researchers are actively working on this problem. This article will present some of the "characterizations" of the notion of "algorithm" in more detail.

Unicode equivalence is the specification by the Unicode character encoding standard that some sequences of code points represent essentially the same character. This feature was introduced in the standard to allow compatibility with preexisting standard character sets, which often included similar or identical characters.
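
Python's standard `unicodedata` module exposes the normalization forms defined by the standard, which makes the idea easy to demonstrate. The sample strings below are illustrative choices.

```python
import unicodedata

composed = "\u00e9"     # 'é' as a single precomposed code point
decomposed = "e\u0301"  # 'e' followed by a combining acute accent

# The raw strings differ, but their canonical normal forms agree.
raw_equal = composed == decomposed
canonically_equal = (
    unicodedata.normalize("NFC", composed)
    == unicodedata.normalize("NFC", decomposed)
)

# Compatibility normalization also folds characters such as the 'fi' ligature.
ligature = "\ufb01"
folded = unicodedata.normalize("NFKC", ligature)

print(raw_equal, canonically_equal, folded)  # False True fi
```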

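In the metrical theory of regular continued fractions, the kth complete quotient ζₖ is obtained by ignoring the first k partial denominators aᵢ. For example, if a regular continued fraction is given by x = [a₀; a₁, a₂, a₃, …], then the successive complete quotients are ζ₀ = [a₀; a₁, a₂, a₃, …], ζ₁ = [a₁; a₂, a₃, …], ζ₂ = [a₂; a₃, …], and so on.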

In topology, two points of a topological space X are topologically indistinguishable if they have exactly the same neighborhoods. That is, if x and y are points in X, and Nx is the set of all neighborhoods that contain x, and Ny is the set of all neighborhoods that contain y, then x and y are "topologically indistinguishable" if and only if Nx = Ny. (See Hausdorff's axiomatic neighborhood systems.)
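
In a finite space this can be checked directly, since two points have the same neighborhoods exactly when they belong to the same open sets. The sketch below assumes the topology is given as an explicit list of open sets; the function name and the example space are illustrative.

```python
def indistinguishable(open_sets, x, y):
    """True if x and y lie in exactly the same open sets."""
    return all((x in U) == (y in U) for U in open_sets)


# A topology on {a, b, c} in which b and c can never be separated.
topology = [set(), {"a"}, {"b", "c"}, {"a", "b", "c"}]

b_and_c = indistinguishable(topology, "b", "c")
a_and_b = indistinguishable(topology, "a", "b")
print(b_and_c, a_and_b)  # True False
```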

In automata theory, DFA minimization is the task of transforming a given deterministic finite automaton (DFA) into an equivalent DFA that has a minimum number of states. Here, two DFAs are called equivalent if they recognize the same regular language. Several different algorithms accomplishing this task are known and described in standard textbooks on automata theory.
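
One textbook approach is partition refinement in the style of Moore's algorithm: start from the split into accepting and non-accepting states, then keep splitting blocks whose states lead to different blocks. The sketch below only computes the blocks of equivalent states (each block becomes one state of the minimal DFA). It assumes a complete DFA with all states reachable, and the dictionary encoding of the transition function is an illustrative choice.

```python
def equivalent_state_blocks(states, alphabet, delta, accepting):
    """Return the blocks of mutually equivalent states of a complete DFA."""
    block_of = {s: int(s in accepting) for s in states}
    while True:
        # A state's signature: its own block and the blocks it moves to.
        signature = {
            s: (block_of[s],) + tuple(block_of[delta[s, a]] for a in alphabet)
            for s in states
        }
        ids = {}
        refined = {s: ids.setdefault(signature[s], len(ids)) for s in states}
        # Refinement only splits blocks, so an equal count means no change.
        if len(set(refined.values())) == len(set(block_of.values())):
            break
        block_of = refined
    blocks = {}
    for s in states:
        blocks.setdefault(block_of[s], set()).add(s)
    return sorted(sorted(b) for b in blocks.values())


# Example DFA for "binary strings ending in 1"; q0 and q2 are redundant copies.
states = ["q0", "q1", "q2"]
alphabet = ["0", "1"]
delta = {
    ("q0", "0"): "q2", ("q0", "1"): "q1",
    ("q1", "0"): "q2", ("q1", "1"): "q1",
    ("q2", "0"): "q0", ("q2", "1"): "q1",
}
blocks = equivalent_state_blocks(states, alphabet, delta, accepting={"q1"})
print(blocks)  # [['q0', 'q2'], ['q1']]
```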

Heap's algorithm generates all possible permutations of n objects. It was first proposed by B. R. Heap in 1963. The algorithm minimizes movement: it generates each permutation from the previous one by interchanging a single pair of elements; the other n−2 elements are not disturbed. In a 1977 review of permutation-generating algorithms, Robert Sedgewick concluded that it was at that time the most effective algorithm for generating permutations by computer.
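
A recursive formulation of the algorithm can be sketched as a Python generator. The function name and the choice to yield tuples are illustrative; the swap rule (which pair to interchange depends on whether the current length is even or odd) is the usual statement of the method.

```python
def heap_permutations(items):
    """Yield all permutations of `items`, each one swap away from the last."""
    a = list(items)

    def generate(k):
        if k <= 1:
            yield tuple(a)
            return
        for i in range(k - 1):
            yield from generate(k - 1)
            if k % 2 == 0:
                a[i], a[k - 1] = a[k - 1], a[i]  # even k: swap i-th with last
            else:
                a[0], a[k - 1] = a[k - 1], a[0]  # odd k: swap first with last
        yield from generate(k - 1)

    yield from generate(len(a))


perms = list(heap_permutations([1, 2, 3]))
print(perms)
# [(1, 2, 3), (2, 1, 3), (3, 1, 2), (1, 3, 2), (2, 3, 1), (3, 2, 1)]
```

Each tuple in the output differs from its predecessor in exactly two positions, which is the single-interchange property described above.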