A document type definition (DTD) is a set of markup declarations that define a document type for an SGML-family markup language.
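
As a rough illustration of what markup declarations look like, the sketch below embeds a small DTD in the internal subset of an XML document and reads it with Python's standard library. The `note` vocabulary, the `greeting` entity, and the helper names are hypothetical. The standard-library parsers read the declarations and expand internal entities, but they do not validate the document against the DTD.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom

# Hypothetical document: the DOCTYPE carries an internal DTD subset with
# three element declarations and one entity declaration.
NOTE_XML = """<?xml version="1.0"?>
<!DOCTYPE note [
  <!ELEMENT note (to, body)>
  <!ELEMENT to (#PCDATA)>
  <!ELEMENT body (#PCDATA)>
  <!ENTITY greeting "Hello from the DTD">
]>
<note><to>Reader</to><body>&greeting;</body></note>
"""


def doctype_name(xml_text: str) -> str:
    """Return the document type name declared in the DOCTYPE."""
    return minidom.parseString(xml_text).doctype.name


def body_text(xml_text: str) -> str:
    """Return the text of <body>, with internal entities expanded by the parser."""
    return ET.fromstring(xml_text).findtext("body")
```

Calling `body_text(NOTE_XML)` returns the replacement text of the `greeting` entity, which shows that the entity declaration in the DTD was applied during parsing.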

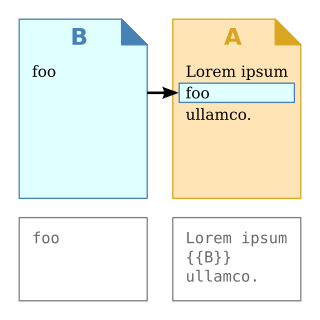

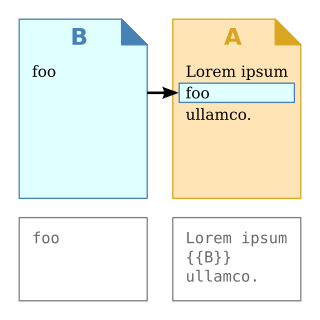

In computer science, transclusion is the inclusion of part or all of an electronic document into one or more other documents by hypertext reference. Transclusion is usually performed when the referencing document is displayed, and is normally automatic and transparent to the end user. The result of transclusion is a single integrated document made of parts assembled dynamically from separate sources, possibly stored on different computers in disparate places.
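
The assembly step can be sketched in a few lines of Python. The `{{include:name}}` reference syntax, the in-memory `store`, and the `transclude` function are assumptions made for illustration; real systems resolve references against files or remote servers.

```python
import re

# Hypothetical reference syntax: {{include:document-name}}
INCLUDE = re.compile(r"\{\{include:([\w-]+)\}\}")


def transclude(name, store, _seen=()):
    """Return document `name` with every reference replaced by its target.

    References are resolved at call time, so an edit to a source document
    shows up in every document that transcludes it.
    """
    if name in _seen:
        raise ValueError(f"circular transclusion: {name}")
    text = store[name]
    return INCLUDE.sub(
        lambda m: transclude(m.group(1), store, _seen + (name,)),
        text,
    )
```

For example, with `store = {"page": "Intro. {{include:note}} End.", "note": "Shared note."}`, calling `transclude("page", store)` yields a single integrated text, and changing `store["note"]` changes the result of the next call without touching `page`.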

Synchronized Multimedia Integration Language (SMIL) is a World Wide Web Consortium recommended Extensible Markup Language (XML) markup language to describe multimedia presentations. It defines markup for timing, layout, animations, visual transitions, and media embedding, among other things. SMIL allows presenting media items such as text, images, video, audio, links to other SMIL presentations, and files from multiple web servers. SMIL markup is written in XML, and has similarities to HTML.
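
To give a feel for the timing and layout markup, the following sketch builds a tiny SMIL-style document with Python's `xml.etree.ElementTree`. The element and attribute names (`smil`, `head`, `layout`, `region`, `body`, `par`, `img`, `audio`, `src`, `dur`) follow SMIL 2.0 conventions, while the file names, the region size, and the `build_presentation` helper are hypothetical. The namespace declaration is omitted for brevity and the output is not validated against the specification.

```python
import xml.etree.ElementTree as ET


def build_presentation():
    """Build a minimal SMIL-style tree: one region, one parallel group."""
    smil = ET.Element("smil")

    # Layout: a single rendering region for visual media.
    head = ET.SubElement(smil, "head")
    layout = ET.SubElement(head, "layout")
    ET.SubElement(layout, "region", {"id": "main", "width": "320", "height": "240"})

    # Timing: children of <par> are presented in parallel.
    body = ET.SubElement(smil, "body")
    par = ET.SubElement(body, "par")
    ET.SubElement(par, "img", {"src": "slide1.png", "region": "main", "dur": "5s"})
    ET.SubElement(par, "audio", {"src": "narration.mp3"})
    return smil


def to_text(root):
    """Serialize the tree to a Unicode string."""
    return ET.tostring(root, encoding="unicode")
```

Replacing `par` with `seq` would present the same media items one after another instead of simultaneously.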

Jakarta Enterprise Beans (EJB) is one of several Java APIs for modular construction of enterprise software. EJB is a server-side software component that encapsulates business logic of an application. An EJB web container provides a runtime environment for web related software components, including computer security, Java servlet lifecycle management, transaction processing, and other web services. The EJB specification is a subset of the Java EE specification.

MPEG-7, formally called Multimedia Content Description Interface, is a multimedia content description standard, standardized in ISO/IEC 15938. The description is associated with the content itself, to allow fast and efficient searching for material that is of interest to the user. Unlike MPEG-1, MPEG-2 and MPEG-4, it is not a standard which deals with the actual encoding of moving pictures and audio. It uses XML to store metadata, which can be attached to timecode in order to tag particular events, or synchronise lyrics to a song, for example.

MHEG-5, or ISO/IEC 13522-5, is part of a set of international standards relating to the presentation of multimedia information, standardised by the Multimedia and Hypermedia Experts Group (MHEG). It is most commonly used as a language to describe interactive television services.

Hypermedia, an extension of the term hypertext, is a nonlinear medium of information that includes graphics, audio, video, plain text and hyperlinks. This designation contrasts with the broader term multimedia, which may include non-interactive linear presentations as well as hypermedia. It is also related to the field of electronic literature. The term was first used in a 1965 article written by Ted Nelson.

Representational state transfer (REST) is a software architectural style that defines a set of constraints to be used for creating Web services. Web services that conform to the REST architectural style, called RESTful Web services, provide interoperability between computer systems on the internet. RESTful Web services allow the requesting systems to access and manipulate textual representations of Web resources by using a uniform and predefined set of stateless operations. Other kinds of Web services, such as SOAP Web services, expose their own arbitrary sets of operations.
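
The idea of a uniform, predefined set of operations can be sketched without any network code. The `ResourceStore` class and its `handle` method below are hypothetical; they model a server that applies the same small set of HTTP-style methods to every resource path and keeps no per-client session state between requests.

```python
from typing import Dict, Optional, Tuple


class ResourceStore:
    """In-memory stand-in for a RESTful service (illustrative only)."""

    def __init__(self) -> None:
        # Maps a resource path to its textual representation.
        self._items: Dict[str, str] = {}

    def handle(self, method: str, path: str,
               body: Optional[str] = None) -> Tuple[int, Optional[str]]:
        """Apply one self-contained request and return (status, representation)."""
        if method == "GET":
            if path in self._items:
                return 200, self._items[path]
            return 404, None
        if method == "PUT":
            created = path not in self._items
            self._items[path] = body or ""
            return (201 if created else 200), self._items[path]
        if method == "DELETE":
            if path in self._items:
                del self._items[path]
                return 204, None
            return 404, None
        # Any method outside the uniform set is rejected.
        return 405, None
```

Every request names the resource and the operation in full, so the outcome depends only on the request and the stored resources, not on earlier requests from the same client.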

MPEG-4 Part 11, Scene description and application engine, was published as ISO/IEC 14496-11 in 2005. MPEG-4 Part 11 is also known as BIFS, XMT, MPEG-J. It defines:

Component-based software engineering (CBSE), also called components-based development (CBD), is a branch of software engineering that emphasizes the separation of concerns with respect to the wide-ranging functionality available throughout a given software system. It is a reuse-based approach to defining, implementing and composing loosely coupled independent components into systems. This practice aims to bring about an equally wide-ranging degree of benefits in both the short-term and the long-term for the software itself and for organizations that sponsor such software.

EAR is a file format used by Java EE for packaging one or more modules into a single archive so that the deployment of the various modules onto an application server happens simultaneously and coherently. It also contains XML files called deployment descriptors which describe how to deploy the modules.

WebML is a visual notation and a methodology for designing complex data-intensive Web applications. It provides graphical, yet formal, specifications, embodied in a complete design process, which can be assisted by visual design tools.

Batik is a pure-Java library that can be used to render, generate, and manipulate SVG graphics. IBM supported the project and then donated the code to the Apache Software Foundation, where other companies and teams decided to join efforts. Batik provides a set of core modules that provide functionality to:

XHTML+SMIL is a W3C Note that describes an integration of SMIL semantics with XHTML and CSS. It is based generally upon the HTML+TIME submission. The language is also known as HTML+SMIL.

Crazy Eddie's GUI (CEGUI) is a graphical user interface (GUI) library for the programming language C++. It was designed for the needs of video games, but is usable for non-game tasks, such as applications and tools. It is designed for user flexibility in look-and-feel, and to be adaptable to the user's choice in tools and operating systems.

Animation of Scalable Vector Graphics, an open XML-based standard vector graphics format, is possible through various means:

ABNT NBR 15606 refers to a collection of technical standards that govern the transmission of digital terrestrial television in Brazil.

Multimodal Architecture and Interfaces is an open standard developed by the World Wide Web Consortium since 2005. It was published as a Recommendation of the W3C on October 25, 2012. The document is a technical report specifying a multimodal system architecture and its generic interfaces to facilitate integration and multimodal interaction management in a computer system. It has been developed by the W3C's Multimodal Interaction Working Group.

The SAML metadata standard belongs to the family of XML-based standards known as the Security Assertion Markup Language (SAML) published by OASIS in 2005. A SAML metadata document describes a SAML deployment such as a SAML identity provider or a SAML service provider. Deployments share metadata to establish a baseline of trust and interoperability.
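
As a sketch of what such a document contains, the example below parses a minimal identity provider description with Python's standard library. The namespace, element names, and binding URI are taken from SAML 2.0; the `idp.example.org` URLs and the `sso_endpoints` helper are hypothetical. Real metadata usually also carries signing keys and other descriptors, which are omitted here.

```python
import xml.etree.ElementTree as ET

# SAML 2.0 metadata namespace.
MD_NS = {"md": "urn:oasis:names:tc:SAML:2.0:metadata"}

# Hypothetical, heavily trimmed metadata for one identity provider.
METADATA = """<md:EntityDescriptor
    xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata"
    entityID="https://idp.example.org/metadata">
  <md:IDPSSODescriptor
      protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <md:SingleSignOnService
        Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect"
        Location="https://idp.example.org/sso"/>
  </md:IDPSSODescriptor>
</md:EntityDescriptor>
"""


def sso_endpoints(xml_text):
    """Return (entityID, [(binding, location), ...]) for an IdP descriptor."""
    root = ET.fromstring(xml_text)
    endpoints = [
        (e.get("Binding"), e.get("Location"))
        for e in root.findall("md:IDPSSODescriptor/md:SingleSignOnService", MD_NS)
    ]
    return root.get("entityID"), endpoints
```

A service provider that trusts this metadata would use the returned binding and location to decide where and how to send authentication requests.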