Related Research Articles

The P versus NP problem is a major unsolved problem in theoretical computer science. Informally, it asks whether every problem whose solution can be quickly verified can also be quickly solved.

In computational complexity theory, NP is a complexity class used to classify decision problems. NP is the set of decision problems for which the problem instances, where the answer is "yes", have proofs verifiable in polynomial time by a deterministic Turing machine, or alternatively the set of problems that can be solved in polynomial time by a nondeterministic Turing machine.

In mathematics, a polynomial is a mathematical expression consisting of indeterminates and coefficients, that involves only the operations of addition, subtraction, multiplication and exponentiation to nonnegative integer powers, and has a finite number of terms. An example of a polynomial of a single indeterminate x is x2 − 4x + 7. An example with three indeterminates is x3 + 2xyz2 − yz + 1.

Hilbert's tenth problem is the tenth on the list of mathematical problems that the German mathematician David Hilbert posed in 1900. It is the challenge to provide a general algorithm that, for any given Diophantine equation, can decide whether the equation has a solution with all unknowns taking integer values.

In combinatorics, a branch of mathematics, a matroid is a structure that abstracts and generalizes the notion of linear independence in vector spaces. There are many equivalent ways to define a matroid axiomatically, the most significant being in terms of: independent sets; bases or circuits; rank functions; closure operators; and closed sets or flats. In the language of partially ordered sets, a finite simple matroid is equivalent to a geometric lattice.

In graph theory, the Robertson–Seymour theorem states that the undirected graphs, partially ordered by the graph minor relationship, form a well-quasi-ordering. Equivalently, every family of graphs that is closed under minors can be defined by a finite set of forbidden minors, in the same way that Wagner's theorem characterizes the planar graphs as being the graphs that do not have the complete graph K5 or the complete bipartite graph K3,3 as minors.

In graph theory, an undirected graph H is called a minor of the graph G if H can be formed from G by deleting edges, vertices and by contracting edges.

In mathematics, a constructive proof is a method of proof that demonstrates the existence of a mathematical object by creating or providing a method for creating the object. This is in contrast to a non-constructive proof, which proves the existence of a particular kind of object without providing an example. For avoiding confusion with the stronger concept that follows, such a constructive proof is sometimes called an effective proof.

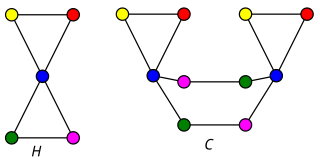

In graph theory, graph coloring is a special case of graph labeling; it is an assignment of labels traditionally called "colors" to elements of a graph subject to certain constraints. In its simplest form, it is a way of coloring the vertices of a graph such that no two adjacent vertices are of the same color; this is called a vertex coloring. Similarly, an edge coloring assigns a color to each edge so that no two adjacent edges are of the same color, and a face coloring of a planar graph assigns a color to each face or region so that no two faces that share a boundary have the same color.

In quantum computing, a quantum algorithm is an algorithm that runs on a realistic model of quantum computation, the most commonly used model being the quantum circuit model of computation. A classical algorithm is a finite sequence of instructions, or a step-by-step procedure for solving a problem, where each step or instruction can be performed on a classical computer. Similarly, a quantum algorithm is a step-by-step procedure, where each of the steps can be performed on a quantum computer. Although all classical algorithms can also be performed on a quantum computer, the term quantum algorithm is generally reserved for algorithms that seem inherently quantum, or use some essential feature of quantum computation such as quantum superposition or quantum entanglement.

In computational complexity theory, P, also known as PTIME or DTIME(nO(1)), is a fundamental complexity class. It contains all decision problems that can be solved by a deterministic Turing machine using a polynomial amount of computation time, or polynomial time.

In graph theory, a perfect graph is a graph in which the chromatic number equals the size of the maximum clique, both in the graph itself and in every induced subgraph. In all graphs, the chromatic number is greater than or equal to the size of the maximum clique, but they can be far apart. A graph is perfect when these numbers are equal, and remain equal after the deletion of arbitrary subsets of vertices.

In topological graph theory, a mathematical discipline, a linkless embedding of an undirected graph is an embedding of the graph into three-dimensional Euclidean space in such a way that no two cycles of the graph are linked. A flat embedding is an embedding with the property that every cycle is the boundary of a topological disk whose interior is disjoint from the graph. A linklessly embeddable graph is a graph that has a linkless or flat embedding; these graphs form a three-dimensional analogue of the planar graphs. Complementarily, an intrinsically linked graph is a graph that does not have a linkless embedding.

In polyhedral combinatorics, a branch of mathematics, Steinitz's theorem is a characterization of the undirected graphs formed by the edges and vertices of three-dimensional convex polyhedra: they are exactly the 3-vertex-connected planar graphs. That is, every convex polyhedron forms a 3-connected planar graph, and every 3-connected planar graph can be represented as the graph of a convex polyhedron. For this reason, the 3-connected planar graphs are also known as polyhedral graphs.

The #P-completeness of 01-permanent, sometimes known as Valiant's theorem, is a mathematical proof about the permanent of matrices, considered a seminal result in computational complexity theory. In a 1979 scholarly paper, Leslie Valiant proved that the computational problem of computing the permanent of a matrix is #P-hard, even if the matrix is restricted to have entries that are all 0 or 1. In this restricted case, computing the permanent is even #P-complete, because it corresponds to the #P problem of counting the number of permutation matrices one can get by changing ones into zeroes.

Quantum complexity theory is the subfield of computational complexity theory that deals with complexity classes defined using quantum computers, a computational model based on quantum mechanics. It studies the hardness of computational problems in relation to these complexity classes, as well as the relationship between quantum complexity classes and classical complexity classes.

In graph theory, a branch of mathematics, a cycle basis of an undirected graph is a set of simple cycles that forms a basis of the cycle space of the graph. That is, it is a minimal set of cycles that allows every even-degree subgraph to be expressed as a symmetric difference of basis cycles.

The Fisher–Kasteleyn–Temperley (FKT) algorithm, named after Michael Fisher, Pieter Kasteleyn, and Neville Temperley, counts the number of perfect matchings in a planar graph in polynomial time. This same task is #P-complete for general graphs. For matchings that are not required to be perfect, counting them remains #P-complete even for planar graphs. The key idea of the FKT algorithm is to convert the problem into a Pfaffian computation of a skew-symmetric matrix derived from a planar embedding of the graph. The Pfaffian of this matrix is then computed efficiently using standard determinant algorithms.

In graph theory, a planar cover of a finite graph G is a finite covering graph of G that is itself a planar graph. Every graph that can be embedded into the projective plane has a planar cover; an unsolved conjecture of Seiya Negami states that these are the only graphs with planar covers.

References

- 1 2 Fellows, M. R.; Langston, M. A. (1988). "Nonconstructive tools for proving polynomial-time decidability". Journal of the ACM. 35 (3): 727. doi: 10.1145/44483.44491 . S2CID 16587284.

- ↑ Brown, D. J.; Fellows, M. R.; Langston, M. A. (2007). "Polynomial-time self-reducibility: Theoretical motivations and practical results∗". International Journal of Computer Mathematics. 31 (1–2): 1–9. doi:10.1080/00207168908803783.

- 1 2 Grebinski, V.; Kucherov, G. (2000). "Optimal Reconstruction of Graphs under the Additive Model" (PDF). Algorithmica. 28: 104–124. doi:10.1007/s004530010033. S2CID 33176053.

- ↑ Kimmel, S. (2013). "Quantum Adversary (Upper) Bound". Chicago Journal of Theoretical Computer Science. 19: 1–14. arXiv: 1101.0797 . doi:10.4086/cjtcs.2013.004. S2CID 119264518.