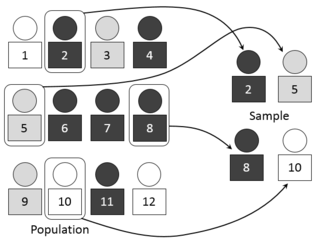

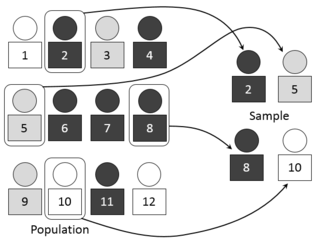

Cluster sampling is a sampling plan used when mutually homogeneous yet internally heterogeneous groupings are evident in a statistical population. It is often used in marketing research. In this sampling plan, the total population is divided into these groups (clusters) and a simple random sample of the groups is selected. The elements in each selected cluster are then sampled. If all elements in each selected cluster are sampled, this is referred to as a "one-stage" cluster sampling plan. If a simple random subsample of elements is selected within each of these groups, this is referred to as a "two-stage" cluster sampling plan. A common motivation for cluster sampling is to reduce the total number of interviews and the cost for a desired accuracy. For a fixed sample size, the expected random error is smaller when most of the variation in the population is present internally within the groups, and not between the groups.
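The difference between the one-stage and two-stage plans can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `cluster_sample`, its parameters, and the population of city blocks are all hypothetical.

```python
import random


def cluster_sample(clusters, n_clusters, per_cluster=None, seed=None):
    """Draw a one-stage or two-stage cluster sample (illustrative sketch).

    clusters: dict mapping a cluster label to a list of its elements.
    n_clusters: number of clusters selected by simple random sampling.
    per_cluster: None for one-stage (keep every element of each selected
        cluster); an integer for two-stage (simple random subsample of
        that size within each selected cluster).
    """
    rng = random.Random(seed)
    # Stage 1: simple random sample of the clusters themselves.
    chosen = rng.sample(sorted(clusters), n_clusters)
    sample = {}
    for label in chosen:
        elements = clusters[label]
        if per_cluster is None:
            # One-stage: every element of the selected cluster is sampled.
            sample[label] = list(elements)
        else:
            # Two-stage: simple random subsample within the cluster.
            k = min(per_cluster, len(elements))
            sample[label] = rng.sample(elements, k)
    return sample


# Hypothetical population: 10 city blocks of 20 households each.
blocks = {f"block_{i}": [f"b{i}_h{j}" for j in range(20)] for i in range(10)}
one_stage = cluster_sample(blocks, n_clusters=3, seed=0)
two_stage = cluster_sample(blocks, n_clusters=3, per_cluster=5, seed=0)
print({label: len(members) for label, members in one_stage.items()})
print({label: len(members) for label, members in two_stage.items()})
```

With these inputs the one-stage plan yields 60 households and the two-stage plan 15, both confined to three blocks, which is where the saving in interviewing cost comes from.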

The information entropy, often just entropy, is a basic quantity in information theory associated to any random variable, which can be interpreted as the average level of "information", "surprise", or "uncertainty" inherent in the variable's possible outcomes. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication".
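For a discrete random variable with outcome probabilities p_i, the entropy is H = -Σ p_i log p_i. The following sketch computes it directly; the function name `shannon_entropy` and the example distributions are chosen here for illustration.

```python
import math


def shannon_entropy(probs, base=2):
    """Entropy of a discrete distribution given as a list of probabilities.

    With base=2 the result is in bits. Outcomes with probability 0 are
    skipped, following the convention 0 * log(0) = 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return sum(-p * math.log(p, base) for p in probs if p > 0)


fair_coin = shannon_entropy([0.5, 0.5])      # maximal uncertainty for two outcomes
biased_coin = shannon_entropy([0.9, 0.1])    # less surprise on average
certain = shannon_entropy([1.0])             # no uncertainty at all
print(fair_coin, biased_coin, certain)
```

The fair coin carries one bit per toss, the biased coin carries less, and a certain outcome carries none, matching the reading of entropy as average surprise.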

The molecular mass (m) is the mass of a given molecule: it is measured in daltons. Different molecules of the same compound may have different molecular masses because they contain different isotopes of an element. The related quantity relative molecular mass, as defined by IUPAC, is the ratio of the mass of a molecule to the unified atomic mass unit and is unitless. The molecular mass and relative molecular mass are distinct from but related to the molar mass. The molar mass is defined as the mass of a given substance divided by the amount of a substance and is expressed in g/mol. The molar mass is usually the more appropriate figure when dealing with macroscopic (weigh-able) quantities of a substance.

Observational error is the difference between a measured value of a quantity and its true value. In statistics, an error is not a "mistake". Variability is an inherent part of the results of measurements and of the measurement process.

In statistics, the standard deviation is a measure of the amount of variation or dispersion of a set of values. A low standard deviation indicates that the values tend to be close to the mean of the set, while a high standard deviation indicates that the values are spread out over a wider range.
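As a small illustration, the sketch below computes the population standard deviation from its definition and compares two made-up data sets that share the same mean. The helper `population_std` and the data are assumptions for this example; Python's standard library offers `statistics.pstdev` for the same calculation.

```python
import math
import statistics


def population_std(values):
    """Square root of the mean squared deviation from the mean."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((x - mean) ** 2 for x in values) / len(values))


tight = [9, 10, 10, 11]   # values close to the mean of 10
wide = [0, 5, 15, 20]     # same mean, values spread over a wider range

print(population_std(tight), population_std(wide))
# Cross-check against the standard library implementation.
print(statistics.pstdev(tight), statistics.pstdev(wide))
```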

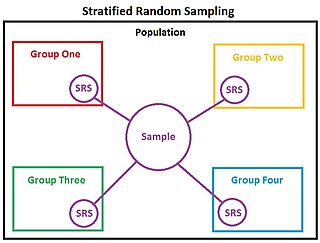

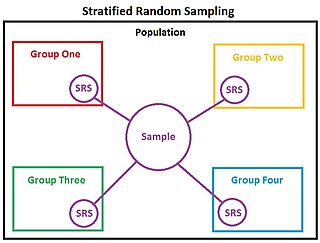

In statistics, stratified sampling is a method of sampling from a population which can be partitioned into subpopulations.
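One common way to apply this, assumed here for illustration, is to draw a simple random sample of the same fraction from every stratum so that each subpopulation is represented in proportion to its size. The function `stratified_sample` and the strata below are hypothetical.

```python
import random


def stratified_sample(strata, fraction, seed=None):
    """Sample the same fraction from each stratum (illustrative sketch).

    strata: dict mapping a stratum name to a list of its members.
    fraction: share of each stratum to draw, between 0 and 1.
    Every non-empty stratum contributes at least one member.
    """
    rng = random.Random(seed)
    sample = {}
    for name in sorted(strata):
        members = strata[name]
        k = min(len(members), max(1, round(fraction * len(members))))
        sample[name] = rng.sample(members, k)
    return sample


# Hypothetical population partitioned into three subpopulations.
strata = {
    "urban": [f"u{i}" for i in range(600)],
    "suburban": [f"s{i}" for i in range(300)],
    "rural": [f"r{i}" for i in range(100)],
}
picked = stratified_sample(strata, fraction=0.1, seed=1)
print({name: len(members) for name, members in picked.items()})
```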

In a set of measurements, accuracy is the closeness of the measurements to a specific value, while precision is the closeness of the measurements to each other.
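The distinction can be made concrete with two made-up series of measurements of the same reference value. In this sketch, accuracy is summarised by the distance of the mean from the reference and precision by the sample standard deviation; both summaries, the function names, and the data are assumptions chosen for illustration.

```python
import statistics


def accuracy_error(measurements, reference):
    """Distance of the mean measurement from the reference value.

    Smaller means more accurate.
    """
    return abs(statistics.fmean(measurements) - reference)


def precision_spread(measurements):
    """Sample standard deviation of the measurements.

    Smaller means more precise.
    """
    return statistics.stdev(measurements)


reference = 100.0
precise_but_biased = [104.9, 105.0, 105.1, 105.0]
accurate_but_scattered = [92.0, 108.0, 97.0, 103.0]

for name, data in [("precise but biased", precise_but_biased),
                   ("accurate but scattered", accurate_but_scattered)]:
    print(name, accuracy_error(data, reference), precision_spread(data))
```

The first series clusters tightly around the wrong value, while the second averages to the reference but with wide scatter.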

A video codec is an electronic circuit or software that compresses or decompresses digital video. It converts uncompressed video to a compressed format or vice versa. In the context of video compression, "codec" is a concatenation of "encoder" and "decoder". A device that only compresses is typically called an encoder, and one that only decompresses is a decoder.

In chemistry, the molar mass of a chemical compound is defined as the mass of a sample of that compound divided by the amount of substance in that sample, measured in moles. The molar mass is a bulk, not molecular, property of a substance. The molar mass is an average over many instances of the compound, which often vary in mass due to the presence of isotopes. Most commonly, the molar mass is computed from the standard atomic weights and is thus a terrestrial average and a function of the relative abundance of the isotopes of the constituent atoms on Earth. The molar mass is appropriate for converting between the mass of a substance and the amount of that substance for bulk quantities.
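A short sketch of both uses follows: computing a molar mass from standard atomic weights, then converting a bulk mass to an amount in moles. The atomic weights are conventional abridged values rounded to three decimals, and the helper names and the dictionary representation of a formula are assumptions for this example.

```python
# Abridged standard atomic weights in g/mol (rounded, illustrative subset).
STANDARD_ATOMIC_WEIGHTS = {"H": 1.008, "C": 12.011, "O": 15.999}


def molar_mass(composition):
    """Molar mass in g/mol for a formula given as {element: atom count}."""
    return sum(STANDARD_ATOMIC_WEIGHTS[el] * n for el, n in composition.items())


def amount_in_moles(mass_g, composition):
    """Convert a bulk mass in grams to an amount of substance in moles."""
    return mass_g / molar_mass(composition)


water = {"H": 2, "O": 1}
print(molar_mass(water))              # g/mol
print(amount_in_moles(36.03, water))  # mol in 36.03 g of water
```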

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians aim to draw samples that are representative of the population in question. Two advantages of sampling are lower cost and faster data collection than measuring the entire population.

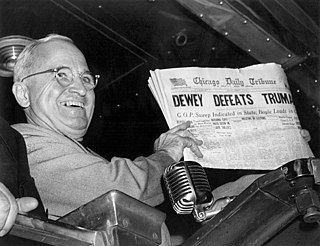

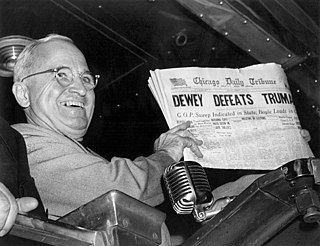

An opinion poll, often simply referred to as a poll or a survey, is a human research survey of public opinion from a particular sample. Opinion polls are usually designed to represent the opinions of a population by asking a series of questions and then extrapolating generalities as ratios or within confidence intervals.

A straw poll or straw vote is an ad hoc or unofficial vote. It is used to show the popular opinion on a certain matter, and can be used to help politicians know the majority opinion and help them decide what to say in order to gain votes.

Statistics, when used in a misleading fashion, can trick the casual observer into believing something other than what the data shows. That is, a misuse of statistics occurs when a statistical argument asserts a falsehood. In some cases, the misuse may be accidental. In others, it is purposeful and for the gain of the perpetrator. When the statistical reason involved is false or misapplied, this constitutes a statistical fallacy.

Nuclear magnetic resonance spectroscopy, most commonly known as NMR spectroscopy or magnetic resonance spectroscopy (MRS), is a spectroscopic technique to observe local magnetic fields around atomic nuclei. The sample is placed in a magnetic field and the NMR signal is produced by exciting the nuclei in the sample with radio waves into nuclear magnetic resonance, which is detected with sensitive radio receivers. The intramolecular magnetic field around an atom in a molecule changes the resonance frequency, thus giving access to details of the electronic structure of a molecule and its individual functional groups. As the fields are unique or highly characteristic to individual compounds, in modern organic chemistry practice, NMR spectroscopy is the definitive method to identify monomolecular organic compounds. Similarly, biochemists use NMR to identify proteins and other complex molecules. Besides identification, NMR spectroscopy provides detailed information about the structure, dynamics, reaction state, and chemical environment of molecules. The most common types of NMR are proton and carbon-13 NMR spectroscopy, but it is applicable to any kind of sample that contains nuclei possessing spin.

In statistics, sampling errors are incurred when the statistical characteristics of a population are estimated from a subset, or sample, of that population. Since the sample does not include all members of the population, statistics on the sample, such as means and quartiles, generally differ from the characteristics of the entire population, which are known as parameters. For example, if one measures the height of a thousand individuals from a country of one million, the average height of the thousand is typically not the same as the average height of all one million people in the country. Since sampling is typically done to determine the characteristics of a whole population, the difference between the sample and population values is considered an error. Exact measurement of sampling error is generally not feasible since the true population values are unknown.
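The height example can be simulated, which is the one setting where the sampling error is directly observable because the whole population is known. The sketch below uses a synthetic population of 100,000 normally distributed heights rather than one million, purely to keep it quick to run; the distribution parameters and the seed are arbitrary assumptions.

```python
import random
import statistics

rng = random.Random(42)

# Synthetic population (assumed values): heights in cm, mean 170, sd 10.
population = [rng.gauss(170.0, 10.0) for _ in range(100_000)]
population_mean = statistics.fmean(population)  # the parameter

# Simple random sample of 1,000 individuals and its statistic.
sample = rng.sample(population, 1_000)
sample_mean = statistics.fmean(sample)

# Sampling error: difference between the statistic and the parameter.
sampling_error = sample_mean - population_mean
print(f"population mean: {population_mean:.2f} cm")
print(f"sample mean:     {sample_mean:.2f} cm")
print(f"sampling error:  {sampling_error:+.2f} cm")
```

In a real survey only the sample mean is available, so the error itself cannot be computed and is instead bounded probabilistically, for example through a standard error.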

Temporal resolution (TR) refers to the discrete resolution of a measurement with respect to time. Often there is a trade-off between the temporal resolution of a measurement and its spatial resolution, due to Heisenberg's uncertainty principle. In some contexts such as particle physics, this trade-off can be attributed to the finite speed of light and the fact that it takes a certain period of time for the photons carrying information to reach the observer. In this time, the system might have undergone changes itself. Thus, the longer the light has to travel, the lower the temporal resolution.

A radar tracker is a component of a radar system, or an associated command and control (C2) system, that associates consecutive radar observations of the same target into tracks. It is particularly useful when the radar system is reporting data from several different targets or when it is necessary to combine the data from several different radars or other sensors.

The standard atomic weight of a chemical element is the weighted arithmetic mean of the relative atomic masses (Ar) of all isotopes of that element weighted by each isotope's abundance on Earth. The standard atomic weight of each chemical element is determined and published by the Commission on Isotopic Abundances and Atomic Weights (CIAAW) of the International Union of Pure and Applied Chemistry (IUPAC) based on natural, stable, terrestrial sources of the element. It is the most common and practical atomic weight used by scientists.
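The abundance-weighted mean can be illustrated with chlorine, which has two stable isotopes. The relative atomic masses and abundances below are approximate, rounded values used only for illustration, and the function name is hypothetical; published CIAAW figures should be consulted for authoritative data.

```python
def standard_atomic_weight(isotopes):
    """Abundance-weighted mean of relative atomic masses.

    isotopes: list of (relative_atomic_mass, abundance) pairs. Abundances
    are normalised here, so they may be fractions or percentages.
    """
    total_abundance = sum(abundance for _, abundance in isotopes)
    weighted = sum(mass * abundance for mass, abundance in isotopes)
    return weighted / total_abundance


# Approximate values for chlorine-35 and chlorine-37 (illustrative).
chlorine = [(34.96885, 0.7576), (36.96590, 0.2424)]
print(round(standard_atomic_weight(chlorine), 2))
```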

In statistics, a simple random sample is a subset of individuals chosen from a larger set. Each individual is chosen randomly and entirely by chance, such that each individual has the same probability of being chosen at any stage during the sampling process, and each subset of k individuals has the same probability of being chosen for the sample as any other subset of k individuals. This process and technique is known as simple random sampling, and should not be confused with systematic random sampling. A simple random sample is an unbiased surveying technique.
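The defining property, that every subset of k individuals is equally likely, can be checked empirically on a tiny population. This sketch relies on Python's `random.sample`, which draws without replacement; the wrapper name, the four-member population, and the number of repetitions are assumptions for illustration.

```python
import random
from collections import Counter


def simple_random_sample(population, k, rng):
    """Draw k distinct individuals, each subset of size k equally likely."""
    return rng.sample(population, k)


rng = random.Random(7)
population = ["A", "B", "C", "D"]

# Draw many samples of size 2 and count how often each subset appears.
counts = Counter(
    frozenset(simple_random_sample(population, 2, rng)) for _ in range(6_000)
)
for subset, n in sorted(counts.items(), key=lambda item: sorted(item[0])):
    print(sorted(subset), n)
```

There are six possible subsets of size 2, so each should appear in roughly one sixth of the draws. A systematic sample (every second individual, say) would produce only some of these subsets, which is why the two techniques should not be confused.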

Hofstede's cultural dimensions theory is a framework for cross-cultural communication, developed by Geert Hofstede. It shows the effects of a society's culture on the values of its members, and how these values relate to behaviour, using a structure derived from factor analysis.