Related Research Articles

In number theory, integer factorization is the decomposition of a composite number into a product of smaller integers. If these factors are further restricted to prime numbers, the process is called prime factorization.
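As a minimal sketch of prime factorization, the following uses trial division, the simplest (and slowest) approach. The function name `prime_factors` is chosen here for illustration only; practical factoring of large integers relies on far more sophisticated algorithms.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n, with multiplicity, by trial division."""
    if n < 2:
        raise ValueError("n must be an integer >= 2")
    factors = []
    d = 2
    # Only divisors up to sqrt(n) need to be tried.
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    # Whatever remains above 1 is itself prime.
    if n > 1:
        factors.append(n)
    return factors


print(prime_factors(360))  # [2, 2, 2, 3, 3, 5]
```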

Pattern recognition is the automated recognition of patterns and regularities in data. It has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power. However, pattern recognition and machine learning can be viewed as two facets of the same field, and together they have undergone substantial development over the past few decades. A modern definition of pattern recognition is:

The field of pattern recognition is concerned with the automatic discovery of regularities in data through the use of computer algorithms and with the use of these regularities to take actions such as classifying the data into different categories.

In computational intelligence (CI), an evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions. Evolution of the population then takes place after the repeated application of the above operators.
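The loop described above can be sketched on a toy problem. The example below assumes the "OneMax" task (maximize the number of 1 bits in a bit string) and picks tournament selection, one-point crossover, bit-flip mutation and single-individual elitism; all of these choices, parameter values and names are illustrative assumptions, not part of any particular EA definition.

```python
import random


def evolve(n_bits=20, pop_size=30, generations=60, mutation_rate=0.05, seed=0):
    """Evolve bit strings toward all ones; returns the best individual found."""
    rng = random.Random(seed)
    fitness = sum  # OneMax fitness: the number of 1 bits

    def tournament(population):
        # Selection: the fitter of two randomly drawn individuals wins.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        # Elitism: carry the current best individual over unchanged.
        children = [max(pop, key=fitness)[:]]
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            cut = rng.randrange(1, n_bits)  # recombination: one-point crossover
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit independently with small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
    return max(pop, key=fitness)


best = evolve()
print(sum(best), "ones out of", len(best))
```

Because the best individual is always copied into the next generation, the best fitness in the population never decreases from one generation to the next.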

Machine learning (ML) is the study of computer algorithms that improve automatically through experience and by the use of data. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as "training data", in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as email filtering and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks.

In bioinformatics, sequence analysis is the process of subjecting a DNA, RNA or peptide sequence to any of a wide range of analytical methods to understand its features, function, structure, or evolution. Methodologies used include sequence alignment, searches against biological databases, and others. Since the development of methods for high-throughput production of gene and protein sequences, the rate at which new sequences are added to the databases has increased exponentially. Such a collection of sequences does not, by itself, increase the scientist's understanding of the biology of organisms. However, comparing these new sequences to those with known functions is a key way of understanding the biology of the organism from which a new sequence comes. Thus, sequence analysis can be used to assign function to genes and proteins by studying the similarities between the compared sequences. Many tools and techniques now exist that perform these sequence comparisons and analyze the resulting alignments.
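To make "sequence alignment" concrete, here is a minimal sketch of global alignment scoring by dynamic programming, in the style of the Needleman-Wunsch algorithm. The scoring values (match +1, mismatch -1, gap -1) and the function name are assumptions chosen for illustration; real tools use substitution matrices and more elaborate gap penalties.

```python
def global_alignment_score(a: str, b: str, match=1, mismatch=-1, gap=-1) -> int:
    """Best global alignment score of a and b under a simple scoring scheme."""
    # prev[j] holds the best score for aligning the processed prefix of a with b[:j].
    prev = [j * gap for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        curr = [i * gap]  # aligning a[:i] against the empty prefix costs i gaps
        for j in range(1, len(b) + 1):
            diagonal = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            curr.append(max(diagonal, prev[j] + gap, curr[j - 1] + gap))
        prev = curr
    return prev[-1]


print(global_alignment_score("ACGT", "AGT"))  # 2: three matches and one gap
```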

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm.
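A minimal sketch of the Metropolis-Hastings algorithm with a symmetric Gaussian random-walk proposal follows. The target (a standard normal, given through its unnormalized log density), the step size and all names are illustrative assumptions.

```python
import math
import random


def metropolis_hastings(log_density, x0, n_steps, step_size=1.0, seed=0):
    """Random-walk Metropolis sampler for a one-dimensional target."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_steps):
        proposal = x + rng.gauss(0.0, step_size)
        # The proposal is symmetric, so the acceptance ratio reduces to
        # the ratio of target densities (computed in log space).
        log_alpha = log_density(proposal) - log_density(x)
        if rng.random() < math.exp(min(0.0, log_alpha)):
            x = proposal
        samples.append(x)  # a rejected proposal repeats the current state
    return samples


# Target: standard normal, up to an additive constant in the log density.
samples = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, n_steps=20000)
mean = sum(samples) / len(samples)
print(f"sample mean is approximately {mean:.2f}")
```

Only differences of log densities enter the acceptance step, so the normalizing constant of the target never needs to be known.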

Neuroevolution, or neuro-evolution, is a form of artificial intelligence that uses evolutionary algorithms to generate artificial neural networks (ANNs), including their parameters, topology and rules. It is most commonly applied in artificial life, general game playing and evolutionary robotics. The main benefit is that neuroevolution can be applied more widely than supervised learning algorithms, which require a training set of correct input-output pairs. In contrast, neuroevolution requires only a measure of a network's performance at a task. For example, the outcome of a game can be easily measured without providing labeled examples of desired strategies. Neuroevolution is commonly used as part of the reinforcement learning paradigm, and it can be contrasted with conventional deep learning techniques that use gradient descent on a neural network with a fixed topology.

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of the random bits; thus the running time, the output, or both are random variables.
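One well-known example is randomized quickselect, sketched below. Its output is always correct, but its running time depends on the random pivot choices. The function name and the `rng` parameter are illustrative.

```python
import random


def quickselect(items, k, rng=random):
    """Return the k-th smallest element of items (0-indexed)."""
    if not 0 <= k < len(items):
        raise IndexError("k out of range")
    items = list(items)
    while True:
        pivot = rng.choice(items)  # the only use of randomness
        lows = [x for x in items if x < pivot]
        n_equal = sum(1 for x in items if x == pivot)
        if k < len(lows):
            items = lows  # the answer lies among the smaller elements
        elif k < len(lows) + n_equal:
            return pivot  # the pivot itself is the k-th smallest
        else:
            k -= len(lows) + n_equal
            items = [x for x in items if x > pivot]


print(quickselect([7, 2, 9, 4, 4, 1], 2))  # 4
```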

A haplotype is a group of alleles in an organism that are inherited together from a single parent.

An electropherogram, or electrophoregram, is a record or chart produced when electrophoresis is used in an analytical technique, primarily in the fields of molecular biology or biochemistry.

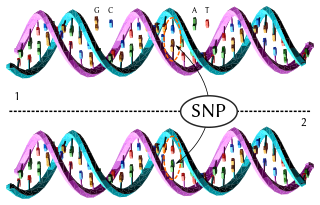

A tag SNP is a representative single nucleotide polymorphism (SNP) in a region of the genome with high linkage disequilibrium that represents a group of SNPs called a haplotype. It is possible to identify genetic variation and association to phenotypes without genotyping every SNP in a chromosomal region. This reduces the expense and time of mapping genome areas associated with disease, since it eliminates the need to study every individual SNP. Tag SNPs are useful in whole-genome SNP association studies in which hundreds of thousands of SNPs across the entire genome are genotyped.

Sequencing by hybridization is a class of methods for determining the order in which nucleotides occur on a strand of DNA. It is typically used to look for small changes relative to a known DNA sequence. The binding of one strand of DNA to its complementary strand in the DNA double-helix is sensitive to even single-base mismatches when the hybrid region is short or if specialized mismatch detection proteins are present. This is exploited in a variety of ways, most notably via DNA chips or microarrays with thousands to billions of synthetic oligonucleotides found in a genome of interest plus many known variations or even all possible single-base variations.

In probability theory, a Markov model is a stochastic model used to model pseudo-randomly changing systems. It is assumed that future states depend only on the current state, not on the events that occurred before it. Generally, this assumption enables reasoning and computation with the model that would otherwise be intractable. For this reason, in the fields of predictive modelling and probabilistic forecasting, it is desirable for a given model to exhibit the Markov property.
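The Markov property can be illustrated with a small simulation in which the next state is drawn using only the current state. The two-state "weather" chain and its transition probabilities below are made-up values for illustration.

```python
import random

# Hypothetical transition probabilities: TRANSITIONS[current][next].
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def simulate(start, n_steps, transitions=TRANSITIONS, seed=0):
    """Simulate a Markov chain; returns the visited states, including start."""
    rng = random.Random(seed)
    state = start
    path = [state]
    for _ in range(n_steps):
        next_states = list(transitions[state])
        weights = [transitions[state][s] for s in next_states]
        # Markov property: the draw depends on `state` alone, not on `path`.
        state = rng.choices(next_states, weights=weights, k=1)[0]
        path.append(state)
    return path


path = simulate("sunny", 20000)
print(f"fraction of sunny steps: {path.count('sunny') / len(path):.2f}")
```

For these probabilities the long-run fraction of sunny steps should approach 0.4 / (0.2 + 0.4) = 2/3, the stationary probability of the sunny state.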

In mathematics and computer science, the probabilistic method is used to prove the existence of mathematical objects with desired combinatorial properties. The proofs are probabilistic — they work by showing that a random object, chosen from some probability distribution, has the desired properties with positive probability. Consequently, they are nonconstructive — they don't explicitly describe an efficient method for computing the desired objects.

Forensic statistics is the application of probability models and statistical techniques to scientific evidence, such as DNA evidence, and the law. Unlike "everyday" statistics, forensic statistics reports the strength of evidence as a likelihood ratio (LR), so as not to introduce bias or draw undue conclusions. This ratio of probabilities is then used by juries or judges to draw inferences or conclusions and decide legal matters. Jurors and judges rely on the strength of a DNA match, given by statistics, to make conclusions and determine guilt or innocence in legal matters.
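A likelihood ratio compares the probability of the evidence under two competing hypotheses. The sketch below assumes the common framing of a prosecution hypothesis and a defense hypothesis; the parameter names and the example probabilities are hypothetical, not taken from any real case.

```python
def likelihood_ratio(p_given_prosecution: float, p_given_defense: float) -> float:
    """LR = P(evidence | prosecution hypothesis) / P(evidence | defense hypothesis)."""
    for p in (p_given_prosecution, p_given_defense):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    if p_given_defense == 0.0:
        raise ValueError("LR is undefined when the defense probability is zero")
    return p_given_prosecution / p_given_defense


# Hypothetical numbers: a match is certain if the suspect is the source,
# and has probability 0.001 if an unrelated person is the source.
lr = likelihood_ratio(1.0, 0.001)
print(f"The evidence is about {lr:.0f} times more probable under the first hypothesis.")
```

An LR above 1 supports the first hypothesis and an LR below 1 supports the second. The LR alone is not the probability that either hypothesis is true.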

SNV calling from NGS data is any of a range of methods for identifying the existence of single nucleotide variants (SNVs) from the results of next generation sequencing (NGS) experiments. These are computational techniques, and are in contrast to special experimental methods based on known population-wide single nucleotide polymorphisms. Due to the increasing abundance of NGS data, these techniques are becoming increasingly popular for performing SNP genotyping, with a wide variety of algorithms designed for specific experimental designs and applications. In addition to the usual application domain of SNP genotyping, these techniques have been successfully adapted to identify rare SNPs within a population, as well as detecting somatic SNVs within an individual using multiple tissue samples.

Stan is a probabilistic programming language for statistical inference written in C++. The Stan language is used to specify a (Bayesian) statistical model with an imperative program calculating the log probability density function.

In bioinformatics, a DNA read error occurs when a sequence assembler changes one DNA base for a different base. The reads from the sequence assembler can then be used to create a de Bruijn graph, which can be used in various ways to find errors.
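As a rough sketch, a de Bruijn graph can be built by sliding a window of length k over each read and linking each k-mer's prefix to its suffix. The low-count heuristic for flagging likely errors is one simple approach assumed here for illustration; the function names and the `min_count` threshold are hypothetical.

```python
from collections import Counter


def de_bruijn_edges(reads, k):
    """Count edges (prefix -> suffix of each k-mer) over all reads."""
    edges = Counter()
    for read in reads:
        for i in range(len(read) - k + 1):
            kmer = read[i:i + k]
            edges[(kmer[:-1], kmer[1:])] += 1  # nodes are (k-1)-mers
    return edges


def suspicious_edges(edges, min_count=2):
    """Edges seen fewer than min_count times, which may stem from read errors."""
    return {edge for edge, count in edges.items() if count < min_count}


reads = ["ACGTAC", "ACGTAC", "ACGTAC", "ACGAAC"]  # the last read has one altered base
edges = de_bruijn_edges(reads, k=4)
print(sorted(suspicious_edges(edges)))
# [('ACG', 'CGA'), ('CGA', 'GAA'), ('GAA', 'AAC')]
```

A single altered base creates a short side branch of rarely seen edges, while edges from the correct sequence accumulate high counts.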

PyMC3 is a Python package for Bayesian statistical modeling and probabilistic machine learning which focuses on advanced Markov chain Monte Carlo and variational fitting algorithms. It is a rewrite from scratch of the previous version of the PyMC software. Unlike PyMC2, which had used Fortran extensions for performing computations, PyMC3 relies on Theano for automatic differentiation and also for computation optimization and dynamic C compilation. From version 3.8 PyMC3 relies on ArviZ to handle plotting, diagnostics, and statistical checks. PyMC3 and Stan are the two most popular probabilistic programming tools. PyMC3 is an open source project, developed by the community and fiscally sponsored by NumFocus.

TrueAllele is a software program by Cybergenetics that analyzes DNA using statistical methods, a process called probabilistic genotyping. It is used in forensic identification. The program can be used in situations unsuited to traditional methods, such as when a mixture of multiple people's DNA is in a sample.

References

- Kwong, Katherine (2017). "The Algorithm Says You Did It: The Use of Black Box Algorithms to Analyze Complex DNA Evidence" (PDF). Harvard Journal of Law & Technology: 279–281.

- "Defense lawyers want to peek behind the curtain of probabilistic genotyping". ABA Journal. December 2017. Retrieved March 12, 2019.