In optics, aberration is a property of optical systems, such as lenses, that causes light to be spread out over some region of space rather than focused to a point. Aberrations cause the image formed by a lens to be blurred or distorted, with the nature of the distortion depending on the type of aberration. Aberration can be defined as a departure of the performance of an optical system from the predictions of paraxial optics. In an imaging system, it occurs when light from one point of an object does not converge into a single point after transmission through the system. Aberrations occur because the simple paraxial theory is not a completely accurate model of the effect of an optical system on light, rather than due to flaws in the optical elements.

Diffraction refers to various phenomena that occur when a wave encounters an obstacle or opening. It is defined as the bending of waves around the corners of an obstacle or through an aperture into the region of geometrical shadow of the obstacle/aperture. The diffracting object or aperture effectively becomes a secondary source of the propagating wave. Italian scientist Francesco Maria Grimaldi coined the word diffraction and was the first to record accurate observations of the phenomenon in 1660.

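In optics, the refractive index of a material is a dimensionless number that describes how fast light travels through the material. It is defined as n = c/v, where c is the speed of light in vacuum and v is the phase velocity of light in the medium.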

In optics, a Gaussian beam is a beam of electromagnetic radiation with high monochromaticity whose amplitude envelope in the transverse plane is given by a Gaussian function; this also implies a Gaussian intensity (irradiance) profile. This fundamental (or TEM00) transverse Gaussian mode describes the intended output of most (but not all) lasers, as such a beam can be focused into the most concentrated spot. When such a beam is refocused by a lens, the transverse phase dependence is altered; this results in a different Gaussian beam. The electric and magnetic field amplitude profiles along any such circular Gaussian beam (for a given wavelength and polarization) are determined by a single parameter: the so-called waist w0. At any position z relative to the waist (focus) along a beam having a specified w0, the field amplitudes and phases are thereby determined as detailed below.
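As a rough illustration of how the waist fixes the beam everywhere else, the sketch below evaluates the standard free-space expressions for the Rayleigh range, z_R = π w0² / λ, and the beam radius, w(z) = w0 sqrt(1 + (z / z_R)²). The function names and example values are assumptions chosen for illustration. The formulas assume propagation in vacuum (refractive index 1) within the paraxial approximation.

```python
import math

def rayleigh_range(w0, wavelength):
    """Distance from the waist at which the beam radius has grown by sqrt(2)."""
    return math.pi * w0 ** 2 / wavelength

def beam_radius(z, w0, wavelength):
    """1/e^2 intensity radius w(z) at axial distance z from the waist."""
    z_r = rayleigh_range(w0, wavelength)
    return w0 * math.sqrt(1.0 + (z / z_r) ** 2)

def far_field_half_angle(w0, wavelength):
    """Asymptotic divergence half-angle in radians (small-angle limit)."""
    return wavelength / (math.pi * w0)

# Hypothetical example: a 633 nm beam with a 0.5 mm waist.
w0 = 0.5e-3
wavelength = 633e-9
print("Rayleigh range [m]:", rayleigh_range(w0, wavelength))
print("Radius at 10 m [m]:", beam_radius(10.0, w0, wavelength))
```

A smaller waist gives a shorter Rayleigh range and a larger divergence, which is the trade-off behind focusing a beam to a tight spot.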

In optics, the numerical aperture (NA) of an optical system is a dimensionless number that characterizes the range of angles over which the system can accept or emit light. By incorporating index of refraction in its definition, NA has the property that it is constant for a beam as it goes from one material to another, provided there is no refractive power at the interface. The exact definition of the term varies slightly between different areas of optics. Numerical aperture is commonly used in microscopy to describe the acceptance cone of an objective, and in fiber optics, in which it describes the range of angles within which light that is incident on the fiber will be transmitted along it.
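A minimal sketch of the two usages mentioned above, assuming the common definitions NA = n sin θ for an objective (θ being the half-angle of the acceptance cone and n the index of the surrounding medium) and NA = sqrt(n_core² − n_clad²) for a step-index fiber. The function names and sample indices are illustrative assumptions, not taken from any particular library.

```python
import math

def numerical_aperture(n_medium, half_angle_rad):
    """NA = n * sin(theta) for a cone of half-angle theta in a medium of index n."""
    return n_medium * math.sin(half_angle_rad)

def step_index_fiber_na(n_core, n_clad):
    """NA of a step-index fiber, valid when n_core >= n_clad."""
    return math.sqrt(n_core ** 2 - n_clad ** 2)

# Hypothetical oil-immersion objective: n = 1.515, half-angle of 67 degrees.
print(numerical_aperture(1.515, math.radians(67.0)))

# Hypothetical fiber with core index 1.48 and cladding index 1.46.
print(step_index_fiber_na(1.48, 1.46))
```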

In optics, the f-number of an optical system such as a camera lens is the ratio of the system's focal length to the diameter of the entrance pupil. It is also known as the focal ratio, f-ratio, or f-stop, and is very important in photography. It is a dimensionless number that is a quantitative measure of lens speed; increasing the f-number is referred to as stopping down. The f-number is commonly indicated using a lower-case hooked f with the format f/N, where N is the f-number.
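The ratio can be written as N = f / D, with f the focal length and D the entrance pupil diameter. The sketch below computes it and also estimates how image-plane illuminance changes when stopping down, under the usual assumption that illuminance scales as 1 / N². The function names are illustrative assumptions.

```python
def f_number(focal_length, pupil_diameter):
    """N = f / D; both arguments must use the same length unit."""
    return focal_length / pupil_diameter

def relative_illuminance(n_from, n_to):
    """Illuminance at n_to relative to n_from, assuming it scales as 1 / N^2."""
    return (n_from / n_to) ** 2

# Hypothetical 50 mm lens with a 25 mm entrance pupil.
n = f_number(50.0, 25.0)
print("f-number:", n)

# Stopping down from f/2 to f/4 under the 1/N^2 assumption.
print("relative illuminance:", relative_illuminance(2.0, 4.0))
```

Under this assumption each factor of sqrt(2) in N halves the light reaching the image plane, which is why the conventional stop sequence runs 1.4, 2, 2.8, 4, and so on.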

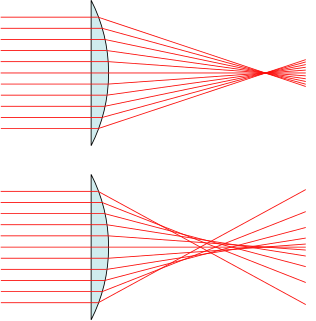

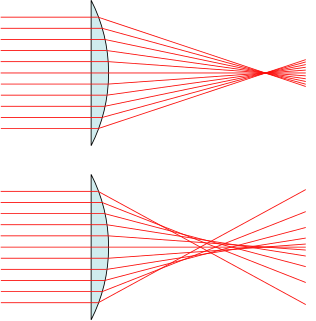

In optics, spherical aberration (SA) is a type of aberration found in optical systems that have elements with spherical surfaces. Lenses and curved mirrors are prime examples, because this shape is easier to manufacture. Light rays that strike a spherical surface off-centre are refracted or reflected more or less than those that strike close to the centre. This deviation reduces the quality of images produced by optical systems.
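The effect can be made concrete for one simple case: a concave spherical mirror of radius R illuminated by rays parallel to the axis. By the law of reflection, a ray at height h crosses the axis at a distance R − R / (2 cos θ) from the mirror vertex, where sin θ = h / R. The paraxial limit (h → 0) gives the familiar focal length R / 2. The sketch below evaluates this expression; the function name and sample values are assumptions for illustration, and the result is only meaningful for moderate ray heights.

```python
import math

def mirror_focus_distance(radius, height):
    """Distance from the vertex of a concave spherical mirror to the point
    where a reflected ray, initially parallel to the axis at the given
    height, crosses the axis."""
    if abs(height) >= radius:
        raise ValueError("ray height must be smaller than the mirror radius")
    cos_theta = math.sqrt(1.0 - (height / radius) ** 2)
    return radius - radius / (2.0 * cos_theta)

# Hypothetical mirror with R = 1 (arbitrary length unit).
for h in (0.0, 0.1, 0.3, 0.5):
    print(h, mirror_focus_distance(1.0, h))
```

Rays farther from the axis cross it closer to the mirror than the paraxial focus, so no single image plane brings all of them to a point.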

Angular resolution describes the ability of any image-forming device such as an optical or radio telescope, a microscope, a camera, or an eye, to distinguish small details of an object, thereby making it a major determinant of image resolution. It is used in optics applied to light waves, in antenna theory applied to radio waves, and in acoustics applied to sound waves. The colloquial use of the term "resolution" often causes confusion; when a camera is said to have high resolution because of its good image quality, it actually has a small angular resolution, meaning that the smallest angular separation at which it can still distinguish two objects is small. The closely related term spatial resolution refers to the precision of a measurement with respect to space, which is directly connected to angular resolution in imaging instruments. The Rayleigh criterion shows that the minimum angular spread that can be resolved by an image-forming system is limited by diffraction to approximately the ratio of the wavelength of the waves to the aperture width. For this reason, high-resolution imaging systems such as astronomical telescopes, long-distance telephoto camera lenses and radio telescopes have large apertures.
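For a circular aperture of diameter D, the Rayleigh criterion is usually written θ ≈ 1.22 λ / D, with θ in radians. The sketch below evaluates it; the function name and example values are assumptions for illustration, and the factor 1.22 is the customary rounded value.

```python
import math

def rayleigh_limit(wavelength, aperture_diameter):
    """Smallest resolvable angular separation in radians for a circular
    aperture, using the rounded factor 1.22."""
    return 1.22 * wavelength / aperture_diameter

# Hypothetical 100 mm telescope observing green light at 550 nm.
theta = rayleigh_limit(550e-9, 0.1)
print("radians:", theta)
print("arcseconds:", math.degrees(theta) * 3600.0)
```

Doubling the aperture diameter halves the limit, which is the quantitative reason large apertures are needed for fine angular detail.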

Fourier optics is the study of classical optics using Fourier transforms (FTs), in which the waveform being considered is regarded as made up of a combination, or superposition, of plane waves. It has some parallels to the Huygens–Fresnel principle, in which the wavefront is regarded as being made up of a combination of spherical wavefronts whose sum is the wavefront being studied. A key difference is that Fourier optics considers the plane waves to be natural modes of the propagation medium, as opposed to Huygens–Fresnel, where the spherical waves originate in the physical medium.

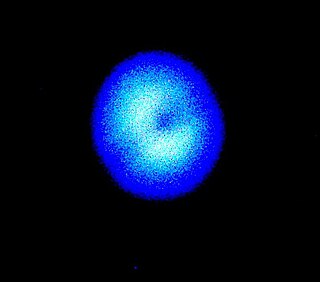

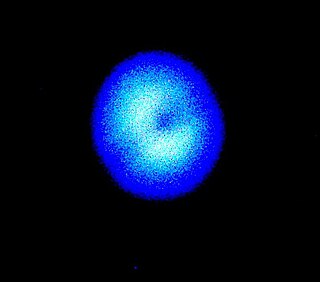

In optics, the Airy disk and Airy pattern are descriptions of the best-focused spot of light that a perfect lens with a circular aperture can make, limited by the diffraction of light. The Airy disk is of importance in physics, optics, and astronomy.
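The Airy pattern has the normalized intensity profile I(x) / I(0) = (2 J1(x) / x)², where J1 is the first-order Bessel function of the first kind and x = π D sin θ / λ for an aperture of diameter D. The sketch below evaluates it with the standard library only, computing J1 from its integral representation by the trapezoid rule. The function names and the step count are illustrative assumptions; in practice a library routine for J1 would normally be used instead.

```python
import math

def bessel_j1(x, steps=200):
    """J1(x) = (1/pi) * integral from 0 to pi of cos(t - x*sin(t)) dt,
    approximated with the trapezoid rule."""
    h = math.pi / steps
    total = 0.5 * (math.cos(0.0) + math.cos(math.pi - x * math.sin(math.pi)))
    for i in range(1, steps):
        t = i * h
        total += math.cos(t - x * math.sin(t))
    return total * h / math.pi

def airy_intensity(x):
    """Normalized Airy pattern intensity (2*J1(x)/x)^2, equal to 1 at x = 0."""
    if abs(x) < 1e-12:
        return 1.0
    return (2.0 * bessel_j1(x) / x) ** 2

# The first dark ring sits at the first zero of J1, x of about 3.8317,
# which corresponds to sin(theta) of about 1.22 * wavelength / D.
for x in (0.0, 1.0, 2.0, 3.8317, 5.0):
    print(x, airy_intensity(x))
```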

The point spread function (PSF) describes the response of an imaging system to a point source or point object. A more general term for the PSF is a system's impulse response, the PSF being the impulse response of a focused optical system. The PSF in many contexts can be thought of as the extended blob in an image that represents a single point object. In functional terms, it is the spatial domain version of the optical transfer function of the imaging system. It is a useful concept in Fourier optics, astronomical imaging, medical imaging, electron microscopy and other imaging techniques such as 3D microscopy and fluorescence microscopy.

In physics, the wavefront of a time-varying field is the set (locus) of all points where the wave has the same phase of the sinusoid. The term is generally meaningful only for fields that, at each point, vary sinusoidally in time with a single temporal frequency.

In optics, the Fresnel diffraction equation for near-field diffraction is an approximation of the Kirchhoff–Fresnel diffraction that can be applied to the propagation of waves in the near field. It is used to calculate the diffraction pattern created by waves passing through an aperture or around an object, when viewed from relatively close to the object. In contrast the diffraction pattern in the far field region is given by the Fraunhofer diffraction equation.
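A common rule of thumb for choosing between the two regimes uses the Fresnel number F = a² / (L λ), where a is the characteristic aperture size (for example its radius), L the distance to the observation plane, and λ the wavelength. The sketch below computes F and applies an illustrative threshold; the function names and the threshold of 1 are assumptions, since the transition between regimes is gradual rather than sharp.

```python
def fresnel_number(aperture_size, distance, wavelength):
    """F = a^2 / (L * lambda); all lengths in the same unit."""
    return aperture_size ** 2 / (distance * wavelength)

def diffraction_regime(aperture_size, distance, wavelength, threshold=1.0):
    """Rough classification: 'near field (Fresnel)' when F >= threshold,
    otherwise 'far field (Fraunhofer)'. The threshold is a rule of thumb."""
    f = fresnel_number(aperture_size, distance, wavelength)
    return "near field (Fresnel)" if f >= threshold else "far field (Fraunhofer)"

# Hypothetical 1 mm aperture illuminated at 500 nm.
print(fresnel_number(1e-3, 1.0, 500e-9), diffraction_regime(1e-3, 1.0, 500e-9))
print(fresnel_number(1e-3, 100.0, 500e-9), diffraction_regime(1e-3, 100.0, 500e-9))
```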

The optical transfer function (OTF) of an optical system such as a camera, microscope, human eye, or projector specifies how different spatial frequencies are handled by the system. It is used by optical engineers to describe how the optics project light from the object or scene onto a photographic film, detector array, retina, screen, or simply the next item in the optical transmission chain. A variant, the modulation transfer function (MTF), neglects phase effects, but is equivalent to the OTF in many situations.
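For incoherent imaging, the OTF is the Fourier transform of the point spread function, and the MTF is its magnitude normalized to 1 at zero spatial frequency. The sketch below illustrates this in one dimension with a plain discrete Fourier transform from the standard library. The function names and the toy PSF are assumptions for illustration; a real analysis would use a measured two-dimensional PSF and an FFT routine.

```python
import cmath

def dft(samples):
    """Direct discrete Fourier transform of a short sequence."""
    n = len(samples)
    return [
        sum(samples[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]

def mtf_from_psf(psf):
    """MTF as the magnitude of the OTF, normalized so that MTF[0] == 1."""
    otf = dft(psf)
    dc = abs(otf[0])
    return [abs(value) / dc for value in otf]

# Toy PSF: a point blurred evenly across two neighboring samples.
psf = [0.5, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
for k, value in enumerate(mtf_from_psf(psf)):
    print(k, round(value, 4))
```

The MTF of this toy PSF falls from 1 at zero frequency to 0 at the highest sampled frequency, showing how even a small blur suppresses fine detail while leaving coarse structure largely intact.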

High-resolution transmission electron microscopy is an imaging mode of specialized transmission electron microscopes that allows for direct imaging of the atomic structure of samples. It is a powerful tool to study properties of materials on the atomic scale, such as semiconductors, metals, nanoparticles and sp2-bonded carbon. While this term is often also used to refer to high resolution scanning transmission electron microscopy, mostly in high angle annular dark field mode, this article describes mainly the imaging of an object by recording the two-dimensional spatial wave amplitude distribution in the image plane, in analogy to a "classic" light microscope. For disambiguation, the technique is also often referred to as phase contrast transmission electron microscopy. At present, the highest point resolution realised in phase contrast transmission electron microscopy is around 0.5 ångströms (0.050 nm). At these small scales, individual atoms of a crystal and its defects can be resolved. For 3-dimensional crystals, it may be necessary to combine several views, taken from different angles, into a 3D map. This technique is called electron crystallography.

The transmission coefficient is used in physics and electrical engineering when wave propagation in a medium containing discontinuities is considered. A transmission coefficient describes the amplitude, intensity, or total power of a transmitted wave relative to an incident wave.
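The distinction between amplitude and power coefficients can be illustrated with one simple optical case: light at normal incidence on a flat boundary between two lossless, non-magnetic dielectrics of refractive indices n1 and n2. There the amplitude transmission coefficient is t = 2 n1 / (n1 + n2) and the transmitted power fraction is T = (n2 / n1) t². The function names below are assumptions for illustration, and other settings (oblique incidence, transmission lines, quantum barriers) use different expressions.

```python
def amplitude_transmission(n1, n2):
    """Field amplitude ratio t = 2*n1 / (n1 + n2) at normal incidence."""
    return 2.0 * n1 / (n1 + n2)

def power_transmission(n1, n2):
    """Transmitted power fraction T = (n2 / n1) * t^2 at normal incidence."""
    t = amplitude_transmission(n1, n2)
    return (n2 / n1) * t ** 2

def power_reflection(n1, n2):
    """Reflected power fraction R = ((n1 - n2) / (n1 + n2))^2."""
    return ((n1 - n2) / (n1 + n2)) ** 2

# Hypothetical air-to-glass boundary with n1 = 1.0 and n2 = 1.5.
print(amplitude_transmission(1.0, 1.5))
print(power_transmission(1.0, 1.5), power_reflection(1.0, 1.5))
```

For a lossless boundary the power fractions satisfy T + R = 1, whereas the amplitude coefficient alone does not express energy conservation.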

Focus recovery from a defocused image is an ill-posed problem because defocus removes the high-frequency components of the image. Most focus recovery methods are based on depth estimation theory. The linear canonical transform (LCT) provides a scalable kernel that fits many well-known optical effects. Using LCTs to approximate an optical imaging system and then inverting that system theoretically permits recovery of a defocused image.

The contrast transfer function (CTF) mathematically describes how aberrations in a transmission electron microscope (TEM) modify the image of a sample. The CTF sets the resolution of high-resolution transmission electron microscopy (HRTEM), also known as phase contrast TEM.
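In a commonly used simplified form, the phase-contrast part of the CTF is sin χ(k), with aberration phase χ(k) = π λ Δf k² + (π / 2) Cs λ³ k⁴, where k is the spatial frequency, λ the electron wavelength, Δf the defocus, and Cs the spherical aberration coefficient. The sketch below evaluates this form only. It is an assumption-laden illustration: sign conventions for Δf and for the overall function differ between texts, and envelope functions and amplitude contrast are omitted. The function names and sample values are hypothetical.

```python
import math

def aberration_phase(k, wavelength, defocus, cs):
    """chi(k) = pi*lambda*df*k^2 + (pi/2)*Cs*lambda^3*k^4 (one sign convention)."""
    return (math.pi * wavelength * defocus * k ** 2
            + 0.5 * math.pi * cs * wavelength ** 3 * k ** 4)

def phase_ctf(k, wavelength, defocus, cs):
    """Undamped phase-contrast transfer, sin(chi(k)); envelopes are ignored."""
    return math.sin(aberration_phase(k, wavelength, defocus, cs))

# Hypothetical values in metres: wavelength of about 2 pm, Cs = 1 mm,
# defocus = -60 nm (negative meaning underfocus in this convention).
wavelength, cs, defocus = 2.0e-12, 1.0e-3, -60e-9
for k_per_nm in (1.0, 2.0, 3.0, 4.0, 5.0):
    k = k_per_nm * 1e9  # convert 1/nm to 1/m
    print(k_per_nm, phase_ctf(k, wavelength, defocus, cs))
```

The oscillations of this function with k mean that some spatial frequencies are transferred with inverted contrast and others not at all, which is why the CTF limits directly interpretable resolution.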

As described here, white light interferometry is a non-contact optical method for surface height measurement on 3-D structures with surface profiles varying between tens of nanometers and a few centimeters. It is often used as an alternative name for coherence scanning interferometry in the context of areal surface topography instrumentation that relies on spectrally-broadband, visible-wavelength light.

Light field microscopy (LFM) is a scanning-free 3-dimensional (3D) microscopic imaging method based on the theory of the light field. This technique allows sub-second (~10 Hz) large volumetric imaging with ~1 μm spatial resolution under conditions of weak scattering and semi-transparency, which has never been achieved by other methods. Just as in traditional light field rendering, there are two steps for LFM imaging: light field capture and processing. In most setups, a microlens array is used to capture the light field. Processing can be based on either of two representations of light propagation: the ray optics picture or the wave optics picture. The Stanford University Computer Graphics Laboratory published its first prototype LFM in 2006 and has remained active in the field since then.