Reversible reference system propagation algorithm (r-RESPA) is a time stepping algorithm used in molecular dynamics. [1]

It evolves the system state over time,

$$\Gamma(t) = e^{iLt}\,\Gamma(0),$$

where $L$ is the Liouville operator.
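As a rough illustration of the time-stepping idea, the sketch below assumes the usual multiple-time-step reading of r-RESPA: the Liouville operator is split into a fast and a slow part, and the propagator is factorized so that the slow force is applied as two half-kicks around several small velocity-Verlet steps driven by the fast force. The one-dimensional oscillator, the force split, and all names (`respa_step`, `K_FAST`, `K_SLOW`) are hypothetical choices made for this example, not part of the original description.

```python
def respa_step(x, v, dt, n_inner, fast_force, slow_force, mass=1.0):
    """One outer step: slow half-kick, n_inner fast velocity-Verlet steps, slow half-kick."""
    v += 0.5 * dt * slow_force(x) / mass
    h = dt / n_inner  # inner (fast) time step
    for _ in range(n_inner):
        v += 0.5 * h * fast_force(x) / mass
        x += h * v
        v += 0.5 * h * fast_force(x) / mass
    v += 0.5 * dt * slow_force(x) / mass
    return x, v


# Hypothetical test system: a particle on a stiff spring plus a soft spring.
K_FAST, K_SLOW = 100.0, 1.0


def fast_force(x):
    return -K_FAST * x


def slow_force(x):
    return -K_SLOW * x


def energy(x, v):
    return 0.5 * v * v + 0.5 * (K_FAST + K_SLOW) * x * x


x, v = 1.0, 0.0
e0 = energy(x, v)
for _ in range(1000):
    x, v = respa_step(x, v, dt=0.01, n_inner=10,
                      fast_force=fast_force, slow_force=slow_force)
e1 = energy(x, v)
print(f"relative energy drift: {abs(e1 - e0) / e0:.2e}")
```

The slow force is evaluated only twice per outer step while the fast force is evaluated at every inner step, which is where the cost saving comes from when the slow force is the expensive one.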

Ionization or ionisation is the process by which an atom or a molecule acquires a negative or positive charge by gaining or losing electrons, often in conjunction with other chemical changes. The resulting electrically charged atom or molecule is called an ion. Ionization can result from the loss of an electron after collisions with subatomic particles, collisions with other atoms, molecules and ions, or through the interaction with electromagnetic radiation. Heterolytic bond cleavage and heterolytic substitution reactions can result in the formation of ion pairs. Ionization can occur through radioactive decay by the internal conversion process, in which an excited nucleus transfers its energy to one of the inner-shell electrons causing it to be ejected.

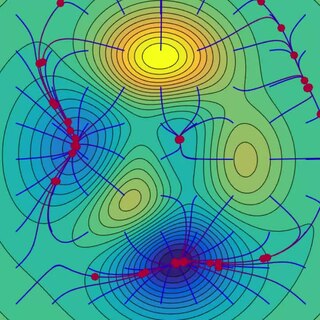

In mathematics, gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. The idea is to take repeated steps in the opposite direction of the gradient of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a local maximum of that function; the procedure is then known as gradient ascent.
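The update rule described above can be sketched in a few lines. This is a minimal illustration with a fixed step size; the function name `gradient_descent`, the step size, and the quadratic test function are assumptions chosen for the example.

```python
def gradient_descent(grad, x0, step=0.1, iters=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(iters):
        x = x - step * grad(x)  # move opposite to the gradient
    return x


# Hypothetical example: f(x) = (x - 3)^2 has gradient 2 * (x - 3)
# and a single minimum at x = 3.
x_min = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_min)
```

Flipping the sign of the update (`x + step * grad(x)`) gives gradient ascent.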

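The Lotka–Volterra equations, also known as the predator–prey equations, are a pair of first-order nonlinear differential equations, frequently used to describe the dynamics of biological systems in which two species interact, one as a predator and the other as prey. The populations change through time according to the pair of equations:

$$\frac{dx}{dt} = \alpha x - \beta x y, \qquad \frac{dy}{dt} = \delta x y - \gamma y,$$

where $x$ is the prey population, $y$ is the predator population, and $\alpha$, $\beta$, $\gamma$, $\delta$ are positive real parameters describing the interaction of the two species.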
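A small numerical sketch may help make the dynamics concrete. It assumes the standard form dx/dt = αx − βxy, dy/dt = δxy − γy (x prey, y predators) and integrates it with a classical fourth-order Runge-Kutta step. The parameter values, initial populations, and helper names are hypothetical. The quantity δx − γ ln x + βy − α ln y is conserved by the exact solution, so its drift gives a quick sanity check on the integrator.

```python
import math


def lotka_volterra(state, alpha, beta, delta, gamma):
    """Right-hand side of the predator-prey equations for state = (prey, predators)."""
    x, y = state
    return (alpha * x - beta * x * y, delta * x * y - gamma * y)


def rk4_step(f, state, dt, *params):
    """One classical Runge-Kutta (RK4) step for a tuple-valued state."""
    def shifted(k, scale):
        return tuple(s + scale * dt * ki for s, ki in zip(state, k))

    k1 = f(state, *params)
    k2 = f(shifted(k1, 0.5), *params)
    k3 = f(shifted(k2, 0.5), *params)
    k4 = f(shifted(k3, 1.0), *params)
    return tuple(
        s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def invariant(state, alpha, beta, delta, gamma):
    """Conserved quantity of the exact Lotka-Volterra flow."""
    x, y = state
    return delta * x - gamma * math.log(x) + beta * y - alpha * math.log(y)


PARAMS = (1.1, 0.4, 0.1, 0.4)  # hypothetical alpha, beta, delta, gamma
state = (10.0, 10.0)           # hypothetical initial prey and predator populations
v_start = invariant(state, *PARAMS)
for _ in range(2000):
    state = rk4_step(lotka_volterra, state, 0.01, *PARAMS)
v_end = invariant(state, *PARAMS)
print(state, abs(v_end - v_start))
```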

A granular material is a conglomeration of discrete solid, macroscopic particles characterized by a loss of energy whenever the particles interact. The constituents that compose granular material are large enough such that they are not subject to thermal motion fluctuations. Thus, the lower size limit for grains in granular material is about 1 μm. On the upper size limit, the physics of granular materials may be applied to ice floes where the individual grains are icebergs and to asteroid belts of the Solar System with individual grains being asteroids.

In electrical engineering, statistical computing and bioinformatics, the Baum–Welch algorithm is a special case of the EM algorithm used to find the unknown parameters of a hidden Markov model (HMM). It makes use of the forward-backward algorithm to compute the statistics for the expectation step.

The rigid rotor is a mechanical model of rotating systems. An arbitrary rigid rotor is a 3-dimensional rigid object, such as a top. To orient such an object in space requires three angles, known as Euler angles. A special case is the linear rotor, which requires only two angles to describe; a diatomic molecule is an example. More general molecules are 3-dimensional, such as water, ammonia, or methane.

The Rabinovich–Fabrikant equations are a set of three coupled ordinary differential equations exhibiting chaotic behaviour for certain values of the parameters. They are named after Mikhail Rabinovich and Anatoly Fabrikant, who described them in 1979.

ALOPEX is a correlation-based machine learning algorithm first proposed by Tzanakou and Harth in 1974.

Matching pursuit (MP) is a sparse approximation algorithm which finds the "best matching" projections of multidimensional data onto the span of an over-complete dictionary $D$. The basic idea is to approximately represent a signal $f$ from Hilbert space $H$ as a weighted sum of finitely many functions $g_{\gamma_n}$ (called atoms) taken from $D$. An approximation with $N$ atoms has the form

$$f(t) \approx \hat{f}_N(t) := \sum_{n=1}^{N} a_n g_{\gamma_n}(t).$$
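The greedy selection behind matching pursuit can be sketched as follows, assuming a finite-dimensional signal and a dictionary of unit-norm atoms stored as plain lists. At each step the atom with the largest absolute inner product with the current residual is chosen, and its contribution is subtracted. The function names and the toy dictionary are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matching_pursuit(signal, dictionary, n_atoms):
    """Greedy sparse approximation; atoms in `dictionary` are assumed to have unit norm."""
    residual = list(signal)
    picks = []  # (atom index, coefficient) pairs in selection order
    for _ in range(n_atoms):
        products = [dot(residual, g) for g in dictionary]
        best = max(range(len(dictionary)), key=lambda i: abs(products[i]))
        coeff = products[best]
        picks.append((best, coeff))
        residual = [r - coeff * g for r, g in zip(residual, dictionary[best])]
    return picks, residual


# Hypothetical over-complete dictionary in R^3: the standard basis plus one extra atom.
s = 1.0 / math.sqrt(2.0)
dictionary = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [s, s, 0.0],
]
picks, residual = matching_pursuit([2.0, 0.0, -1.0], dictionary, n_atoms=2)
print(picks, residual)
```

Because the dictionary is over-complete, the representation found this way is generally not unique and not guaranteed to be the sparsest one; the sketch only shows the selection rule.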

The time-evolving block decimation (TEBD) algorithm is a numerical scheme used to simulate one-dimensional quantum many-body systems, characterized by at most nearest-neighbour interactions. It is dubbed Time-evolving Block Decimation because it dynamically identifies the relevant low-dimensional Hilbert subspaces of an exponentially larger original Hilbert space. The algorithm, based on the Matrix Product States formalism, is highly efficient when the amount of entanglement in the system is limited, a requirement fulfilled by a large class of quantum many-body systems in one dimension.

In computer science, lattice problems are a class of optimization problems related to mathematical objects called lattices. The conjectured intractability of such problems is central to the construction of secure lattice-based cryptosystems: lattice problems are an example of NP-hard problems which have been shown to be average-case hard, providing a test case for the security of cryptographic algorithms. In addition, some lattice problems which are worst-case hard can be used as a basis for extremely secure cryptographic schemes. The use of worst-case hardness in such schemes makes them among the very few schemes that are very likely secure even against quantum computers. For applications in such cryptosystems, lattices over vector spaces or free modules are generally considered.

In computer science, online machine learning is a method of machine learning in which data becomes available in a sequential order and is used to update the best predictor for future data at each step, as opposed to batch learning techniques which generate the best predictor by learning on the entire training data set at once. Online learning is a common technique used in areas of machine learning where it is computationally infeasible to train over the entire dataset, necessitating out-of-core algorithms. It is also used in situations where it is necessary for the algorithm to dynamically adapt to new patterns in the data, or when the data itself is generated as a function of time, e.g., stock price prediction. Online learning algorithms may be prone to catastrophic interference, a problem that can be addressed by incremental learning approaches.
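To illustrate the one-example-at-a-time update, here is a minimal sketch of an online least-mean-squares learner for a linear predictor. It never stores the data set: each (x, y) pair is consumed from a stream, used for a single gradient step, and discarded. The function names, the learning rate, and the synthetic stream (y = 2x + 1) are assumptions made for this example.

```python
def online_lms(stream, learning_rate=0.1):
    """Fit y ~ w * x + b by updating the parameters once per incoming example."""
    w, b = 0.0, 0.0
    for x, y in stream:
        error = (w * x + b) - y         # prediction error on the new example
        w -= learning_rate * error * x  # gradient step for squared loss
        b -= learning_rate * error
    return w, b


def synthetic_stream(n):
    """Hypothetical noise-free data source: y = 2x + 1, yielded one pair at a time."""
    xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
    for i in range(n):
        x = xs[i % len(xs)]
        yield x, 2.0 * x + 1.0


w, b = online_lms(synthetic_stream(2000))
print(w, b)
```

Because the stream is a generator, memory use stays constant regardless of how many examples arrive, which is the property that makes this style of update usable out of core.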

Alan M. Frieze is a professor in the Department of Mathematical Sciences at Carnegie Mellon University, Pittsburgh, United States. He graduated from the University of Oxford in 1966, and obtained his PhD from the University of London in 1975. His research interests lie in combinatorics, discrete optimisation and theoretical computer science. Currently, he focuses on the probabilistic aspects of these areas; in particular, the study of the asymptotic properties of random graphs, the average case analysis of algorithms, and randomised algorithms. His recent work has included approximate counting and volume computation via random walks, finding edge-disjoint paths in expander graphs, and exploring anti-Ramsey theory and the stability of routing algorithms.

Gradient boosting is a machine learning technique used in regression and classification tasks, among others. It gives a prediction model in the form of an ensemble of weak prediction models, which are typically decision trees. When a decision tree is the weak learner, the resulting algorithm is called gradient-boosted trees; it usually outperforms random forest. A gradient-boosted trees model is built in a stage-wise fashion as in other boosting methods, but it generalizes the other methods by allowing optimization of an arbitrary differentiable loss function.
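The stage-wise construction can be sketched with one-dimensional inputs, squared-error loss, and depth-one trees (stumps) as the weak learners. For squared error the negative gradient is simply the residual, so each round fits a stump to the current residuals and adds a shrunken copy of it to the ensemble. This is an illustrative toy, not a production implementation; all names, the learning rate, and the data are hypothetical.

```python
def fit_stump(xs, residuals):
    """Best single-threshold regression stump on 1-D inputs (least squares).

    Assumes xs contains at least two distinct values.
    """
    best = None
    for t in sorted(set(xs))[:-1]:  # candidate thresholds; both sides stay non-empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Stage-wise additive model for squared-error loss."""
    base = sum(ys) / len(ys)  # initial constant prediction
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        # Negative gradient of 0.5 * (y - p)^2 with respect to p is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


# Hypothetical training data: y = x^2 on ten points.
xs = [float(i) for i in range(10)]
ys = [x * x for x in xs]
model = gradient_boost(xs, ys)
baseline = sum(ys) / len(ys)
mse_baseline = sum((y - baseline) ** 2 for y in ys) / len(ys)
mse_boosted = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(mse_baseline, mse_boosted)
```

Swapping in a different differentiable loss only changes how `residuals` is computed, which is the generalization the paragraph above refers to.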

In coding theory, expander codes form a class of error-correcting codes that are constructed from bipartite expander graphs. Along with Justesen codes, expander codes are of particular interest since they have a constant positive rate, a constant positive relative distance, and a constant alphabet size. In fact, the alphabet contains only two elements, so expander codes belong to the class of binary codes. Furthermore, expander codes can be both encoded and decoded in time proportional to the block length of the code.

In statistics and signal processing, step detection is the process of finding abrupt changes in the mean level of a time series or signal. It is usually considered as a special case of the statistical method known as change detection or change point detection. Often, the step is small and the time series is corrupted by some kind of noise, and this makes the problem challenging because the step may be hidden by the noise. Therefore, statistical and/or signal processing algorithms are often required.
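One simple way to locate a single step, shown here only as an illustration, is a least-squares split: try every possible change position, fit a constant mean on each side, and keep the position with the smallest total squared error. The function name and the sample signal are hypothetical, and the sketch assumes exactly one step in the data.

```python
def detect_step(signal):
    """Return the index where the second (post-step) segment starts."""
    best_index, best_cost = None, float("inf")
    for k in range(1, len(signal)):
        left, right = signal[:k], signal[k:]
        mean_left = sum(left) / len(left)
        mean_right = sum(right) / len(right)
        cost = (sum((v - mean_left) ** 2 for v in left)
                + sum((v - mean_right) ** 2 for v in right))
        if cost < best_cost:
            best_index, best_cost = k, cost
    return best_index


# Hypothetical noisy signal whose mean jumps from about 0 to about 1.
noisy = [0.1, -0.2, 0.0, 0.15, 1.1, 0.9, 1.05, 1.0]
step_at = detect_step(noisy)
print(step_at)
```

When the step is small relative to the noise, the cost curve becomes nearly flat and the estimate unreliable, which is the difficulty the paragraph above describes.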

A Hindley–Milner (HM) type system is a classical type system for the lambda calculus with parametric polymorphism. It is also known as Damas–Milner or Damas–Hindley–Milner. It was first described by J. Roger Hindley and later rediscovered by Robin Milner. Luis Damas contributed a close formal analysis and proof of the method in his PhD thesis.

In data mining and machine learning, the $k$ $q$-flats algorithm is an iterative method which aims to partition $m$ observations into $k$ clusters where each cluster is close to a $q$-flat, where $q$ is a given integer.

In statistics and physics, the multicanonical ensemble is a Markov chain Monte Carlo sampling technique that uses the Metropolis–Hastings algorithm to compute integrals where the integrand has a rough landscape with multiple local minima. It samples states according to the inverse of the density of states, which has to be known a priori or be computed using other techniques like the Wang and Landau algorithm. Multicanonical sampling is an important technique for spin systems like the Ising model or spin glasses.

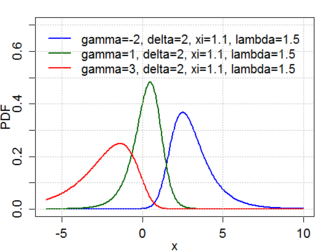

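Johnson's $S_U$-distribution is a four-parameter family of probability distributions first investigated by N. L. Johnson in 1949. Johnson proposed it as a transformation of the normal distribution:

$$z = \gamma + \delta \sinh^{-1}\!\left(\frac{x - \xi}{\lambda}\right),$$

where $z \sim \mathcal{N}(0, 1)$.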
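The transformation can be used directly for sampling. The sketch below assumes the usual parameterization z = γ + δ asinh((x − ξ)/λ) with z standard normal, so that x = ξ + λ sinh((z − γ)/δ). The function names and the parameter values are hypothetical.

```python
import math
import random


def normal_to_johnson_su(z, gamma, delta, xi, lam):
    """Map a standard normal value z to a Johnson SU value x."""
    return xi + lam * math.sinh((z - gamma) / delta)


def johnson_su_to_normal(x, gamma, delta, xi, lam):
    """Inverse map: Johnson SU value x back to the standard normal scale."""
    return gamma + delta * math.asinh((x - xi) / lam)


# Draw a few samples by transforming standard normal draws (hypothetical parameters).
rng = random.Random(0)
su_params = dict(gamma=0.5, delta=1.5, xi=0.0, lam=2.0)
samples = [normal_to_johnson_su(rng.gauss(0.0, 1.0), **su_params) for _ in range(5)]
print(samples)
```

Here γ and δ act as shape parameters, ξ as a location, and λ as a scale.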