Arithmetic is an elementary branch of mathematics that studies numerical operations like addition, subtraction, multiplication, and division. In a wider sense, it also includes exponentiation, extraction of roots, and taking logarithms.

Discrete mathematics is the study of mathematical structures that can be considered "discrete" rather than "continuous". Objects studied in discrete mathematics include integers, graphs, and statements in logic. By contrast, discrete mathematics excludes topics in "continuous mathematics" such as real numbers, calculus or Euclidean geometry. Discrete objects can often be enumerated by integers; more formally, discrete mathematics has been characterized as the branch of mathematics dealing with countable sets. However, there is no exact definition of the term "discrete mathematics".

In computing, floating-point arithmetic (FP) is arithmetic that represents subsets of real numbers using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. Numbers of this form are called floating-point numbers. For example, 12.345 is a floating-point number in base ten with five digits of precision:

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt to find approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

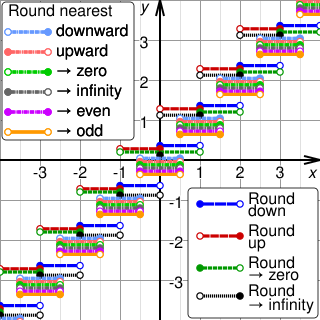

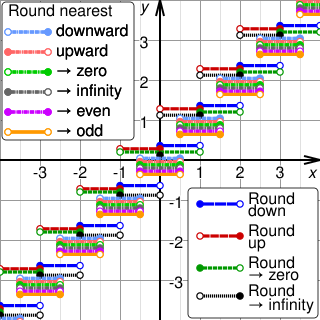

Rounding or rounding off means replacing a number with an approximate value that has a shorter, simpler, or more explicit representation. For example, replacing $23.4476 with $23.45, the fraction 312/937 with 1/3, or the expression √2 with 1.414.

In numerical analysis, the Kahan summation algorithm, also known as compensated summation, significantly reduces the numerical error in the total obtained by adding a sequence of finite-precision floating-point numbers, compared to the obvious approach. This is done by keeping a separate running compensation, in effect extending the precision of the sum by the precision of the compensation variable.

Graham's scan is a method of finding the convex hull of a finite set of points in the plane with time complexity O(n log n). It is named after Ronald Graham, who published the original algorithm in 1972. The algorithm finds all vertices of the convex hull ordered along its boundary. It uses a stack to detect and remove concavities in the boundary efficiently.

The IEEE Standard for Floating-Point Arithmetic is a technical standard for floating-point arithmetic established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard.

In computer science, arbitrary-precision arithmetic, also called bignum arithmetic, multiple-precision arithmetic, or sometimes infinite-precision arithmetic, indicates that calculations are performed on numbers whose digits of precision are potentially limited only by the available memory of the host system. This contrasts with the faster fixed-precision arithmetic found in most arithmetic logic unit (ALU) hardware, which typically offers between 8 and 64 bits of precision.

The term arithmetic underflow is a condition in a computer program where the result of a calculation is a number of more precise absolute value than the computer can actually represent in memory on its central processing unit (CPU).

The Library of Efficient Data types and Algorithms (LEDA) is a proprietarily-licensed software library providing C++ implementations of a broad variety of algorithms for graph theory and computational geometry. It was originally developed by the Max Planck Institute for Informatics Saarbrücken. Since 2001, LEDA is further developed and distributed by the Algorithmic Solutions Software GmbH.

In computing, especially computational geometry, a real RAM is a mathematical model of a computer that can compute with exact real numbers instead of the binary fixed point or floating point numbers used by most actual computers. The real RAM was formulated by Michael Ian Shamos in his 1978 Ph.D. dissertation.

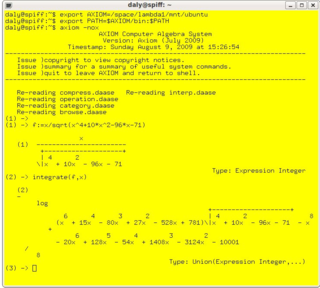

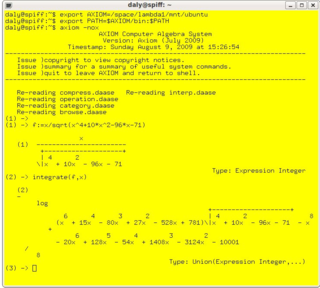

In mathematics and computer science, computer algebra, also called symbolic computation or algebraic computation, is a scientific area that refers to the study and development of algorithms and software for manipulating mathematical expressions and other mathematical objects. Although computer algebra could be considered a subfield of scientific computing, they are generally considered as distinct fields because scientific computing is usually based on numerical computation with approximate floating point numbers, while symbolic computation emphasizes exact computation with expressions containing variables that have no given value and are manipulated as symbols.

Mathematics is a field of study that investigates topics such as number, space, structure, and change.

Mathematics is a broad subject that is commonly divided in many areas that may be defined by their objects of study, by the used methods, or by both. For example, analytic number theory is a subarea of number theory devoted to the use of methods of analysis for the study of natural numbers.

Floating-point error mitigation is the minimization of errors caused by the fact that real numbers cannot, in general, be accurately represented in a fixed space. By definition, floating-point error cannot be eliminated, and, at best, can only be managed.

Validated numerics, or rigorous computation, verified computation, reliable computation, numerical verification is numerics including mathematically strict error evaluation, and it is one field of numerical analysis. For computation, interval arithmetic is used, and all results are represented by intervals. Validated numerics were used by Warwick Tucker in order to solve the 14th of Smale's problems, and today it is recognized as a powerful tool for the study of dynamical systems.

Complexity and Real Computation is a book on the computational complexity theory of real computation. It studies algorithms whose inputs and outputs are real numbers, using the Blum–Shub–Smale machine as its model of computation. For instance, this theory is capable of addressing a question posed in 1991 by Roger Penrose in The Emperor's New Mind: "is the Mandelbrot set computable?"

2Sum is a floating-point algorithm for computing the exact round-off error in a floating-point addition operation.