A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
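To make the scale comparison concrete, the following minimal sketch converts the figures quoted above into plain operation counts and computes how many times faster a 100 petaFLOPS machine is than a desktop at either end of the stated range. The names `PREFIXES`, `flops`, and `speedup` are illustrative helpers introduced here, not part of any library.

```python
# Decimal SI prefixes applied to FLOPS (illustrative helper table).
PREFIXES = {"giga": 10**9, "tera": 10**12, "peta": 10**15, "exa": 10**18}


def flops(value, prefix):
    """Convert e.g. (100, "peta") into a raw FLOPS count."""
    return value * PREFIXES[prefix]


def speedup(fast, slow):
    """How many times more operations per second `fast` performs than `slow`."""
    return fast // slow


# Figures taken from the paragraph above.
supercomputer = flops(100, "peta")  # 100 petaFLOPS = 10**17 FLOPS
desktop_low = flops(100, "giga")    # hundreds of gigaFLOPS, about 10**11
desktop_high = flops(10, "tera")    # tens of teraFLOPS, about 10**13

print(speedup(supercomputer, desktop_low))   # 1000000
print(speedup(supercomputer, desktop_high))  # 10000
```

Under these round-number assumptions, the gap between such a supercomputer and a desktop is roughly four to six orders of magnitude.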

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems.

David A. Bader is a Distinguished Professor and Director of the Institute for Data Science at the New Jersey Institute of Technology. Previously, he served as the Chair of the Georgia Institute of Technology School of Computational Science & Engineering, where he was also a founding professor, and the executive director of High-Performance Computing at the Georgia Tech College of Computing. In 2007, he was named the first director of the Sony Toshiba IBM Center of Competence for the Cell Processor at Georgia Tech.

The United States Department of Defense High Performance Computing Modernization Program (HPCMP) was initiated in 1992 in response to Congressional direction to modernize the Department of Defense (DoD) laboratories’ high performance computing capabilities. The HPCMP provides supercomputers, a national research network, high-end software tools, a secure environment, and computational science experts that together enable the Defense laboratories and test centers to conduct research, development, test and technology evaluation activities.

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each of which is a data center. Cloud computing relies on sharing of resources to achieve coherence and typically uses a pay-as-you-go model, which can help in reducing capital expenses but may also lead to unexpected operating expenses for users.

The IBM HPC Systems Scientific Computing User Group (ScicomP) is an international organization open to all scientific and technical users of IBM systems. At yearly meetings, application scientists and staff from HPC centers give talks on, and discuss, ways to develop efficient and scalable scientific applications. These meetings provide an opportunity to give feedback to IBM that will influence the design of future systems. ScicomP is a not-for-profit group and is not affiliated with IBM Corporation.

PERCS is IBM's answer to DARPA's High Productivity Computing Systems (HPCS) initiative. The program resulted in commercial development and deployment of the Power 775, a supercomputer design with extremely high performance ratios in fabric and memory bandwidth, as well as very high performance density and power efficiency.

Shaheen is the name of a series of supercomputers owned and operated by King Abdullah University of Science and Technology (KAUST), Saudi Arabia. Shaheen is named after the Peregrine Falcon. The most recent model, Shaheen II, is the largest and most powerful supercomputer in the Middle East.

Exascale computing refers to computing systems capable of calculating at least "10^18 IEEE 754 Double Precision (64-bit) operations (multiplications and/or additions) per second (exaFLOPS)"; it is a measure of supercomputer performance.

SC, the International Conference for High Performance Computing, Networking, Storage and Analysis, is an annual conference established in 1988 by the Association for Computing Machinery and the IEEE Computer Society. In 2019, about 13,950 people participated overall; by 2022 attendance had rebounded to 11,830, in person and online combined. The not-for-profit conference is run by a committee of approximately 600 volunteers who spend roughly three years organizing each conference.

Eurotech is a company dedicated to the research, development, production and marketing of miniature computers (NanoPCs) and high performance computers (HPCs).

The Ken Kennedy Award, established in 2009 by the Association for Computing Machinery and the IEEE Computer Society in memory of Ken Kennedy, is awarded annually and recognizes substantial contributions to programmability and productivity in computing, together with substantial community service or mentoring contributions. The award includes a $5,000 honorarium, and the recipient is announced at the ACM/IEEE Supercomputing Conference.

Several centers for supercomputing exist across Europe, and distributed access to them is coordinated by European initiatives to facilitate high-performance computing. One such initiative, the HPC Europa project, fits within the Distributed European Infrastructure for Supercomputing Applications (DEISA), which was formed in 2002 as a consortium of eleven supercomputing centers from seven European countries. Operating within the CORDIS framework, HPC Europa aims to provide access to supercomputers across Europe.

ACM SIGHPC is the Association for Computing Machinery's Special Interest Group on High Performance Computing, an international community of students, faculty, researchers, and practitioners working on research and in professional practice related to supercomputing, high-end computers, and cluster computing. The organization co-sponsors international conferences related to high performance and scientific computing, including: SC, the International Conference for High Performance Computing, Networking, Storage and Analysis; the Platform for Advanced Scientific Computing (PASC) Conference; Practice and Experience in Advanced Research Computing (PEARC); and PPoPP, the Symposium on Principles and Practice of Parallel Programming.

Richard Vuduc is a tenured professor of computer science at the Georgia Institute of Technology. His research lab, The HPC Garage, studies high-performance computing, scientific computing, parallel algorithms, modeling, and engineering. He is a member of the Association for Computing Machinery (ACM). As of 2022, Vuduc serves as Vice President of the SIAM Activity Group on Supercomputing. He has co-authored over 200 articles in peer-reviewed journals and conferences.

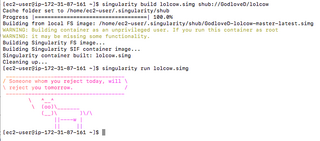

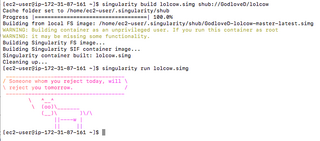

Singularity is a free and open-source computer program that performs operating-system-level virtualization, also known as containerization.

Ilkay Altintas is a Turkish-American data and computer scientist, and researcher in the domain of supercomputing and high-performance computing applications. Since 2015, Altintas has served as chief data science officer of the San Diego Supercomputer Center (SDSC), at the University of California, San Diego (UCSD), where she has also served as founder and director of the Workflows for Data Science Center of Excellence (WorDS) since 2014, as well as founder and director of the WIFIRE lab. Altintas is also the co-initiator of the Kepler scientific workflow system, an open-source platform that endows research scientists with the ability to readily collaborate, share, and design scientific workflows.

The European Processor Initiative (EPI) is a European project to design and build a new family of low-power European processors for supercomputers, Big Data, and automotive applications, offering high performance on traditional HPC applications and on emerging applications such as machine learning. It is led by a consortium of European companies and universities. The project is divided into multiple phases funded under Specific Grant Agreements. The first grant agreement is implemented under the European Commission's Horizon 2020 program and covers December 2018 to November 2021. The second agreement will be implemented afterwards under the EuroHPC Joint Undertaking, which issued a call that the same consortium answered in January 2021.

Torsten Hoefler is a Professor of Computer Science at ETH Zurich and the Chief Architect for Machine Learning at the Swiss National Supercomputing Centre. Previously, he led the Advanced Application and User Support team at the Blue Waters Directorate of the National Center for Supercomputing Applications, and held an adjunct professor position in the Computer Science Department at the University of Illinois at Urbana-Champaign. His expertise lies in large-scale parallel computing and high-performance computing systems. He focuses on applications in large-scale artificial intelligence as well as climate sciences.