Related Research Articles

In computing, multitasking is the concurrent execution of multiple tasks over a certain period of time. New tasks can interrupt already started ones before they finish, instead of waiting for them to end. As a result, a computer executes segments of multiple tasks in an interleaved manner, while the tasks share common processing resources such as central processing units (CPUs) and main memory. Multitasking automatically interrupts the running program, saving its state and loading the saved state of another program and transferring control to it. This "context switch" may be initiated at fixed time intervals, or the running program may be coded to signal to the supervisory software when it can be interrupted.

Real-time computing (RTC) is the computer science term for hardware and software systems subject to a "real-time constraint", for example from event to system response. Real-time programs must guarantee response within specified time constraints, often referred to as "deadlines".

A real-time operating system (RTOS) is an operating system (OS) for real-time computing applications that processes data and events that have critically defined time constraints. A RTOS is distinct from a time-sharing operating system, such as Unix, which manages the sharing of system resources with a scheduler, data buffers, or fixed task prioritization in a multitasking or multiprogramming environment. Processing time requirements need to be fully understood and bound rather than just kept as a minimum. All processing must occur within the defined constraints. Real-time operating systems are event-driven and preemptive, meaning the OS can monitor the relevant priority of competing tasks, and make changes to the task priority. Event-driven systems switch between tasks based on their priorities, while time-sharing systems switch the task based on clock interrupts.

In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. In many cases, a thread is a component of a process.

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors.

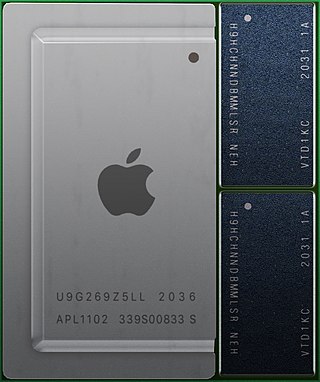

A system on a chip or system-on-chip is an integrated circuit that integrates most or all components of a computer or other electronic system. These components almost always include on-chip central processing unit (CPU), memory interfaces, input/output devices and interfaces, and secondary storage interfaces, often alongside other components such as radio modems and a graphics processing unit (GPU) – all on a single substrate or microchip. SoCs may contain digital and also analog, mixed-signal and often radio frequency signal processing functions.

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

In computer science, rate-monotonic scheduling (RMS) is a priority assignment algorithm used in real-time operating systems (RTOS) with a static-priority scheduling class. The static priorities are assigned according to the cycle duration of the job, so a shorter cycle duration results in a higher job priority.

In computer science, a lock or mutex is a synchronization primitive that prevents state from being modified or accessed by multiple threads of execution at once. Locks enforce mutual exclusion concurrency control policies, and with a variety of possible methods there exists multiple unique implementations for different applications.

In concurrent programming, concurrent accesses to shared resources can lead to unexpected or erroneous behavior, so parts of the program where the shared resource is accessed need to be protected in ways that avoid the concurrent access. One way to do so is known as a critical section or critical region. This protected section cannot be entered by more than one process or thread at a time; others are suspended until the first leaves the critical section. Typically, the critical section accesses a shared resource, such as a data structure, a peripheral device, or a network connection, that would not operate correctly in the context of multiple concurrent accesses.

In computer science, the fetch-and-add (FAA) CPU instruction atomically increments the contents of a memory location by a specified value.

DO-178B, Software Considerations in Airborne Systems and Equipment Certification is a guideline dealing with the safety of safety-critical software used in certain airborne systems. It was jointly developed by the safety-critical working group RTCA SC-167 of the Radio Technical Commission for Aeronautics (RTCA) and WG-12 of the European Organisation for Civil Aviation Equipment (EUROCAE). RTCA published the document as RTCA/DO-178B, while EUROCAE published the document as ED-12B. Although technically a guideline, it was a de facto standard for developing avionics software systems until it was replaced in 2012 by DO-178C.

Runtime verification is a computing system analysis and execution approach based on extracting information from a running system and using it to detect and possibly react to observed behaviors satisfying or violating certain properties. Some very particular properties, such as datarace and deadlock freedom, are typically desired to be satisfied by all systems and may be best implemented algorithmically. Other properties can be more conveniently captured as formal specifications. Runtime verification specifications are typically expressed in trace predicate formalisms, such as finite state machines, regular expressions, context-free patterns, linear temporal logics, etc., or extensions of these. This allows for a less ad-hoc approach than normal testing. However, any mechanism for monitoring an executing system is considered runtime verification, including verifying against test oracles and reference implementations. When formal requirements specifications are provided, monitors are synthesized from them and infused within the system by means of instrumentation. Runtime verification can be used for many purposes, such as security or safety policy monitoring, debugging, testing, verification, validation, profiling, fault protection, behavior modification, etc. Runtime verification avoids the complexity of traditional formal verification techniques, such as model checking and theorem proving, by analyzing only one or a few execution traces and by working directly with the actual system, thus scaling up relatively well and giving more confidence in the results of the analysis, at the expense of less coverage. Moreover, through its reflective capabilities runtime verification can be made an integral part of the target system, monitoring and guiding its execution during deployment.

Real-time database has two meanings. The most common use of the term refers to a database system which uses streaming technologies to handle workloads whose state is constantly changing. This differs from traditional databases containing persistent data, mostly unaffected by time. When referring to streaming technologies, real-time processing means that a transaction is processed fast enough for the result to come back and be acted on right away. Such real-time databases are useful for assisting social media platforms in the removal of fake news, in-store surveillance cameras identifying potential shoplifters by their behavior/movements, etc.

In computer science, a concurrent data structure is a particular way of storing and organizing data for access by multiple computing threads on a computer.

For several years parallel hardware was only available for distributed computing but recently it is becoming available for the low end computers as well. Hence it has become inevitable for software programmers to start writing parallel applications. It is quite natural for programmers to think sequentially and hence they are less acquainted with writing multi-threaded or parallel processing applications. Parallel programming requires handling various issues such as synchronization and deadlock avoidance. Programmers require added expertise for writing such applications apart from their expertise in the application domain. Hence programmers prefer to write sequential code and most of the popular programming languages support it. This allows them to concentrate more on the application. Therefore, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also non-trivial because large amount of legacy code written over the past few decades needs to be reused and parallelized.

High performance computing applications run on massively parallel supercomputers consist of concurrent programs designed using multi-threaded, multi-process models. The applications may consist of various constructs with varying degree of parallelism. Although high performance concurrent programs use similar design patterns, models and principles as that of sequential programs, unlike sequential programs, they typically demonstrate non-deterministic behavior. The probability of bugs increases with the number of interactions between the various parallel constructs. Race conditions, data races, deadlocks, missed signals and live lock are common error types.

Earliest deadline first (EDF) or least time to go is a dynamic priority scheduling algorithm used in real-time operating systems to place processes in a priority queue. Whenever a scheduling event occurs the queue will be searched for the process closest to its deadline. This process is the next to be scheduled for execution.

This glossary of computer science is a list of definitions of terms and concepts used in computer science, its sub-disciplines, and related fields, including terms relevant to software, data science, and computer programming.

References

- 1 2 Leung, Joseph; Zhao, Hairong (November 2005). Real-Time Scheduling Analysis (PDF) (Technical report). DOT/FAA/AR-05/27.

- ↑ Liu, Zhiming; Joseph, Mathai (17 February 2001). "Verification, Refinement and Scheduling of Real-time Programs". Theoretical Computer Science. 253 (1): 119–152. CiteSeerX 10.1.1.50.2896 . doi:10.1016/s0304-3975(00)00091-8.

- ↑ Sorin, Manolache; Petru, Eles; Zebo, Peng (November 2004). "Schedulability Analysis of Applications with Stochastic Task Execution Times" (PDF). ACM Transactions on Embedded Computing Systems. 3 (4): 706–735. doi:10.1145/1027794.1027797. S2CID 17526360 . Retrieved 4 December 2015.

- 1 2 3 4 5 6 Audsley, N.; Burns, A. (1990). Real-Time System Scheduling (PDF) (Technical report). University of York, UK.

- ↑ Castanet, R.; Laurençot, P. "Testing real-Time Systems". 15th World Conference on Nondestructive Testing. AIPnD. Retrieved 4 December 2015.