In domain theory, a branch of mathematics and computer science, a Scott information system is a primitive kind of logical deductive system often used as an alternative way of presenting Scott domains.

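A Scott information system, $A$, is an ordered triple $(T, Con, \vdash)$, where

- $T$ is a set of tokens (the basic units of information),
- $Con \subseteq \mathcal{P}_f(T)$ is a collection of finite subsets of $T$ (the consistent sets),
- ${\vdash} \subseteq (Con \setminus \{\emptyset\}) \times T$ is the entailment relation,

satisfying

1. If $a \in X \in Con$ then $X \vdash a$.
2. If $X \vdash Y$ and $Y \vdash a$, then $X \vdash a$.
3. If $X \vdash a$ then $X \cup \{a\} \in Con$.
4. $\forall a \in T : \{a\} \in Con$.
5. If $X \in Con$ and $X' \subseteq X$ then $X' \in Con$.

Here $X \vdash Y$ means $\forall a \in Y,\ X \vdash a$.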
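For a finite token set the conditions can be checked mechanically. The following is a minimal sketch, assuming the standard formulation in which the triple consists of a token set, a family of finite consistent subsets, and an entailment relation between nonempty consistent sets and tokens. The names `finite_subsets`, `is_information_system`, `con` and `entails` are illustrative and not taken from any library.

```python
from itertools import combinations


def finite_subsets(tokens):
    """All subsets of a finite token set, as frozensets."""
    items = list(tokens)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def is_information_system(tokens, con, entails):
    """Check the five axioms on a finite candidate (tokens, con, entails).

    con     -- set of frozensets of tokens (the consistent sets)
    entails -- function (X, a) -> bool, queried only for nonempty X in con
    """
    tokens = frozenset(tokens)
    if not all(X <= tokens for X in con):
        return False  # consistent sets must be subsets of the token set
    nonempty = [X for X in con if X]

    def entails_set(X, Y):
        # X |- Y means X |- b for every b in Y
        return all(entails(X, b) for b in Y)

    # 1. a in X in Con implies X |- a
    ax1 = all(entails(X, a) for X in nonempty for a in X)
    # 2. X |- Y and Y |- a imply X |- a
    ax2 = all(entails(X, a)
              for X in nonempty
              for Y in nonempty if entails_set(X, Y)
              for a in tokens if entails(Y, a))
    # 3. X |- a implies X ∪ {a} in Con
    ax3 = all((X | {a}) in con
              for X in nonempty for a in tokens if entails(X, a))
    # 4. every singleton is consistent
    ax4 = all(frozenset({a}) in con for a in tokens)
    # 5. Con is closed under taking subsets
    ax5 = all(Xp in con for X in con for Xp in finite_subsets(X))
    return ax1 and ax2 and ax3 and ax4 and ax5


# Hypothetical two-token example: only the empty set and singletons are consistent.
good_con = {frozenset(), frozenset({0}), frozenset({1})}
bad_con = {frozenset(), frozenset({0})}  # the singleton {1} is missing


def membership(X, a):
    return a in X
```

With these definitions, `is_information_system({0, 1}, good_con, membership)` holds, while the same call with `bad_con` fails because a singleton is not consistent.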

The return value of a partial recursive function, which either returns a natural number or goes into an infinite recursion, can be expressed as a simple Scott information system as follows:

- $T := \mathbb{N}$
- $Con := \{\emptyset\} \cup \{\{n\} \mid n \in \mathbb{N}\}$
- $X \vdash a$ if and only if $a \in X$

That is, the result can either be a natural number, represented by the singleton set $\{n\}$, or "infinite recursion," represented by $\emptyset$.

Of course, the same construction can be carried out with any other set instead of $\mathbb{N}$.
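As a rough illustration of this construction over an arbitrary set, the sketch below builds the system for a finite set of values, since the full set of natural numbers cannot be enumerated. The function name `flat_information_system` is an assumption made for this example.

```python
def flat_information_system(values):
    """Flat system: the consistent sets are the empty set and the singletons."""
    tokens = frozenset(values)
    con = {frozenset()} | {frozenset({v}) for v in tokens}

    def entails(X, a):
        # X |- a exactly when a already belongs to X
        return a in X

    return tokens, con, entails


tokens, con, entails = flat_information_system(range(3))
```

Here `frozenset({1}) in con` holds, while `frozenset({0, 1}) in con` does not: two distinct return values are never consistent with each other, and the empty set stands for a computation that has produced no result.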

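The propositional calculus gives us a very simple Scott information system as follows:

- $T := \{\phi \mid \phi \text{ is satisfiable}\}$
- $Con := \{X \in \mathcal{P}_f(T) \mid X \text{ is consistent}\}$
- $X \vdash a$ if and only if $X \vdash a$ in the propositional calculus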

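Let $D$ be a Scott domain. Then we may define an information system as follows:

- $T := D^0$, the set of compact elements of $D$
- $Con := \{X \in \mathcal{P}_f(T) \mid X \text{ has an upper bound}\}$
- $X \vdash d$ if and only if $d \sqsubseteq \bigsqcup X$

Let $\mathcal{I}$ be the mapping that takes us from a Scott domain, $D$, to the information system defined above.

Given an information system, $A = (T, Con, \vdash)$, we can build a Scott domain as follows. A subset $x \subseteq T$ is a point if and only if

- every finite subset $X \subseteq x$ satisfies $X \in Con$, and
- whenever $X \vdash a$ for some finite $X \subseteq x$, then $a \in x$.

Let $\mathcal{D}(A)$ denote the set of points of $A$ with the subset ordering. $\mathcal{D}(A)$ will be a countably based Scott domain when $T$ is countable. In general, for any Scott domain $D$ and information system $A$,

- $\mathcal{D}(\mathcal{I}(D)) \cong D$
- $\mathcal{I}(\mathcal{D}(A)) \cong A$

where the second congruence is given by approximable mappings.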
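For a finite token set, the points can be enumerated by brute force. The sketch below assumes the usual definition of a point as a set of tokens whose finite subsets are all consistent and which is closed under entailment. The names `subsets` and `points` are illustrative, and the two-token flat system used as input is a hypothetical example.

```python
from itertools import combinations


def subsets(s):
    """All subsets of a finite set, as frozensets."""
    items = list(s)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def points(tokens, con, entails):
    """Enumerate the points of a finite information system."""
    result = []
    for x in subsets(tokens):
        finite = subsets(x)
        # every finite subset of x must be consistent
        consistent = all(X in con for X in finite)
        # x must contain every token entailed by a nonempty consistent subset
        closed = all(a in x
                     for X in finite if X and X in con
                     for a in tokens if entails(X, a))
        if consistent and closed:
            result.append(x)
    return result


# Hypothetical input: flat system over {0, 1}, where X |- a iff a is in X.
flat_tokens = frozenset({0, 1})
flat_con = {frozenset(), frozenset({0}), frozenset({1})}
flat_points = points(flat_tokens, flat_con, lambda X, a: a in X)
```

Ordered by inclusion, the resulting points form a flat domain: the empty set lies below each singleton, and the two singletons are incomparable.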

Propositional calculus is a branch of logic. It is also called propositional logic, statement logic, sentential calculus, sentential logic, or sometimes zeroth-order logic. It deals with propositions and argument flow. Compound propositions are formed by connecting propositions by logical connectives. The propositions without logical connectives are called atomic propositions.

In mathematics and in particular measure theory, a measurable function is a function between the underlying sets of two measurable spaces that preserves the structure of the spaces: the preimage of any measurable set is measurable, analogous to the definition that a function between topological spaces is continuous if it preserves the topological structure: the preimage of each open set is open. In real analysis, measurable functions are used in the definition of the Lebesgue integral. In probability theory, a measurable function on a probability space is known as a random variable.

Distributions are objects that generalize the classical notion of functions in mathematical analysis. Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. In particular, any locally integrable function has a distributional derivative. Distributions are widely used in the theory of partial differential equations, where it may be easier to establish the existence of distributional solutions than classical solutions, or appropriate classical solutions may not exist. Distributions are also important in physics and engineering where many problems naturally lead to differential equations whose solutions or initial conditions are distributions, such as the Dirac delta function.

In predicate logic, a universal quantification is a type of quantifier, a logical constant which is interpreted as "given any" or "for all". It expresses that a propositional function can be satisfied by every member of a domain of discourse. In other words, it is the predication of a property or relation to every member of the domain. It asserts that a predicate within the scope of a universal quantifier is true of every value of a predicate variable.

Hilbert's Nullstellensatz is a theorem that establishes a fundamental relationship between geometry and algebra. This relationship is the basis of algebraic geometry, a branch of mathematics. It relates algebraic sets to ideals in polynomial rings over algebraically closed fields. This relationship was discovered by David Hilbert who proved the Nullstellensatz and several other important related theorems named after him.

In the mathematical discipline of set theory, forcing is a technique for proving consistency and independence results. It was first used by Paul Cohen in 1963, to prove the independence of the axiom of choice and the continuum hypothesis from Zermelo–Fraenkel set theory.

Relevance logic, also called relevant logic, is a kind of non-classical logic requiring the antecedent and consequent of implications to be relevantly related. They may be viewed as a family of substructural or modal logics.

In mathematics, the notion of a germ of an object in/on a topological space is an equivalence class of that object and others of the same kind that captures their shared local properties. In particular, the objects in question are mostly functions and subsets. In specific implementations of this idea, the functions or subsets in question will have some property, such as being analytic or smooth, but in general this is not needed ; it is however necessary that the space on/in which the object is defined is a topological space, in order that the word local have some sense.

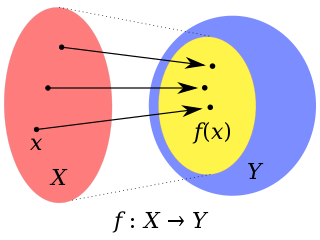

In mathematics, the image of a function is the set of all output values it may produce.

In model theory, a transfer principle states that all statements of some language that are true for some structure are true for another structure. One of the first examples was the Lefschetz principle, which states that any sentence in the first-order language of fields that is true for the complex numbers is also true for any algebraically closed field of characteristic 0.

Independence-friendly logic is an extension of classical first-order logic (FOL) by means of slashed quantifiers of the form and . The intended reading of is "there is a which is functionally independent from the variables in ". IF logic allows one to express more general patterns of dependence between variables than those which are implicit in first-order logic. This greater level of generality leads to an actual increase in expressive power; the set of IF sentences can characterize the same classes of structures as existential second-order logic. For example, it can express branching quantifier sentences, such as the formula which expresses infinity in the empty signature; this cannot be done in FOL. Therefore, first-order logic cannot, in general, express this pattern of dependency, in which depends only on and , and depends only on and . IF logic is more general than branching quantifiers, for example in that it can express dependencies that are not transitive, such as in the quantifier prefix .

In set theory, a prewellordering is a binary relation that is transitive, connex, and wellfounded. In other words, if is a prewellordering on a set , and if we define by

A Dynkin system, named after Eugene Dynkin, is a collection of subsets of another universal set satisfying a set of axioms weaker than those of σ-algebra. Dynkin systems are sometimes referred to as λ-systems or d-system. These set families have applications in measure theory and probability.

In abstract algebraic logic, a branch of mathematical logic, the Leibniz operator is a tool used to classify deductive systems, which have a precise technical definition, and capture a large number of logics. The Leibniz operator was introduced by Wim Blok and Don Pigozzi, two of the founders of the field, as a means to abstract the well-known Lindenbaum–Tarski process, that leads to the association of Boolean algebras to classical propositional calculus, and make it applicable to as wide a variety of sentential logics as possible. It is an operator that assigns to a given theory of a given sentential logic, perceived as a free algebra with a consequence operation on its universe, the largest congruence on the algebra that is compatible with the theory.

In mathematics, a cardinal function is a function that returns cardinal numbers.

In mathematics, near sets are either spatially close or descriptively close. Spatially close sets have nonempty intersection. In other words, spatially close sets are not disjoint sets, since they always have at least one element in common. Descriptively close sets contain elements that have matching descriptions. Such sets can be either disjoint or non-disjoint sets. Spatially near sets are also descriptively near sets.

In mathematics, specifically set theory, the Cartesian product of two sets A and B, denoted A × B, is the set of all ordered pairs (a, b) where a is in A and b is in B. In terms of set-builder notation, that is

Dependence logic is a logical formalism, created by Jouko Väänänen, which adds dependence atoms to the language of first-order logic. A dependence atom is an expression of the form , where are terms, and corresponds to the statement that the value of is functionally dependent on the values of .

In mathematics, a polyadic space is a topological space that is the image under a continuous function of a topological power of an Alexandroff one-point compactification of a discrete topological space.

In mathematics, a filter on a set informally gives a notion of which subsets are "large". Filter quantifiers are a type of logical quantifier which, informally, say whether or not a statement is true for "most" elements of . Such quantifiers are often used in combinatorics, model theory, and in other fields of mathematical logic where (ultra)filters are used.