Blame is the act of censuring, holding responsible, or making negative statements about an individual or group on the grounds that their actions or inaction are socially or morally irresponsible; it is the opposite of praise. When someone is morally responsible for doing something wrong, their action is blameworthy. By contrast, when someone is morally responsible for doing something right, it may be said that their action is praiseworthy. There are other senses of praise and blame that are not ethically relevant. One may praise someone's good dress sense, and blame one's own sense of style for the way one dresses.

Intercultural communication is a discipline that studies communication across different cultures and social groups, or how culture affects communication. It describes the wide range of communication processes and problems that naturally appear within an organization or social context made up of individuals from different religious, social, ethnic, and educational backgrounds. In this sense, it seeks to understand how people from different countries and cultures act, communicate, and perceive the world around them. Intercultural communication focuses on the recognition and respect of those with cultural differences. The goal is mutual adaptation between two or more distinct cultures which leads to biculturalism/multiculturalism rather than complete assimilation. It promotes the development of cultural sensitivity and allows for empathic understanding across different cultures.

Neville A. Stanton is a British Professor Emeritus of Human Factors and Ergonomics at the University of Southampton. He is a Chartered Engineer (C.Eng), Chartered Psychologist (C.Psychol) and Chartered Ergonomist (C.ErgHF) and has written and edited over sixty books and over four hundred peer-reviewed journal papers on applications of the subject. Stanton is a Fellow of the British Psychological Society, a Fellow of The Institute of Ergonomics and Human Factors and a member of the Institution of Engineering and Technology. He has been published in academic journals including Nature. He has also helped organisations design new human-machine interfaces, such as the Adaptive Cruise Control system for Jaguar Cars.

In the field of human factors and ergonomics, human reliability is the probability that a human performs a task to a sufficient standard. Reliability of humans can be affected by many factors such as age, physical health, mental state, attitude, emotions, personal propensity for certain mistakes, and cognitive biases.

In engineering and systems theory, redundancy is the intentional duplication of critical components or functions of a system with the goal of increasing reliability of the system, usually in the form of a backup or fail-safe, or to improve actual system performance, such as in the case of GNSS receivers, or multi-threaded computer processing.
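The reliability gain from duplication can be illustrated with a minimal sketch. It assumes, as a simplification not stated in the text above, that the duplicated components fail independently and that the system works as long as at least one copy works. The function name `parallel_reliability` and the figure of 0.9 are hypothetical and chosen only for illustration.

```python
def parallel_reliability(component_reliability: float, copies: int) -> float:
    """Probability that at least one of `copies` identical components works.

    Assumption: failures are independent. Real systems often violate this
    (common-cause failures), so the result is an optimistic upper bound.
    """
    if not 0.0 <= component_reliability <= 1.0:
        raise ValueError("component_reliability must be in [0, 1]")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    # The system fails only if every copy fails.
    probability_all_fail = (1.0 - component_reliability) ** copies
    return 1.0 - probability_all_fail


if __name__ == "__main__":
    # Hypothetical component that works 90% of the time.
    for n in (1, 2, 3):
        print(n, round(parallel_reliability(0.9, n), 6))
```

Under these assumptions each added copy cuts the failure probability by a factor of ten for a 0.9-reliable component. Correlated failures would make the real benefit smaller.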

Safety culture is the element of organizational culture which is concerned with the maintenance of safety and compliance with safety standards. It is informed by the organization's leadership and the beliefs, perceptions and values that employees share in relation to risks within the organization, workplace or community. Safety culture has been described in a variety of ways: notably, the National Academies of Science and the Association of Public and Land-grant Universities published summaries on this topic in 2014 and 2016.

A high reliability organization (HRO) is an organization that has succeeded in avoiding catastrophes in an environment where normal accidents can be expected due to risk factors and complexity.

Human error is an action that has been done but that was "not intended by the actor; not desired by a set of rules or an external observer; or that led the task or system outside its acceptable limits". Human error has been cited as a primary cause and contributing factor in disasters and accidents in industries as diverse as nuclear power, aviation, space exploration, and medicine. Prevention of human error is generally seen as a major contributor to reliability and safety of (complex) systems. Human error is one of the many contributing causes of risk events.

Instruction creep or rule creep occurs when instructions or rules accumulate over time until they are unmanageable or inappropriate. It is a type of scope creep. The accumulation of bureaucratic requirements results in overly complex procedures that are often misunderstood, irritating, time-wasting, or ignored.

A social-ecological system consists of a "bio-geo-physical" unit and its associated social actors and institutions. Social-ecological systems are complex and adaptive and delimited by spatial or functional boundaries surrounding particular ecosystems and their context problems.

Blame in organizations may flow between management and staff, or laterally between professionals or partner organizations. In a blame culture, problem-solving is replaced by blame-avoidance. Blame shifting may exist between rival factions. Maintaining one's reputation may be a key factor explaining the relationship between accountability and blame avoidance. The blame culture is a serious issue in certain sectors such as safety-critical domains.

The term use error has recently been introduced to replace the commonly used terms human error and user error. The new term, which has already been adopted by international standards organizations for medical devices, suggests that accidents should be attributed to the circumstances, rather than to the human beings who happened to be there.

Human factors are the physical or cognitive properties of individuals, or social behavior which is specific to humans, and which influence functioning of technological systems as well as human-environment equilibria. The safety of underwater diving operations can be improved by reducing the frequency of human error and the consequences when it does occur. Human error can be defined as an individual's deviation from acceptable or desirable practice which culminates in undesirable or unexpected results. Human factors include both the non-technical skills that enhance safety and the non-technical factors that contribute to undesirable incidents that put the diver at risk.

[Safety is] An active, adaptive process which involves making sense of the task in the context of the environment to successfully achieve explicit and implied goals, with the expectation that no harm or damage will occur. – G. Lock, 2022

Dive safety is primarily a function of four factors: the environment, equipment, individual diver performance and dive team performance. The water is a harsh and alien environment which can impose severe physical and psychological stress on a diver. The remaining factors must be controlled and coordinated so the diver can overcome the stresses imposed by the underwater environment and work safely. Diving equipment is crucial because it provides life support to the diver, but the majority of dive accidents are caused by individual diver panic and an associated degradation of the individual diver's performance. – M.A. Blumenberg, 1996

Maritime resource management (MRM) or bridge resource management (BRM) is a set of human factors and soft skills training aimed at the maritime industry. The MRM training programme was launched in 1993 – at that time under the name bridge resource management – and aims at preventing accidents at sea caused by human error.

Psychological safety is the belief that one will not be punished or humiliated for speaking up with ideas, questions, concerns, or mistakes. In teams, it refers to team members believing that they can take risks without being shamed by other team members. In psychologically safe teams, team members feel accepted and respected, which contributes to a better "experience in the workplace". It is also the most studied enabling condition in group dynamics and team learning research.

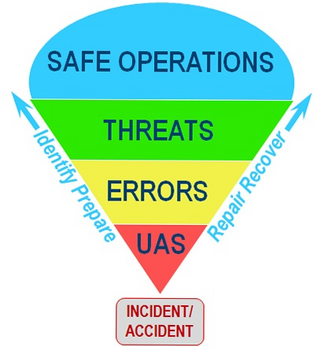

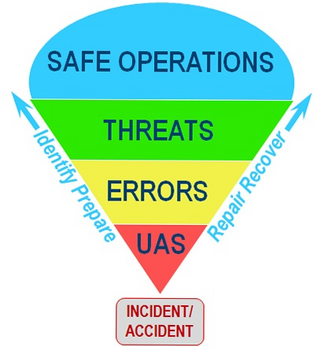

In aviation safety, threat and error management (TEM) is an overarching safety management approach that assumes that pilots will naturally make mistakes and encounter risky situations during flight operations. Rather than trying to avoid these threats and errors, it focuses primarily on teaching pilots to manage them so that they do not impair safety. Its goal is to maintain safety margins by training pilots and flight crews to detect and respond to events that are likely to cause damage (threats) as well as mistakes that are most likely to be made (errors) during flight operations.

Just culture is a concept related to systems thinking which emphasizes that mistakes are generally a product of faulty organizational cultures, rather than solely brought about by the person or persons directly involved. In a just culture, the question asked after an incident is "What went wrong?" rather than "Who caused the problem?" A just culture is the opposite of a blame culture. A just culture is not the same as a no-blame culture, as individuals may still be held accountable for their misconduct or negligence.

David D. Woods is an American safety systems researcher who studies human coordination and automation issues in a wide range of safety-critical fields such as nuclear power, aviation, space operations, critical care medicine, and software services. He is one of the founding researchers of the fields of cognitive systems engineering and resilience engineering.

Dr. Richard I. Cook was a system safety researcher, physician, anesthesiologist, university professor, and software engineer. Cook did research in safety, incident analysis, cognitive systems engineering, and resilience engineering across a number of fields, including critical care medicine, aviation, air traffic control, space operations, semiconductor manufacturing, and software services.

Resilience engineering is a subfield of safety science research that focuses on understanding how complex adaptive systems cope when encountering a surprise. The term resilience in this context refers to the capabilities that a system must possess in order to deal effectively with unanticipated events. Resilience engineering examines how systems build, sustain, degrade, and lose these capabilities.