In computer architecture, a bus is a communication system that transfers data between components inside a computer, or between computers. This expression covers all related hardware components and software, including communication protocols.

InfiniBand (IB) is a computer networking communications standard used in high-performance computing that features very high throughput and very low latency. It is used for data interconnect both among and within computers. InfiniBand is also used as either a direct or switched interconnect between servers and storage systems, as well as an interconnect between storage systems. It is designed to be scalable and uses a switched fabric network topology. Between 2014 and June 2016, it was the most commonly used interconnect in the TOP500 list of supercomputers.

PCI Express, officially abbreviated as PCIe or PCI-e, is a high-speed serial computer expansion bus standard, meant to replace the older PCI, PCI-X and AGP bus standards. It is the common motherboard interface for personal computers' graphics cards, capture cards, sound cards, hard disk drive host adapters, SSDs, Wi-Fi, and Ethernet hardware connections. PCIe has numerous improvements over the older standards, including higher maximum system bus throughput, lower I/O pin count, smaller physical footprint, better performance scaling for bus devices, a more detailed error detection and reporting mechanism, and native hot-swap functionality. More recent revisions of the PCIe standard provide hardware support for I/O virtualization.

In a computer system, a chipset is a set of electronic components on one or more integrated circuits that manages the data flow between the processor, memory and peripherals. The chipset is usually found on the motherboard of computers. Chipsets are usually designed to work with a specific family of microprocessors. Because it controls communications between the processor and external devices, the chipset plays a crucial role in determining system performance. Sometimes the term "chipset" is used to describe a system on chip (SoC) used in a mobile phone.

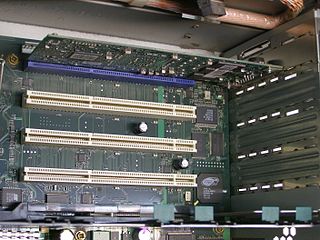

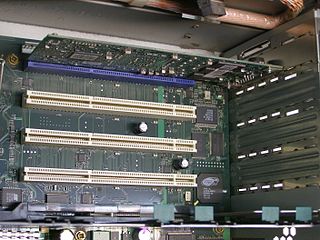

PCI-X, short for Peripheral Component Interconnect eXtended, is a computer bus and expansion card standard that enhances the 32-bit PCI local bus for higher bandwidth demanded mostly by servers and workstations. It uses a modified protocol to support higher clock speeds, but is otherwise similar in electrical implementation. PCI-X 2.0 added speeds up to 533 MHz, with a reduction in electrical signal levels.

x86 virtualization is the use of hardware-assisted virtualization capabilities on an x86/x86-64 CPU.

The RapidIO architecture is a high-performance packet-switched electrical connection technology. It supports messaging, read/write and cache coherency semantics. Based on industry-standard electrical specifications such as those for Ethernet, RapidIO can be used as a chip-to-chip, board-to-board, and chassis-to-chassis interconnect.

In computing, an input–output memory management unit (IOMMU) is a memory management unit (MMU) connecting a direct-memory-access–capable (DMA-capable) I/O bus to the main memory. Like a traditional MMU, which translates CPU-visible virtual addresses to physical addresses, the IOMMU maps device-visible virtual addresses to physical addresses. Some units also provide memory protection from faulty or malicious devices.

Logical Domains is the server virtualization and partitioning technology for SPARC V9 processors. It was first released by Sun Microsystems in April 2007. After the Oracle acquisition of Sun in January 2010, the product has been re-branded as Oracle VM Server for SPARC from version 2.0 onwards.

In virtualization, input/output virtualization is a methodology to simplify management, lower costs and improve performance of servers in enterprise environments. I/O virtualization environments are created by abstracting the upper layer protocols from the physical connections.

Temporal isolation or performance isolation among virtual machine (VMs) refers to the capability of isolating the temporal behavior of multiple VMs among each other, despite them running on the same physical host and sharing a set of physical resources such as processors, memory, and disks.

NVM Express (NVMe) or Non-Volatile Memory Host Controller Interface Specification (NVMHCIS) is an open, logical-device interface specification for accessing a computer's non-volatile storage media usually attached via the PCI Express bus. The initial NVM stands for non-volatile memory, which is often NAND flash memory that comes in several physical form factors, including solid-state drives (SSDs), PCIe add-in cards, and M.2 cards, the successor to mSATA cards. NVM Express, as a logical-device interface, has been designed to capitalize on the low latency and internal parallelism of solid-state storage devices.

The eXtensible Host Controller Interface (xHCI) is a technical specification that provides a detailed framework for the functioning of a computer's host controller for Universal Serial Bus (USB). Known alternately as the USB 3.0 host controller specification, xHCI is designed to be backward compatible, supporting a wide range of USB devices from older USB 1.x to the more recent USB 3.x versions.

Google Compute Engine (GCE) is the infrastructure as a service (IaaS) component of Google Cloud Platform which is built on the global infrastructure that runs Google's search engine, Gmail, YouTube and other services. Google Compute Engine enables users to launch virtual machines (VMs) on demand. VMs can be launched from the standard images or custom images created by users. Google Compute Engine can be accessed via the Developer Console, RESTful API or command-line interface (CLI).

M.2, pronounced m dot two and formerly known as the Next Generation Form Factor (NGFF), is a specification for internally mounted computer expansion cards and associated connectors. M.2 replaces the Mini SATA (mSATA) standard and the Mini PCIe (mPCIe) standard. Employing a more flexible physical specification, M.2 allows different module widths and lengths, which, paired with the availability of more advanced interfacing features, makes M.2 more suitable than mSATA in general for solid-state storage applications, particularly in smaller devices such as ultrabooks and tablets.

NVLink is a wire-based serial multi-lane near-range communications link developed by Nvidia. Unlike PCI Express, a device can consist of multiple NVLinks, and devices use mesh networking to communicate instead of a central hub. The protocol was first announced in March 2014 and uses a proprietary high-speed signaling interconnect (NVHS).

QPACE 2 is a massively parallel and scalable supercomputer. It was designed for applications in lattice quantum chromodynamics but is also suitable for a wider range of applications..

Coherent Accelerator Processor Interface (CAPI), is a high-speed processor expansion bus standard for use in large data center computers, initially designed to be layered on top of PCI Express, for directly connecting central processing units (CPUs) to external accelerators like graphics processing units (GPUs), ASICs, FPGAs or fast storage. It offers low latency, high speed, direct memory access connectivity between devices of different instruction set architectures.

ExpEther is a System Hardware Virtualization Technology that expands standard PCI Express beyond 1 km having thousands of roots and endpoint devices together on a single network connected through the standard Ethernet. Abundant commodities of PCI Express-based software and hardware can be utilized without any modification. It also provides software-defined re-configurability to make a disaggregated computing system with device-level.

Compute Express Link (CXL) is an open standard interconnect for high-speed, high capacity central processing unit (CPU)-to-device and CPU-to-memory connections, designed for high performance data center computers. CXL is built on the serial PCI Express (PCIe) physical and electrical interface and includes PCIe-based block input/output protocol (CXL.io) and new cache-coherent protocols for accessing system memory (CXL.cache) and device memory (CXL.mem). The serial communication and pooling capabilities allows CXL memory to overcome performance and socket packaging limitations of common DIMM memory when implementing high storage capacities.