Artificial intelligence (AI) is the intelligence of machines or software, as opposed to the intelligence of living beings, primarily of humans. It is a field of study in computer science that develops and studies intelligent machines. Such machines may be called AIs.

A chatbot is a software application or web interface that is designed to mimic human conversation through text or voice interactions. Modern chatbots are typically online and use generative artificial intelligence systems that are capable of maintaining a conversation with a user in natural language and simulating the way a human would behave as a conversational partner. Such chatbots often use deep learning and natural language processing, but simpler chatbots have existed for decades.

An artificial general intelligence (AGI) is a type of artificial intelligence (AI) that can perform as well or better than humans on a wide range of cognitive tasks. As opposed to narrow AI, which is designed for specific tasks.

DeepMind Technologies Limited, doing business as Google DeepMind, is a British-American artificial intelligence research laboratory which serves as a subsidiary of Google. Founded in the UK in 2010, it was acquired by Google in 2014, The company is based in London, with research centres in Canada, France, Germany and the United States.

OpenAI is a U.S. based artificial intelligence (AI) research organization founded in December 2015, researching artificial intelligence with the goal of developing "safe and beneficial" artificial general intelligence, which it defines as "highly autonomous systems that outperform humans at most economically valuable work". As one of the leading organizations of the AI spring, it has developed several large language models, advanced image generation models, and previously, released open-source models. Its release of ChatGPT has been credited with starting the AI spring.

In the field of artificial intelligence (AI), AI alignment research aims to steer AI systems towards a person or group's intended goals, preferences, and ethical principles. An AI system is considered aligned if it advances its intended objectives. A misaligned AI system may pursue some objectives, but not the intended ones.

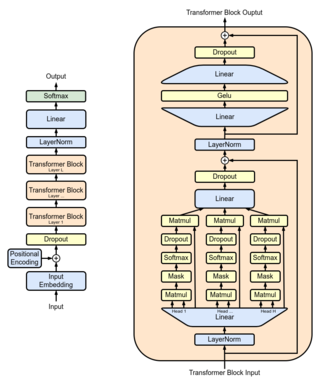

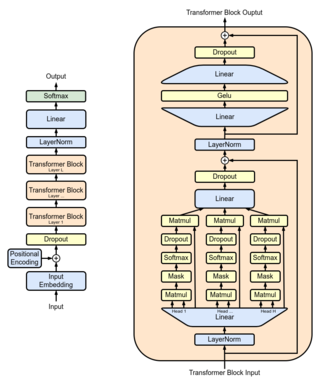

Generative Pre-trained Transformer 3 (GPT-3) is a large language model released by OpenAI in 2020. Like its predecessor, GPT-2, it is a decoder-only transformer model of deep neural network, which supersedes recurrence and convolution-based architectures with a technique known as "attention". This attention mechanism allows the model to selectively focus on segments of input text it predicts to be most relevant. It uses a 2048-tokens-long context, float16 (16-bit) precision, and a hitherto-unprecedented 175 billion parameters, requiring 350GB of storage space as each parameter takes 2 bytes of space, and has demonstrated strong "zero-shot" and "few-shot" learning abilities on many tasks.

Generative Pre-trained Transformer 2 (GPT-2) is a large language model by OpenAI and the second in their foundational series of GPT models. GPT-2 was pre-trained a dataset of 8 million web pages. It was partially released in February 2019, followed by full release of the 1.5-billion-parameter model on November 5, 2019.

LaMDA is a family of conversational large language models developed by Google. Originally developed and introduced as Meena in 2020, the first-generation LaMDA was announced during the 2021 Google I/O keynote, while the second generation was announced the following year. In June 2022, LaMDA gained widespread attention when Google engineer Blake Lemoine made claims that the chatbot had become sentient. The scientific community has largely rejected Lemoine's claims, though it has led to conversations about the efficacy of the Turing test, which measures whether a computer can pass for a human. In February 2023, Google announced Bard, a conversational artificial intelligence chatbot powered by LaMDA, to counter the rise of OpenAI's ChatGPT.

ChatGPT is a chatbot developed by OpenAI and launched on November 30, 2022. Based on a large language model, it enables users to refine and steer a conversation towards a desired length, format, style, level of detail, and language. Successive prompts and replies, known as prompt engineering, are considered at each conversation stage as a context.

In the field of artificial intelligence (AI), a hallucination or artificial hallucination is a response generated by an AI which contains false or misleading information presented as fact.

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI, and the fourth in its series of GPT foundation models. It was launched on March 14, 2023, and made publicly available via the paid chatbot product ChatGPT Plus, via OpenAI's API, and via the free chatbot Microsoft Copilot. As a transformer-based model, GPT-4 uses a paradigm where pre-training using both public data and "data licensed from third-party providers" is used to predict the next token. After this step, the model was then fine-tuned with reinforcement learning feedback from humans and AI for human alignment and policy compliance.

Generative pre-trained transformers (GPT) are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. They are artificial neural networks that are used in natural language processing tasks. GPTs are based on the transformer architecture, pre-trained on large data sets of unlabelled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs.

In machine learning, reinforcement learning from human feedback (RLHF), also called reinforcement learning from human preferences, is a technique to align an AI agent to human preferences. In classical reinforcement learning, such an agent learns a policy that maximizes a reward function that measures how well it performed its task. However, it is difficult to explicitly define such a reward function that approximates human preferences. Therefore, RLHF seeks to train a "reward model" directly from human feedback. This model can then function as a reward function to optimize an agent's policy through an optimization algorithm like Proximal Policy Optimization. The reward model is trained in advance to the policy being optimized to predict if a given output is good or bad. RLHF can improve the robustness and exploration of RL agents, especially when the reward function is sparse or noisy.

A large language model (LLM) is a language model notable for its ability to achieve general-purpose language generation and other natural language processing tasks such as classification. LLMs acquire these abilities by learning statistical relationships from text documents during a computationally intensive self-supervised and semi-supervised training process. LLMs can be used for text generation, a form of generative AI, by taking an input text and repeatedly predicting the next token or word.

Generative artificial intelligence is artificial intelligence capable of generating text, images or other data using generative models, often in response to prompts. Generative AI models learn the patterns and structure of their input training data and then generate new data that has similar characteristics.

The AI boom, or AI spring, is the ongoing period of rapid progress in the field of artificial intelligence. Prominent examples include protein folding prediction and generative AI, led by laboratories including Google DeepMind and OpenAI.

LLaMA is a family of autoregressive large language models (LLMs), released by Meta AI starting in February 2023.

Gemini is a family of multimodal large language models developed by Google DeepMind, serving as the successor to LaMDA and PaLM 2. Comprising Gemini Ultra, Gemini Pro, and Gemini Nano, it was announced on December 6, 2023, positioned as a competitor to OpenAI's GPT-4. It powers the generative artificial intelligence chatbot of the same name.