Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as a rendering. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, textures, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Global illumination (GI), or indirect illumination, is a group of algorithms used in 3D computer graphics that are meant to add more realistic lighting to 3D scenes. Such algorithms take into account not only the light that comes directly from a light source, but also subsequent cases in which light rays from the same source are reflected by other surfaces in the scene, whether reflective or not.

In 3D computer graphics, radiosity is an application of the finite element method to solving the rendering equation for scenes with surfaces that reflect light diffusely. Unlike rendering methods that use Monte Carlo algorithms, which handle all types of light paths, typical radiosity only account for paths which leave a light source and are reflected diffusely some number of times before hitting the eye. Radiosity is a global illumination algorithm in the sense that the illumination arriving on a surface comes not just directly from the light sources, but also from other surfaces reflecting light. Radiosity is viewpoint independent, which increases the calculations involved, but makes them useful for all viewpoints.

In computer graphics, photon mapping is a two-pass global illumination rendering algorithm developed by Henrik Wann Jensen between 1995 and 2001 that approximately solves the rendering equation for integrating light radiance at a given point in space. Rays from the light source and rays from the camera are traced independently until some termination criterion is met, then they are connected in a second step to produce a radiance value. The algorithm is used to realistically simulate the interaction of light with different types of objects. Specifically, it is capable of simulating the refraction of light through a transparent substance such as glass or water, diffuse interreflection between illuminated objects, the subsurface scattering of light in translucent materials, and some of the effects caused by particulate matter such as smoke or water vapor. Photon mapping can also be extended to more accurate simulations of light, such as spectral rendering. Progressive photon mapping (PPM) starts with ray tracing and then adds more and more photon mapping passes to provide a progressively more accurate render.

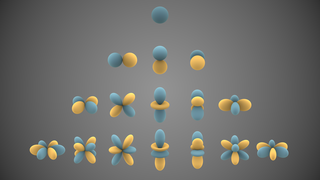

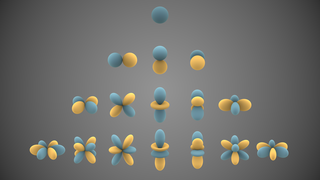

In mathematics and physical science, spherical harmonics are special functions defined on the surface of a sphere. They are often employed in solving partial differential equations in many scientific fields. The table of spherical harmonics contains a list of common spherical harmonics.

Autodesk 3ds Max, formerly 3D Studio and 3D Studio Max, is a professional 3D computer graphics program for making 3D animations, models, games and images. It is developed and produced by Autodesk Media and Entertainment. It has modeling capabilities and a flexible plugin architecture and must be used on the Microsoft Windows platform. It is frequently used by video game developers, many TV commercial studios, and architectural visualization studios. It is also used for movie effects and movie pre-visualization. 3ds Max features shaders, dynamic simulation, particle systems, radiosity, normal map creation and rendering, global illumination, a customizable user interface, and its own scripting language.

In computing, D3DX is a high level API library which is written to supplement Microsoft's Direct3D graphics API. The D3DX library was introduced in Direct3D 7, and subsequently was improved in Direct3D 9. It provides classes for common calculations on vectors, matrices and colors, calculating look-at and projection matrices, spline interpolations, and several more complicated tasks, such as compiling or assembling shaders used for 3D graphic programming, compressed skeletal animation storage and matrix stacks. There are several functions that provide complex operations over 3D meshes like tangent-space computation, mesh simplification, precomputed radiance transfer, optimizing for vertex cache friendliness and strip reordering, and generators for 3D text meshes. 2D features include classes for drawing screen-space lines, text and sprite based particle systems. Spatial functions include various intersection routines, conversion from/to barycentric coordinates and bounding box and sphere generators.

A lightmap is a data structure used in lightmapping, a form of surface caching in which the brightness of surfaces in a virtual scene is pre-calculated and stored in texture maps for later use. Lightmaps are most commonly applied to static objects in applications that use real-time 3D computer graphics, such as video games, in order to provide lighting effects such as global illumination at a relatively low computational cost.

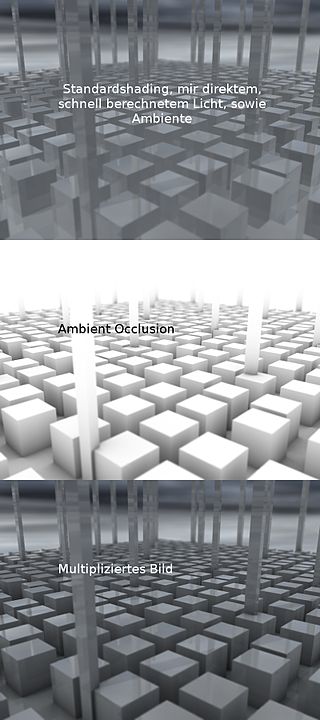

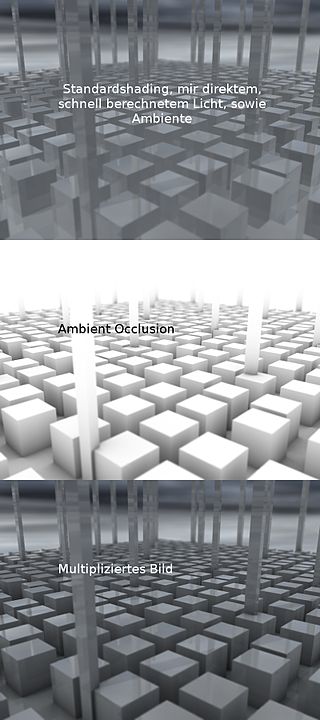

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

Subsurface scattering (SSS), also known as subsurface light transport (SSLT), is a mechanism of light transport in which light that penetrates the surface of a translucent object is scattered by interacting with the material and exits the surface potentially at a different point. Light generally penetrates the surface and gets scattered a number of times at irregular angles inside the material before passing back out of the material at a different angle than it would have had if it had been reflected directly off the surface.

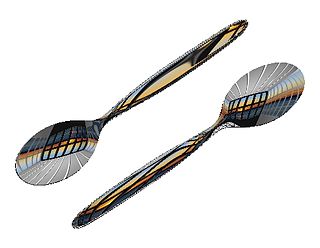

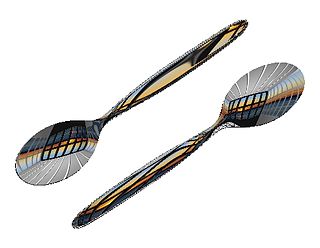

In computer graphics, reflection mapping or environment mapping is an efficient image-based lighting technique for approximating the appearance of a reflective surface by means of a precomputed texture. The texture is used to store the image of the distant environment surrounding the rendered object.

Path tracing is a computer graphics Monte Carlo method of rendering images of three-dimensional scenes such that the global illumination is faithful to reality. Fundamentally, the algorithm is integrating over all the illuminance arriving to a single point on the surface of an object. This illuminance is then reduced by a surface reflectance function (BRDF) to determine how much of it will go towards the viewpoint camera. This integration procedure is repeated for every pixel in the output image. When combined with physically accurate models of surfaces, accurate models of real light sources, and optically correct cameras, path tracing can produce still images that are indistinguishable from photographs.

Precomputed Radiance Transfer (PRT) is a computer graphics technique used to render a scene in real time with complex light interactions being precomputed to save time. Radiosity methods can be used to determine the diffuse lighting of the scene, however PRT offers a method to dynamically change the lighting environment.

In computer graphics, cube mapping is a method of environment mapping that uses the six faces of a cube as the map shape. The environment is projected onto the sides of a cube and stored as six square textures, or unfolded into six regions of a single texture.

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

Form·Z is a general-purpose solid and surface modeling software. It offers 2D/3D form manipulating and sculpting capabilities. It can be used on Windows and Macintosh computers. It is available in English, German, Italian, Spanish, French, Greek, Korean and Japanese languages.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

False Radiosity is a 3D computer graphics technique used to create texture mapping for objects that emulates patch interaction algorithms in radiosity rendering. Though practiced in some form since the late 90s, this term was coined only around 2002 by architect Andrew Hartness, then head of 3D and real-time design at Ateliers Jean Nouvel.

This is a glossary of terms relating to computer graphics.

A neural radiance field (NeRF) is a method based on deep learning for reconstructing a three-dimensional representation of a scene from two-dimensional images. The NeRF model enables downstream applications of novel view synthesis, scene geometry reconstruction, and obtaining the reflectance properties of the scene. Additional scene properties such as camera poses may also be jointly learned. First introduced in 2020, it has since gained significant attention for its potential applications in computer graphics and content creation.