In abstract algebra, the center of a group G is the set of elements that commute with every element of G. It is denoted Z(G), from German Zentrum, meaning center. In set-builder notation, Z(G) = {z ∈ G | zg = gz for all g ∈ G}.
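The definition of the center can be checked by brute force on small groups. The sketch below is illustrative only: it assumes group elements are represented as permutation tuples, and the helper names `compose` and `center` are made up for this example.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def center(group):
    """Return the elements of `group` that commute with every element of `group`."""
    return [z for z in group
            if all(compose(z, g) == compose(g, z) for g in group)]

# S3: all permutations of {0, 1, 2}; a non-abelian group.
s3 = list(permutations(range(3)))

# Klein four-group, written as permutations of {0, 1, 2, 3}; an abelian group.
v4 = [(0, 1, 2, 3), (1, 0, 3, 2), (2, 3, 0, 1), (3, 2, 1, 0)]

center_s3 = center(s3)
center_v4 = center(v4)

print(center_s3)
print(center_v4 == v4)
```

For S3 only the identity survives the check, while for the abelian Klein four-group the center is the whole group.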

In mathematics, specifically group theory, given a prime number p, a p-group is a group in which the order of every element is a power of p. That is, for each element g of a p-group G, there exists a nonnegative integer n such that the product of pⁿ copies of g, and not fewer, is equal to the identity element. The orders of different elements may be different powers of p.
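As a rough illustration of the p-group condition, the following sketch computes element orders in the dihedral group of order 8 (the symmetries of a square) and checks that each is a power of 2. The permutation-tuple representation and all function names are assumptions of this example.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def generate(generators, n):
    """Return the finite group of permutations of range(n) generated by `generators`."""
    identity = tuple(range(n))
    seen = {identity}
    frontier = [identity]
    while frontier:
        x = frontier.pop()
        for s in generators:
            y = compose(s, x)
            if y not in seen:
                seen.add(y)
                frontier.append(y)
    return seen

def element_order(g):
    """Return the smallest k >= 1 such that g composed with itself k times is the identity."""
    identity = tuple(range(len(g)))
    power, k = g, 1
    while power != identity:
        power = compose(power, g)
        k += 1
    return k

def is_power_of(k, p):
    """Return True if k == p**n for some nonnegative integer n."""
    while k % p == 0:
        k //= p
    return k == 1

def is_p_group(group, p):
    """Return True if every element of `group` has order a power of p."""
    return all(is_power_of(element_order(g), p) for g in group)

# Dihedral group of the square: a rotation r and a reflection s of the vertices 0..3.
r, s = (1, 2, 3, 0), (0, 3, 2, 1)
d4 = generate([r, s], 4)
s3 = set(permutations(range(3)))

orders_d4 = sorted({element_order(g) for g in d4})
print(len(d4), orders_d4, is_p_group(d4, 2), is_p_group(s3, 2))
```

The elements of the dihedral group have orders 1, 2, and 4, which are different powers of the same prime. S3 fails the test for p = 2 because it contains elements of order 3.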

In abstract algebra, the symmetric group defined over any set is the group whose elements are all the bijections from the set to itself, and whose group operation is the composition of functions. In particular, the finite symmetric group Sₙ defined over a finite set of n symbols consists of the permutations that can be performed on the n symbols. Since there are n! (n factorial) such permutation operations, the order of the symmetric group Sₙ is n!.
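The count of permutations can be confirmed directly for small n. This is a minimal sketch, assuming permutations of n symbols are modeled as tuples over `range(n)`; the names `symmetric_group` and `compose` are chosen for this example.

```python
from itertools import permutations
from math import factorial

def symmetric_group(n):
    """Return all bijections of {0, ..., n-1} to itself, as tuples of images."""
    return list(permutations(range(n)))

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

# Number of elements for n = 1..5, compared against n!.
orders = {n: len(symmetric_group(n)) for n in range(1, 6)}
matches_factorial = all(orders[n] == factorial(n) for n in orders)

# Composition of two permutations is again a permutation (closure), checked for n = 3.
s3 = set(symmetric_group(3))
closed = all(compose(p, q) in s3 for p in s3 for q in s3)

print(orders, matches_factorial, closed)
```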

In mathematics, specifically group theory, a subgroup H of a group G may be used to decompose the underlying set of G into disjoint, equal-size subsets called cosets. There are left cosets and right cosets. Cosets have the same number of elements (cardinality) as does H. Furthermore, H itself is both a left coset and a right coset. The number of left cosets of H in G is equal to the number of right cosets of H in G. This common value is called the index of H in G and is usually denoted by [G : H].
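The coset decomposition can be made concrete on a small example. The sketch below takes G = S3 and a two-element subgroup H, and lists left and right cosets as sets; the representation and names are assumptions made for illustration.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

G = list(permutations(range(3)))   # S3, order 6
H = [(0, 1, 2), (1, 0, 2)]         # subgroup generated by the swap of 0 and 1

# Left cosets gH and right cosets Hg, collected as sets of sets to drop duplicates.
left_cosets = {frozenset(compose(g, h) for h in H) for g in G}
right_cosets = {frozenset(compose(h, g) for h in H) for g in G}

index = len(left_cosets)           # [G : H]

print(index, len(right_cosets))
for coset in sorted(sorted(c) for c in left_cosets):
    print(coset)
```

Here both families contain three cosets of two elements each, so [G : H] = 3, and H appears in both families. The two partitions themselves differ in this example, which reflects that this H is not a normal subgroup of S3.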

In mathematics, a presentation is one method of specifying a group. A presentation of a group G comprises a set S of generators (so that every element of the group can be written as a product of powers of some of these generators) and a set R of relations among those generators. We then say G has presentation ⟨S | R⟩.

In abstract algebra, a generating set of a group is a subset of the group set such that every element of the group can be expressed as a combination of finitely many elements of the subset and their inverses.
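One way to test whether a subset generates a finite group is to close it under multiplication and compare sizes. The following is a sketch under the assumption that elements are permutation tuples; `generated_subgroup` is a hypothetical helper name. In a finite group, inverses are themselves powers of the generators, so multiplying by generators alone is enough.

```python
def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def generated_subgroup(generators, n):
    """Return every permutation of range(n) expressible as a product of `generators`."""
    identity = tuple(range(n))
    seen = {identity}
    frontier = [identity]
    while frontier:
        x = frontier.pop()
        for s in generators:
            y = compose(s, x)
            if y not in seen:
                seen.add(y)
                frontier.append(y)
    return seen

# A transposition together with a 3-cycle generates all of S3.
size_s3 = len(generated_subgroup([(1, 0, 2), (1, 2, 0)], 3))

# A single transposition generates only a subgroup of order 2.
size_swap = len(generated_subgroup([(1, 0, 2)], 3))

# An adjacent transposition and a 4-cycle generate all of S4.
size_s4 = len(generated_subgroup([(1, 0, 2, 3), (1, 2, 3, 0)], 4))

print(size_s3, size_swap, size_s4)
```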

In mathematics, more specifically in the field of group theory, a solvable group or soluble group is a group that can be constructed from abelian groups using extensions. Equivalently, a solvable group is a group whose derived series terminates in the trivial subgroup.
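The derived-series criterion lends itself to a brute-force check on small permutation groups. The sketch below repeatedly replaces a group by the subgroup generated by its commutators and stops when the series stabilizes. It is an illustrative computation with made-up helper names, not an efficient algorithm.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def inverse(p):
    """Return the inverse permutation of p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def generate(generators, n):
    """Return the finite permutation group on range(n) generated by `generators`."""
    identity = tuple(range(n))
    seen = {identity}
    frontier = [identity]
    while frontier:
        x = frontier.pop()
        for s in generators:
            y = compose(s, x)
            if y not in seen:
                seen.add(y)
                frontier.append(y)
    return seen

def derived_subgroup(group, n):
    """Return the subgroup generated by all commutators a b a^-1 b^-1 of `group`."""
    commutators = {compose(compose(a, b), compose(inverse(a), inverse(b)))
                   for a in group for b in group}
    return generate(commutators, n)

def derived_series_orders(group, n):
    """Return the orders of the derived series, stopping once it stabilizes."""
    current = set(group)
    orders = [len(current)]
    while True:
        nxt = derived_subgroup(current, n)
        if nxt == current:
            return orders
        current = nxt
        orders.append(len(current))

def is_solvable(group, n):
    """A finite group is solvable when its derived series reaches the trivial subgroup."""
    return derived_series_orders(group, n)[-1] == 1

s4_orders = derived_series_orders(permutations(range(4)), 4)
s5_orders = derived_series_orders(permutations(range(5)), 5)
print(s4_orders, s5_orders)
```

For S4 the series passes through subgroups of orders 24, 12, 4, and 1, so S4 is solvable. For S5 it stalls at a subgroup of order 60, so S5 is not.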

In mathematics, specifically group theory, a nilpotent group G is a group that has an upper central series that terminates with G. Equivalently, it has a central series of finite length or its lower central series terminates with {1}.
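The lower-central-series condition can be tested by brute force on small groups. In the sketch below, each term is the subgroup generated by commutators of the previous term with the whole group. The permutation representation and helper names are assumptions of this example.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def inverse(p):
    """Return the inverse permutation of p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def generate(generators, n):
    """Return the finite permutation group on range(n) generated by `generators`."""
    identity = tuple(range(n))
    seen = {identity}
    frontier = [identity]
    while frontier:
        x = frontier.pop()
        for s in generators:
            y = compose(s, x)
            if y not in seen:
                seen.add(y)
                frontier.append(y)
    return seen

def lower_central_series_orders(group, n):
    """Return the orders of G, [G, G], [[G, G], G], ... until the series stabilizes."""
    group = set(group)
    current = group
    orders = [len(current)]
    while True:
        commutators = {compose(compose(a, g), compose(inverse(a), inverse(g)))
                       for a in current for g in group}
        nxt = generate(commutators, n)
        if nxt == current:
            return orders
        current = nxt
        orders.append(len(current))

def is_nilpotent(group, n):
    """A finite group is nilpotent when its lower central series reaches {1}."""
    return lower_central_series_orders(group, n)[-1] == 1

# Dihedral group of the square (order 8) and the symmetric group S3 (order 6).
d4 = generate([(1, 2, 3, 0), (0, 3, 2, 1)], 4)
s3 = set(permutations(range(3)))

d4_orders = lower_central_series_orders(d4, 4)
s3_orders = lower_central_series_orders(s3, 3)
print(d4_orders, s3_orders)
```

The dihedral group of order 8 descends through orders 8, 2, 1 and is nilpotent. S3 stalls at a subgroup of order 3, so it is solvable but not nilpotent.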

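In mathematics, a modular form is a (complex) analytic function on the upper half-plane ℍ that satisfies a functional equation with respect to the action of the modular group, together with a growth condition.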

In mathematics, in particular the theory of Lie algebras, the Weyl group of a root system Φ is a subgroup of the isometry group of that root system. Specifically, it is the subgroup which is generated by reflections through the hyperplanes orthogonal to the roots, and as such is a finite reflection group. In fact it turns out that most finite reflection groups are Weyl groups. Abstractly, Weyl groups are finite Coxeter groups, and are important examples of these.

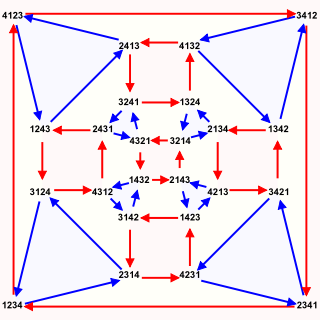

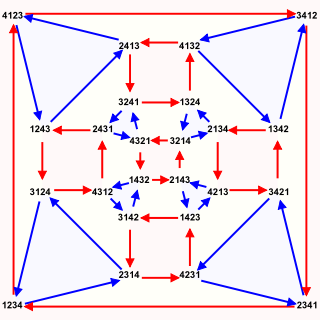

In mathematics, a Cayley graph, also known as a Cayley color graph, Cayley diagram, group diagram, or color group, is a graph that encodes the abstract structure of a group. Its definition is suggested by Cayley's theorem, and uses a specified set of generators for the group. It is a central tool in combinatorial and geometric group theory. The structure and symmetry of Cayley graphs make them particularly good candidates for constructing expander graphs.

In group theory, a word metric on a discrete group G is a way to measure distance between any two elements of G. As the name suggests, the word metric is a metric on G, assigning to any two elements g, h of G a distance d(g, h) that measures how efficiently their difference g⁻¹h can be expressed as a word whose letters come from a generating set for the group. The word metric on G is very closely related to the Cayley graph of G: the word metric measures the length of the shortest path in the Cayley graph between two elements of G.
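Since the word metric is shortest-path distance in the Cayley graph, it can be computed with a breadth-first search from the identity. The sketch below does this for S3 with two transpositions as generators. The convention that edges join x to x·s, and all names, are assumptions of this example.

```python
from collections import deque

def compose(p, q):
    """Return the permutation 'p after q': apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(p)))

def inverse(p):
    """Return the inverse permutation of p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def word_lengths(generators, n):
    """Breadth-first search over the Cayley graph; returns {element: word length}."""
    identity = tuple(range(n))
    letters = set(generators) | {inverse(s) for s in generators}
    dist = {identity: 0}
    queue = deque([identity])
    while queue:
        x = queue.popleft()
        for s in letters:
            y = compose(x, s)      # edge from x to x*s
            if y not in dist:
                dist[y] = dist[x] + 1
                queue.append(y)
    return dist

def word_metric(g, h, lengths):
    """Distance between g and h: the word length of g^-1 h."""
    return lengths[compose(inverse(g), h)]

# S3 generated by the two adjacent transpositions.
a, b = (1, 0, 2), (0, 2, 1)
lengths = word_lengths([a, b], 3)

print(sorted(lengths.values()))
print(word_metric(a, b, lengths))
```

With these generators, the element that reverses all three symbols is the farthest from the identity, at distance 3.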

In mathematics, a Frobenius group is a transitive permutation group on a finite set, such that no non-trivial element fixes more than one point and some non-trivial element fixes a point. They are named after F. G. Frobenius.
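The three conditions in this definition can be checked mechanically for a small permutation group. The sketch below assumes the input is already a group (closed under composition) acting on `range(n)`; `is_frobenius_action` is a hypothetical name used only here.

```python
from itertools import permutations

def fixed_points(p):
    """Return the number of points i with p(i) == i."""
    return sum(1 for i, image in enumerate(p) if i == image)

def is_frobenius_action(group, n):
    """Check the definition directly; `group` must be a group of permutations of range(n)."""
    group = list(group)
    identity = tuple(range(n))
    nontrivial = [g for g in group if g != identity]
    # For a group, the action is transitive when the orbit of one point is everything.
    transitive = {g[0] for g in group} == set(range(n))
    at_most_one = all(fixed_points(g) <= 1 for g in nontrivial)
    some_fix_a_point = any(fixed_points(g) == 1 for g in nontrivial)
    return transitive and at_most_one and some_fix_a_point

s3 = list(permutations(range(3)))
s4 = list(permutations(range(4)))
c3 = [(0, 1, 2), (1, 2, 0), (2, 0, 1)]   # cyclic group of rotations

print(is_frobenius_action(s3, 3), is_frobenius_action(s4, 4), is_frobenius_action(c3, 3))
```

S3 acting on three points passes. S4 on four points fails because a transposition fixes two points, and the cyclic group fails because no non-trivial rotation fixes any point.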

In number theory and algebraic geometry, the Tate conjecture is a 1963 conjecture of John Tate that would describe the algebraic cycles on a variety in terms of a more computable invariant, the Galois representation on étale cohomology. The conjecture is a central problem in the theory of algebraic cycles. It can be considered an arithmetic analog of the Hodge conjecture.

In mathematics, specifically in group theory, the direct product is an operation that takes two groups G and H and constructs a new group, usually denoted G × H. This operation is the group-theoretic analogue of the Cartesian product of sets and is one of several important notions of direct product in mathematics.
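A minimal sketch of the construction, assuming both factors are cyclic groups written additively as integers modulo m and n: elements of the product are pairs, and the operation acts on each coordinate separately. The function names are illustrative.

```python
from itertools import product

def direct_product_elements(m, n):
    """Return the underlying set of Z/m x Z/n: the Cartesian product of the two sets."""
    return list(product(range(m), range(n)))

def multiply(x, y, m, n):
    """Componentwise operation on pairs: addition mod m and addition mod n."""
    return ((x[0] + y[0]) % m, (x[1] + y[1]) % n)

def element_order(x, m, n):
    """Return the smallest k >= 1 with k copies of x combining to the identity (0, 0)."""
    acc, k = x, 1
    while acc != (0, 0):
        acc = multiply(acc, x, m, n)
        k += 1
    return k

z2_z3 = direct_product_elements(2, 3)
z2_z2 = direct_product_elements(2, 2)

max_order_z2_z3 = max(element_order(x, 2, 3) for x in z2_z3)
max_order_z2_z2 = max(element_order(x, 2, 2) for x in z2_z2)

print(len(z2_z3), max_order_z2_z3)
print(len(z2_z2), max_order_z2_z2)
```

The product Z/2 × Z/3 contains an element of order 6 and is therefore cyclic, whereas Z/2 × Z/2 has four elements but none of order 4.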

In mathematics, the Burnside ring of a finite group is an algebraic construction that encodes the different ways the group can act on finite sets. The ideas were introduced by William Burnside at the end of the nineteenth century. The algebraic ring structure is a more recent development, due to Solomon (1967).

In mathematics, a group is called boundedly generated if it can be expressed as a finite product of cyclic subgroups. The property of bounded generation is also closely related to the congruence subgroup problem.

In mathematics, an approximately finite-dimensional (AF) C*-algebra is a C*-algebra that is the inductive limit of a sequence of finite-dimensional C*-algebras. Approximate finite-dimensionality was first defined and described combinatorially by Ola Bratteli. Later, George A. Elliott gave a complete classification of AF algebras using the K₀ functor whose range consists of ordered abelian groups with sufficiently nice order structure.

In the mathematical subject of group theory, the Grushko theorem or the Grushko–Neumann theorem is a theorem stating that the rank of a free product of two groups is equal to the sum of the ranks of the two free factors. The theorem was first obtained in a 1940 article of Grushko and then, independently, in a 1943 article of Neumann.

Non-commutative cryptography is the area of cryptology where the cryptographic primitives, methods and systems are based on non-commutative algebraic structures such as semigroups, groups and rings. One of the earliest applications of a non-commutative algebraic structure for cryptographic purposes was the use of braid groups to develop cryptographic protocols. Later, several other non-commutative structures such as Thompson groups, polycyclic groups, Grigorchuk groups, and matrix groups were identified as potential candidates for cryptographic applications. In contrast to non-commutative cryptography, the currently widely used public-key cryptosystems such as the RSA cryptosystem, Diffie–Hellman key exchange and elliptic curve cryptography are based on number theory and hence depend on commutative algebraic structures.