Related Research Articles

A head-related transfer function (HRTF), also known as anatomical transfer function (ATF), is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, and ear canal, the density of the head, and the size and shape of the nasal and oral cavities all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. However, the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.

The precedence effect or law of the first wavefront is a binaural psychoacoustical effect. When a sound is followed by another sound separated by a sufficiently short time delay, listeners perceive a single auditory event; its perceived spatial location is dominated by the location of the first-arriving sound. The lagging sound also affects the perceived location, but its effect is suppressed by the first-arriving sound.

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. While this initially appears to be a chicken-and-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping, and odometry for virtual reality or augmented reality.

In psychoacoustics and signal processing, a pure tone is a sound or a signal with a sinusoidal waveform; that is, a sine wave of any frequency, phase-shift, and amplitude.
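
As a minimal sketch of that definition, the snippet below samples a sine wave from its three parameters. The function name `pure_tone`, the 48 kHz sample rate, and the default sample count are illustrative assumptions, not part of the definition.

```python
import math


def pure_tone(frequency_hz, amplitude=1.0, phase_rad=0.0,
              sample_rate_hz=48_000, num_samples=480):
    """Return samples of amplitude * sin(2*pi*f*t + phase).

    Sample n is taken at time t = n / sample_rate_hz (assumed rate).
    """
    return [
        amplitude * math.sin(
            2.0 * math.pi * frequency_hz * n / sample_rate_hz + phase_rad
        )
        for n in range(num_samples)
    ]


# A 1 kHz tone at half amplitude; at 48 kHz one period spans 48 samples.
tone = pure_tone(1_000.0, amplitude=0.5)
```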

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

In audio engineering, a fade is a gradual increase or decrease in the level of an audio signal. The term can also be used for film cinematography or theatre lighting in much the same way.
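
A fade can be sketched as a time-varying gain applied to the samples. The example below uses a linear ramp, which is only one possible fade shape and is an assumption here; the helper names are hypothetical.

```python
def linear_fade_in(samples, fade_length):
    """Scale the first fade_length samples by a gain rising linearly from 0."""
    faded = list(samples)
    ramp_end = min(fade_length, len(faded))
    for i in range(ramp_end):
        faded[i] *= i / fade_length
    return faded


def linear_fade_out(samples, fade_length):
    """Mirror image of the fade-in: the gain falls to 0 at the last sample."""
    return linear_fade_in(list(samples)[::-1], fade_length)[::-1]


constant_signal = [1.0] * 6
faded_in = linear_fade_in(constant_signal, 4)
faded_out = linear_fade_out(constant_signal, 4)
```

Practical fades often use logarithmic or equal-power curves instead of a straight line, since perceived loudness is not linear in amplitude.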

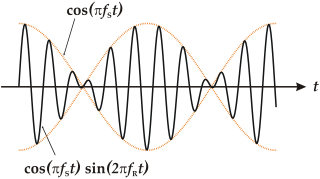

In acoustics, a beat is an interference pattern between two sounds of slightly different frequencies, perceived as a periodic variation in volume whose rate is the difference of the two frequencies.
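
The beat rate follows from the identity sin A + sin B = 2 cos((A − B)/2) sin((A + B)/2): the sum of two equal-amplitude tones is a carrier at the mean frequency multiplied by a slow envelope whose magnitude repeats at the difference frequency. The sketch below checks this numerically; the function names and the equal-amplitude assumption are illustrative.

```python
import math


def beat_frequency(f1_hz, f2_hz):
    """Rate of the perceived volume variation, in Hz."""
    return abs(f1_hz - f2_hz)


def two_tone_sum(f1_hz, f2_hz, t):
    """Direct sum of two unit-amplitude sine tones at time t (seconds)."""
    return math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)


def envelope_times_carrier(f1_hz, f2_hz, t):
    """The same signal written as a slow envelope times a fast carrier."""
    envelope = 2.0 * math.cos(math.pi * (f1_hz - f2_hz) * t)
    carrier = math.sin(math.pi * (f1_hz + f2_hz) * t)
    return envelope * carrier


# Two tones 3 Hz apart: both forms agree at every sampled instant.
max_error = max(
    abs(two_tone_sum(440.0, 443.0, n / 1000.0)
        - envelope_times_carrier(440.0, 443.0, n / 1000.0))
    for n in range(1000)
)
```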

In acoustics, dummy head recording is a method used to generate binaural recordings. The tracks are then listened to through headphones, allowing the listener to hear from the dummy's perspective. The dummy head is designed to record multiple sounds at the same time, which makes it well suited to recording music and to other applications that involve multiple sound sources.

Virtual acoustic space (VAS), also known as virtual auditory space, is a technique in which sounds presented over headphones appear to originate from any desired direction in space. The illusion of a virtual sound source outside the listener's head is created.

Acoustic location is the use of sound to determine the distance and direction of its source or reflector. Location can be done actively or passively, and can take place in gases, liquids, and in solids.
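
For the active case, distance is commonly estimated from the round-trip time of an emitted pulse. The sketch below assumes a speed of sound of 343 m/s (air near 20 °C) and a straight out-and-back path; both are assumptions, and the function name is hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air near 20 degrees Celsius


def echo_distance_m(round_trip_s, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Distance to a reflector from the round-trip time of an echo.

    The pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    return speed_m_per_s * round_trip_s / 2.0


distance = echo_distance_m(0.010)  # echo heard 10 ms after emission
```

In liquids or solids the same relation holds with the speed of sound of that medium passed as `speed_m_per_s`.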

A sensory cue is a statistic or signal that a perceiver can extract from the sensory input and that indicates the state of some property of the world the perceiver is interested in perceiving.

Binaural fusion or binaural integration is a cognitive process that involves the combination of different auditory information presented binaurally, or to each ear. In humans, this process is essential in understanding speech as one ear may pick up more information about the speech stimuli than the other.

In physics, sound is a vibration that propagates as an acoustic wave, through a transmission medium such as a gas, liquid or solid. In human physiology and psychology, sound is the reception of such waves and their perception by the brain. Only acoustic waves that have frequencies lying between about 20 Hz and 20 kHz, the audio frequency range, elicit an auditory percept in humans. In air at atmospheric pressure, these represent sound waves with wavelengths of 17 meters (56 ft) to 1.7 centimeters (0.67 in). Sound waves above 20 kHz are known as ultrasound and are not audible to humans. Sound waves below 20 Hz are known as infrasound. Different animal species have varying hearing ranges.
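
The quoted wavelengths follow from λ = c / f. The sketch below assumes a speed of sound of 343 m/s, a typical figure for air near 20 °C that the text itself does not state.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air near 20 degrees Celsius


def wavelength_m(frequency_hz, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Wavelength in meters of a sound wave with the given frequency."""
    return speed_m_per_s / frequency_hz


lowest_audible = wavelength_m(20.0)       # roughly 17 m
highest_audible = wavelength_m(20_000.0)  # roughly 1.7 cm
```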

The Franssen effect is an auditory illusion where the listener incorrectly localizes a sound. It was found in 1960 by Nico Valentinus Franssen (1926–1979), a Dutch physicist and inventor. There are two classical experiments, which are related to the Franssen effect, called Franssen effect F1 and Franssen effect F2.

Psychoacoustics is the branch of psychophysics involving the scientific study of sound perception and audiology, that is, how humans perceive various sounds. More specifically, it is the branch of science studying the psychological responses associated with sound. Psychoacoustics is an interdisciplinary field that draws on many areas, including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

In audio engineering, joint encoding refers to a joining of several channels of similar information during encoding in order to obtain higher quality, a smaller file size, or both.
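
One widely used form of joint encoding is mid/side stereo, shown here purely as an illustration; the text above does not name a specific scheme. When the left and right channels are similar, the side signal stays close to zero and is cheap to encode. The function names are hypothetical.

```python
def encode_mid_side(left, right):
    """Convert left/right channels to mid (average) and side (half difference)."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    return mid, side


def decode_mid_side(mid, side):
    """Recover the left/right channels from mid and side signals."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


left_in = [0.5, 0.25, -0.75]
right_in = [0.5, 0.125, -0.5]
mid, side = encode_mid_side(left_in, right_in)
left_out, right_out = decode_mid_side(mid, side)
```

The transform itself is lossless; any saving comes from how the encoder then quantizes or compresses the small side signal.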

An acoustic camera is an imaging device used to locate sound sources and to characterize them. It consists of a group of microphones, also called a microphone array, from which signals are simultaneously collected and processed to form a representation of the location of the sound sources.

3D sound localization refers to an acoustic technology that is used to locate the source of a sound in a three-dimensional space. The source location is usually determined by the direction of the incoming sound waves and the distance between the source and sensors. It involves the design of the sensor arrangement and signal processing techniques.
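
As a simplified sketch of how direction can be derived from a sensor arrangement, the snippet below estimates a single arrival angle from the time difference between two microphones. It assumes a distant source (plane wavefront), a speed of sound of 343 m/s, and hypothetical function and parameter names; a full 3D system needs more sensors and more elaborate processing.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air near 20 degrees Celsius


def arrival_angle_rad(delay_s, mic_spacing_m,
                      speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Angle of arrival from the time difference between two microphones.

    The angle is measured from the direction perpendicular to the line
    joining the microphones (0 means the source is straight ahead).
    """
    ratio = speed_m_per_s * delay_s / mic_spacing_m
    # Clamp so measurement noise cannot push the ratio outside asin's domain.
    ratio = max(-1.0, min(1.0, ratio))
    return math.asin(ratio)


straight_ahead = arrival_angle_rad(0.0, 0.2)
fully_sideways = arrival_angle_rad(0.2 / SPEED_OF_SOUND_M_PER_S, 0.2)
```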

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

3D sound reconstruction is the application of reconstruction techniques to 3D sound localization technology. These methods of reconstructing three-dimensional sound are used to recreate sounds to match natural environments and provide spatial cues of the sound source. They are also applied to create 3D visualizations of a sound field that include physical aspects of sound waves such as direction, pressure, and intensity. This technology is used in entertainment to reproduce a live performance through computer speakers. It is also used in military applications to determine the location of sound sources. Reconstructing sound fields is also applicable to medical imaging to measure points in ultrasound.

References

- Jens Blauert, "Spatial Hearing", The MIT Press; Rev Sub edition (October 2, 1996)

- Günther Theile, "On the Localisation in the Superimposed Soundfield"