Related Research Articles

Binaural recording is a method of recording sound that uses two microphones, arranged to give the listener a 3D stereo sensation of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay using headphones and will not translate properly over stereo speakers. The idea of three-dimensional or "internal" sound has also carried over into other technologies, such as stethoscopes that create "in-head" acoustics and IMAX movies that create a three-dimensional acoustic experience.

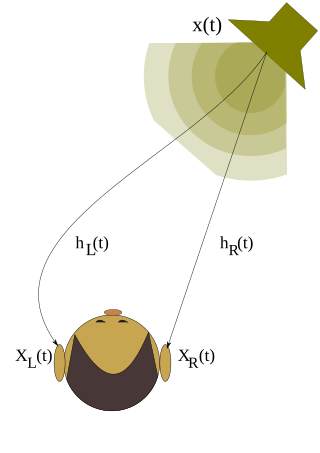

A head-related transfer function (HRTF) is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, and ear canal, the density of the head, and the size and shape of the nasal and oral cavities all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. However, the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.
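As a rough illustration of how such a response is applied, the sketch below filters a mono signal with a pair of head-related impulse responses (HRIRs), the time-domain counterparts of the HRTF for each ear. The function names and the toy impulse responses are hypothetical and chosen only for readability; real HRIRs are measured per listener and per direction and are far longer.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution of two non-empty sample lists."""
    if not signal or not impulse_response:
        raise ValueError("both inputs must contain at least one sample")
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left-ear and a right-ear HRIR."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy, made-up HRIRs for a source on the listener's left:
# the right ear hears the sound two samples later and quieter.
toy_hrir_left = [1.0]
toy_hrir_right = [0.0, 0.0, 0.6]

left, right = render_binaural([1.0, 0.5], toy_hrir_left, toy_hrir_right)
```

With these toy values the left channel is the unchanged input, while the right channel is a delayed, attenuated copy. That interaural difference is the kind of cue a measured HRTF pair encodes in much finer detail.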

In audio signal processing and acoustics, an echo is a reflection of sound that arrives at the listener with a delay after the direct sound. The delay is directly proportional to the distance of the reflecting surface from the source and the listener. Typical examples are the echo produced by the bottom of a well, by a building, or by the walls of an enclosed, empty room.
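The proportionality between distance and delay can be made concrete with a short calculation. This is a minimal sketch, assuming a speed of sound of 343 m/s (dry air at roughly 20 °C) and a single reflecting surface; the function and parameter names are illustrative only.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees Celsius


def echo_delay_seconds(source_to_surface_m, surface_to_listener_m,
                       source_to_listener_m, speed_m_s=SPEED_OF_SOUND_M_S):
    """Delay of a single reflection relative to the direct sound.

    The reflected path is source -> surface -> listener; the delay is the
    extra path length divided by the speed of sound.
    """
    extra_path_m = (source_to_surface_m + surface_to_listener_m
                    - source_to_listener_m)
    if extra_path_m < 0:
        raise ValueError("reflected path cannot be shorter than the direct path")
    return extra_path_m / speed_m_s


# A person clapping 17.15 m from a wall: source and listener coincide,
# so the direct path is 0 m and the echo travels 34.3 m in total.
delay_s = echo_delay_seconds(17.15, 17.15, 0.0)
```

In this example the echo arrives about 0.1 s after the clap. Doubling the distance to the wall doubles the delay.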

Surround sound is a technique for enriching the fidelity and depth of sound reproduction by using multiple audio channels from speakers that surround the listener. Its first application was in movie theaters. Prior to surround sound, theater sound systems commonly had three screen channels of sound that played from three loudspeakers located in front of the audience. Surround sound adds one or more channels from loudspeakers to the side or behind the listener that are able to create the sensation of sound coming from any horizontal direction around the listener.

3D audio effects are a group of sound effects that manipulate the sound produced by stereo speakers, surround-sound speakers, speaker-arrays, or headphones. This frequently involves the virtual placement of sound sources anywhere in three-dimensional space, including behind, above or below the listener.

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

Stereophonic sound, or more commonly stereo, is a method of sound reproduction that recreates a multi-directional, 3-dimensional audible perspective. This is usually achieved by using two independent audio channels through a configuration of two loudspeakers in such a way as to create the impression of sound heard from various directions, as in natural hearing.

Virtual surround is an audio system that attempts to create the perception that there are many more sources of sound than are actually present. In order to achieve this, it is necessary to devise some means of tricking the human auditory system into thinking that a sound is coming from somewhere that it is not. Most recent examples of such systems are designed to simulate the true (physical) surround sound experience using one, two or three loudspeakers. Such systems are popular among consumers who want to enjoy the experience of surround sound without the large number of speakers that are traditionally required to do so.

Wave field synthesis (WFS) is a spatial audio rendering technique, characterized by the creation of virtual acoustic environments. It produces artificial wavefronts synthesized from elementary waves by a large number of individually driven loudspeakers. Such wavefronts seem to originate from a virtual starting point, the virtual sound source. Contrary to traditional phantom sound sources, the localization of virtual sound sources established by WFS does not depend on the listener's position. Like a genuine sound source, the virtual source remains at a fixed starting point.

Binaural fusion or binaural integration is a cognitive process that involves the combination of different auditory information presented binaurally, or to each ear. In humans, this process is essential in understanding speech as one ear may pick up more information about the speech stimuli than the other.

Latency refers to a short period of delay between when an audio signal enters a system and when it emerges. Potential contributors to latency in an audio system include analog-to-digital conversion, buffering, digital signal processing, transmission time, digital-to-analog conversion and the speed of sound in the transmission medium.
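Two of these contributors are easy to estimate by hand: buffering and the acoustic travel time. The sketch below is illustrative only; it assumes a speed of sound of 343 m/s, and the buffer size, sample rate, and distance in the example are made-up values rather than figures from any particular system.

```python
def buffer_latency_ms(buffer_frames, sample_rate_hz):
    """Time needed to fill (or drain) one audio buffer, in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz


def acoustic_latency_ms(distance_m, speed_m_s=343.0):
    """Travel time of sound over a distance, in milliseconds.

    The default speed is an assumption (dry air at about 20 degrees Celsius).
    """
    return 1000.0 * distance_m / speed_m_s


# Hypothetical setup: one 256-frame buffer at 48 kHz on input and on output,
# plus a loudspeaker 3.43 m from the listener.
input_ms = buffer_latency_ms(256, 48_000)
output_ms = buffer_latency_ms(256, 48_000)
air_ms = acoustic_latency_ms(3.43)
total_ms = input_ms + output_ms + air_ms
```

Here each buffer adds roughly 5.3 ms and the air path about 10 ms, so in this made-up setup the distance to the loudspeaker contributes about as much as the two buffers combined. Converter and processing delays would add to this total.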

Computational auditory scene analysis (CASA) is the study of auditory scene analysis by computational means. In essence, CASA systems are "machine listening" systems that aim to separate mixtures of sound sources in the same way that human listeners do. CASA differs from the field of blind signal separation in that it is based on the mechanisms of the human auditory system, and thus uses no more than two microphone recordings of an acoustic environment. It is related to the cocktail party problem.

Ambiophonics is a method in the public domain that employs digital signal processing (DSP) and two loudspeakers directly in front of the listener in order to improve reproduction of stereophonic and 5.1 surround sound for music, movies, and games in home theaters, gaming PCs, workstations, or studio monitoring applications. It was first implemented using mechanical means in 1986; today a number of hardware and VST plug-in makers offer Ambiophonic DSP. Ambiophonics eliminates the crosstalk inherent in the conventional stereo triangle speaker placement, and thereby generates a speaker-binaural soundfield that emulates headphone-binaural sound and gives the listener a more realistic perception of recorded auditory scenes. A second speaker pair can be added in back in order to enable 360° surround sound reproduction. Additional surround speakers may be used for hall ambience, including height, if desired.

The Franssen effect is an auditory illusion where the listener incorrectly localizes a sound. It was found in 1960 by Nico Valentinus Franssen (1926–1979), a Dutch physicist and inventor. Two classical experiments are related to the Franssen effect, called Franssen effect F1 and Franssen effect F2.

Psychoacoustics is the branch of psychophysics involving the scientific study of sound perception and audiology—how the human auditory system perceives various sounds. More specifically, it is the branch of science studying the psychological responses associated with sound. Psychoacoustics is an interdisciplinary field including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

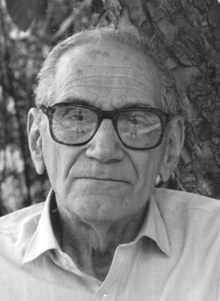

Hans Wallach was a German-American experimental psychologist whose research focused on perception and learning. Although he was trained in the Gestalt psychology tradition, much of his later work explored the adaptability of perceptual systems based on the perceiver's experience, whereas most Gestalt theorists emphasized inherent qualities of stimuli and downplayed the role of experience. Wallach's studies of achromatic surface color laid the groundwork for subsequent theories of lightness constancy, and his work on sound localization elucidated the perceptual processing that underlies stereophonic sound. He was a member of the National Academy of Sciences, a Guggenheim Fellow, and recipient of the Howard Crosby Warren Medal of the Society of Experimental Psychologists.

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

3D sound reconstruction is the application of reconstruction techniques to 3D sound localization technology. These methods of reconstructing three-dimensional sound are used to recreate sounds to match natural environments and provide spatial cues of the sound source. They are also applied in creating 3D visualizations of a sound field that include physical aspects of sound waves such as direction, pressure, and intensity. This technology is used in entertainment to reproduce a live performance through computer speakers. The technology is also used in military applications to determine the location of sound sources. Reconstructing sound fields is also applicable to medical imaging to measure points in ultrasound.

3D sound is most commonly defined as the daily human experience of sounds. Sounds arrive at the ears from every direction and from varying distances, which together form the three-dimensional aural image humans hear. Scientists and engineers who work with 3D sound aim to accurately synthesize the complexity of real-world sounds.

Apparent source width (ASW) is the audible impression of a spatially extended sound source. This psychoacoustic impression results from the sound radiation characteristics of the source and the properties of the acoustic space into which it is radiating. Wide source widths are desired by listeners of music because these are associated with the sound of acoustic music, opera, classical music, and historically informed performance. Research concerning ASW comes from the field of room acoustics, architectural acoustics and auralization, as well as musical acoustics, psychoacoustics and systematic musicology.
