In programming language theory, lazy evaluation, or call-by-need is an evaluation strategy which delays the evaluation of an expression until its value is needed and which also avoids repeated evaluations (sharing). The sharing can reduce the running time of certain functions by an exponential factor over other non-strict evaluation strategies, such as call-by-name, which repeatedly evaluate the same function, blindly, regardless whether the function can be memoized.

A one-instruction set computer (OISC), sometimes called an ultimate reduced instruction set computer (URISC), is an abstract machine that uses only one instruction – obviating the need for a machine language opcode. With a judicious choice for the single instruction and given infinite resources, an OISC is capable of being a universal computer in the same manner as traditional computers that have multiple instructions. OISCs have been recommended as aids in teaching computer architecture and have been used as computational models in structural computing research.

F# is a general purpose, strongly typed, multi-paradigm programming language that encompasses functional, imperative, and object-oriented programming methods. F# is most often used as a cross-platform Common Language Infrastructure (CLI) language, but it can also generate JavaScript and graphics processing unit (GPU) code.

Oz is a multiparadigm programming language, developed in the Programming Systems Lab at Université catholique de Louvain, for programming language education. It has a canonical textbook: Concepts, Techniques, and Models of Computer Programming.

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on more mathematical topics of computing, and includes the theory of computation.

An approximation is anything that is intentionally similar but not exactly equal to something else.

In compiler theory, dead code elimination is a compiler optimization to remove code which does not affect the program results. Removing such code has several benefits: it shrinks program size, an important consideration in some contexts, and it allows the running program to avoid executing irrelevant operations, which reduces its running time. It can also enable further optimizations by simplifying program structure. Dead code includes code that can never be executed, and code that only affects dead variables, that is, irrelevant to the program.

In computer programming, program slicing is the computation of the set of program statements, the program slice, that may affect the values at some point of interest, referred to as a slicing criterion. Program slicing can be used in debugging to locate source of errors more easily. Other applications of slicing include software maintenance, optimization, program analysis, and information flow control.

In computer science, a loop invariant is a property of a program loop that is true before each iteration. It is a logical assertion, sometimes checked within the code by an assertion call. Knowing its invariant(s) is essential in understanding the effect of a loop.

In functional programming, a monad is a design pattern that allows structuring programs generically while automating away boilerplate code needed by the program logic. Monads achieve this by providing their own data type, which represents a specific form of computation, along with one procedure to wrap values of any basic type within the monad and another to compose functions that output monadic values.

General-purpose computing on graphics processing units is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing. In addition, even a single GPU-CPU framework provides advantages that multiple CPUs on their own do not offer due to the specialization in each chip.

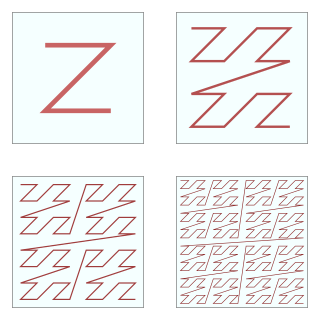

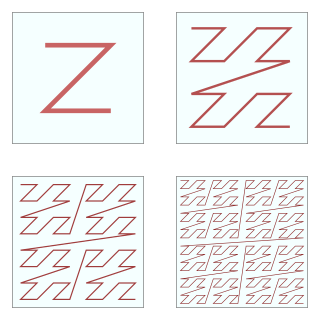

In mathematical analysis and computer science, functions which are Z-order, Lebesgue curve, Morton space filling curve, Morton order or Morton code map multidimensional data to one dimension while preserving locality of the data points. It is named after Guy Macdonald Morton, who first applied the order to file sequencing in 1966. The z-value of a point in multidimensions is simply calculated by interleaving the binary representations of its coordinate values. Once the data are sorted into this ordering, any one-dimensional data structure can be used such as binary search trees, B-trees, skip lists or hash tables. The resulting ordering can equivalently be described as the order one would get from a depth-first traversal of a quadtree.

Biclustering, block clustering , co-clustering, or two-mode clustering is a data mining technique which allows simultaneous clustering of the rows and columns of a matrix. The term was first introduced by Boris Mirkin to name a technique introduced many years earlier, in 1972, by J. A. Hartigan.

In computing, hardware acceleration is the use of computer hardware specially made to perform some functions more efficiently than is possible in software running on a general-purpose central processing unit (CPU). Any transformation of data or routine that can be computed, can be calculated purely in software running on a generic CPU, purely in custom-made hardware, or in some mix of both. An operation can be computed faster in application-specific hardware designed or programmed to compute the operation than specified in software and performed on a general-purpose computer processor. Each approach has advantages and disadvantages. The implementation of computing tasks in hardware to decrease latency and increase throughput is known as hardware acceleration.

Compile-time function execution is the ability of a compiler, that would normally compile a function to machine code and execute it at run time, to execute the function at compile time. This is possible if the arguments to the function are known at compile time, and the function does not make any reference to or attempt to modify any global state.

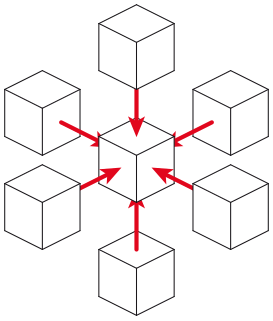

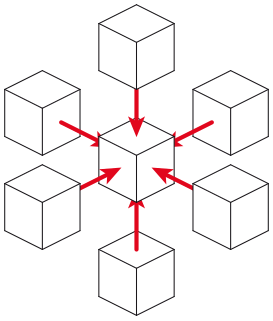

Stencil codes are a class of iterative kernels which update array elements according to some fixed pattern, called a stencil. They are most commonly found in the codes of computer simulations, e.g. for computational fluid dynamics in the context of scientific and engineering applications. Other notable examples include solving partial differential equations, the Jacobi kernel, the Gauss–Seidel method, image processing and cellular automata. The regular structure of the arrays sets stencil codes apart from other modeling methods such as the Finite element method. Most finite difference codes which operate on regular grids can be formulated as stencil codes.

Stochastic computing is a collection of techniques that represent continuous values by streams of random bits. Complex computations can then be computed by simple bit-wise operations on the streams. Stochastic computing is distinct from the study of randomized algorithms.

OptimJ is an extension for Java with language support for writing optimization models and abstractions for bulk data processing. The extensions and the proprietary product implementing the extensions were developed by Ateji which went out of business in September 2011. OptimJ aims at providing a clear and concise algebraic notation for optimization modeling, removing compatibility barriers between optimization modeling and application programming tools, and bringing software engineering techniques such as object-orientation and modern IDE support to optimization experts.

Approximate computing is a computation technique which returns a possibly inaccurate result rather than a guaranteed accurate result, and can be used for applications where an approximate result is sufficient for its purpose. One example of such situation is for a search engine where no exact answer may exist for a certain search query and hence, many answers may be acceptable. Similarly, occasional dropping of some frames in a video application can go undetected due to perceptual limitations of humans. Approximate computing is based on the observation that in many scenarios, although performing exact computation requires large amount of resources, allowing bounded approximation can provide disproportionate gains in performance and energy, while still achieving acceptable result accuracy. For example, in k-means clustering algorithm, allowing only 5% loss in classification accuracy can provide 50 times energy saving compared to the fully accurate classification.

Loop perforation is an approximate computing technique that allows to regularly skip some iterations of a loop.