Related Research Articles

The Dublin Core, also known as the Dublin Core Metadata Element Set (DCMES), is a set of fifteen main metadata items for describing digital or physical resources. The Dublin Core Metadata Initiative (DCMI) is responsible for formulating the Dublin Core; DCMI is a project of the Association for Information Science and Technology (ASIS&T), a non-profit organization.
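To make "fifteen metadata items" concrete, here is a minimal sketch of a record restricted to the fifteen DCMES element names and serialized as `dc:`-prefixed XML. It assumes the element namespace `http://purl.org/dc/elements/1.1/`. The `<metadata>` wrapper element and the function name `to_dublin_core_xml` are hypothetical choices for illustration. This is not an official DCMI tool.

```python
import xml.etree.ElementTree as ET

# The fifteen elements of the Dublin Core Metadata Element Set (DCMES).
DCMES_ELEMENTS = frozenset({
    "contributor", "coverage", "creator", "date", "description",
    "format", "identifier", "language", "publisher", "relation",
    "rights", "source", "subject", "title", "type",
})

# Namespace URI conventionally used for the DCMES elements.
DC_NS = "http://purl.org/dc/elements/1.1/"


def to_dublin_core_xml(record: dict[str, list[str]]) -> str:
    """Serialize a record as dc:-prefixed XML elements.

    Raises ValueError if a key is not one of the fifteen DCMES elements.
    The <metadata> wrapper is a hypothetical container for this sketch.
    """
    unknown = set(record) - DCMES_ELEMENTS
    if unknown:
        raise ValueError(f"not DCMES elements: {sorted(unknown)}")
    ET.register_namespace("dc", DC_NS)
    root = ET.Element("metadata")
    for name in sorted(record):
        for value in record[name]:  # each element is optional and repeatable
            ET.SubElement(root, f"{{{DC_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")
```

For example, `to_dublin_core_xml({"title": ["Example Report"], "creator": ["Doe, J."]})` yields a `<metadata>` element containing `<dc:creator>` and `<dc:title>` children. A non-DCMES key such as `"author"` is rejected.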

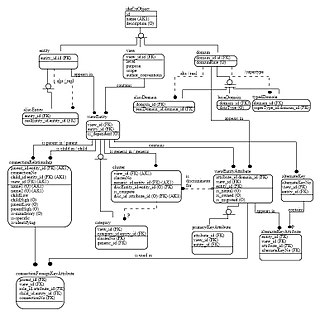

The Meta-Object Facility (MOF) is an Object Management Group (OMG) standard for model-driven engineering. Its purpose is to provide a type system for entities in the CORBA architecture and a set of interfaces through which those types can be created and manipulated. MOF may be used for domain-driven software design and object-oriented modelling.

Data modeling in software engineering is the process of creating a data model for an information system by applying certain formal techniques. It may be applied as part of a broader model-driven engineering (MDE) approach.

An information model in software engineering is a representation of concepts and the relationships, constraints, rules, and operations that specify data semantics for a chosen domain of discourse. Typically it specifies relations between kinds of things, but it may also include relations with individual things. It can provide a sharable, stable, and organized structure of information requirements or knowledge for the domain context.

Controlled vocabularies provide a way to organize knowledge for subsequent retrieval. They are used in subject indexing schemes, subject headings, thesauri, taxonomies and other knowledge organization systems. Controlled vocabulary schemes mandate the use of predefined, preferred terms that have been preselected by the designers of the schemes, in contrast to natural language vocabularies, which have no such restriction.
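The core rule of a controlled vocabulary is that only predefined, preferred terms may be assigned. Below is a minimal sketch of that rule. It assumes thesaurus-style "use for" entry terms that redirect to a preferred term, and it assumes case-insensitive matching as a design choice. The class name and the example terms are hypothetical.

```python
class ControlledVocabulary:
    """Preferred terms plus non-preferred entry terms that redirect to them."""

    def __init__(self) -> None:
        # Case-folded term (preferred or entry term) -> preferred term.
        self._lookup: dict[str, str] = {}

    def add_term(self, preferred: str, *entry_terms: str) -> None:
        """Register a preferred term and the synonyms it is 'used for'."""
        for term in (preferred, *entry_terms):
            self._lookup[term.casefold()] = preferred

    def normalize(self, term: str) -> str:
        """Return the preferred form of a term; reject terms outside the scheme."""
        try:
            return self._lookup[term.casefold()]
        except KeyError:
            raise KeyError(f"{term!r} is not in the controlled vocabulary") from None

    def index(self, *terms: str) -> set[str]:
        """Assign subject terms to a resource, always stored in preferred form."""
        return {self.normalize(t) for t in terms}


vocab = ControlledVocabulary()
vocab.add_term("Automobiles", "Cars", "Motor cars")
vocab.add_term("Bicycles", "Bikes")
```

With this setup, `vocab.index("cars", "Bikes")` returns `{"Automobiles", "Bicycles"}`. A natural-language term such as `"Vehicles"` that the scheme's designers did not preselect raises `KeyError` instead of being accepted.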

The ISO/IEC 11179 metadata registry (MDR) standard is an international ISO/IEC standard for representing metadata for an organization in a metadata registry. It documents the standardization and registration of metadata to make data understandable and shareable.
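The paragraph above does not say how a registry structures its entries. As a rough illustration, the sketch below loosely follows the decomposition used in ISO/IEC 11179-3, greatly simplified. In that decomposition, a data element pairs a data element concept (an object class plus a property) with a value domain, and the value domain may be enumerated or non-enumerated. The class names, the `DE-00001` identifier scheme, and the example element are assumptions for illustration, not the standard's normative model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataElementConcept:
    object_class: str   # the thing being described, e.g. "Address"
    property_name: str  # the characteristic of it, e.g. "Country Code"


@dataclass(frozen=True)
class ValueDomain:
    datatype: str
    # An enumerated domain lists its permissible values; None means non-enumerated.
    permissible_values: Optional[frozenset[str]] = None

    def accepts(self, value: str) -> bool:
        return self.permissible_values is None or value in self.permissible_values


@dataclass(frozen=True)
class DataElement:
    concept: DataElementConcept
    value_domain: ValueDomain

    @property
    def name(self) -> str:
        return f"{self.concept.object_class} {self.concept.property_name}"


class MetadataRegistry:
    """Toy registry that assigns each registered data element an identifier."""

    def __init__(self) -> None:
        self._items: dict[str, DataElement] = {}

    def register(self, element: DataElement) -> str:
        # Hypothetical identifier scheme; real registries define their own.
        identifier = f"DE-{len(self._items) + 1:05d}"
        self._items[identifier] = element
        return identifier

    def lookup(self, identifier: str) -> DataElement:
        return self._items[identifier]
```

For example, registering `DataElement(DataElementConcept("Address", "Country Code"), ValueDomain("string", frozenset({"DE", "NL", "US"})))` in a fresh registry returns `"DE-00001"`. Any consumer can then look up that shared definition and check values against its value domain.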

NIEMOpen, frequently referred to as NIEM, originated as an XML-based information exchange framework from the United States, but has transitioned to an OASIS Open Project. This initiative formalizes NIEM's designation as an official standard in national and international policy and procurement. NIEMOpen's Project Governing Board recently approved the first standard under this new project: the Conformance Targets Attribute Specification (CTAS) Version 3.0. A full collection of NIEMOpen standards is anticipated by the end of 2024.

The semantic spectrum, sometimes referred to as the ontology spectrum, the smart data continuum, or semantic precision, is a series of increasingly precise, or rather semantically expressive, definitions for data elements in knowledge representations, especially for machine use.

The term conceptual model refers to any model that is formed after a conceptualization or generalization process. Conceptual models are often abstractions of things in the real world, whether physical or social. Semantic studies are relevant to various stages of concept formation. Semantics is fundamentally a study of concepts, the meaning that thinking beings give to various elements of their experience.

The Clinical Data Interchange Standards Consortium (CDISC) is a standards developing organization (SDO) dealing with medical research data linked with healthcare, to "enable information system interoperability to improve medical research and related areas of healthcare". The standards support medical research from protocol through analysis and reporting of results and have been shown to decrease resources needed by 60% overall and 70–90% in the start-up stages when they are implemented at the beginning of the research process.

ISO 15926 is a standard for data integration, sharing, exchange, and hand-over between computer systems.

Fully Communication Oriented Information Modeling (FCO-IM) is a method for building conceptual information models. Such models can then be automatically transformed into entity-relationship models (ERM), Unified Modeling Language (UML), relational or dimensional models with the FCO-IM Bridge toolset, and it is possible to generate complete end-user applications from them with the IMAGine toolset. Both toolsets were developed by the Research and Competence Group Data Architectures & Metadata Management of the HAN University of Applied Sciences in Arnhem, the Netherlands.

The AgMES initiative was developed by the Food and Agriculture Organization (FAO) of the United Nations and aims to encompass issues of semantic standards in the domain of agriculture with respect to description, resource discovery, interoperability, and data exchange for different types of information resources.

Gellish is an ontology language for data storage and communication, designed and developed by Andries van Renssen since the mid-1990s. It started out as an engineering modeling language but evolved into a universal and extendable conceptual data modeling language with general applications. Because it includes domain-specific terminology and definitions, it is also a semantic data modeling language, and the Gellish modeling methodology is a member of the family of semantic modeling methodologies.

Metadata is "data that provides information about other data", but not the content of the data itself, such as the text of a message or the image itself. There are many distinct types of metadata, such as descriptive, structural, and administrative metadata.

A metadata standard is a requirement intended to establish a common understanding of the meaning or semantics of the data, to ensure correct and proper use and interpretation of the data by its owners and users. To achieve this common understanding, a number of characteristics, or attributes, of the data have to be defined; these are also known as metadata.
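The distinction between data and metadata, and the idea of a standard fixing which attributes get recorded, can be illustrated with a small sketch. The function below describes a payload by a fixed set of attributes without embedding the payload itself. The attribute list `METADATA_ATTRIBUTES` stands in for a hypothetical standard, and its field names are assumptions rather than those of any published metadata standard.

```python
import hashlib
import mimetypes
from datetime import datetime, timezone

# Hypothetical "standard": the attributes every description must carry, in order.
METADATA_ATTRIBUTES = ("filename", "size_bytes", "sha256", "media_type", "described_at")


def describe(filename: str, content: bytes) -> dict[str, object]:
    """Return metadata about `content` without embedding the content itself."""
    media_type, _encoding = mimetypes.guess_type(filename)
    return {
        "filename": filename,
        "size_bytes": len(content),
        "sha256": hashlib.sha256(content).hexdigest(),  # a fingerprint, not the data
        "media_type": media_type or "application/octet-stream",
        "described_at": datetime.now(timezone.utc).isoformat(),
    }
```

Because every record carries the same agreed attributes, any consumer can interpret `describe("note.txt", b"hello")` the same way, even though the text `hello` appears nowhere in the result.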

Object-oriented programming (OOP) is a programming paradigm based on the concept of objects, which can contain data and code: data in the form of fields, and code in the form of procedures.
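As a minimal illustration of an object that contains both data (fields) and code (procedures, usually called methods), consider the sketch below. The `Account` class and its rules are hypothetical examples chosen only to show the pattern.

```python
class Account:
    """An object bundles data (fields) with the code (methods) that operates on it."""

    def __init__(self, owner: str, balance: int = 0) -> None:
        self.owner = owner        # field
        self.balance = balance    # field

    def deposit(self, amount: int) -> None:
        """A procedure attached to the object; it updates the object's own field."""
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount
```

After `a = Account("Ada")` and `a.deposit(50)`, the object's `balance` field is `50`. The validation rule lives alongside the data it protects, which is the main point of grouping fields and procedures into one object.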

ODABA is a terminology-oriented database management system, which is a conceptual extension of an object-oriented database system, and implements concepts defined in a terminology model. ODABA supports typical standards and technologies for object-oriented databases, but also terminology-oriented database extensions. ODABA also behaves like an object–relational database management system, i.e. data is seen as being stored in a database rather than accessing persistent objects in a programming environment. ODABA supports active data link (ADL) and provides an ADL-based GUI framework.

A terminology-oriented database or terminology-oriented database management system is a conceptual extension of an object-oriented database. It implements concepts defined in a terminology model. Compared with object-oriented databases, a terminology-oriented database requires some minor conceptual extensions at the schema level, such as support for set relations, weakly typed collections, or shared inheritance.

In computing, a data definition specification (DDS) is a guideline to ensure comprehensive and consistent data definition. It represents the attributes required to quantify data definition. A comprehensive data definition specification encompasses enterprise data, the hierarchy of data management, prescribed guidance enforcement and criteria to determine compliance.
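One part of a data definition specification, the criteria to determine compliance, can be sketched as a checker. It reports which required attributes each data definition is missing. The attribute names in `REQUIRED_ATTRIBUTES` and the example definitions are hypothetical; an actual specification would set its own required attributes and criteria.

```python
from dataclasses import dataclass

# Hypothetical specification: attributes every data definition must supply.
REQUIRED_ATTRIBUTES = ("name", "definition", "datatype", "steward")


@dataclass
class ComplianceReport:
    element: str
    missing: list[str]

    @property
    def compliant(self) -> bool:
        return not self.missing


def check_definitions(definitions: list[dict[str, str]]) -> list[ComplianceReport]:
    """Flag every required attribute that is absent or blank in each definition."""
    reports = []
    for d in definitions:
        missing = [a for a in REQUIRED_ATTRIBUTES if not d.get(a, "").strip()]
        reports.append(ComplianceReport(d.get("name", "<unnamed>"), missing))
    return reports
```

For instance, a definition that supplies only a `name` and an empty `definition` is reported as missing `definition`, `datatype`, and `steward`. A fully populated definition is reported as compliant.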
