Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures.

3D scanning is the process of analyzing a real-world object or environment to collect three-dimensional data on its shape and possibly its appearance. The collected data can then be used to construct digital 3D models.

In computer graphics and computer vision, image-based modeling and rendering (IBMR) methods rely on a set of two-dimensional images of a scene to generate a three-dimensional model and then render some novel views of this scene.

Facial motion capture is the process of electronically converting the movements of a person's face into a digital database using cameras or laser scanners. This database may then be used to produce computer graphics (CG) and computer animation for movies, games, or real-time avatars. Because the motion of CG characters is derived from the movements of real people, the resulting character animation is more realistic and nuanced than animation created manually.

Structure from motion (SfM) is a photogrammetric range imaging technique for estimating three-dimensional structures from two-dimensional image sequences that may be coupled with local motion signals. It is studied in the fields of computer vision and visual perception.
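One building block of such pipelines is triangulation: once the cameras are known, a point seen in two images can be placed in 3D. The following is a minimal sketch of linear (DLT) triangulation, not a full SfM system. It assumes the two projection matrices are already available, whereas a real pipeline would also have to estimate camera motion from the images. The camera setup, the helper names `triangulate_point` and `project`, and the test point are all hypothetical.

```python
import numpy as np

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices (assumed known here).
    x1, x2: (u, v) image coordinates of the same point in each view.
    """
    # Each view contributes two linear constraints on the homogeneous point.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point X with projection matrix P to image coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: identity intrinsics, second camera shifted along x.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, 0.2, 4.0])
X_est = triangulate_point(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free observations, as here, `X_est` recovers `X_true` up to floating-point error; with real image measurements the result is only a least-squares estimate and is usually refined further.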

Articulated body pose estimation in computer vision is the study of algorithms and systems that recover the pose of an articulated body, which consists of joints and rigid parts, using image-based observations. It is one of the longest-lasting problems in computer vision because of the complexity of the models that relate observation to pose, and because of the variety of situations in which it would be useful.
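The relationship between pose and observation can be illustrated with a toy forward model. The sketch below assumes a planar chain of rigid parts connected by joints, where the pose is a list of relative joint angles; the function name `joint_positions` and the two-segment example are hypothetical. Pose estimation is the inverse problem: recovering such angles from observed joint locations in an image.

```python
import numpy as np

def joint_positions(root, lengths, angles):
    """Forward kinematics of a planar chain of rigid parts.

    root:    (x, y) position of the first joint.
    lengths: length of each rigid part.
    angles:  relative joint angles in radians, one per part.
    Returns an array of joint positions, starting with the root.
    """
    points = [np.asarray(root, dtype=float)]
    heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle  # each joint rotates relative to its parent part
        step = length * np.array([np.cos(heading), np.sin(heading)])
        points.append(points[-1] + step)
    return np.stack(points)

# Hypothetical two-part "arm": the first part points up, the second turns right.
arm = joint_positions(root=(0.0, 0.0), lengths=[1.0, 1.0],
                      angles=[np.pi / 2, -np.pi / 2])
```

Even this simple model is non-linear in the angles, which hints at why relating image observations to pose becomes difficult for full 3D bodies with many joints.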

Object recognition is a technology in the field of computer vision for finding and identifying objects in an image or video sequence. Humans recognize a multitude of objects in images with little effort, even though the image of an object may vary with viewpoint, size, and scale, or when the object is translated or rotated. Objects can even be recognized when they are partially obstructed from view. This task is still a challenge for computer vision systems. Many approaches to the task have been implemented over multiple decades.

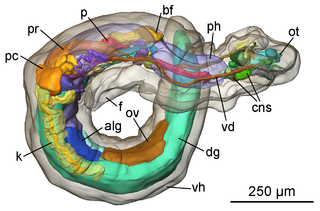

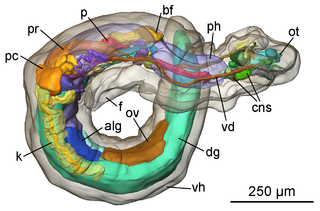

In computer vision and computer graphics, 3D reconstruction is the process of capturing the shape and appearance of real objects. This process can be accomplished by either active or passive methods. If the model is allowed to change its shape over time, this is referred to as non-rigid or spatio-temporal reconstruction.

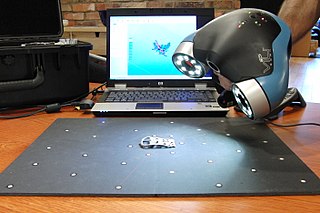

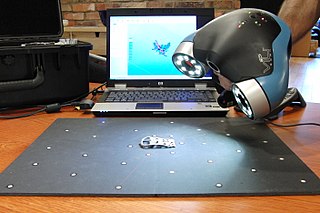

A structured-light 3D scanner is a 3D scanning device for measuring the three-dimensional shape of an object using projected light patterns and a camera system.
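The text does not say which light patterns are projected. One common choice is a sequence of binary Gray-code stripe patterns, which lets each camera pixel work out which projector column illuminated it. The sketch below assumes that choice; the function names and the 8-column projector are hypothetical, and the final triangulation of depth from the camera-projector correspondence is not shown.

```python
def gray_encode(n: int) -> int:
    """Convert a column index to its Gray code."""
    return n ^ (n >> 1)

def gray_decode(g: int) -> int:
    """Convert a Gray code back to the column index."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def column_patterns(width: int, bits: int):
    """Build one stripe pattern per bit, most significant bit first.

    patterns[b][x] is 1 where the projector lights column x in pattern b.
    """
    return [[(gray_encode(x) >> (bits - 1 - b)) & 1 for x in range(width)]
            for b in range(bits)]

def decode_column(observed_bits):
    """Recover the projector column from the on/off sequence a pixel observed."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)

patterns = column_patterns(width=8, bits=3)

# Bits a camera pixel would observe if it looks at projector column 5.
seen = [pattern[5] for pattern in patterns]
column = decode_column(seen)
```

Gray codes are attractive here because neighbouring columns differ in exactly one bit, so a misread at a stripe boundary shifts the decoded column by at most one.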

2.5D is an effect in visual perception. It is the construction of an apparently three-dimensional environment from 2D retinal projections. While the result is technically 2D, it allows for the illusion of depth. It is easier for the eye to discern the distance between two items than the depth of a single object in the field of view. Computers can use 2.5D to make images of human faces look lifelike.

In computer science, landmark detection is the process of finding significant landmarks in an image. This originally referred to finding landmarks for navigational purposes, for instance in robot vision or when creating maps from satellite images. Methods used in navigation have been extended to other fields, notably facial recognition, where landmark detection is used to identify key points on a face. It also has important applications in medicine, where it identifies anatomical landmarks in medical images.

DeepFace is a deep learning facial recognition system created by a research group at Facebook. It identifies human faces in digital images. The program employs a nine-layer neural network with over 120 million connection weights and was trained on four million images uploaded by Facebook users. The Facebook Research team has stated that the DeepFace method reaches an accuracy of 97.35% ± 0.25% on the Labeled Faces in the Wild (LFW) data set, on which human beings score 97.53%. Because the human figure lies within DeepFace's stated margin (up to 97.60%), DeepFace is sometimes more successful than human beings on this benchmark.

As a result of growing societal concerns, Meta announced plans to shut down the Facebook facial recognition system and to delete the face scan data of more than one billion users. This change represents one of the largest shifts in facial recognition usage in the technology's history. Facebook planned to delete more than one billion facial recognition templates, which are digital scans of facial features, by December 2021. However, it did not plan to eliminate DeepFace, the software that powers the facial recognition system. According to a Meta spokesperson, the company has also not ruled out incorporating facial recognition technology into future products.

Michael J. Black is an American-born computer scientist working in Tübingen, Germany. He is a founding director at the Max Planck Institute for Intelligent Systems where he leads the Perceiving Systems Department in research focused on computer vision, machine learning, and computer graphics. He is also an Honorary Professor at the University of Tübingen.

In physics and probability, the filters, random fields, and maximum entropy (FRAME) model is a Markov random field model of stationary spatial processes in which the energy function is a sum of translation-invariant potential functions, each a one-dimensional non-linear transformation of a linear filter response. The FRAME model was originally developed by Song-Chun Zhu, Ying Nian Wu, and David Mumford for modeling stochastic texture patterns such as grass, tree leaves, brick walls, and water waves. It is the maximum entropy distribution that reproduces the observed marginal histograms of responses from a bank of filters; for each filter, tuned to a specific scale and orientation, the marginal histogram is pooled over all pixels in the image domain. The FRAME model has also been proved equivalent to the micro-canonical ensemble, which was named the Julesz ensemble. A Gibbs sampler is used to synthesize texture images by drawing samples from the FRAME model.
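The description above can be written compactly. The notation here is an assumption introduced for illustration: $I$ is an image on the pixel domain $D$, $F_1, \dots, F_K$ are the linear filters, $[F_k * I](x)$ is the response of filter $k$ at pixel $x$, and $\lambda_k$ is the one-dimensional potential function attached to filter $k$. Sign conventions for the exponent vary between presentations; the form below follows the energy convention used in the text.

$$
p(I; \Lambda) = \frac{1}{Z(\Lambda)} \exp\left\{ -\sum_{k=1}^{K} \sum_{x \in D} \lambda_k\big([F_k * I](x)\big) \right\},
\qquad \Lambda = (\lambda_1, \dots, \lambda_K)
$$

Here $Z(\Lambda)$ is the normalizing constant. The maximum entropy property corresponds to choosing $\Lambda$ so that, for every filter, the expected marginal histogram of responses under $p$ matches the histogram observed in the training texture:

$$
\mathbb{E}_{p(I;\Lambda)}\big[H_k(I)\big] = H_k^{\mathrm{obs}}, \qquad k = 1, \dots, K
$$

where $H_k(I)$ denotes the histogram of $[F_k * I](x)$ pooled over all $x \in D$.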

Lyndon Neal Smith is an English academic who is Professor in Computer Simulation and Machine Vision at the School of Engineering at the University of the West of England. He is also Director of the Centre for Machine Vision at the Bristol Robotics Laboratory.

Xiaoming Liu is a Chinese-American computer scientist and academic. He is a Professor in the Department of Computer Science and Engineering, an MSU Foundation Professor, and the Anil K. and Nandita Jain Endowed Professor of Engineering at Michigan State University.

Gérard G. Medioni is a computer scientist, author, academic and inventor. He is a vice president and distinguished scientist at Amazon and serves as emeritus professor of Computer Science at the University of Southern California.

In computer vision and computer graphics, the 3D Face Morphable Model (3DFMM) is a generative technique for modeling textured 3D faces. The generation of new faces is based on a pre-existing database of example faces acquired through a 3D scanning procedure. All these faces are in dense point-to-point correspondence, which enables the generation of a new realistic face (morph) by combining the acquired faces. A new 3D face can be inferred from one or multiple existing images of a face or by arbitrarily combining the example faces. 3DFMM provides a way to represent face shape and texture disentangled from external factors, such as camera parameters and illumination.
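Dense point-to-point correspondence is what makes combining faces meaningful: the i-th vertex refers to the same facial location in every example, so vertices can be averaged coordinate by coordinate. The sketch below illustrates this with a plain weighted average of example faces. The data are random stand-ins rather than scanned faces, the function name `morph` is hypothetical, and published models typically use a statistical basis such as PCA over the examples instead of raw weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in data: 4 example faces, each with 5 vertices.
# Shapes are flattened (x, y, z) coordinates; textures are flattened (r, g, b).
example_shapes = rng.normal(size=(4, 15))
example_textures = rng.uniform(size=(4, 15))

def morph(examples, weights):
    """Combine example faces with weights normalized to sum to one."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    return weights @ examples

# Shape and texture are combined independently of each other.
new_shape = morph(example_shapes, [0.5, 0.5, 0.0, 0.0]).reshape(-1, 3)
new_texture = morph(example_textures, [0.0, 0.0, 1.0, 0.0]).reshape(-1, 3)
```

In this example the new face takes its shape halfway between the first two examples and its texture entirely from the third, which reflects the separate treatment of shape and texture described above.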