In complex analysis, an entire function, also called an integral function, is a complex-valued function that is holomorphic on the whole complex plane. Typical examples of entire functions are polynomials and the exponential function, and any finite sums, products and compositions of these, such as the trigonometric functions sine and cosine and their hyperbolic counterparts sinh and cosh, as well as derivatives and integrals of entire functions such as the error function. If an entire function f(z) has a root at w, then f(z)/(z − w), taking the limit value at w, is an entire function. On the other hand, the natural logarithm, the reciprocal function, and the square root are all not entire functions, nor can they be continued analytically to an entire function.

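In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = (1 / (σ√(2π))) exp(−(x − μ)² / (2σ²)), where μ is the mean and σ is the standard deviation of the distribution.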

In probability theory and statistics, the exponential distribution or negative exponential distribution is the probability distribution of the distance between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate; the distance parameter could be any meaningful mono-dimensional measure of the process, such as time between production errors, or length along a roll of fabric in the weaving manufacturing process. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts.
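
The memoryless property can be checked directly from the survival function. The following is a minimal sketch, assuming the rate parameterization P(X > x) = exp(−rate · x); the function names are illustrative and not taken from any library.

```python
import math


def exponential_survival(x: float, rate: float) -> float:
    """P(X > x) for X ~ Exponential(rate), with x >= 0 and rate > 0."""
    return math.exp(-rate * x)


def conditional_survival(s: float, t: float, rate: float) -> float:
    """P(X > s + t | X > s), computed from the definition of conditional probability."""
    return exponential_survival(s + t, rate) / exponential_survival(s, rate)


# Memorylessness: having already waited s does not change the law of the
# remaining wait, so the conditional survival equals P(X > t).
s, t, rate = 2.0, 3.0, 0.5
print(conditional_survival(s, t, rate), exponential_survival(t, rate))
```

The two printed values agree up to floating-point rounding for any non-negative s and t.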

In probability theory, a log-normal (or lognormal) distribution is a continuous probability distribution of a random variable whose logarithm is normally distributed. Thus, if the random variable X is log-normally distributed, then Y = ln(X) has a normal distribution. Equivalently, if Y has a normal distribution, then the exponential function of Y, X = exp(Y), has a log-normal distribution. A random variable which is log-normally distributed takes only positive real values. It is a convenient and useful model for measurements in exact and engineering sciences, as well as medicine, economics and other topics (e.g., energies, concentrations, lengths, prices of financial instruments, and other metrics).
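
The relation X = exp(Y) gives the log-normal density by a change of variables: the density of X at x is the normal density at ln(x) divided by x. The sketch below assumes μ and σ denote the mean and standard deviation of Y = ln(X); the function names are illustrative.

```python
import math


def normal_pdf(y: float, mu: float, sigma: float) -> float:
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def lognormal_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of X = exp(Y) with Y ~ Normal(mu, sigma); zero for x <= 0."""
    if x <= 0.0:
        return 0.0
    # Change of variables: f_X(x) = f_Y(ln x) * |d(ln x)/dx| = f_Y(ln x) / x
    return normal_pdf(math.log(x), mu, sigma) / x


def lognormal_cdf(x: float, mu: float, sigma: float) -> float:
    """P(X <= x), which equals P(Y <= ln x) for the underlying normal Y."""
    if x <= 0.0:
        return 0.0
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))
```

Because the logarithm is monotonic, the median of X is exp(μ), so `lognormal_cdf(math.exp(mu), mu, sigma)` evaluates to 0.5.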

In probability theory and statistics, the gamma distribution is a versatile two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use:

- With a shape parameter α and a scale parameter θ

- With a shape parameter α and a rate parameter λ = 1/θ
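
The equivalence of the two parameterizations can be sketched numerically. The code below assumes the rate is the reciprocal of the scale, written here as `lam = 1 / theta`; the symbol `lam` and the function names are illustrative choices.

```python
import math


def gamma_pdf_scale(x: float, alpha: float, theta: float) -> float:
    """Gamma density with shape alpha and scale theta, for x > 0."""
    return x ** (alpha - 1.0) * math.exp(-x / theta) / (math.gamma(alpha) * theta ** alpha)


def gamma_pdf_rate(x: float, alpha: float, lam: float) -> float:
    """Gamma density with shape alpha and rate lam, for x > 0."""
    return lam ** alpha * x ** (alpha - 1.0) * math.exp(-lam * x) / math.gamma(alpha)


# Same distribution, two parameterizations: rate = 1 / scale.
x, alpha, theta = 1.7, 2.5, 0.4
print(gamma_pdf_scale(x, alpha, theta), gamma_pdf_rate(x, alpha, 1.0 / theta))
```

Setting the shape to 1 in either form reduces the density to that of the exponential distribution, which is the special case mentioned above.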

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form f(x) = exp(−x²) and with parametric extension f(x) = a exp(−(x − b)² / (2c²)) for arbitrary real constants a, b and non-zero c. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter a is the height of the curve's peak, b is the position of the center of the peak, and c controls the width of the "bell".
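
The roles of the three parameters can be illustrated with a short sketch. It assumes the common parametric form a · exp(−(x − b)² / (2c²)); the function name is illustrative.

```python
import math


def gaussian(x: float, a: float, b: float, c: float) -> float:
    """Gaussian function with peak height a, center b, and width parameter c (c != 0)."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c * c))


a, b, c = 3.0, 1.0, 0.5
print(gaussian(b, a, b, c))  # value at the center, equal to the peak height a

# The curve falls to half its peak at a distance c * sqrt(2 ln 2) from the center.
half_width = c * math.sqrt(2.0 * math.log(2.0))
print(gaussian(b + half_width, a, b, c))  # approximately a / 2
```

Under this form the full width at half maximum is 2 · sqrt(2 ln 2) · c, which is one concrete sense in which c controls the width.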

In probability theory and statistics, the Gumbel distribution is used to model the distribution of the maximum of a number of samples of various distributions.
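
As one illustration, the maximum of n independent unit-rate exponential samples, shifted by ln(n), has a distribution that approaches the standard Gumbel distribution as n grows. The sketch below compares the two cumulative distribution functions; the choice of the exponential distribution and the function names are assumptions made for the example.

```python
import math


def gumbel_cdf(x: float, mu: float = 0.0, beta: float = 1.0) -> float:
    """Gumbel CDF with location mu and scale beta: exp(-exp(-(x - mu) / beta))."""
    return math.exp(-math.exp(-(x - mu) / beta))


def max_exponential_cdf(x: float, n: int) -> float:
    """Exact CDF of max(X_1, ..., X_n) - ln(n) for i.i.d. unit-rate exponentials."""
    shifted = x + math.log(n)
    if shifted <= 0.0:
        return 0.0
    # P(max <= shifted) = P(X_1 <= shifted) ** n by independence
    return (1.0 - math.exp(-shifted)) ** n


for n in (10, 100, 10_000):
    print(n, max_exponential_cdf(0.5, n), gumbel_cdf(0.5))
```

The gap between the two columns shrinks as n increases.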

In probability theory, a distribution is said to be stable if a linear combination of two independent random variables with this distribution has the same distribution, up to location and scale parameters. A random variable is said to be stable if its distribution is stable. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.

Variational Bayesian methods are a family of techniques for approximating intractable integrals arising in Bayesian inference and machine learning. They are typically used in complex statistical models consisting of observed variables as well as unknown parameters and latent variables, with various sorts of relationships among the three types of random variables, as might be described by a graphical model. As typical in Bayesian inference, the parameters and latent variables are grouped together as "unobserved variables". Variational Bayesian methods are primarily used for two purposes:

- To provide an analytical approximation to the posterior probability of the unobserved variables, in order to do statistical inference over these variables.

- To derive a lower bound for the marginal likelihood of the observed data. This is typically used for performing model selection, the general idea being that a higher marginal likelihood for a given model indicates a better fit of the data by that model and hence a greater probability that the model in question was the one that generated the data.
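
The lower bound in the second point can be sketched on a toy model with one binary latent variable. The joint probabilities below are hypothetical numbers chosen for illustration, and `elbo` is an illustrative name for the bound sum_z q(z) [log p(x, z) − log q(z)].

```python
import math


def elbo(joint: list[float], q: list[float]) -> float:
    """Lower bound on log p(x) for a discrete latent z.

    joint[z] holds p(x, z) for the fixed observed x; q[z] is the approximating
    distribution over z. Terms with q[z] == 0 contribute nothing.
    """
    return sum(qz * (math.log(pz) - math.log(qz)) for pz, qz in zip(joint, q) if qz > 0.0)


# Hypothetical joint probabilities p(x, z=0) and p(x, z=1) for one observed x.
joint = [0.12, 0.28]
log_evidence = math.log(sum(joint))             # log marginal likelihood log p(x)
posterior = [p / sum(joint) for p in joint]     # exact posterior p(z | x)

print(elbo(joint, [0.5, 0.5]), log_evidence)    # a generic q gives a strict lower bound
print(elbo(joint, posterior), log_evidence)     # the bound is tight at the exact posterior
```

The gap between the bound and the log marginal likelihood is the Kullback–Leibler divergence from q to the exact posterior, which is why tightening the bound also improves the posterior approximation.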

In probability theory and statistics, the generalized extreme value (GEV) distribution is a family of continuous probability distributions developed within extreme value theory to combine the Gumbel, Fréchet and Weibull families, also known as type I, II and III extreme value distributions. By the extreme value theorem the GEV distribution is the only possible limit distribution of properly normalized maxima of a sequence of independent and identically distributed random variables. Note that a limit distribution needs to exist, which requires regularity conditions on the tail of the distribution. Despite this, the GEV distribution is often used as an approximation to model the maxima of long (finite) sequences of random variables.
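
How a single family covers the three types can be sketched through the cumulative distribution function. The code assumes the usual parameterization with location `mu`, scale `sigma` and shape `xi` (symbols not given in the text above), where xi = 0 corresponds to the Gumbel type, xi > 0 to the Fréchet type and xi < 0 to the Weibull type.

```python
import math


def gev_cdf(x: float, mu: float = 0.0, sigma: float = 1.0, xi: float = 0.0) -> float:
    """CDF of the generalized extreme value distribution (sigma > 0)."""
    s = (x - mu) / sigma
    if xi == 0.0:
        # Gumbel case, the limit of the general expression as xi -> 0
        return math.exp(-math.exp(-s))
    base = 1.0 + xi * s
    if base <= 0.0:
        # Outside the support: below the lower endpoint when xi > 0,
        # above the upper endpoint when xi < 0.
        return 0.0 if xi > 0.0 else 1.0
    return math.exp(-(base ** (-1.0 / xi)))


print(gev_cdf(0.3, xi=1e-6), gev_cdf(0.3, xi=0.0))  # nearly equal: continuity at xi = 0
```

A positive shape gives a heavy upper tail with a finite lower endpoint, while a negative shape gives a finite upper endpoint.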

In probability theory and statistics, the inverse gamma distribution is a two-parameter family of continuous probability distributions on the positive real line, which is the distribution of the reciprocal of a variable distributed according to the gamma distribution.
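
The reciprocal relationship can be verified by a change of variables: if Y is gamma distributed and X = 1/Y, the density of X at x is the density of Y at 1/x divided by x². The sketch assumes a shape `alpha` and a parameter `beta` that acts as the rate of the gamma variable and as the scale of its reciprocal; these names are illustrative.

```python
import math


def gamma_pdf_rate(y: float, alpha: float, beta: float) -> float:
    """Gamma density with shape alpha and rate beta, for y > 0."""
    return beta ** alpha * y ** (alpha - 1.0) * math.exp(-beta * y) / math.gamma(alpha)


def inverse_gamma_pdf(x: float, alpha: float, beta: float) -> float:
    """Inverse gamma density with shape alpha and scale beta, for x > 0."""
    return beta ** alpha * x ** (-alpha - 1.0) * math.exp(-beta / x) / math.gamma(alpha)


# Change of variables X = 1 / Y: f_X(x) = f_Y(1 / x) / x**2
x, alpha, beta = 0.8, 3.0, 2.0
print(inverse_gamma_pdf(x, alpha, beta), gamma_pdf_rate(1.0 / x, alpha, beta) / x ** 2)
```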

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class, then the distribution with the largest entropy should be chosen as the least-informative default. The motivation is twofold: first, maximizing entropy minimizes the amount of prior information built into the distribution; second, many physical systems tend to move towards maximal entropy configurations over time.

In physics, the Tsallis entropy is a generalization of the standard Boltzmann–Gibbs entropy. It is proportional to the expectation of the q-logarithm of a distribution.
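
The link to the q-logarithm can be sketched for a discrete distribution. The code assumes the common definition ln_q(x) = (x^(1−q) − 1) / (1 − q) and sets the proportionality constant to 1, so that S_q = sum_i p_i ln_q(1 / p_i); the function names are illustrative.

```python
import math


def q_log(x: float, q: float) -> float:
    """q-logarithm of x > 0; reduces to the natural logarithm at q = 1."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def tsallis_entropy(probs: list[float], q: float) -> float:
    """Tsallis entropy with unit constant, as the expectation of ln_q(1 / p)."""
    return sum(p * q_log(1.0 / p, q) for p in probs if p > 0.0)


probs = [0.5, 0.3, 0.2]
print(tsallis_entropy(probs, 1.0))       # q = 1: the Boltzmann-Gibbs-Shannon entropy
print(tsallis_entropy(probs, 1.000001))  # close to the q = 1 value
print(tsallis_entropy(probs, 2.0))       # equals 1 - sum(p**2) for q = 2
```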

In statistics, a power transform is a family of functions applied to create a monotonic transformation of data using power functions. It is a data transformation technique used to stabilize variance, make the data more normal distribution-like, improve the validity of measures of association, and for other data stabilization procedures.
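
One widely used member of this family is the Box–Cox transform, which is not named in the text above and is shown here only as an example. The sketch assumes strictly positive data and uses `lam` for the power parameter.

```python
import math


def box_cox(x: float, lam: float) -> float:
    """Box-Cox power transform of a positive value x.

    (x**lam - 1) / lam for lam != 0, and ln(x) for lam == 0, which is the
    limit of the first expression as lam -> 0.
    """
    if x <= 0.0:
        raise ValueError("Box-Cox requires strictly positive data")
    if lam == 0.0:
        return math.log(x)
    return (x ** lam - 1.0) / lam


data = [0.5, 1.0, 2.0, 8.0]
for lam in (1.0, 0.5, 0.0):
    # The transform is monotonic in x, so the ordering of the data is preserved.
    print(lam, [round(box_cox(x, lam), 4) for x in data])
```

In practice the power parameter is usually chosen from the data, for example by maximum likelihood; that step is outside this sketch.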

In statistics, a Tsallis distribution is a probability distribution derived from the maximization of the Tsallis entropy under appropriate constraints. There are several different families of Tsallis distributions, yet different sources may reference an individual family as "the Tsallis distribution". The q-Gaussian is a generalization of the Gaussian in the same way that Tsallis entropy is a generalization of standard Boltzmann–Gibbs entropy or Shannon entropy. Similarly, if the domain of the variable is constrained to be positive in the maximum entropy procedure, the q-exponential distribution is derived.

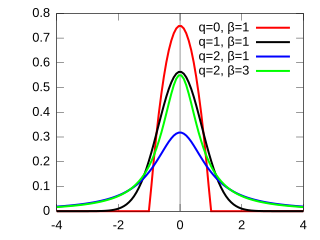

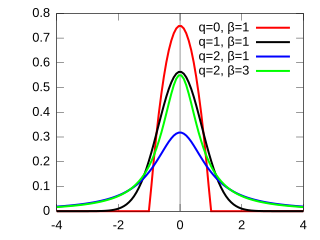

The q-Gaussian is a probability distribution arising from the maximization of the Tsallis entropy under appropriate constraints. It is one example of a Tsallis distribution. The q-Gaussian is a generalization of the Gaussian in the same way that Tsallis entropy is a generalization of standard Boltzmann–Gibbs entropy or Shannon entropy. The normal distribution is recovered as q → 1.
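
The q → 1 limit can be sketched with the q-exponential function. The code assumes the common definition e_q(x) = [1 + (1 − q)x]^(1/(1−q)), cut off at zero when the bracket is not positive, and an unnormalized q-Gaussian shape e_q(−βx²); `beta` and the function names are illustrative.

```python
import math


def q_exp(x: float, q: float) -> float:
    """q-exponential of x; reduces to exp(x) at q = 1."""
    if q == 1.0:
        return math.exp(x)
    base = 1.0 + (1.0 - q) * x
    if base <= 0.0:
        # For q < 1 the function is cut off at zero; for q > 1 it diverges.
        return 0.0 if q < 1.0 else math.inf
    return base ** (1.0 / (1.0 - q))


def q_gaussian_shape(x: float, q: float, beta: float) -> float:
    """Unnormalized q-Gaussian e_q(-beta * x**2), with beta > 0."""
    return q_exp(-beta * x * x, q)


x, beta = 0.7, 1.5
print(q_gaussian_shape(x, 1.000001, beta), math.exp(-beta * x * x))  # Gaussian limit
print(q_gaussian_shape(x, 2.0, beta), 1.0 / (1.0 + beta * x * x))    # Cauchy-like shape at q = 2
```

For q < 1 the shape has bounded support, and for q > 1 it has power-law tails.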

The q-exponential distribution is a probability distribution arising from the maximization of the Tsallis entropy under appropriate constraints, including constraining the domain to be positive. It is one example of a Tsallis distribution. The q-exponential is a generalization of the exponential distribution in the same way that Tsallis entropy is a generalization of standard Boltzmann–Gibbs entropy or Shannon entropy. The exponential distribution is recovered as q → 1.
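
A density for this distribution can be sketched as follows, assuming the common form (2 − q) · λ · e_q(−λx) for x ≥ 0 with q < 2 and rate λ > 0; the parameter name `lam` and the function names are illustrative.

```python
import math


def q_exp(x: float, q: float) -> float:
    """q-exponential [1 + (1 - q) x]^(1 / (1 - q)), cut off at zero; exp(x) at q = 1."""
    if q == 1.0:
        return math.exp(x)
    base = 1.0 + (1.0 - q) * x
    if base <= 0.0:
        return 0.0 if q < 1.0 else math.inf
    return base ** (1.0 / (1.0 - q))


def q_exponential_pdf(x: float, q: float, lam: float) -> float:
    """Density (2 - q) * lam * e_q(-lam * x) on x >= 0, assuming q < 2 and lam > 0."""
    if q >= 2.0:
        raise ValueError("the density is only normalizable for q < 2")
    if x < 0.0:
        return 0.0
    return (2.0 - q) * lam * q_exp(-lam * x, q)


print(q_exponential_pdf(1.0, 1.0, 2.0), 2.0 * math.exp(-2.0))  # ordinary exponential at q = 1
print(q_exponential_pdf(1.0, 0.5, 1.0))                        # bounded-support case, q < 1
```

For q < 1 the density vanishes beyond x = 1 / (λ(1 − q)), while for 1 < q < 2 it decays as a power law.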

In probability and statistics, the reciprocal distribution, also known as the log-uniform distribution, is a continuous probability distribution. It is characterised by its probability density function, within the support of the distribution, being proportional to the reciprocal of the variable.
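
A minimal sketch of this density, assuming the support is an interval [a, b] with 0 < a < b, so that the normalizing constant is ln(b / a); the function names are illustrative.

```python
import math


def reciprocal_pdf(x: float, a: float, b: float) -> float:
    """Density 1 / (x * ln(b / a)) on [a, b], zero elsewhere."""
    if x < a or x > b:
        return 0.0
    return 1.0 / (x * math.log(b / a))


def reciprocal_cdf(x: float, a: float, b: float) -> float:
    """P(X <= x) = ln(x / a) / ln(b / a) on [a, b]."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return math.log(x / a) / math.log(b / a)


def reciprocal_quantile(u: float, a: float, b: float) -> float:
    """Inverse CDF; applying it to a uniform(0, 1) draw u samples the distribution."""
    return a * (b / a) ** u


# Each decade carries equal probability, hence the name log-uniform.
print(reciprocal_cdf(10.0, 1.0, 100.0))  # half the mass lies in [1, 10]
```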

In statistics, the q-Weibull distribution is a probability distribution that generalizes the Weibull distribution and the Lomax distribution. It is one example of a Tsallis distribution.

Kaniadakis statistics is a generalization of Boltzmann–Gibbs statistical mechanics, based on a relativistic generalization of the classical Boltzmann–Gibbs–Shannon entropy. Introduced by the Greek-Italian physicist Giorgio Kaniadakis in 2001, κ-statistical mechanics preserves the main features of ordinary statistical mechanics and has attracted the interest of many researchers in recent years. The κ-distribution is currently considered one of the most viable candidates for explaining complex physical, natural or artificial systems involving power-law tailed statistical distributions. Kaniadakis statistics has been adopted successfully in the description of a variety of systems in the fields of cosmology, astrophysics, condensed matter, quantum physics, seismology, genomics, economics, epidemiology, and many others.