In logic and computer science, the Boolean satisfiability problem is the problem of determining if there exists an interpretation that satisfies a given Boolean formula. In other words, it asks whether the variables of a given Boolean formula can be consistently replaced by the values TRUE or FALSE in such a way that the formula evaluates to TRUE. If this is the case, the formula is called satisfiable. On the other hand, if no such assignment exists, the function expressed by the formula is FALSE for all possible variable assignments and the formula is unsatisfiable. For example, the formula "a AND NOT b" is satisfiable because one can find the values a = TRUE and b = FALSE, which make = TRUE. In contrast, "a AND NOT a" is unsatisfiable.

First-order logic—also known as predicate logic, quantificational logic, and first-order predicate calculus—is a collection of formal systems used in mathematics, philosophy, linguistics, and computer science. First-order logic uses quantified variables over non-logical objects, and allows the use of sentences that contain variables, so that rather than propositions such as "Socrates is a man", one can have expressions in the form "there exists x such that x is Socrates and x is a man", where "there exists" is a quantifier, while x is a variable. This distinguishes it from propositional logic, which does not use quantifiers or relations; in this sense, propositional logic is the foundation of first-order logic.

In mathematical logic, model theory is the study of the relationship between formal theories, and their models. The aspects investigated include the number and size of models of a theory, the relationship of different models to each other, and their interaction with the formal language itself. In particular, model theorists also investigate the sets that can be defined in a model of a theory, and the relationship of such definable sets to each other. As a separate discipline, model theory goes back to Alfred Tarski, who first used the term "Theory of Models" in publication in 1954. Since the 1970s, the subject has been shaped decisively by Saharon Shelah's stability theory.

In Boolean logic, a formula is in conjunctive normal form (CNF) or clausal normal form if it is a conjunction of one or more clauses, where a clause is a disjunction of literals; otherwise put, it is a product of sums or an AND of ORs. As a canonical normal form, it is useful in automated theorem proving and circuit theory.

In computer science, model checking or property checking is a method for checking whether a finite-state model of a system meets a given specification. This is typically associated with hardware or software systems, where the specification contains liveness requirements as well as safety requirements.

In logic and mathematics, second-order logic is an extension of first-order logic, which itself is an extension of propositional logic. Second-order logic is in turn extended by higher-order logic and type theory.

In mathematical logic, propositional logic and predicate logic, a well-formed formula, abbreviated WFF or wff, often simply formula, is a finite sequence of symbols from a given alphabet that is part of a formal language. A formal language can be identified with the set of formulas in the language.

In mathematical logic, a formula of first-order logic is in Skolem normal form if it is in prenex normal form with only universal first-order quantifiers.

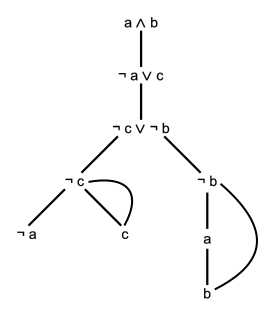

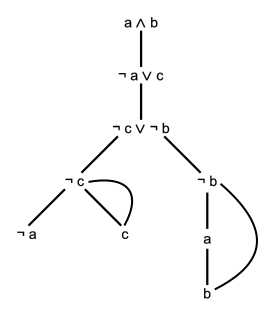

In proof theory, the semantic tableau is a decision procedure for sentential and related logics, and a proof procedure for formulae of first-order logic. An analytic tableau is a tree structure computed for a logical formula, having at each node a subformula of the original formula to be proved or refuted. Computation constructs this tree and uses it to prove or refute the whole formula. The tableau method can also determine the satisfiability of finite sets of formulas of various logics. It is the most popular proof procedure for modal logics.

Descriptive complexity is a branch of computational complexity theory and of finite model theory that characterizes complexity classes by the type of logic needed to express the languages in them. For example, PH, the union of all complexity classes in the polynomial hierarchy, is precisely the class of languages expressible by statements of second-order logic. This connection between complexity and the logic of finite structures allows results to be transferred easily from one area to the other, facilitating new proof methods and providing additional evidence that the main complexity classes are somehow "natural" and not tied to the specific abstract machines used to define them.

In formal logic, Horn-satisfiability, or HORNSAT, is the problem of deciding whether a given set of propositional Horn clauses is satisfiable or not. Horn-satisfiability and Horn clauses are named after Alfred Horn.

In computer science and mathematical logic, satisfiability modulo theories (SMT) is the problem of determining whether a mathematical formula is satisfiable. It generalizes the Boolean satisfiability problem (SAT) to more complex formulas involving real numbers, integers, and/or various data structures such as lists, arrays, bit vectors, and strings. The name is derived from the fact that these expressions are interpreted within ("modulo") a certain formal theory in first-order logic with equality. SMT solvers are tools which aim to solve the SMT problem for a practical subset of inputs. SMT solvers such as Z3 and cvc5 have been used as a building block for a wide range of applications across computer science, including in automated theorem proving, program analysis, program verification, and software testing.

In mathematical logic, Skolem arithmetic is the first-order theory of the natural numbers with multiplication, named in honor of Thoralf Skolem. The signature of Skolem arithmetic contains only the multiplication operation and equality, omitting the addition operation entirely.

An interpretation is an assignment of meaning to the symbols of a formal language. Many formal languages used in mathematics, logic, and theoretical computer science are defined in solely syntactic terms, and as such do not have any meaning until they are given some interpretation. The general study of interpretations of formal languages is called formal semantics.

In mathematical logic, monadic second-order logic (MSO) is the fragment of second-order logic where the second-order quantification is limited to quantification over sets. It is particularly important in the logic of graphs, because of Courcelle's theorem, which provides algorithms for evaluating monadic second-order formulas over graphs of bounded treewidth. It is also of fundamental importance in automata theory, where the Büchi-Elgot-Trakhtenbrot theorem gives a logical characterization of the regular languages.

In computational complexity theory, the language TQBF is a formal language consisting of the true quantified Boolean formulas. A (fully) quantified Boolean formula is a formula in quantified propositional logic where every variable is quantified, using either existential or universal quantifiers, at the beginning of the sentence. Such a formula is equivalent to either true or false. If such a formula evaluates to true, then that formula is in the language TQBF. It is also known as QSAT.

In mathematical logic, a formula is satisfiable if it is true under some assignment of values to its variables. For example, the formula is satisfiable because it is true when and , while the formula is not satisfiable over the integers. The dual concept to satisfiability is validity; a formula is valid if every assignment of values to its variables makes the formula true. For example, is valid over the integers, but is not.

Dependence logic is a logical formalism, created by Jouko Väänänen, which adds dependence atoms to the language of first-order logic. A dependence atom is an expression of the form , where are terms, and corresponds to the statement that the value of is functionally dependent on the values of .

In mathematical logic, the spectrum of a sentence is the set of natural numbers occurring as the size of a finite model in which a given sentence is true.

In the mathematical fields of graph theory and finite model theory, the logic of graphs deals with formal specifications of graph properties using sentences of mathematical logic. There are several variations in the types of logical operation that can be used in these sentences. The first-order logic of graphs concerns sentences in which the variables and predicates concern individual vertices and edges of a graph, while monadic second-order graph logic allows quantification over sets of vertices or edges. Logics based on least fixed point operators allow more general predicates over tuples of vertices, but these predicates can only be constructed through fixed-point operators, restricting their power.