In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.

In computer programming, gene expression programming (GEP) is an evolutionary algorithm that creates computer programs or models. These computer programs are complex tree structures that learn and adapt by changing their sizes, shapes, and composition, much like a living organism. And like living organisms, the computer programs of GEP are also encoded in simple linear chromosomes of fixed length. Thus, GEP is a genotype–phenotype system, benefiting from a simple genome to keep and transmit the genetic information and a complex phenotype to explore the environment and adapt to it.
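
The genotype-to-phenotype step can be illustrated with a minimal sketch. It assumes the breadth-first (Karva-style) reading of a chromosome used in descriptions of GEP, a function set of four binary operators, and single-letter terminals. The names `decode`, `evaluate`, `to_infix`, and `tail_length` are hypothetical helpers chosen for this example, not part of any GEP library.

```python
import operator
from collections import deque

# Assumed function set: every operator takes two arguments.
OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def tail_length(head_len, max_arity):
    """Tail size that keeps every fixed-length gene decodable."""
    return head_len * (max_arity - 1) + 1

def decode(gene):
    """Read a linear gene breadth-first into a (symbol, children) tree.

    Symbols left over after the tree is complete are simply unused.
    """
    root = (gene[0], [])
    queue = deque([root])
    pos = 1
    while queue:
        symbol, children = queue.popleft()
        for _ in range(2 if symbol in OPS else 0):
            child = (gene[pos], [])
            pos += 1
            children.append(child)
            queue.append(child)
    return root

def evaluate(node, env):
    """Evaluate the expression tree with terminal values taken from env."""
    symbol, children = node
    if symbol in OPS:
        left, right = (evaluate(child, env) for child in children)
        return OPS[symbol](left, right)
    return env[symbol]

def to_infix(node):
    """Render the tree as a parenthesized infix string."""
    symbol, children = node
    if not children:
        return symbol
    return "(" + to_infix(children[0]) + " " + symbol + " " + to_infix(children[1]) + ")"

env = {"a": 1, "b": 2, "c": 3, "d": 4}
# Head "+a*" (3 symbols) and tail "bcdd" (4 symbols): a fixed length of 7.
for gene in ("+a*bcdd", "+-*bcdd"):
    tree = decode(gene)
    print(gene, "->", to_infix(tree), "=", evaluate(tree, env))
```

The two genes differ by a single symbol in the head, yet they decode to trees of different size and shape. This is the sense in which a fixed-length genome can express phenotypes of varying complexity.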

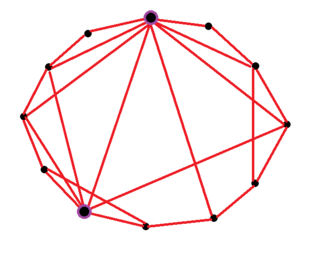

A small-world network is a graph characterized by a high clustering coefficient and short distances between nodes. In a social network, for example, high clustering means that two friends of one person are likely to be friends themselves, while short distances mean that a short chain of social connections links any two people. Specifically, a small-world network is defined as a network in which the typical distance $L$ between two randomly chosen nodes grows in proportion to the logarithm of the number of nodes $N$ in the network, that is:

$$L \propto \log N$$
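
As a rough illustration of the two quantities involved, the sketch below builds a ring lattice, adds a few random long-range edges, and measures the average clustering coefficient and the mean shortest-path length before and after. Adding shortcuts is a simplification of the rewiring used in the Watts-Strogatz model, and all function names and parameter values here are assumptions made for the example.

```python
import random
from collections import deque

def ring_lattice(n, k):
    """Link each of n nodes to its k nearest neighbours on a ring (k even)."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for step in range(1, k // 2 + 1):
            j = (i + step) % n
            adj[i].add(j)
            adj[j].add(i)
    return adj

def add_shortcuts(adj, count, rng):
    """Add `count` new edges between randomly chosen, unlinked node pairs."""
    nodes = list(adj)
    added = 0
    while added < count:
        a, b = rng.sample(nodes, 2)
        if b not in adj[a]:
            adj[a].add(b)
            adj[b].add(a)
            added += 1

def clustering(adj):
    """Average fraction of a node's neighbour pairs that are themselves linked."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue
        links = sum(1 for u in nbrs for v in nbrs if u < v and v in adj[u])
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)

def mean_distance(adj):
    """Mean shortest-path length over reachable pairs, via BFS from each node."""
    total, pairs = 0, 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

rng = random.Random(0)  # fixed seed so repeated runs build the same graph
graph = ring_lattice(200, 6)
print("lattice:       ", clustering(graph), mean_distance(graph))
add_shortcuts(graph, 20, rng)
print("with shortcuts:", clustering(graph), mean_distance(graph))
```

Because edges are only added, the mean distance can never increase. In practice a small number of shortcuts tends to shorten it considerably while leaving most of the local clustering intact.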

A recurrent neural network (RNN) is one of the two broad types of artificial neural network, characterized by the direction of the flow of information between its layers. In contrast to the uni-directional feedforward neural network, an RNN is a bi-directional artificial neural network, meaning that it allows the output from some nodes to affect subsequent input to the same nodes. The ability of RNNs to use internal state (memory) to process arbitrary sequences of inputs makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. The term "recurrent neural network" is used to refer to the class of networks with an infinite impulse response, whereas "convolutional neural network" refers to the class of finite impulse response. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replaced with a strictly feedforward neural network, while an infinite impulse recurrent network is a directed cyclic graph that cannot be unrolled.
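
The role of the internal state can be sketched with a single recurrent layer. The example assumes an Elman-style update, h_t = tanh(W_xh x_t + W_hh h_(t-1) + b), which is one common formulation rather than the only one. The weights are hand-picked illustrative values, not trained parameters, and the function names are hypothetical.

```python
import math

def rnn_step(x, h, W_xh, W_hh, b):
    """One update: new state from the current input and the previous state."""
    new_h = []
    for i in range(len(h)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h[j] for j in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h

def run_rnn(inputs, W_xh, W_hh, b):
    """Feed a sequence through the layer, starting from an all-zero state."""
    h = [0.0] * len(b)
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        states.append(h)
    return states

# Illustrative weights: one input feature, two hidden units.
W_xh = [[1.0], [0.5]]
W_hh = [[0.0, 0.5], [0.5, 0.0]]
b = [0.0, 0.0]

sequence = [[1.0], [0.0], [1.0]]
for t, state in enumerate(run_rnn(sequence, W_xh, W_hh, b)):
    print(t, state)
```

The first and third inputs are identical, but the hidden states they produce differ because the third step also depends on the state carried over from earlier steps. Setting `W_hh` to all zeros removes that dependence and reduces the layer to a feedforward map applied to each input independently.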

A neural circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Multiple neural circuits interconnect with one another to form large scale brain networks.

A neural network, also called a neuronal network, is an interconnected population of neurons. Biological neural networks are studied to understand the organization and functioning of nervous systems.

An artificial brain is software and hardware with cognitive abilities similar to those of the animal or human brain.

Neurophilosophy or the philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

Neuroinformatics is the emergent field that combines informatics and neuroscience. Neuroinformatics is concerned with neuroscience data and information processing by artificial neural networks. There are three main directions where neuroinformatics has to be applied:

An and-inverter graph (AIG) is a directed, acyclic graph that represents a structural implementation of the logical functionality of a circuit or network. An AIG consists of two-input nodes representing logical conjunction, terminal nodes labeled with variable names, and edges optionally containing markers indicating logical negation. This representation of a logic function is rarely structurally efficient for large circuits, but is an efficient representation for manipulation of boolean functions. Typically, the abstract graph is represented as a data structure in software.
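
One way such a data structure might look is sketched below. It assumes a literal encoding in which each edge is an integer equal to twice the node id, plus 1 if the edge is negated, with node 0 reserved for the constant false. The class `AIG` and its methods are hypothetical names for this illustration, and OR and XOR are derived from AND and negation by De Morgan's law.

```python
class AIG:
    """Minimal and-inverter graph with structural hashing of AND nodes."""

    def __init__(self):
        self.names = {}     # node id -> input variable name
        self.ids = {}       # input variable name -> node id
        self.ands = {}      # node id -> (left literal, right literal)
        self._cache = {}    # (left, right) -> literal of an existing AND node
        self._next_id = 1   # node 0 is the constant false

    def input(self, name):
        """Return the positive literal of a terminal node, creating it once."""
        if name not in self.ids:
            self.ids[name] = self._next_id
            self.names[self._next_id] = name
            self._next_id += 1
        return 2 * self.ids[name]

    @staticmethod
    def neg(lit):
        """Negation flips the low bit of the literal; no node is created."""
        return lit ^ 1

    def and_(self, a, b):
        """Two-input AND; operands are ordered so equal nodes are shared."""
        key = (min(a, b), max(a, b))
        if key not in self._cache:
            node = self._next_id
            self._next_id += 1
            self.ands[node] = key
            self._cache[key] = 2 * node
        return self._cache[key]

    def or_(self, a, b):
        return self.neg(self.and_(self.neg(a), self.neg(b)))

    def xor(self, a, b):
        return self.or_(self.and_(a, self.neg(b)), self.and_(self.neg(a), b))

    def evaluate(self, lit, assignment):
        """Evaluate a literal under a dict mapping input names to booleans."""
        node, negated = lit >> 1, lit & 1
        if node == 0:
            value = False
        elif node in self.ands:
            left, right = self.ands[node]
            value = self.evaluate(left, assignment) and self.evaluate(right, assignment)
        else:
            value = bool(assignment[self.names[node]])
        return value != bool(negated)

g = AIG()
a, b = g.input("a"), g.input("b")
f = g.xor(a, b)
print("AND nodes used for a XOR b:", len(g.ands))
print(g.evaluate(f, {"a": True, "b": False}))
```

Storing negation on the edge rather than as a separate node keeps the graph to a single node type, which is part of what makes this representation convenient to manipulate.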

Computational neurogenetic modeling (CNGM) is concerned with the study and development of dynamic neuronal models for modeling brain functions with respect to genes and dynamic interactions between genes. These include neural network models and their integration with gene network models. This area brings together knowledge from various scientific disciplines, such as computer and information science, neuroscience and cognitive science, genetics and molecular biology, as well as engineering.

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian brain.

In recent years, biologically inspired methods such as evolutionary algorithms have been increasingly employed to solve and analyze complex computational problems. BELBIC (Brain Emotional Learning Based Intelligent Controller) is one such controller. It was proposed by Caro Lucas, Danial Shahmirzadi and Nima Sheikholeslami and adopts the network model developed by Moren and Balkenius to mimic those parts of the brain which are known to produce emotion.

The Turing test, originally called the imitation game by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. The evaluator would be aware that one of the two partners in conversation was a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel, such as a computer keyboard and screen, so the result would not depend on the machine's ability to render words as speech. If the evaluator could not reliably tell the machine from the human, the machine would be said to have passed the test. The test results would not depend on the machine's ability to give correct answers to questions, only on how closely its answers resembled those a human would give. Since the Turing test is a test of indistinguishability in performance capacity, the verbal version generalizes naturally to all of human performance capacity, verbal as well as nonverbal (robotic).

Natural computing, also called natural computation, is a term introduced to encompass three classes of methods: 1) those that take inspiration from nature for the development of novel problem-solving techniques; 2) those that are based on the use of computers to synthesize natural phenomena; and 3) those that employ natural materials to compute. The main fields of research that compose these three branches are artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing, among others.

There are many types of artificial neural networks (ANN).

The network of the human nervous system is composed of nodes that are connected by links. The connectivity may be viewed anatomically, functionally, or electrophysiologically. These are presented in several Wikipedia articles, including Connectionism, Biological neural network, Artificial neural network, and Computational neuroscience, as well as in several books by Ascoli, G. A. (2002), Sterratt, D., Graham, B., Gillies, A., & Willshaw, D. (2011), Gerstner, W., & Kistler, W. (2002), and Rumelhart, D. E., McClelland, J. L., and PDP Research Group (1986), among others. The focus of this article is a comprehensive view of modeling a neural network. Once an approach based on the perspective and connectivity is chosen, the models are developed at microscopic, mesoscopic, or macroscopic (system) levels. Computational modeling refers to models that are developed using computing tools.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Claudia Clopath is a Professor of Computational Neuroscience at Imperial College London and research leader at the Sainsbury Wellcome Centre for Neural Circuits and Behaviour. She develops mathematical models to predict synaptic plasticity for both medical applications and the design of human-like machines.