Related Research Articles

In programming and information security, a buffer overflow or buffer overrun is an anomaly whereby a program writes data to a buffer beyond the buffer's allocated memory, overwriting adjacent memory locations.

C is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems code, device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems.

An optimizing compiler is a compiler designed to generate code that is optimized in aspects such as minimizing program execution time, memory use, storage size, and power consumption. Optimization is generally implemented as a sequence of optimizing transformations, algorithms that transform code to produce semantically equivalent code optimized for some aspect. It is typically CPU and memory intensive. In practice, factors such as available memory and a programmer's willingness to wait for compilation limit the optimizations that a compiler might provide. Research indicates that some optimization problems are NP-complete, or even undecidable.

Constant folding and constant propagation are related compiler optimizations used by many modern compilers. An advanced form of constant propagation known as sparse conditional constant propagation can more accurately propagate constants and simultaneously remove dead code.

In compiler design, static single assignment form is a type of intermediate representation (IR) where each variable is assigned exactly once. SSA is used in most high-quality optimizing compilers for imperative languages, including LLVM, the GNU Compiler Collection, and many commercial compilers.

OpenMP is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

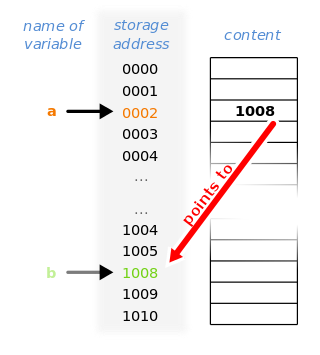

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer references a location in memory, and obtaining the value stored at that location is known as dereferencing the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture.

printf is a C standard library function that formats text and writes it to standard output.

In computer programming, bounds checking is any method of detecting whether a variable is within some bounds before it is used. It is usually used to ensure that a number fits into a given type, or that a variable being used as an array index is within the bounds of the array. A failed bounds check usually results in the generation of some sort of exception signal.

In computer programming, undefined behavior (UB) is the result of executing a program whose behavior is prescribed to be unpredictable, in the language specification of the programming language in which the source code is written. This is different from unspecified behavior, for which the language specification does not prescribe a result, and implementation-defined behavior that defers to the documentation of another component of the platform.

Buffer overflow protection is any of various techniques used during software development to enhance the security of executable programs by detecting buffer overflows on stack-allocated variables, and preventing them from causing program misbehavior or from becoming serious security vulnerabilities. A stack buffer overflow occurs when a program writes to a memory address on the program's call stack outside of the intended data structure, which is usually a fixed-length buffer. Stack buffer overflow bugs are caused when a program writes more data to a buffer located on the stack than what is actually allocated for that buffer. This almost always results in corruption of adjacent data on the stack, which could lead to program crashes, incorrect operation, or security issues.

In computer programming, an inline assembler is a feature of some compilers that allows low-level code written in assembly language to be embedded within a program, among code that otherwise has been compiled from a higher-level language such as C or Ada.

In computer science, a tail call is a subroutine call performed as the final action of a procedure. If the target of a tail is the same subroutine, the subroutine is said to be tail recursive, which is a special case of direct recursion. Tail recursion is particularly useful, and is often easy to optimize in implementations.

In software, a stack overflow occurs if the call stack pointer exceeds the stack bound. The call stack may consist of a limited amount of address space, often determined at the start of the program. The size of the call stack depends on many factors, including the programming language, machine architecture, multi-threading, and amount of available memory. When a program attempts to use more space than is available on the call stack, the stack is said to overflow, typically resulting in a program crash.

In computer programming, thread-local storage (TLS) is a memory management method that uses static or global memory local to a thread. The concept allows storage of data that appears to be global in a system with separate threads.

In computer science, rematerialization or remat is a compiler optimization which saves time by recomputing a value instead of loading it from memory. It is typically tightly integrated with register allocation, where it is used as an alternative to spilling registers to memory. It was conceived by Gregory Chaitin, Marc Auslander, Ashok Chandra, John Cocke, Martin Hopkins and Peter Markstein and implemented in the Pl.8 compiler for the 801 Minicomputer in the late 1970s. Later improvements were made by Preston Briggs, Keith D. Cooper, and Linda Torczon in 1992.

In computer programming, an integer overflow occurs when an arithmetic operation on integers attempts to create a numeric value that is outside of the range that can be represented with a given number of digits – either higher than the maximum or lower than the minimum representable value.

In computer programming, the block starting symbol is the portion of an object file, executable, or assembly language code that contains statically allocated variables that are declared but have not been assigned a value yet. It is often referred to as the "bss section" or "bss segment".

Interprocedural optimization (IPO) is a collection of compiler techniques used in computer programming to improve performance in programs containing many frequently used functions of small or medium length. IPO differs from other compiler optimizations by analyzing the entire program as opposed to a single function or block of code.

Objective-C is a high-level general-purpose, object-oriented programming language that adds Smalltalk-style message passing (messaging) to the C programming language. Originally developed by Brad Cox and Tom Love in the early 1980s, it was selected by NeXT for its NeXTSTEP operating system. Due to Apple macOS’s direct lineage from NeXTSTEP, Objective-C was the standard language used, supported, and promoted by Apple for developing macOS and iOS applications from 1997, when Apple purchased NeXT until the introduction of the Swift language in 2014.

References

- ↑ Harrison, William H. (1977). "Compiler Analysis of the Value Ranges for Variables". IEEE Transactions on Software Engineering. 3 (3): 243–250. doi:10.1109/TSE.1977.231133. S2CID 17018610.

- ↑ Wagner, D.; Foster, J. S.; Brewer, E. A.; Aiken, A. (2000). A First Step Towards Automated Detection of Buffer Overrun Vulnerabilities. NDSS.

- ↑ Birch, Johnnie; van Engelen, Robert; Gallivan, Kyle. "Value Range Analysis of Conditionally Updated Variables and Pointers" (PDF).

- ↑ MacLeod, Andrew (28 April 2021). "Value range propagation in GCC with Project Ranger".