
The Virtual Human Markup Language, often abbreviated as VHML, is a markup language used for the computer animation of human bodies and facial expressions.[1] The language is designed to describe various aspects of human-computer interaction with regard to facial animation, text-to-speech, and multimedia information.
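To make the idea concrete, here is a minimal sketch that builds a VHML-style document with Python's standard `xml.etree.ElementTree` and then recovers the plain text a text-to-speech engine would speak. The element names (`vhml`, `person`, `happy`, `emphasis`) and the `disposition` and `intensity` attributes are assumptions chosen for illustration, not a verified excerpt of the VHML specification.

```python
import xml.etree.ElementTree as ET

def build_document() -> ET.Element:
    """Build a small VHML-style tree (element names are hypothetical)."""
    root = ET.Element("vhml")
    person = ET.SubElement(root, "person", disposition="friendly")
    happy = ET.SubElement(person, "happy", intensity="60")
    happy.text = "Hello, I am a "
    emph = ET.SubElement(happy, "emphasis")
    emph.text = "virtual"
    emph.tail = " human."  # text that follows </emphasis> inside <happy>
    return root

def spoken_text(root: ET.Element) -> str:
    # itertext() yields text and tail strings in document order,
    # discarding the markup and keeping only what should be spoken.
    return "".join(root.itertext())

doc = build_document()
print(ET.tostring(doc, encoding="unicode"))
print(spoken_text(doc))  # Hello, I am a virtual human.
```

The markup carries the animation and emotion cues, while the text content alone is what reaches the speech synthesizer.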

VHML consists of the following so-called "sub-languages":


Computer animation is the process used for digitally generating animated images. The more general term computer-generated imagery (CGI) encompasses both static scenes and dynamic images, while computer animation refers only to moving images. Modern computer animation usually uses 3D computer graphics to generate a two-dimensional picture, although 2D computer graphics are still used for stylistic, low-bandwidth, and faster real-time renderings. Sometimes the target of the animation is the computer itself; at other times it is film.
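One basic technique behind computer animation is keyframing: the animator sets values at a few key times and the computer generates the in-between frames. The sketch below assumes simple linear interpolation of a single scalar channel; production tools typically use splines or easing curves instead.

```python
from bisect import bisect_right

def interpolate(keyframes: list[tuple[float, float]], t: float) -> float:
    """Linearly interpolate a scalar channel from sorted (time, value) keyframes."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]   # hold the first value before the animation starts
    if t >= times[-1]:
        return keyframes[-1][1]  # hold the last value after it ends
    i = bisect_right(times, t)   # index of the first keyframe strictly after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)     # normalized position between the two keys
    return v0 + u * (v1 - v0)

# Generate in-between frames at 4 frames per second for a 1-second move.
keys = [(0.0, 0.0), (1.0, 10.0)]
frames = [interpolate(keys, f / 4) for f in range(5)]
print(frames)  # [0.0, 2.5, 5.0, 7.5, 10.0]
```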

In computing, plain text is a loose term for data that represent only characters of readable material, not its graphical representation or other objects. It may also include a limited number of "whitespace" characters that affect simple arrangement of text, such as spaces, line breaks, or tabulation characters. Plain text is different from formatted text, where style information is included; from structured text, where structural parts of the document such as paragraphs, sections, and the like are identified; and from binary files, in which some portions must be interpreted as binary objects.
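The loose definition above can be approximated in code. This sketch treats a string as plain text if every character is either printable or one of the simple layout whitespace characters mentioned (space, line break, tab); the exact character set is an assumption, since the term has no single formal definition.

```python
# Whitespace characters that only affect simple layout (an assumed set).
LAYOUT_WHITESPACE = {" ", "\n", "\r", "\t"}

def is_plain_text(s: str) -> bool:
    """Return True if s contains only printable characters and simple layout whitespace."""
    return all(ch.isprintable() or ch in LAYOUT_WHITESPACE for ch in s)

print(is_plain_text("Hello,\tworld\n"))   # True
print(is_plain_text("bold\x1b[1m text"))  # False: contains an ESC control code
```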

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech computer or speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech.
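A text-to-speech system usually begins with a text-normalization step that rewrites tokens such as numerals into words before they are converted to sound. The sketch below shows only that front-end step, under the simplifying assumption that it handles English integers from 0 to 99 and leaves every other token unchanged; a real synthesizer covers far more cases (dates, currency, abbreviations).

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

def number_to_words(n: int) -> str:
    """Spell out an integer in the range 0-99 in English."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]}-{ONES[ones]}"

def normalize(text: str) -> str:
    """Replace standalone numerals 0-99 with words; leave other tokens alone."""
    def spell(match: re.Match) -> str:
        n = int(match.group())
        return number_to_words(n) if n < 100 else match.group()
    return re.sub(r"\b\d+\b", spell, text)

print(normalize("I have 3 cats and 42 fish, not 250."))
# I have three cats and forty-two fish, not 250.
```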

A computer language is a method of communication with a computer. Types of computer languages include:

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science. While some core ideas in the field may be traced as far back as early philosophical inquiries into emotion, the more modern branch of computer science originated with Rosalind Picard's 1995 paper on affective computing and her book Affective Computing, published by MIT Press. One of the motivations for the research is the ability to give machines emotional intelligence, including the ability to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

A human-readable medium or human-readable format is any encoding of data or information that can be naturally read by humans.

Poser is a 3D computer graphics program distributed by Bondware. Poser is optimized for the 3D modeling of human figures. Its ability to let beginners produce basic animations and digital images, together with the wide availability of third-party 3D models, has made it popular.

Extensible Application Markup Language (XAML) is a declarative XML-based language developed by Microsoft that is used for initializing structured values and objects. It is available under Microsoft's Open Specification Promise. The acronym originally stood for Extensible Avalon Markup Language, Avalon being the code name for Windows Presentation Foundation (WPF).
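The core idea of declarative object initialization is that an XML element names a type and its attributes set that object's properties. The sketch below imitates this pattern in Python with `xml.etree.ElementTree` and a dataclass; the `Button` class, its properties, the `TYPES` registry, and the type-conversion rule are hypothetical stand-ins, not WPF's actual loader or object model (real XAML also uses XML namespaces, omitted here).

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, fields

@dataclass
class Button:  # hypothetical stand-in for a UI class
    Content: str = ""
    Width: float = 0.0

# Registry mapping element names to the classes they instantiate.
TYPES = {"Button": Button}

def load(markup: str):
    """Create an object from one element: tag selects the type, attributes set properties."""
    elem = ET.fromstring(markup)
    cls = TYPES[elem.tag]
    kwargs = {}
    for f in fields(cls):
        if f.name in elem.attrib:
            # Convert the attribute string to the field's declared type.
            kwargs[f.name] = f.type(elem.attrib[f.name])
    return cls(**kwargs)

button = load('<Button Content="OK" Width="80" />')
print(button)  # Button(Content='OK', Width=80.0)
```

Unset attributes fall back to the class defaults, which mirrors how markup only needs to mention the properties it changes.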

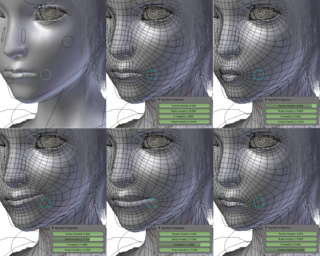

Computer facial animation is primarily an area of computer graphics that encapsulates methods and techniques for generating and animating images or models of a character face. The character can be a human, a humanoid, an animal, a legendary creature or character, etc. Because of its subject and output type, it is also related to many other scientific and artistic fields, from psychology to traditional animation. The importance of human faces in verbal and non-verbal communication, together with advances in computer graphics hardware and software, has generated considerable scientific, technological, and artistic interest in computer facial animation.
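One widely used facial-animation technique, not described in the paragraph above, is blend-shape (morph-target) animation: the animated face is the neutral mesh plus a weighted sum of offsets toward sculpted expression meshes, v = v_neutral + Σ w_i (v_i − v_neutral). The sketch below applies that formula to a toy mesh of two 2D vertices; the mesh data and the shape names `smile` and `open` are invented for illustration.

```python
Vertex = tuple[float, float]

def blend(neutral: list[Vertex], targets: dict[str, list[Vertex]],
          weights: dict[str, float]) -> list[Vertex]:
    """Return neutral + sum_i w_i * (target_i - neutral), vertex by vertex."""
    result = []
    for j, (nx, ny) in enumerate(neutral):
        x, y = nx, ny
        for name, w in weights.items():
            tx, ty = targets[name][j]
            x += w * (tx - nx)
            y += w * (ty - ny)
        result.append((x, y))
    return result

# Toy "mouth corners" mesh with two hypothetical expression targets.
neutral = [(-1.0, 0.0), (1.0, 0.0)]
targets = {
    "smile": [(-1.2, 0.5), (1.2, 0.5)],
    "open":  [(-1.0, -0.5), (1.0, -0.5)],
}
print(blend(neutral, targets, {"smile": 0.5, "open": 0.0}))
```

Animating the weights over time (for example with keyframes) then moves the face smoothly between expressions.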

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto real or other simulated film material. Toward the end of the 2010s, deep-learning artificial intelligence was applied to synthesize images and video that look like humans without the need for human assistance once the training phase is complete, whereas the older 7D route required massive amounts of human work.

Keith Waters is a British animator who is best known for his work in the field of computer facial animation. He has received international awards from Parigraph, the National Computer Graphics Association and the Computer Animation Film Festival.

An Emotion Markup Language was first defined by the W3C Emotion Incubator Group (EmoXG) as a general-purpose emotion annotation and representation language, which should be usable in a large variety of technological contexts where emotions need to be represented. Emotion-oriented computing is gaining importance as interactive technological systems become more sophisticated. Representing the emotional states of a user, or the emotional states to be simulated by a user interface, requires a suitable representation format; in this case a markup language is used.
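As an illustration of representing an emotional state as markup, this sketch serializes a category with an intensity into an XML fragment. It loosely follows the style of the later W3C EmotionML recommendation (an `<emotion>` element containing a `<category>` with `name` and `value` attributes), but the exact element names, the vocabulary, and the 0 to 1 intensity range used here are assumptions, not a validated EmotionML document.

```python
import xml.etree.ElementTree as ET

def emotion_markup(category: str, intensity: float) -> str:
    """Serialize one emotional state; element names are assumed, EmotionML-like."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie in [0, 1]")
    root = ET.Element("emotionml")
    emotion = ET.SubElement(root, "emotion")
    ET.SubElement(emotion, "category", name=category, value=str(intensity))
    return ET.tostring(root, encoding="unicode")

print(emotion_markup("happiness", 0.8))
```

The same fragment could describe either an emotion detected in a user or one that an animated character should display.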

SABLE is an XML markup language used to annotate texts for speech synthesis. It defines tags that control how written words, numbers, and sentences are audibly reproduced by a computer. SABLE was developed as an informal joint project between Sun Microsystems, AT&T, Bell Labs, and the University of Edinburgh as an initiative to combine three previous speech synthesis markup languages SSML, STML, and JSML.
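To show how such tags can steer a synthesizer, the sketch below walks a SABLE-style fragment and flattens it into a list of speech instructions. The tag names `EMPH` and `BREAK` are used on the assumption that they resemble SABLE's emphasis and pause tags; the `(kind, payload)` output format is hypothetical, and a real SABLE processor supports many more tags and attributes.

```python
import xml.etree.ElementTree as ET

def to_instructions(markup: str) -> list[tuple[str, str]]:
    """Flatten SABLE-style markup into (kind, payload) instructions for a synthesizer."""
    out: list[tuple[str, str]] = []

    def walk(elem: ET.Element, emphasized: bool) -> None:
        inside = emphasized or elem.tag == "EMPH"  # emphasis is inherited by children
        kind = "emph" if inside else "say"
        if elem.tag == "BREAK":
            out.append(("pause", ""))              # a pause contributes no words
        elif elem.text and elem.text.strip():
            out.append((kind, elem.text.strip()))
        for child in elem:
            walk(child, inside)
            # Text after a child's closing tag belongs to the parent element.
            if child.tail and child.tail.strip():
                out.append((kind, child.tail.strip()))

    walk(ET.fromstring(markup), False)
    return out

print(to_instructions("<SABLE>Please <EMPH>stop</EMPH> now.<BREAK/>Thank you.</SABLE>"))
# [('say', 'Please'), ('emph', 'stop'), ('say', 'now.'), ('pause', ''), ('say', 'Thank you.')]
```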

The Rich Representation Language, often abbreviated as RRL, is a computer animation language specifically designed to facilitate the interaction of two or more animated characters. The research effort was funded by the European Commission as part of the NECA Project. The NECA framework within which RRL was developed was oriented not towards the animation of movies but towards the creation of intelligent "virtual characters" that interact within a virtual world and hold conversations with emotional content, coupled with suitable facial expressions.

The NECA Project was a research project that focused on multimodal communication with animated agents in a virtual world. NECA was funded by the European Commission from 1998–2002 and the research results were published up to 2005.

A Face Animation Parameter (FAP) is a component of the MPEG-4 Face and Body Animation (FBA) International Standard developed by the Moving Picture Experts Group. It describes a standard for virtually representing humans and humanoids in a way that adequately achieves visual speech intelligibility as well as the mood and gesture of the speaker, and allows for very low bitrate compression and transmission of animation parameters. FAPs control key feature points on a face model mesh that are used to produce animated visemes and facial expressions, as well as head and eye movement. These feature points are part of the Face Definition Parameters (FDPs), also defined in the MPEG-4 standard.
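In MPEG-4, FAP amplitudes are expressed in Face Animation Parameter Units (FAPUs), which are fractions of distances measured on the specific face model (for example, the mouth width), so the same parameter stream can drive faces of different sizes. The sketch below shows that scaling for one feature point in a simplified 2D setting; the coordinates, the 1/1024 fraction, the displacement direction, and the helper names `fapu` and `apply_fap` are illustrative assumptions rather than values quoted from the standard.

```python
from dataclasses import dataclass

@dataclass
class Point:
    x: float
    y: float

def fapu(distance: float, fraction: float = 1 / 1024) -> float:
    """Face-specific unit: a fixed fraction of a distance measured on the neutral face."""
    return distance * fraction

def apply_fap(neutral: Point, value: int, unit: float,
              direction: tuple[float, float]) -> Point:
    """Displace a feature point by value * unit along a unit direction vector."""
    dx, dy = direction
    return Point(neutral.x + value * unit * dx, neutral.y + value * unit * dy)

# Hypothetical face: mouth 5.12 cm wide, so one unit is 0.005 cm.
mouth_width_unit = fapu(5.12)
corner = Point(2.56, 0.0)  # right lip corner on the neutral face
# Stretch the corner outward (+x) by a FAP value of 200 units.
print(apply_fap(corner, 200, mouth_width_unit, (1.0, 0.0)))
```

Because only small integer values are transmitted and each receiver scales them by its own face's units, the stream stays compact and model-independent.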

Behavior authoring is a technique that is widely used in crowd simulations and in simulations and computer games that involve multiple autonomous or non-player characters (NPCs). There has been growing academic and industry interest in the behavioral animation of autonomous actors in virtual worlds. However, it remains a considerable challenge to author complicated interactions between multiple actors in a way that balances automation and control flexibility.
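A common way to author an individual NPC's behavior is a finite-state machine whose transitions fire on simple perceptions. The sketch below is a deliberately small, hypothetical example (the states, the distance and health thresholds, and the `step` function are all invented for illustration); the harder problem the paragraph describes, coordinating many such actors, is not attempted here.

```python
from enum import Enum

class State(Enum):
    PATROL = "patrol"
    CHASE = "chase"
    FLEE = "flee"

def step(state: State, distance_to_player: float, health: float) -> State:
    """Return the NPC's next state from its current state and simple perceptions."""
    if health < 0.25:
        return State.FLEE                 # low health overrides everything else
    if state is State.PATROL and distance_to_player < 10.0:
        return State.CHASE                # player spotted
    if state is State.CHASE and distance_to_player > 20.0:
        return State.PATROL               # player escaped; the 10/20 gap avoids flip-flopping
    if state is State.FLEE and health >= 0.5:
        return State.PATROL               # recovered enough to resume patrolling
    return state

s = State.PATROL
for dist, hp in [(30.0, 1.0), (8.0, 1.0), (15.0, 1.0), (25.0, 1.0), (5.0, 0.1)]:
    s = step(s, dist, hp)
    print(s.name)  # PATROL, CHASE, CHASE, PATROL, FLEE (one per line)
```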

Virtual Humans is a research domain concerned with the simulation of human beings on computers. It involves their representation, movement, and behavior. There is a wide range of applications: simulation, games, film and TV production, human factors, ergonomic and usability studies in various industries, the clothing industry, telecommunications (avatars), medicine, and more. These applications require different kinds of expertise. A medical application might require an exact simulation of specific internal organs; the film industry requires the highest aesthetic standards, natural movements, and facial expressions; ergonomic studies require faithful body proportions for a particular population segment and realistic locomotion with constraints. The merging